VSI DECset for OpenVMS Performance and Coverage Analyzer Command-Line Interface Guide

- Software Version:

- DECset Version 12.8 for OpenVMS

- Operating System and Version:

- VSI OpenVMS x86-64 Version 9.2-2 or higher

VSI OpenVMS IA-64 Version 8.4-1H1 or higher

VSI OpenVMS Alpha Version 8.4-2L1 or higher

Preface

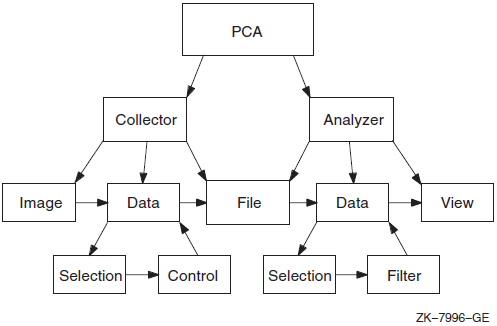

The Collector, which gathers various kinds of performance and test coverage data on your program.

The Analyzer, which processes and displays that data in histograms and tables.

1. About VSI

VMS Software, Inc. (VSI) is an independent software company licensed by Hewlett Packard Enterprise to develop and support the OpenVMS operating system.

2. Intended Audience

Analyze the performance characteristics of your applications

Analyze the text coverage characteristics of the tests you run on your applications to determine what code paths and tests are executing.

3. Document Structure

Chapter 1, "Introduction" provides an overview of PCA and briefly describes how to use the Collector and the Analyzer to gather and manipulate data.

Chapter 2, "Using the Collector" explains how to invoke the Collector and how to specify what kinds of data you want to gather.

Chapter 3, "Using the Analyzer" explains how to invoke the Analyzer and how to generate performance histograms and tables.

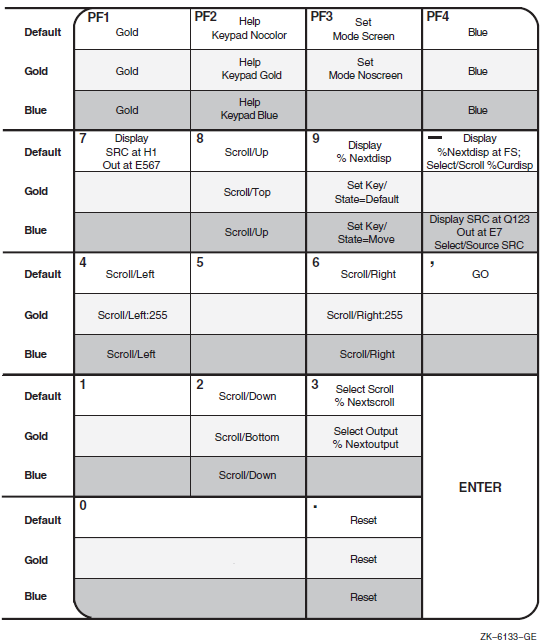

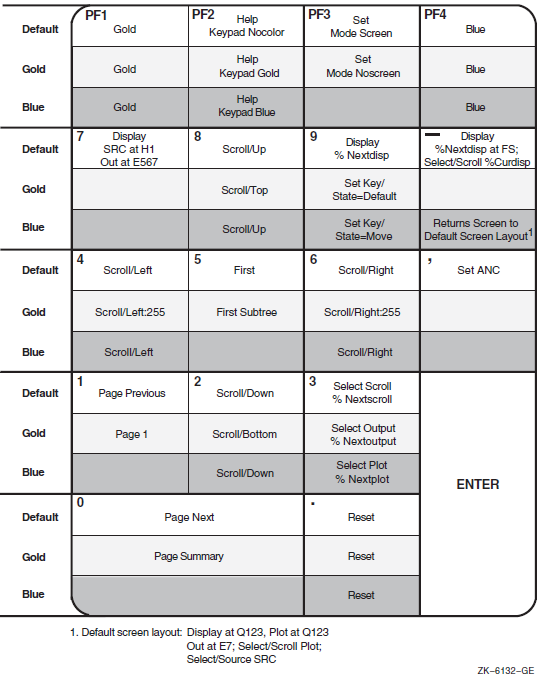

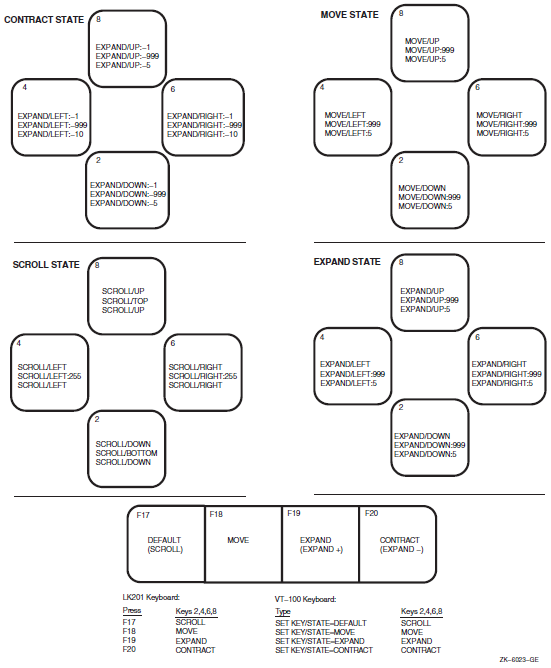

Chapter 4, "Productivity Enhancements with PCA" offers solutions to complex problems through practical examples. This chapter also introduces screen mode, discusses how to create and use screen displays, and how to use the predefined keypad definitions.

Chapter 5, "Using VAX Vectors with PCA" describes how to do performance analysis on applications containing vector instructions.

Appendix A, "Sample Programs" contains the sample program used for many of the examples throughout this file. It also contains the programs that are used in the examples in Chapter 4, "Productivity Enhancements with PCA".

Appendix B, "PCA Reference Tables" contains information on Collector and Analyzer logical names, node specifications, and data kinds. Also, this appendix contains keypad figures used in screen mode.

Appendix C, "Questions and Answers" answers questions that are commonly asked about this tool.

4. Related Documents

The VSI DECset for OpenVMS Performance and Coverage Analyzer Reference Manual describes all the commands available in PCA.

The Guide to Performance and Coverage Analyzer for OpenVMS Systems provides a tutorial description of the use of PCA from the windows interface, and contains other important user information.

VSI DECset for OpenVMS Installation Guide gives instructions for installing PCA on OpenVMS Alpha and OpenVMS VAX systems.

VSI Fortran Performance Guide details the performance features of the VSI Fortran High Performance Option, and discusses ways to improve the run-time performance of VSI Fortran programs.

5. References to Other Products

Note

These references serve only to provide examples to those who continue to use these products with DECset.

Refer to the Software Product Description for a current list of the products that the DECset components are warranted to interact with and support.

6. OpenVMS Documentation

The full VSI OpenVMS documentation set can be found on the VMS Software Documentation webpage at https://docs.vmssoftware.com.

7. VSI Encourages Your Comments

You may send comments or suggestions regarding this manual or any VSI document by sending electronic mail to the following Internet address: <docinfo@vmssoftware.com>. Users who have VSI OpenVMS support contracts through VSI can contact <support@vmssoftware.com> for help with this product.

8. Typographical Conventions

|

Convention |

Description |

|---|---|

|

$ |

A dollar sign ($) represents the OpenVMS DCL system prompt. |

|

Ctrl/x |

The key combination Ctrl/x indicates that you must press the key labeled Ctrl while you simultaneously press another key, for example, Ctrl/Y or Ctrl/Z. |

|

KPn |

The phrase KPn indicates that you must press the key labeled with the number or character n on the numeric keypad, for example, KP3 or KP-. |

|

file-spec, ... |

A horizontal ellipsis following a parameter, option, or value in syntax descriptions indicates additional parameters, options, or values you can enter. |

... |

A horizontal ellipsis in a figure or example indicates that not all of the statements are shown. |

. . . |

A vertical ellipsis indicates the omission of items from a code example or command format; the items are omitted because they are not important to the topic being described. |

|

( ) |

In format descriptions, if you choose more than one option, parentheses indicate that you must enclose the choices in parentheses. |

|

[ ] |

In format descriptions, brackets indicate that whatever is enclosed is optional; you can select none, one, or all of the choices. |

|

{ } |

In format descriptions, braces surround a required choice of options; you must choose one of the options listed. |

|

bold text |

Bold text represents the introduction of a new term. |

|

italic text |

Italic text represents book titles, parameters, arguments, and information that can vary in system messages (for example, Internal error number). |

|

UPPERCASE |

Uppercase indicates the name of a command,routine, file, file protection code, or the abbreviation of a system privilege. |

|

lowercase |

Lowercase in examples indicates that you are to substitute a word or value of your choice. |

Chapter 1. Introduction

This chapter presents an overview of the Performance and Coverage Analyzer (PCA). It includes a demonstration of a sample program used with PCA.

1.1. Overview

PCA is one of the DECset software development tools. It helps you produce efficient and reliable applications by analyzing your program's dynamic behavior. PCA also measures codepath coverage within your program so that you can devise tests that exercise all parts of your application.

Components

The Collector. The Collector gathers performance or test coverage data on a running program and writes that data to a performance data file.

The Analyzer. The Analyzer reads the performance data file produced by the Collector and processes the data to produce performance or coverage histograms and tables.

You can run the Collector and the Analyzer in batch as well as interactively.

Online Help

To get help with PCA commands, or any of the qualifiers or parameters used with these commands, type HELP, followed by the command or topic.

For the DECwindows interface, you can obtain help on any screen object by positioning the pointer on the desired object, pressing and holding the HELP key while you press MB1, and releasing both keys. You can also obtain help by choosing a menu item from the Help pull-down menu.

1.2. The Collector

The Collector collects that data you request and deposits the data into a data file during the program run. Afterward, you can use the Analyzer to display and filter the collected data.

Image selection

Measurement and control selection

Output to data file

Image Selection

You may select either the main image or one of the shareable images in the program address space. PCA measures the dynamic behavior of the image you have selected. The image must be in the address space when the application program is activated.

Measurement and Control Selection

Program counter (PC) sampling data. PCA samples the program counter at an interval you specify (by default, every 10 milliseconds).

CPU sampling data. This is the same as PC sampling data, except that PCA uses the virtual-process time instead of the system or wall clock time as the basis for sampling. Data counts reflect only the CPU time usage, and not the time spent by the program waiting for the completion of I/O operations, page faulting, and so on.

Counters. PCA counts the exact number of times that specified program locations execute.

Coverage data. PCA collects information that indicates which portions of your program are or are not executed during each test run.

Page fault data. PCA shows you where a page fault occurs and which program address caused it.

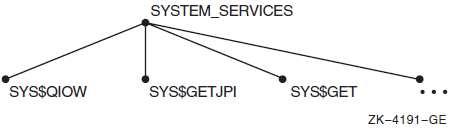

System services data. PCA counts which system services your program calls, how often it calls them, and which program segments do the calling.

Input/Output services data. PCA counts information about all I/O service calls that your program makes.

Ada tasking data. PCA collects information on all context switches in Ada multi-tasking applications.

Events data. PCA collects data from a specified phase of your program.

Vector program counter (VPC) sampling data. PCA samples the program counter at intervals you specify to show where the wall-clock time is being spent in the application performing vector instructions.

Vector CPU (VCPU) sampling data. This is the same as VPC sampling data, except that PCA uses the virtual-process time instead of the system or wall clock time.

Vector counters data. PCA counts all the vector instructions in all or part of your application containing vector instructions.

You must set a control to define the length of the sampling interval for PC sampling, CPU sampling, vector PC sampling, and vector CPU sampling. The fastest timer on the system is 10 milliseconds, the default.

You must set a program address control for gathering counters, vector counters, and coverage data.

You must define an event name and set program address controls for gathering events data.

Output to Data File

The data file receives the collected data and can then be passed to the Analyzer for analysis and filtering.

1.3. The Analyzer

The Analyzer lets you analyze and filter the data produced by the Collector and creates views of the specified data.

Data file selection

Data specification

View selection

Data File Selection

The Analyzer operates on data produced by the Collector. You may choose one data file or merge several from different collections.

Data Specification

Data kind

Domain

Filters

The Collector gathers many data kinds. In the Analyzer,you select which ones to analyze. Each data kind has one or more domains associated with it. For example, if you collected PC sampling, you can choose to view the following domains in the Analyzer: the program address, the call tree, the task, the task priority, and the task type. You can further restrict the data to be viewed with a filter. Any of the domains can be chosen and the value of the domain can be tested to be within a range of values. If it is within the range, then the selected domain value is passed along to be viewed. The general flow of control in PCA is shown in Figure 1.1, ''The PCA Model''.

The program counter (PC) of the I/O call

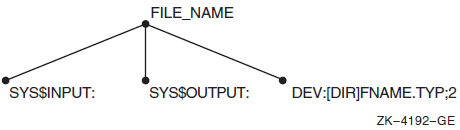

The file name

The virtual block number

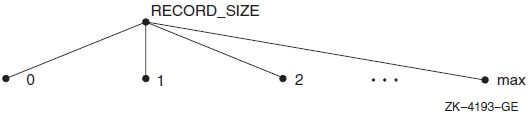

Record size

I/O service name

Choosing the I/O data kind means you want to know the frequency of I/O calls. Choosing the Program Address domain indicates that you want to know where in the program the I/O calls occur. The Analyzer creates a view of the I/O data kind with the value of the PC from each I/O "record". The Analyzer uses the data to construct a view of the program, which shows where the I/O calls originate.

Filter selection differs from other selections because it is based on the values in the domain, not on the larger class of the data kind. For example, if you choose the I/O data kind with the Program Address Domain, set the filter for the Virtual Block Number domain, and specify a range of 1 to 5, the Program Address domain values then passed to the View are those which have Virtual Block Number domain values in the range of 1 to 5. In other words, you are asking where in my program are the I/O calls for Virtual Block number 1 to 5.

Selecting data kinds and domains can be thought of as progressively refining selected data from the data file before passing it on to be viewed.

View Selection

Histograms (Plot/Tables)

Source listings annotated with bars or numbers

Lists

Trees

Selecting the appearance of the view

Defining the granularity or size of each bucket

Defining the complete range

Setting the upper, lower, no zeros limits

Setting or canceling acceptable noncoverage (ANC)

Traversing the table

Expanding to source

Scaling

Scrolling

Selecting vertical versus horizontal views

Selecting numbers versus bars

Changing the title

1.4. Getting Started

This section uses as an example a FORTRAN program called PCA$PRIMES, which generates all the prime numbers in a given integer range. The program's source code has the file name PCA$PRIMES.FOR. The complete program is included in Appendix A, "Sample Programs" and is supplied with the PCA kit. (After installation, you can find PCA demonstration programs in the directory PCA$EXAMPLES.)

1.4.1. Using the Collector

The Collector gathers performance or test coverage data on applications programs as they run. In the example in the following sections, the Collector gathers program counter sampling data on the FORTRAN program PCA$PRIMES.

1.4.1.1. Invoking the Collector

- Compile the source file. Use the /DEBUG qualifier with a compilation command to create a Debug Symbol Table (DST) in the object module. The DST contains all of the symbol and line number information PCA needs to specify user program locations. Enter the following DCL command to compile the FORTRAN program PCA$PRIMES.FOR for use with the Collector:

$ FORTRAN/DEBUG PCA$PRIMES.FOR

This command creates the file PCA$PRIMES.OBJ. - Link the user program. The /DEBUG qualifier passes the DST information generated by the compiler to the executable image file where it can be accessed by the Collector. Type the following DCL command to link PCA$PRIMES.OBJ for running under Collector control:

$ LINK/DEBUG PCA$PRIMES.OBJ

- Run the program. Invoke the Collector on an image linked for debugging by defining the LIB$DEBUG logical to be PCA$COLLECTOR.EXE. This causes the OpenVMS image activator to invoke the Collector as a debugger. You can then enter Collector commands. Type the following commands to define the LIB$DEBUG logical:

$ DEFINE LIB$DEBUG SYS$LIBRARY:PCA$COLLECTOR.EXE $ RUN PCA$PRIMES.EXE

1.4.1.2. Collecting the Data

PCAC> SET DATAFILE PCA$PRIMES

PCAC> SET PC_SAMPLING

PCAC> GO

%PCA-I-BEGINCOL, data collection begins 169 prime numbers generated between 1 and 1000 FORTRAN STOP %PCA-I-ENDCOL, data collection ends$

1.4.1.3. Exiting the Collector

To end the Collector session at any time prior to saying GO, or to suspend data collection, enter the EXIT command or press Ctrl/Z.

PCAC> SET DATAFILE/APPEND PCA$PRIMES.PCA

You can run your program without collecting data by entering the GO command with the /NOCOLLECT qualifier.

The Collector allows you to collect different kinds of data in the same collection run, but you should be cautious in doing so. Values may be distorted if different kinds of data are collected in the same collection run. The Collector provides informational messages warning about potential conflicts. Section 1.2, ''The Collector'' contains a listing of the different kinds of data you can collect.

1.4.2. Using the Analyzer

The Analyzer reads the performance data file written by the Collector and uses the data to produce histograms, tables, and other reports that help you evaluate your program's performance or coverage. In the example in the following sections, the Analyzer is used on the data file previously produced by the Collector to create histograms and tables, to display the source code and the summary page, and to print, file, and append Analyzer output.

1.4.2.1. Invoking the Analyzer

$ PCA PCA$PRIMES

Performance and Coverage Analyzer Version 5.0

PCAA>The

data file in the previous example, PCA$PRIMES.PCA, contains the performance or coverage data

and all symbol table information required by the Analyzer.$ PCA/COMMAND="SHOW DATAFILE; SHOW LANGUAGE" PCA$PRIMES.PCA

Performance and Coverage Analyzer Version 5.0

Performance Data File: SYS$DISK01:[SMITH]PCA$PRIMES.PCA;1

Language: FORTRAN

PCAA>In

this example, SHOW DATAFILE and SHOW LANGUAGE are executed before the Analyzer prompt

appears.1.4.2.2. Creating the Default Plot

After invoking the Analyzer and specifying the data file, you can enter a traverse command (NEXT, BACK, FIRST,CURRENT), and let the Analyzer default settings create a useful plot for you. The traverse commands walk you through your program's structure, pointing out the most significant portions of your application.

Entering the NEXT command at the first Analyzer prompt creates a source plot with the PC sampling data kind. A pointer is positioned at the line with the most data points in the routine with the most data points, that is, the place where the most PC samples were gathered and most of the time was spent. Subsequent NEXT commands traverse the program structure to show you the most-to-least significant line in the program.

The Analyzer supplies the default data kind. The default data kind is the last data kind collected by the Collector. If CPU sampling was the last data kind collected, then CPU sampling is the default data kind. You can change the default with the SET PLOT or PLOT commands.

1.4.2.3. Scrolling Through the Display on Your Terminal

A histogram or table is displayed on your terminal one page at a time. To see the next page, press the Return key. You can keep pressing Return to see successive pages until you reach the summary page. The summary page contains various summary statistics and lists all qualifiers and node specifications used to generate the Analyzer display. It can be more than one page. Section 1.4.2.4, ''Interpreting the Summary Page'' provides a detailed interpretation of the summary page. If you press Return at the end of the summary, the Analyzer brings you back to the first page of the histogram or table.

You can use the PAGE command to page through the histogram or table. You can also use the FIND command to look for a page with a specific label or line number.

When you have a histogram or table that you want to print or save in a file, you can do so with the PRINT, FILE, and APPEND commands (see Section 1.4.2.6, ''Printing, Filing and Appending Analyzer Output'').

1.4.2.4. Interpreting the Summary Page

Performance and Coverage Analyzer Page 2

Program Counter Sampling Data (27546 data points total) - "*"  PCA Version 5.0 20-OCT-2006 14:13:18

PCA Version 5.0 20-OCT-2006 14:13:18  PLOT Command Summary Information:

Number of buckets tallied: 17

PLOT Command Summary Information:

Number of buckets tallied: 17  Program Counter Sampling Data - "*"

Data count in largest defined bucket: 27477 99.7%

Program Counter Sampling Data - "*"

Data count in largest defined bucket: 27477 99.7%  Data count in all defined buckets: 27539 100.0%

Data count in all defined buckets: 27539 100.0%  Data count not in defined buckets: 0 0.0%

Data count not in defined buckets: 0 0.0%  Portion of above count in P0 space: 0 0.0%

Portion of above count in P0 space: 0 0.0%  Number of PC values in P1 space: 0 0.0%

Number of PC values in P1 space: 0 0.0%  Number of PC values in system space: 0 0.0%

Number of PC values in system space: 0 0.0%  Data points failing /STACK_DEPTH or /MAIN_IMAGE: 7 0.0%

Data points failing /STACK_DEPTH or /MAIN_IMAGE: 7 0.0%  Total number of data values collected: 27546 100.0%

Total number of data values collected: 27546 100.0%  Command qualifiers and parameters used:

Command qualifiers and parameters used:  Qualifiers:

/PC_SAMPLING /DESCENDING /NOMINIMUM /NOMAXIMUM

/NOCUMULATIVE /NOSOURCE /ZEROS /NOSCALE /NOCREATOR_PC

/NOPATHNAME /NOCHAIN_NAME /WRAP /NOPARENT_TASK /NOKEEP /NOTREE

/FILL=("*","O","x","@",":","#","/","+")

/NOSTACK_DEPTH /MAIN_IMAGE

Node specifications:

PROGRAM_ADDRESS BY MODULE

No filters are defined

Qualifiers:

/PC_SAMPLING /DESCENDING /NOMINIMUM /NOMAXIMUM

/NOCUMULATIVE /NOSOURCE /ZEROS /NOSCALE /NOCREATOR_PC

/NOPATHNAME /NOCHAIN_NAME /WRAP /NOPARENT_TASK /NOKEEP /NOTREE

/FILL=("*","O","x","@",":","#","/","+")

/NOSTACK_DEPTH /MAIN_IMAGE

Node specifications:

PROGRAM_ADDRESS BY MODULE

No filters are defined  PCAA>

PCAA>Not all of the lines shown in the example appear in all summary pages. For example, the summary page does not display the lines showing the number of PC values in P0, P1, or system space unless you have tallied program addresses.

Performance and Coverage Analyzer Page 3

I/O System Service Calls (3581 data points total) - "*"

Page Fault Program-Counter Data (121 data points total) - "O"  Program Counter Sampling Data (27546 data points total) - "x"

PCA Version 5.0 20-OCT-2006 14:15:32

PLOT Command Summary Information:

Number of buckets tallied: 17

I/O System Service Calls - "*"

Program Counter Sampling Data (27546 data points total) - "x"

PCA Version 5.0 20-OCT-2006 14:15:32

PLOT Command Summary Information:

Number of buckets tallied: 17

I/O System Service Calls - "*"  Data count in largest defined bucket: 3581 100.0%

Data count in all defined buckets: 3581 100.0%

Data count not in defined buckets: 0 0.0%

Portion of above count in P0 space: 0 0.0%

Number of PC values in P1 space: 0 0.0%

Number of PC values in system space: 0 0.0%

Data points failing /STACK_DEPTH or /MAIN_IMAGE: 0 0.0%

Total number of data values collected: 3581 100.0%

Command qualifiers and parameters used:

Data count in largest defined bucket: 3581 100.0%

Data count in all defined buckets: 3581 100.0%

Data count not in defined buckets: 0 0.0%

Portion of above count in P0 space: 0 0.0%

Number of PC values in P1 space: 0 0.0%

Number of PC values in system space: 0 0.0%

Data points failing /STACK_DEPTH or /MAIN_IMAGE: 0 0.0%

Total number of data values collected: 3581 100.0%

Command qualifiers and parameters used:  Qualifiers:

/IO_SERVICES /DESCENDING /NOMINIMUM /NOMAXIMUM

/NOCUMULATIVE /NOSOURCE /ZEROS /NOSCALE /NOCREATOR_PC

/NOPATHNAME /NOCHAIN_NAME /WRAP /NOPARENT_TASK /NOKEEP /NOTREE

/FILL=("*","O","x","@",":","#","/","+")

/NOSTACK_DEPTH /MAIN_IMAGE

Node specifications:

PROGRAM_ADDRESS BY MODULE

Filter definitions:

Qualifiers:

/IO_SERVICES /DESCENDING /NOMINIMUM /NOMAXIMUM

/NOCUMULATIVE /NOSOURCE /ZEROS /NOSCALE /NOCREATOR_PC

/NOPATHNAME /NOCHAIN_NAME /WRAP /NOPARENT_TASK /NOKEEP /NOTREE

/FILL=("*","O","x","@",":","#","/","+")

/NOSTACK_DEPTH /MAIN_IMAGE

Node specifications:

PROGRAM_ADDRESS BY MODULE

Filter definitions:  Filter F1:

RUN_NAME = 2

Page Fault Program-Counter Data - "O"

Data count in largest defined bucket: 46 38.0%

Data count in all defined buckets: 121 100.0%

Data count not in defined buckets: 0 0.0%

Portion of above count in P0 space: 0 0.0%

Number of PC values in P1 space: 0 0.0%

Number of PC values in system space: 24 19.8%

Total number of data values collected: 121 100.0%

Qualifiers applied to this datakind:

/NOCUMULATIVE /NOSTACK_DEPTH /NOPARENT_TASK /NOCREATOR_PC

/NOMAIN_IMAGE

Filter definitions:

Filter F1:

RUN_NAME = 1

Program Counter Sampling Data - "x"

Data count in largest defined bucket: 27477 99.7%

Data count in all defined buckets: 27539 100.0%

Data count not in defined buckets: 0 0.0%

Portion of above count in P0 space: 0 0.0%

Number of PC values in P1 space: 0 0.0%

Number of PC values in system space: 0 0.0%

Data points failing /STACK_DEPTH or /MAIN_IMAGE: 7 0.0%

Total number of data values collected: 27546 100.0%

Qualifiers applied to this datakind:

Filter F1:

RUN_NAME = 2

Page Fault Program-Counter Data - "O"

Data count in largest defined bucket: 46 38.0%

Data count in all defined buckets: 121 100.0%

Data count not in defined buckets: 0 0.0%

Portion of above count in P0 space: 0 0.0%

Number of PC values in P1 space: 0 0.0%

Number of PC values in system space: 24 19.8%

Total number of data values collected: 121 100.0%

Qualifiers applied to this datakind:

/NOCUMULATIVE /NOSTACK_DEPTH /NOPARENT_TASK /NOCREATOR_PC

/NOMAIN_IMAGE

Filter definitions:

Filter F1:

RUN_NAME = 1

Program Counter Sampling Data - "x"

Data count in largest defined bucket: 27477 99.7%

Data count in all defined buckets: 27539 100.0%

Data count not in defined buckets: 0 0.0%

Portion of above count in P0 space: 0 0.0%

Number of PC values in P1 space: 0 0.0%

Number of PC values in system space: 0 0.0%

Data points failing /STACK_DEPTH or /MAIN_IMAGE: 7 0.0%

Total number of data values collected: 27546 100.0%

Qualifiers applied to this datakind:  /NOCUMULATIVE /NOSTACK_DEPTH /NOPARENT_TASK /NOCREATOR_PC

/MAIN_IMAGE

No filters are defined

/NOCUMULATIVE /NOSTACK_DEPTH /NOPARENT_TASK /NOCREATOR_PC

/MAIN_IMAGE

No filters are definedData kind lines. Each line of this header information names one of the data kinds plotted, in the order that they were plotted. | |

Summary information by data kind. The summary information is repeated for each data kind, and is presented in the order that they were plotted. | |

Qualifier information. This list includes all qualifiers used by the Analyzer. Subsequent qualifier information lists only those applied to the data kind. | |

Filters applied to this data kind. This line includes only the filters that were defined for this data kind. | |

Qualifiers applied to this data kind. This list includes only the qualifiers applied to this data kind. |

Information that does not vary among the data kinds (such as the number of buckets, the qualifier list, and node specification) is only displayed once.

1.4.2.5. Viewing the Currently Active Plot

The plot or table resulting from the last PLOT or TABULATE command you entered is known as the currently active plot. The currently active plot is the source of all default qualifiers and parameters for a subsequent PLOT or TABULATE command. Use the SHOW PLOT command to view all the attributes of the currently active plot.

1.4.2.6. Printing, Filing and Appending Analyzer Output

The PRINT, FILE, and APPEND commands act on the output from the last PLOT, TABULATE, INCLUDE, EXCLUDE, LIST or traverse command you entered. You can use PRINT, FILE, APPEND commands to print or file raw performance data in addition to histograms and tables. Also, with the FILE/DDIF command, you can store your output in a DDIF file to process for a specified printing device.

PCAA> PRINT %PCA-I-FILQUE, print file queued to SYS$PRINT PCAA>To be notified on your terminal when the print job has completed, use the /NOTIFY qualifier with the PRINT command. No other qualifiers are accepted.

PCAA> FILE OUTFILE %PCA-I-CREFILE, creating file SYS$DISK01:[LEE]OUTFILE.PCALIS;1 PCAA>

PCAA> APPEND %PCA-I-APPFILE, appending to file SYS$DISK01:[LEE]OUTFILE.PCALIS;1 PCAA>

1.4.2.7. Stopping Terminal Output or Exiting the Analyzer Session

To stop the Analyzer's terminal output, such as long output from a SHOW or LIST command, press Ctrl/C. The Analyzer aborts the current output operation and prompts for a new command.

To end the Analyzer session, enter the EXIT command or press Ctrl/Z.

Chapter 2. Using the Collector

This chapter explains how to invoke the Collector, how to set up the Collector environment, and how to specify and filter the data you want to collect on your application's performance.

2.1. Overview

Invoke the Collector by compiling, linking and running your application.

Specify the name of the performance data file.

Specify the data kinds to collect.

Select the language of your application.

Name the collection run.

Type GO to start the collection run and store the data in the performance data file.

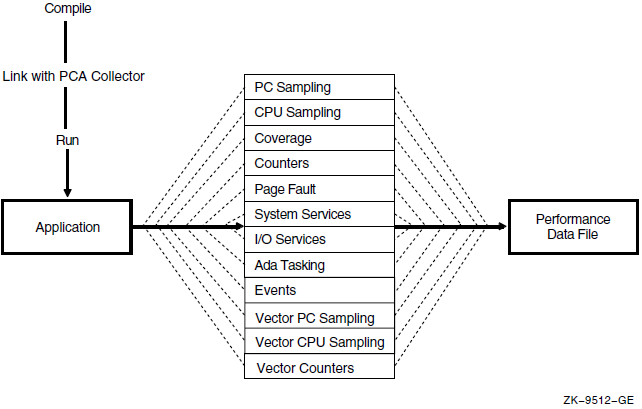

Figure 2.1, ''The PCA Collector'' shows how the Collector interacts with your application to collect data.

2.2. Invoking the Collector

$ LINK/DEBUG PCA$PRIMES.OBJ

$ DEFINE LIB$DEBUG PCA$COLLECTOR.EXE

$ RUN PCA$PRIMES.EXE

PCA Collector Version 4.6

PCAC>To

return to the debugger, deassign the logical name LIB$DEBUG, as

follows:$ DEASSIGN LIB$DEBUG

$ RUN PCA$PRIMES.EXE

OpenVMS Debugger Version 7.0

DBG>When collecting coverage data, use the /NOOPTIMIZE qualifier during compilation.

2.3. Specifying the Performance Data File

PCAC> SET DATAFILE PCA$PRIMES_PC_SMPL

If you do not specify a data file,the Collector names the data file after the executable image file of the program you are measuring with a default file type of .PCA.

To direct the gathered data to a new data file with each run,enter a new SET DATAFILE command for each collection run.

Appending a New Data Collection to an Existing File

PCAC> SET DATAFILE/APPEND/EXECUTABLEThis example sets the data file to the default data file. When using the /EXECUTABLE qualifier, omit the file specification parameter.

2.4. Specifying Data Collection

When you invoke the Collector and name the data file,you must specify the kinds of data you want to gather.

PC Sampling

CPU Sampling

Coverage

Counters

Page fault

System services

I/O services

Ada tasking

Events

Vector PC sampling

Vector CPU sampling

Vector counters

By default, PCA collects PC sampling and stack data when you enter a GO command without specifying a data kind.

| Set PC_Sampling | (see Section 2.4.1, ''Program Counter Sampling Data: System Time'') |

| Set PCU_Sampling | (see Section 2.4.2, ''Program Counter Sampling Data: CPU Time'') |

| Set Page_Faults | (see Section 2.4.1.4, ''Interpreting Page-Faulting Data'') |

| Set Services | (see Section 2.4.6, ''System Services Data'') |

| Set IO_Services | (see Section 2.4.7, ''Input/Output Data'') |

| Set Tasking | (see Section 2.4.8, ''Tasking Data'') |

2.4.1. Program Counter Sampling Data: System Time

PCAC> SET PC_SAMPLINGThe Collector samples the PC by setting up an asynchronous system trap (AST) timer routine in supervisor mode. By default, the AST timer routine is activated every 10 milliseconds. Each time the routine is activated, the Collector retrieves the current PC value for output to the data file. Because the timer operates in supervisor mode, it samples the PC in all user-mode and user AST-mode that your program executes. The Collector cannot collect data on programs that run in other modes.

PC sampling data collection is efficient. The extra overhead of gathering this data is typically no more than 5 percent of the total run time of the user program. However, run times of a minute or more are required to gather enough PC values for statistically significant results. You can gather at most 100 values per second, and you usually need to gather thousands of values to get significant results.

2.4.1.1. PC Sampling Data Distortion

PC sampling provides correct and repeatable results if the Collector supplies enough PC values for statistically significant results. When collecting PC values under ideal conditions, the chances of finding the PC in a given address range is proportional to the amount of time the program actually spends in that address range. However, when less than ideal conditions exist, the results of PC sampling may be distorted. This section discusses those situations that can distort PC sampling data and lead you to draw incorrect conclusions about the behavior of your program.

The overall system load at the time of collection affects the number of program counter values the Collector gathers, because program execution slows down as more demands are made on the system CPU. When program execution slows, the 10-millisecond timer (which is relatively unaffected by the CPU load) gathers more PC values per program run than when your system is lightly loaded and processing faster. The actual number of PC values collected is not important as long as the number is large enough to establish a representative sample. Only the proportion of PC values found in a given address range is significant and repeatable.

A less than ideal condition exists when the system load changes markedly during the PC sampling session. This condition may force the program you are measuring to share the system with a CPU-intensive process. This would cause the program to run more slowly in real time, produce more 10-millisecond ticks, and collect a greater number of PC values. Now, consider that the other process may terminate at some point through the PC sampling run. If this occurs, then the rest of the PC sampling run executes at full speed, experiences fewer 10-millisecond ticks, and collects fewer PC values than it did before the other process terminated. Under these conditions, the PC sampling data would be misleading because the program was not run on an evenly loaded system. There could be a skew of PC sampling data toward the beginning of the run.

Synchronizing your code with the 10-millisecond timer can also cause PC sampling data distortion. For example, if your program sets up a user-mode timer AST routine, then that routine normally gains control as soon as the Collector's supervisor-mode timer AST routine runs to completion. The AST routine starts early in the Collector's timer interval and probably finishes before the next 10-millisecond tick occurs. Therefore, no PC values are likely to be collected from such an AST routine, and you may wrongly conclude that no time is consumed in that routine. An example of this situation is an Ada multi-tasking program that is time slicing at every 10 milliseconds.

2.4.1.2. Interpreting System Service Wait Times

Interpreting system service wait times can also be deceiving. When your program calls a system service that runs in executive or kernel mode, the PC points to the first byte following a change-mode instruction in the system service vector in P1 space. However, as long as the system service is running in that higher mode, the Collector's supervisor-mode timer AST routine cannot gain control. When the higher-mode code has finished executing, the Collector's AST routine gains control and collects only one PC value, even if many 10-millisecond ticks occur in that time frame. Therefore, the amount of CPU time consumed in system services may not be properly represented in the PC sampling data.

2.4.1.3. Interpreting I/O Services Wait Times

In comparison to system service wait times, I/O services wait times are properly represented in PC sampling data. The CPU time used by the I/O system services may be under represented, but once the physical I/O operation is queued or started, the Collector's timer AST routine can continue to collect PC values. Therefore, I/O-bound programs may result in large numbers of PC values occurring in P1 space. These values represent I/O system service wait times.

Under some circumstances, I/O wait times should be evaluated cautiously. For example, if your program is accessing an overloaded disk, much of the wait time may be due to competition from other processes accessing the same device. In such cases, the best way to reduce program I/O wait time is to reduce the load on that disk, perhaps by spreading its files over several disks. Before you decide to code your program to make it faster, you should consider both the internal and external factors that can be causing poor I/O performance.

Because terminals are inherently slow devices, terminal I/O is likely to cause many PC values to be collected in P1 space. A terminal-bound application can easily spend 80 to 90 percent of its time waiting for the terminal. Collecting many PC values in P1 space is a normal characteristic of terminal-bound programs.

2.4.1.4. Interpreting Page-Faulting Data

PC sampling data measures page faulting time in addition to CPU and I/O time. When a page fault occurs, the Collector gathers the PC value of the instruction that caused the page fault. If you find a big PC sampling peak in a routine or in a code segment, the peak could be due to page faulting rather than to CPU usage. If you are in doubt about the PC data, collect page fault data or collect CPU sampling data to see if that can explain the PC sampling peak in that code segment.

2.4.1.5. Collecting PC Sampling Data and Other Data in the Same Run

You can collect different kinds of data in the same collection run. However, some kinds of data impose a high collection overhead that can substantially alter the run-time behavior of your program. For this reason, the Collector provides an informational message warning about possible conflicts. For example, execution counters can slow your program down several hundred times. If you try to collect execution counts and PC sampling data at the same time, the overhead of collecting execution counts may make the PC sampling data meaningless.

Although you should avoid collecting PC sampling data and execution count data at the same time, there are exceptions. For example, an execution counter that is "hit" only half a dozen times in a long collection run may have no appreciable effect on the PC sampling process.

Usually, you can collect page fault data and PC sampling data at the same time with little or no distortion in either kind of data. However, avoid collecting system services data, I/O data, CPU sampling data, execution counts,or test coverage data at the same time that you collect PC sampling data.

2.4.2. Program Counter Sampling Data: CPU Time

PCAC> SET CPU_SAMPLING

There are many external factors that can affect the behavior of a program in relation to the system clock (for example, page faulting and system service wait time, including I/O wait time). These conditions make it difficult to determine whether the program counter contains a specific location because of the structure of the program's algorithm or because of other external factors occurring in that interval. Under these conditions, sampling the PC values based on the CPU time is more effective and reproducible because the effects caused by contending processes are eliminated.

2.4.3. Test Coverage Data

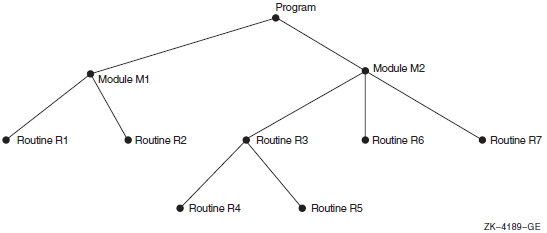

If you want to see which parts of your application are covered or not covered by your test suite, use the SET COVERAGE command. You must use node specifications to specify program locations on which to place breakpoints so that test coverage data or execution counts can be gathered for that area of the program. Node specifications refer to those elements of your application that can be defined as a program address, a module, a routine, a code path, or a line.

Figure 2.2, ''A Program Represented as a Tree'' depicts the different elements of a program as a tree structure. Many of the examples used in the following sections refer to its modules and routines by name.

PCAC> SET COVERAGE/STACK_PCS PROGRAM BY CODEPATH

By default, the Collector removes each breakpoint the first time the code executes. This allows the program to run faster because it does not collect counts at locations that have already been covered. Therefore, frequently executed code runs at full speed after the first execution.

The complete syntax of node specifications, as used with the SET COUNTERS, SET COVERAGE, and SET EVENT commands, is shown in Table B.3, ''Node Specification Parameter Syntax''.

2.4.3.1. Codepaths

When you use the SET COVERAGE command, the most useful node specification is one with a BY CODEPATH clause. This nodespec causes the Collector to examine the program's object code in order to find all relevant codepaths. The term codepath is any piece of object code that the executing program enters only at the beginning (at the first instruction) and exits only at the end.

The codepath search starts at all entry points and labels. The Collector puts breakpoints at the entry points of the routines and at all possible destinations of conditional branches. In this regard, you can think of the codepath source as being a basic block within a high level language. In the Analyzer, you can plot or tabulate the collected data to see which codepaths are not covered by your tests.

PCAC> SET COVERAGE MODULE M2 BY CODEPATHSimilarly, the following command places test coverage counters on all codepaths in the entire program:

PCAC> SET COVERAGE PROGRAM_ADDRESS BY CODEPATHBecause the MACRO assembler does not provide adequate symbol table information, the Collector may not be able to find all codepaths in MACRO programs. To minimize this problem, you should declare all MACRO routines with .ENTRY directives. Even then, constructs such as indexed jump instructions or passing code addresses as parameters may cause codepaths to be missed.

PCAC> SET COVERAGE ROUTINE R6, ROUTINE R7, MODULE M1 BY ROUTINE

PCAC> SET COVERAGE PROG BY LINE, PROG BY CODEThis covers the union of addresses specified in the nodespec but may create an inordinate number of buckets.

PCAC> SET COVERAGE/UNTIL:3 ROUTINE FINDSUM BY CODEPATH

When compiling your program to gather coverage data, use the/NOOPTIMIZE qualifier to prevent the compiler from optimizing code and gathering incorrect results.

2.4.3.2. Modules

PCAC> SET COVERAGE MODULE M1 BY ROUTINE

If the subtree with Module M1 as its root has routine nodes for both R1 and R2, coverage breakpoints are placed on those two routine nodes, but not on root node Module M1.

2.4.3.3. Routines

2.4.3.4. Lines

PCAC> SET COVERAGE ROUTINE R3 BY LINEYou can also use the PROGRAM_ADDRESS BY LINE nodespec. Measuring test coverage at that many program locations is reasonable because test coverage data is collected only once for each line.

You can use the SEARCH and TYPE commands to display specific lines, or a range of lines. See Section 4.7.4.1, ''Viewing Displays with TYPE and SEARCH Commands'' for information on these commands.

2.4.3.5. Path-Name Qualification

PCAC> SET COVERAGE ROUTINE M1\R2 BY LINEIf a routine is nested within other routines, further qualification may be required in the routine's path name. Routine R4, for example, is nested within Routine R3, which is nested in Module M2. Its full path name is M2\R3\R4.

Specifying Path Names for Source Lines

R4\%LINE 25, R3\R4\%LINE

25, or M2\R3\R4\%LINE 25. However, specifying only the module

name and line number is sufficient because line numbers are always unique within a separately

compiled module. For example:PCAC> SET COVERAGE LINE M2\%LINE 25

2.4.3.6. Collecting Coverage Data from Multiple Test Runs

PCAC> SET DATAFILE/APPEND MY_DATAFILE.PCA PCAC> SET COVERAGE/PREVIOUS PROGRAM_ADDRESS BY CODEPATH

The /PREVIOUS qualifier causes the Collector to measure coverage at each designated program location only once during the entire set of program executions (collection runs). If a location has been covered in a prior execution, the Collector does not attempt to measure coverage of that location in subsequent executions of the program.

If the SET DATAFILE command creates a new data file, it then places test coverage breakpoints on all program locations that you specified on the SET COVERAGE command. When the collection run ends, all breakpoint locations not hit (or not hit n times if /UNTIL:n was also specified) are recorded in the data file as not covered.

If the SET DATAFILE command finds an existing file, then the breakpoint locations recorded by the previous collection run (those that were requested but not hit) are used. In this case, if you specify node specifications, they are ignored. When the collection run ends, all breakpointl ocations still not hit are once again recorded in the data file as not covered. Thus, each collection run starts with the breakpoint locations remaining from the previous collection run and passes on a reduced breakpoint table to the next collection run.

If you intend to use the Analyzer MERGE/ANC command (see Section 3.4.1, ''Merging PCA Performance and Software Performance Monitor (SPM) Files'') to write acceptable noncoverage information to a table, use the /ANC qualifier. This qualifier instructs the Collector to save the codepaths of the current version of the program for comparison with codepaths from another version of the program. This information is comprised of all codepath information in the modules that were specified by the nodespec in the SETCOVERAGE/ANC command. The Analyzer can tell which routines have changed from this saved information. See Section 3.6, ''Using Acceptable Noncoverage (ANC)'' for information on acceptable noncoverage (ANC).See Section 4.5, ''Determining Acceptable Noncoverage (ANC)'' for an example of collecting, merging and setting ANC data.

2.4.3.7. Gathering Test Coverage Without Optimization

When gathering information on how completely a given set of test data covers your program, you may find it helpful to first compile your program with the /NOOPTIMIZE qualifier. Compiling the program without optimization makes the program run slower, but may make it easier to relate the coverage points in the object module back to the original source code. If you measure test coverage over fully optimized code, then simple one-to-one correspondence between the coverage points in the optimized object code and the constructs in your source program may be lost.

2.4.4. Execution Count Data

The SET COUNTERS command determines the exact number of times that various parts of your program execute by placing breakpoints at specified program locations. Each time a breakpoint executes, the Collector records a count for that location. The Collector lets you specify these locations either individually or collectively. For example, an individual location could be line 6 of routine FINDSUM in your program, whereas a type of program locations could be every routine in the whole program or every line in a given routine.

PCAC> SET COUNTERS LINE FINDSUM\%LINE 6The nodespec FINDSUM\%LINE 6 specifies an individual program location for an execution counter. In the next example, the PROGRAM_ADDRESS BYROUTINE nodespec causes execution counters to be placed on every routine in the entire program:

PCAC> SET COUNTERS PROGRAM_ADDRESS BY ROUTINE

Note

Counting the number of times that program locations execute greatly increases CPU overhead. Therefore, you should be selective in using execution counters.

Placing an execution counter on every line in a program may make the program run a hundred times slower. Place execution counters at the entry point to each routine in your program, or for all lines in one routine, or for only strategic program locations.

It is practical to use execution counters when you need more exact data than you can get from PC sampling. Because execution count results are repeatable (provided the program and its input are fixed), useful data can be collected in short collection runs.

2.4.5. Page Fault Data

The address of the instruction that caused the fault

The faulting virtual address

The current CPU time

PCAC> SET PAGE_FAULTS

Collecting page fault data alters your program's page faulting behavior. The Collector requires some code and data to collect page fault data and therefore produces paging of its own. This reduces your program's working set, which increases the program's paging. This usually has little or no effect on the sites of the page faulting peaks in your program; the small disruptions introduced by the Collector can usually be ignored when you are trying to discover where your program is producing the most page faults.

Distortions introduced by collecting page fault data and other data at the same time can be significant. The process of collecting these other kinds of data may in itself cause page faulting, which distorts the page fault data. Avoid collecting call stack return addresses when collecting page fault data because the Collector can affect your program's page faulting behavior as it traverses the call stack. Call stack return addresses cannot be collected for the page fault data itself, but can be collected for other kinds of data during the run.

PCA supports this command on both OpenVMS VAX and OpenVMS Alpha.

2.4.6. System Services Data

The system service index

The PC address of the system service call

The current CPU time

PCAC> SET SERVICES

PCA supports this command on both OpenVMS VAX and OpenVMS Alpha.

For more information on system services and a list of the available system services, see the VSI OpenVMS System Services Reference Manual.

2.4.7. Input/Output Data

PCAC> SET IO_SERVICES

The SET IO_SERVICES command measures several Record Management Services (RMS). See Appendix B, "PCA Reference Tables" for a listing of both RMS and non-RMS services measured by this command. For more information on RMS services, see the VSI OpenVMS Record Management Services Reference Manual.

The I/O system service index

The PC address of the I/O call

The current CPU time

The file name

The physical I/O read count

The physical I/O write count

The file access block (FAB)

The record access block (RAB)

PCA supports this command on both OpenVMS VAX and OpenVMS Alpha.

2.4.8. Tasking Data

PCAC> SET TASKING

The context switch

The task priority

The task type

The PC value of the address where the task was created

The CPU time

The parent task

PCA supports this command on both OpenVMS VAX and OpenVMS Alpha.

2.4.9. Events Data

You can filter your data so that the Analyzer creates histograms or tables using only data from a specified phase of your program. Place event markers in the datafile using the Collector SET EVENT command. An event marker is a record that the Collector inserts into the data file whenever control passes to a specified program location during program execution.

PCAC> SET EVENT COMPUTE LINE M1\R2\%LINE 25In this example, the event name is COMPUTE and the event breakpoint location is line 25 of Routine R2 in Module M1. Whenever line 25 executes, an event marker for event COMPUTE is written to the data file. If you specify more than one breakpoint location for the event COMPUTE, execution of any of the designated locations would mark that event in the file.

You can use event markers to limit analysis of performance data to data collected between any two events occurring in your program's execution. The event marked is the execution of code at that program location.

The SET EVENT command causes the Collector to insert breakpoints at specified program locations. When the breakpoint location executes, all currently accumulated data is written to the data file followed by a time-stamped event marker record. The event marker record signifies that the indicated event occurred. You must specify an event name for each event. The Collector records all event names in your data file. Event markers, in effect, partition the collected data by time.

For example, if your program consists of three main phases—an input phase,a compute phase, and an output phase, executed in that order—you may want to look at the data from only one of these phases. If you want to investigate how often your program calls a particular utility routine during the compute phase of your program, place an event marker at the beginning of the compute phase and at the beginning of the output phase. Then, you can examine the data that is collected between these event markers. Data gathered between two event markers is associated with the event name given to the first of the two markers.

2.4.10. Collecting Vector Instruction Data

SET VCOUNTERS

SET VCPU_SAMPLING

SET VPC_SAMPLING

This section summarizes each of these commands. Chapter 5, "Using VAX Vectors with PCA" contains a complete discussion of using VAX Vectors with PCA.

2.4.10.1. Vector Program Counter Sampling Data

The SET VPC_SAMPLING Collector command lets you collect PC values for random vector instructions based on the wall clock. The collected data lets you determine the scalar/vector parallelism throughout your entire program.

When you collect vector PC samples, you set a sampling interval timer that includes all idle time associated with the current run of the program. This form of sampling shows you where the time is being spent in the program with little cost to the time of actually running the program.

2.4.10.2. Vector CPU Time Data

The SET VCPU_SAMPLING Collector command allows you to collect PC values for random vector instructions based on the processor clock. The collected data lets you determine the scalar/vector parallelism throughout your entire program.

When you collect vector CPU samples, you set a sampling interval timer that includes only the time when the program is actually running the processor. This form of sampling allows you to focus on the particular area of the program's algorithm where the time is being spent, and not on the areas where outside influences consume time.

2.4.10.3. Vector Instruction Execution Count Data

PROGRAM ADDRESS by VINSTRUCTION

MODULE module-name by VINSTRUCTION

ROUTINE routine-name by VINSTRUCTION

2.4.11. Collecting PC Values from the Call Stack

For some forms of data collection, the Collector records the current value of the program counter, along with the other data collected. The Analyzer uses the program counter to associate the collected data with the particular module, routine, line, or other program unit that generate the data.

Sometimes the value of the program counter may not be the most meaningful program address to collect. For example, if you gather I/O data on your FORTRAN program, the PC values of all the I/O system service calls are within the address range of the FORTRAN Run-Time Library. What you really need to know is what sections of your FORTRAN program are causing the I/O calls. To discover what program code is calling the Run-Time Library, you must gather all subroutine return addresses stored on the stack for each collected data point.

PC sampling data

CPU sampling data

System services data

I/O services data

Exact execution counts

Test coverage data

Vector PC sampling data

Vector CPU sampling data

Vector counters data

PCAC> SET STACK_PCS

Collecting this data adds to the amount of data gathered and to the processing overhead of gathering the data. However, you can gather exactly the data you need and minimize PCA's overhead by selectively collecting desired data kinds with the /STACK_PCS qualifier.

2.4.11.1. Collecting Stack PCs by Data Kind

Call Stack return addresses can be gathered selectively by data kind with the /STACK_PCS qualifier. The /STACK_PCS qualifier allows you to collect only the data that you need, thus minimizing PCA's overhead.

The /STACK_PCS qualifier causes the collection of stack PC values. The /NOSTACK_PCS qualifier prevents the collection of stack PC values.

PCAC> SET CPU_SAMPLING/NOSTACK_PCS

PCAC> SET IO_SERVICE/STACK_PCS PCAC> CANCEL IO_SERVICE PCAC> SET IO_SERVICEYou can collect stack PC values for all nodespecs of a measurement,or for none. If you enter the following command sequence, then stack PC values are not collected:

PCAC> SET COUNTERS/STACK_PCS MODULE A BY LINE PCAC> SET COUNTERS/NOSTACK MODULE B BY LINE PCAC> SET COUNTERS MODULE A BY LINEThe SET STACK_PCS command overrides all previously set /NOSTACK_PCS qualifiers and causes a new default of /STACK_PCS. The CANCEL STACK_PCS command overrides all previously set /STACK_PCS qualifiers and causes a new default of /NOSTACK_PCS. For information on data kind collection in vector applications, see Chapter 5, "Using VAX Vectors with PCA".

2.5. Selecting the Language of Your Application

The language setting determines how PCA parses symbol names in command input. If the language is set to C, PCA treats symbol names as case sensitive. If the language is set to anything other than C or C++, symbol names are assumed to be case insensitive.

PCAC> SET LANGUAGE C

This command changes the language setting to C. Symbol names are then parsed by the C language rules.

2.6. Naming the Collection Run

Each program execution run under Collector control is called a collection run. The data collected from each collection run is recorded separately in the performance data file. Collection run numbers are assigned sequentially by the Collector, starting at 1.

PCAC> SET RUN_NAME name-of-run

If name-of-run does not start with an alphabetic character, you must enclose it in quotation marks.

If you assign the same collection run name to more than one collection run in the same data file, data from all those runs passes the filter specified by that name. Thus, you can assign the same collection run name to a whole group of collection runs when you intend to filter that group of runs as a unit in the Analyzer.

If you do not use the SET RUN_NAME command, you get a null run name; the Collector assigns a number as a run name. To show collection run names, use the SHOW RUN_NAME command.

2.7. Starting and Terminating Data Collection

After you invoke the Collector and optionally set the data file and collection type, enter the GO command to start data collection.

To stop a Collector session before you enter the GO command, enter the EXIT command or press Ctrl/Z. If the data collection has already started, you cannot enter an EXIT command; you must press Ctrl/Y to stop the collection run.

If you press Ctrl/Y, do not use the DCL STOP command immediately afterward. If Ctrl/Y interrupts the Collector when the Collector is executing supervisor-mode code, a subsequent STOP command may cause a supervisor-mode exception that kills your entire process and logs you out. To avoid this, execute another program after pressing Ctrl/Y, or type EXIT. The Collector's exit handlers then successfully close out the data collection.

To stop Collector output to your terminal, such as SHOW command output, press Ctrl/C. The Collector aborts the command and returns to DCL level.

To run your program without collecting data, use the /NOCOLLECT qualifier with the GO command.

2.8. Using Collector Command Procedures

If you repeatedly enter the same group of commands to the Collector, then place those commands into a command procedure. This makes command entry more efficient and less error prone.

A command procedure is a text file of commands that substitute for an interactively entered sequence of commands. The default file type is .PCAC. Add SET VERIFY to the procedure to view the commands as they are executed from the procedure.

When the Collector encounters a GO command in a command procedure, it starts collecting data and does not accept additional commands from the terminal or from the command procedure. If a command procedure does not contain a GO command, the Collector interactively prompts for further commands after executing the commands in the command procedure.

When the Collector encounters an EXIT command in a command procedure, that command procedure terminates and control returns to the command stream (either the terminal or a previous command procedure) that invoked the command procedure.

$ DEFINE PCAC$INIT SYS$LOGIN:COLLECTOR_STARTUP.PCAC

SET DATAFILE/APPEND PRIMES_IO ! Append data to existing file SET IO_SERVICES ! Collect I/O services data GO ! Start collection

2.9. Using Collector Logical Names

The Collector checks for a number of logical names which, if defined, modify the activity of the Collector in various ways. These names perform functions such as defining input and output streams and defining the name of the performance data file to use. In Section 2.8, ''Using Collector Command Procedures'', PCAC$INIT was used to define an initialization file. As a convenience, use logical names rather than repeated commands to pass information to the Collector.

|

PCAC$DATAFILE |

PCA$RUN_NAME |

|

PCA$INHIBIT_MSG |

PCAC$INIT |

|

PCAC$INPUT |

PCAC$OUTPUT |

|

PCAC$DECW$DISPLAY |

For a more detailed description of these logical names, see Table B.4, ''Collector Logical Names''.

2.10. Gathering Shareable Image Data

Note

There are two classes of shareable images: those that are user written, and those that are provided. The Analyzer can report symbolically only on user-written shareable images. See the VSI OpenVMS Linker Utility Manual for more information on shareable images.

The Collector writes all shareable image names and address ranges to the performance data file. The Analyzer uses this information to report on each shareable image.

User-Written Shareable Images

- Compile all sources for the shareable image with /DEBUG to gather performance data on a shareable image. To do symbolic performance analysis on the shareable image, first compile the sources:

$ FORTRAN/DEBUG SHARE

In this example, MAIN.FOR is the source file for your main image, and SHARE.FOR is the source file for the shareable image to be called by other programs. Assume that SHARE.FOR has a single universal symbol called SHARED_ROUTINE, which isa routine called from the main program MAIN.FOR.

- Enter LINK/SHAREABLE/DEBUG to create a version of the shareable image that contains the DST information the Collector needs and enter the universal symbol:

$ LINK/SHARE/DEBUG SHARE,SYS$INPUT/OPTION- _$ UNIVERSAL=SHARED_ROUTINE Ctrl/ZThis command builds the shareable image SHARE.EXE in your current directory.

- Link the program /DEBUG to link the programs that use the shareable image. Because it is a shareable image, you cannot run SHARE.EXE directly. Instead, you have to link your main image against it, then point the logical name SHARE to your copy of SHARE.EXE:

$ LINK/DEBUG MAIN,SYS$INPUT/OPTION SHARE.EXE/SHARE Ctrl/Z $ DEFINE SHARE SYS$DISK:[]SHARE.EXEPoint LIB$DEBUG to PCA Collector:$ DEFINE LIB$DEBUG PCA$COLLECTOR

- Run the programs that use your shareable image, as follows:

$ RUN MAIN PCA Collector Version 4.6 PCAC> - Enter the SET DATAFILE/SHAREABLE=img-name command. This command specifies the shareable image to be measured.(The /SHAREABLE=(img-name,dst-file) form of the /SHAREABLE qualifier is supported, but only for the sake of compatibility with PCA Version 1.0.) When you get the Collector prompt, enter the SET DATAFILE command:

PCAC> SET DATAFILE/SHAREABLE=SHARE MY_DATAFILE.PCA

SHARE is the name of the shareable image to be measured, and MY_DATAFILE.PCA is the file specification for the performance data file.

The Collector creates the performance data file, extracts the required symbol table information from the shareable image, and writes it to the data file. The Collector then prompts for additional commands.

Specify your remaining data collection commands and enter a GO command to start the data collection. After the collection run ends, you can process the performance data by running the Analyzer.

You can measure the performance of one shareable image averaged over many executions of different programs. Use the /APPEND qualifier to collect data from several program executions into one data file.

Chapter 3. Using the Analyzer

This chapter describes the Analyzer, explains the steps you must follow to use the Analyzer, and provides examples of tasks you can perform while using the Analyzer.

3.1. Overview

Invoke the Analyzer, which then reads the performance datafile that the Collector has built.

View the performance data results as tabular and graphical reports, filtering and refining the data to get a more detailed view.

Analyze and pinpoint the bottlenecks in your application or evaluate the thoroughness of your testing environment.

Each of these steps is described fully in this chapter.

Performance histograms showing how much time or other resource is consumed by various parts of your program

Tables showing information in the form of raw data counts and percentages

Annotated source listings showing performance or coverage data

Histograms and tables for other data domains, such as the number of calls per system service, or the amount of I/O per file used by your program

Listings of your raw performance data

Dynamic call trees that show the frequency of each call chain

3.2. Invoking the Analyzer

$ PCA PCA$PRIMES

Performance and Coverage Analyzer Version 5.0

PCAA>The data file in the previous example, PCA$PRIMES.PCA, contains the performance or coverage data and all symbol table information required by the Analyzer.

See Chapter 1, "Introduction" for complete information on invoking the Analyzer, creating the default plot, scrolling through your display, interpreting the summary page, and printing, filing, and appending Analyzer output.

3.3. Generating Histograms and Tables

The PLOT and TABULATE commands display performance and coverage data in an understandable form. The PLOT command produces performance histograms that plot the distribution of resource usage over your program or over other data domains. The TABULATE command presents the same information in the form of tables so you can see the actual data counts, instead of scaled histogram bars.

The PLOT and TABULATE command qualifiers specify the kind of data to analyze, and the format and display of the output.

When you enter a PLOT command, use the qualifiers to specify what kind of data to tally and how to partition the histogram into buckets. These two elements define the meanings of the horizontal and vertical axes of the histogram.

If you do not specify a data-kind qualifier on a PLOT or TABULATE command, then a default data kind is used based on the data collected in the data file. See the online PCA Command Dictionary for detailed information on the PLOT and TABULATE commands.

3.3.1. Specifying the Kind of Data to Tally in the Histogram or Table

In order to plot or tabulate a certain kind of data, you must have already collected the data in the Collector. The Analyzer data kind directly corresponds to the data gathered in the Collector. Table B.7, ''The SET Command with Corresponding Data-Kind Qualifiers'' shows the correspondence of the Collector SET commands to the Analyzer data-kind qualifiers.

PCAA> PLOT/COVERAGE

3.3.2. Partitioning Histograms into Buckets

PCAA> PLOT/PC_SAMPLING PROGRAM_ADDRESS BY ROUTINE

Buckets are defined by attributes of the data points in the data file. Depending on the data kind, these attributes can be the program address value, the CPU time stamp, the system service name, the file name, the record size, the physical I/O count, and so on. You can partition many different kinds of data domains along the vertical axis of the plot,not just the program address domain. For example, you can partition the system services domain so that each histogram bar represents the number of calls on one system service.

PCAA> PLOT/PC_SAMPLING PROGRAM_ADDRESS BY MODULE

Performance and Coverage Analyzer Page 1

Program Counter Sampling Data (386 data points total) - "*"

Bucket Name +----+----+----+----+----+----+----+----+----+

PROGRAM_ADDRESS\ |

PRIMES . . . . . . |* 0.8%

PRIME . . . . . . |********************************************** 61.9%

READ_RANGE . . . . |**** 4.7%

READ_END_OF_FILE . | 0.0%

READ_ERROR . . . . | 0.0%

OUTPUT_TO_DATAFILE | 0.3%

SHARE$FORRTL . . . |**** 5.4%

SHARE$LIBRTL . . . |** 2.6%

SHARE$PCA$COLLECTOR| 0.0%

SHARE$DBGSSISHR . | 0.0%

SHARE$PCA$PRVSHR . | 0.0%

SHARE$LBRSHR . . . | 0.0%

SHARE$SMGSHR . . . | 0.0%

|

|

|

+----+----+----+----+----+----+----+----+----+

PCAA>The /PC_SAMPLING qualifier defines the meaning of the horizontal axis of the previous plot;the number of PC sampling data points in each bucket is plotted along that dimension. The node specification defines the meaning of the vertical axis; modules are plotted along that dimension. In this case, each bucket is defined by a module address range and is labeled by a module name.

The scale of the histogram is adjusted so that the longest bar occupies the full width of the plot. You can adjust this scale with the /SCALE qualifier to make the comparison of histograms more convenient.

PCAA> TABULATE/PC_SAMPLING PROGRAM_ADDRESS BY MODULE

Performance and Coverage Analyzer Page 1

Program Counter Sampling Data (386 data points total) - "*"

Data 95% Conf

Bucket Name Count Percent Interval

PROGRAM_ADDRESS\ PRIMES . . . . . . . . . . 3 0.8%

PRIME . . . . . . . . . . 239 61.9% +/- 4.8%

READ_RANGE . . . . . . . . 18 4.7% +/- 2.2%

READ_END_OF_FILE . . . . . 0 0.0%

READ_ERROR . . . . . . . . 0 0.0%

OUTPUT_TO_DATAFILE . . . . 1 0.3%

SHARE$FORRTL . . . . . . . 21 5.4% +/- 2.3%

SHARE$LIBRTL . . . . . . . 10 2.6% +/- 1.6%

SHARE$PCA$COLLECTOR . . . 0 0.0%

SHARE$DBGSSISHR . . . . . 0 0.0%

SHARE$PCA$PRVSHR . . . . . 0 0.0%

SHARE$LBRSHR . . . . . . . 0 0.0%

SHARE$SMGSHR . . . . . . . 0 0.0%

PCAA>This example shows that most of the time is consumed in module PRIME. To focus on the area that consumes the most time in module PRIME, examine that module at a finer level of detail.

PCAA> PLOT MODULE PRIME BY LINE

Performance and Coverage Analyzer Page 1

Program Counter Sampling Data (386 data points total) - "*"

Percent Count Line

PRIME\

1:

2: C Function to identify whether a given

number is p

3: C If it is prime, the returned function

value is T

4: C

0.0% 5: LOGICAL FUNCTION PRIME(NUMBER)

0.0% 6: PRIME = .TRUE.

0.3% 7: DO 10 I = 2, NUMBER/2

60.4% ******** 8: IF ((NUMBER - ((NUMBER / I) * I)) .EQ.

0) THEN

9: PRIME = .FALSE.

0.0% 10: RETURN

0.0% 11: ENDIF

1.3% 12: 10 CONTINUE

0.0% 13: RETURN

14:

ENDPCAA>3.3.2.1. Filtering Performance Data

You can filter performance or coverage data before the data is used to generate histograms or tables. This is useful when you wantonly a certain part of that data to be considered in a given data reduction. For example, you may only want the data associated with a certain event marker to be included in your histogram.

Setting Filters

To filter data, you must establish filter definitions with the SET FILTER command. The SET FILTER command takes two parameters: a filter name that you define and a comma-delimited list of filter restrictions (see the online PCA Command Dictionary). The filter restrictions specify limits or conditions that any given data point must satisfy in order to pass the filter. You can filter data on all attributes that are used to plot or tabulate data.

PCAA> SET FILTER F1 RUN_NAME=3, RUN_NAME=5

Any data point that comes from collection run 3 or collection run 5 passes filter F1.

PCAA> SET FILTER F2 RUN_NAME<>8

Specifying Multiple Filter Restrictions

By specifying multiple restrictions for a single filter, you can logically OR the restrictions. Also, by declaring more than one filter, each with a separate SET FILTER command and name,you can logically AND the restrictions. That is, if you have several filters, data points must pass every one of those filters to be included in a plot or table. These capabilities give you considerable flexibility in filtering your performance or coverage data. See the description of the SET FILTER command in the online PCA Command Dictionary for examples of specifying multiple filter restrictions.

Multiple filters are applied in the order that they are specified. There are no precedence rules.

Filtering Data by Event Name

PCAA> SET FILTER F2 TIME=EVENT_NAMEEVENT_NAME is the name of an event marker whose data you want to include in subsequent plots or tables. Event markers are declared with the SET EVENT command in the Collector.

PCAA> SET FILTER CHAIN_NAME=(ROUTINE1,*)(ROUTINE1,*) filters all the data points with the call chains that have ROUTINE1 at the bottom of the stack. If you specified (*,ROUTINE1,*,ROUTINE2,*), the filter would apply to data points that have the call chain of ROUTINE1 calling ROUTINE2 directly or indirectly. Note that you can only use wildcards for program unit names as a whole, but not for portions of their identifiers. For example, (ROUT*) does not mean all of the routines that begin with ROUT. Rather, the meaning is for the program unit name of ROUT*.

The SET FILTER command, described in the online PCA Command Dictionary, contains the filter restrictions that specify the conditions that any given data point must satisfy in order to pass the filter. You can filter data on all attributes that are used to plot or tabulate data.

PCAA> SET FILTER/CUMULATIVE F1 PROG_ADDR=ROUTINE_1

PROG_ADDR=ROUTINE_1 indicates that the PC used must be in the address range of ROUTINE_1. /CUMULATIVE indicates that every PC on the stack should be looked at, and any PC that passes the data point passes the filter.

PCAA> SET FILTER/MAIN=ROUTINE_1/STACK=1 F1 PROG_ADDR=ROUTINE_2

/MAIN=ROUTINE_1 indicates a step down the stack until the return PC is in ROUTINE_1. /STACK=1 indicates a step one frame further down. PROG=ROUTINE2 indicates that the PC must be in the range of ROUTINE_2.

PCAA> SET FILTER/MAIN=ROUTINE_1/STACK=2/CUM F1 PROG_ADDR=ROUTINE_2

/MAIN=ROUTINE_1 indicates a step down the stack until the return PC is in ROUTINE_1. /STACK=2 indicates a step two frames further down the stack. /CUMULATIVE specifies to look at all the remaining addresses. PROG=ROUTINE_2 specifies that if the address is in the range of ROUTINE_2, the PC passes the filter.

Rules for Applying Filter Specifications

After you have defined a filter, that filter remains in effect for all subsequent PLOT and TABULATE commands. However, that does not mean that the filter will always be applied to the current plot. If the data kind is inconsistent with the specified filter because of the data collected, then the filter is ignored and the data point is included in the histogram. Table B.9, ''Filter Specification by Data Kind'' shows when the filter specification is applied and when it is not.

Canceling a Filter

You can cancel a filter with the CANCEL FILTER command or enter another SETFILTER command with the same filter name. The CANCEL FILTER command takes as a parameter the name of the filter to cancel. If you want to cancel all current filters, use the CANCEL FILTER/ALL command.

Showing Filters

To show what filters you currently have defined, use the SHOW FILTER command. The summary page from any PLOT or TABULATE command also lists the current filters.

3.3.2.2. Specifying Modules and Routines

PCAA> PLOT MODULE M1 BY ROUTINE

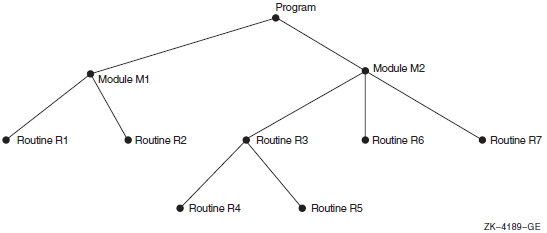

Figure 3.1, ''A Program Represented as a Tree'' shows the relationship of modules and routines to each other within a program's tree structure.

3.3.2.3. Specifying Individual Buckets

PCAA> PLOT ROUTINE R3

PCAA> PLOT ROUTINE R3, ROUTINE R4, MODULE M1This command results in a histogram with three buckets: one for Routine R3, one for Routine R4, and one for Module M1. Each histogram bar is labeled with the name of the corresponding routine or module.

3.3.2.4. Specifying a Set of Buckets

PCAA> PLOT MODULE M1 BY ROUTINEModule M1 specifies a node in the program tree, and that node is the root of a subtree. The BYROUTINE clause selects each routine node in the Module M1 subtree and creates a bucket for each one.

The subtree that has Module M1 at its root has routine nodes for routines R1 and R2. The Analyzer creates one histogram bar for each of these two routines.

PCAA> PLOT PROGRAM_ADDRESS BY ROUTINEThis command creates buckets for routines R4 and R5 (which are nested within routine R3) as well as for all the top-level routines. All routine nodes in the tree are therefore included, even if they are nested below other routine nodes. Similarly, the nodespec MODULE M2 BY ROUTINE includes routines R4 and R5 as well as R3, R6, and R7.

PCAA> PLOT PROGRAM_ADDRESS BY MODULEThis command is useful if your program uses shareable images,such as the run-time library, for your language. The Analyzer creates module nodes for all shareable images and assigns them the appropriate address ranges. Each such module has a formal name of the form MODULE SHARE$imgname, where imgname is the shareable image name. This PLOT command lets you see how much time or other resource is consumed in each shareable image your program calls. You can break down provided shareable images by module only.

3.3.2.5. Specifying Lines

The program tree contains nodes for all lines in the program. Using a BY LINE clause, you can create a bucket for each line in a given program unit. The BYLINE clause in Example 3.4, ''BY LINE Clause Output'' selects all line nodes in the subtree that have Routine R3 as their root.

PCAA> PLOT/NOSOURCE ROUTINE R3 BY LINE

Performance and Coverage Analyzer Page 2

Program Counter Sampling Data (386 data points total) - "*"

Bucket Name +----+----+----+----+----+----+----+----+----+

%LINE 37 . . .| 0.0%

%LINE 38 . . .| 0.0%

%LINE 45 . . .| 0.0%

%LINE 46 . . .| 0.0%

%LINE 48 . . .| 0.0%

PRIME\ |

%LINE 5 . . .| 0.0%

%LINE 6 . . .| 0.0%

%LINE 7 . . .| 0.3%

%LINE 8 . . .|********************************************** 60.4%

%LINE 10 . . .| 0.0%

%LINE 11 . . .| 0.0%

%LINE 12 . . .|* 1.3%

%LINE 13 . . .| 0.0%

READ_RANGE\ |

%LINE 5 . . .| 0.0%

%LINE 8 . . .|** 1.8%