Alpha Guide to Upgrading Privileged-Code Applications

Operating System and Version:

- VSI OpenVMS IA-64 Version 8.4-1H1 or higher

- VSI OpenVMS Alpha Version 8.4-2L1 or higher

Preface

This document describes reference information for System Management utilities used with the OpenVMS Alpha operating system.

1. About VSI

VMS Software, Inc. (VSI) is an independent software company licensed by Hewlett Packard Enterprise to develop and support the OpenVMS operating system.

2. Intended Audience

This guide is intended for system programmers who use privileged-mode interfaces in their applications.

3. Document Structure

This manual is organized as follows:

Chapter 1, "Introduction" describes how to use this guide.

Chapter 2, "Upgrading Privileged Software to OpenVMS Alpha Version 7.0" and Chapter 3, "Replacements for Removed Privileged Symbols" describe the infrastructure changes that might affect privileged-code applications and provide guidelines for upgrading them to OpenVMS Alpha Version 7.0.

Chapter 4, "Modifying Device Drivers to Support 64-Bit Addressing", Chapter 5, "Modifying User-Written System Services", and Chapter 6, "Kernel Threads Process Structure" describe the changes that can be made to customer-written system services and device drivers to support 64-bit addresses and kernel threads.

Appendix A, "Data Structure Changes", Appendix B, "I/O Support Routine Changes", and Appendix C, "Kernel Threads Routines and Macros" contain descriptions of I/O routines, I/O data structures, kernel threads routines, and kernel threads macros.

4. Related Documents

OpenVMS Alpha Guide to 64-Bit Addressing and VLM Features

VSI OpenVMS Programming Concepts Manual

VSI OpenVMS Record Management Services Reference Manual

VSI OpenVMS System Services Reference Manual: A–GETUAI and VSI OpenVMS System Services Reference Manual: GETUTC–Z

5. VSI Encourages Your Comments

You may send comments or suggestions regarding this manual or any VSI document by sending electronic mail to the following Internet address: <docinfo@vmssoftware.com>. Users who have VSI OpenVMS support contracts through VSI can contact <support@vmssoftware.com> for help with this product.

6. OpenVMS Documentation

The full VSI OpenVMS documentation set can be found on the VMS Software Documentation webpage at https://docs.vmssoftware.com.

7. Typographical Conventions

| Convention | Meaning |
|---|---|
| … | A horizontal ellipsis in examples indicates one of the following possibilities: |
| ⁝ | A vertical ellipsis indicates the omission of items from a code example or command format; the items are omitted because they are not important to the topic being discussed. |
| ( ) | In command format descriptions, parentheses indicate that you must enclose choices in parentheses if you specify more than one. |
| [ ] | In command format descriptions, brackets indicate optional choices. You can choose one or more items or no items. Do not type the brackets on the command line. However, you must include the brackets in the syntax for OpenVMS directory specifications and for a substring specification in an assignment statement. |
| \| | In command format descriptions, vertical bars separate choices within brackets or braces. Within brackets, the choices are optional; within braces, at least one choice is required. Do not type the vertical bars on the command line. |
| { } | In command format descriptions, braces indicate required choices; you must choose at least one of the items listed. Do not type the braces on the command line. |
| bold text | This typeface represents the name of an argument, an attribute, or a reason. |
| italic text | Italic text indicates important information, complete titles of manuals, or variables. Variables include information that varies in system output (Internal error number), in command lines (/PRODUCER= name), and in command parameters in text (where dd represents the predefined code for the device type). |
| UPPERCASE TEXT | Uppercase text indicates a command, the name of a routine, the name of a file, or the abbreviation for a system privilege. |
| Monospace text | Monospace type indicates code examples and interactive screen displays. In the C programming language, monospace type in text identifies the following elements: keywords, the names of independently compiled external functions and files, syntax summaries, and references to variables or identifiers introduced in an example. |
| - | A hyphen at the end of a command format description, command line, or code line indicates that the command or statement continues on the following line. |
| numbers | All numbers in text are assumed to be decimal unless otherwise noted. Nondecimal radixes (binary, octal, or hexadecimal) are explicitly indicated. |

Chapter 1. Introduction

This manual is divided into two parts. The first discusses changes to privileged code on OpenVMS Alpha to support 64-bit addressing and kernel threads. The second discusses the changes necessary to privileged code and to OpenVMS physical infrastructure to support the OpenVMS operating system on the Intel® Itanium® architecture.

This is not an application porting guide. If you are looking for information on how to port applications that run on OpenVMS Alpha to OpenVMS IA-64, see Porting Applications from VSI OpenVMS Alpha to VSI OpenVMS Industry Standard 64 for Integrity Servers.

1.1. Quick Description of OpenVMS Alpha 64-Bit Virtual Addressing

OpenVMS Alpha Version 7.0 made significant changes to OpenVMS Alpha privileged interfaces and data structures to support 64-bit virtual addresses and kernel threads.

For 64-bit virtual addresses, these changes were necessary infrastructure work to enable processes to grow their virtual address space beyond the existing 1 GB limit of P0 space and the 1 GB limit of P1 space to include P2 space, making a total of 8 TB. Likewise, S2 is the extension of system space.

Support for 64-bit virtual addresses makes more of the 64-bit virtual address space defined by the Alpha architecture available to the OpenVMS Alpha operating system and to application programs. The 64-bit address features allow processes to map and access data beyond the previous limits of 32-bit virtual addresses. Both process-private and system virtual address space now extend to 8 TB.

In addition to the dramatic increase in virtual address space, OpenVMS Alpha 7.0 significantly increases the amount of physical memory that can be used by individual processes.

Many tools and languages supported by OpenVMS Alpha (including the Debugger, run-time library routines, and DEC C) are enhanced to support 64-bit virtual addressing. Input and output operations can be performed directly to and from the 64-bit addressable space by means of RMS services, the $QIO system service, and most of the device drivers supplied with OpenVMS Alpha systems.

Underlying this are new system services that allow an application to allocate and manage the 64-bit virtual address space that is available for process-private use.

For more information about OpenVMS Alpha 64-bit virtual address features, see the OpenVMS Alpha Guide to 64-Bit Addressing and VLM Features.

As a result of these changes, some privileged-code applications might need to make source-code changes to run on OpenVMS Alpha Version 7.0 and later.

This chapter briefly describes OpenVMS Alpha Version 7.0 64-bit virtual address and kernel threads support and suggests how you should use this guide to ensure that your privileged-code application runs successfully on OpenVMS Alpha Version 7.0 and later.

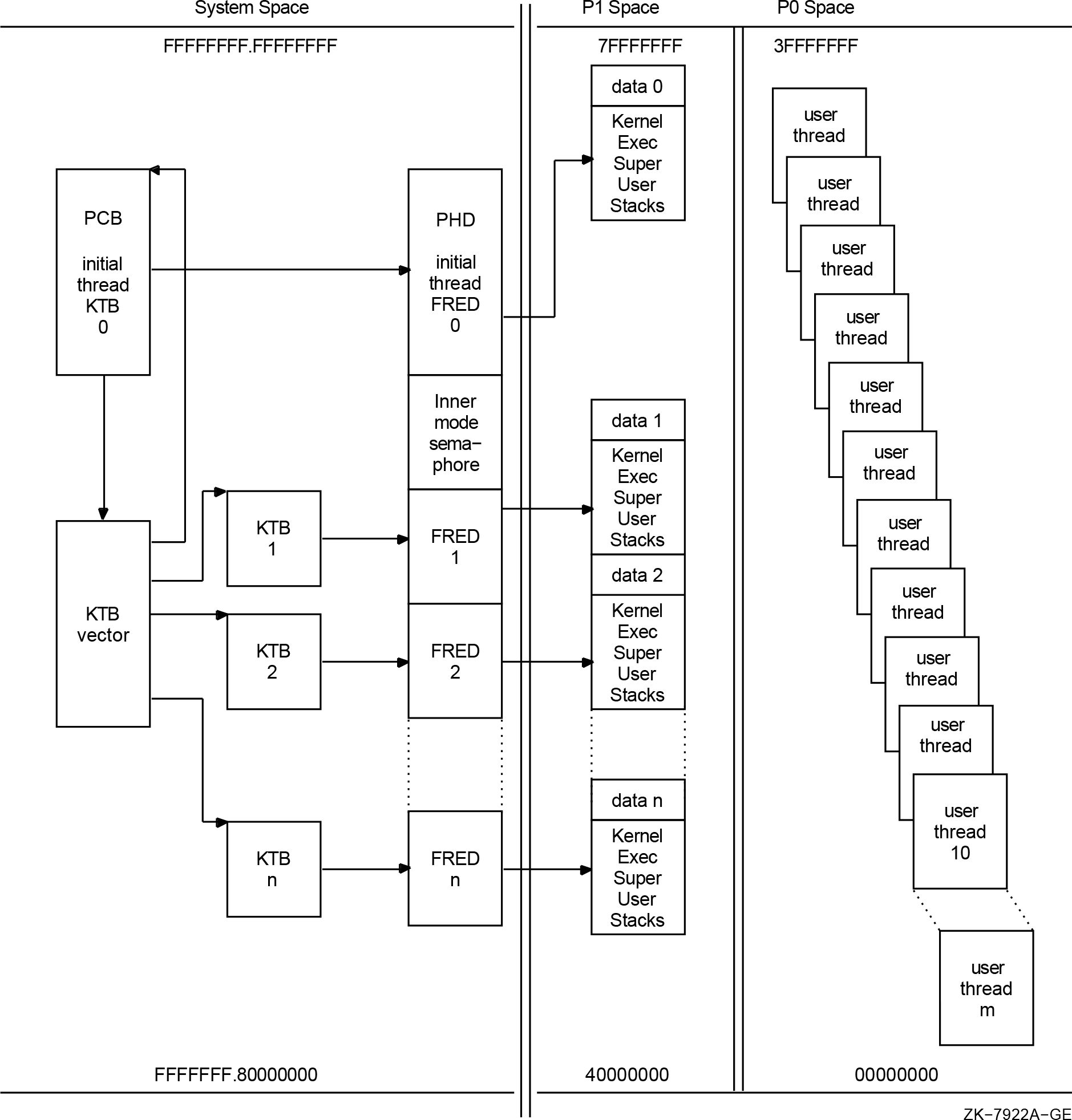

1.2. Quick Description of OpenVMS Alpha Kernel Threads

OpenVMS Alpha Version 7.0 provides kernel threads features, which extend process scheduling capabilities to allow threads of a process to run concurrently on multiple CPUs in a multiprocessor system. The only interface to kernel threads is through the DECthreads package. Existing threaded code that uses either the CMA API or the POSIX threads API should run without change and gain the advantages provided by the kernel threads project.

Kernel threads support causes significant changes to the process structure within OpenVMS (most notably to the process control block (PCB)). Although kernel threads support does not explicitly change any application programming interfaces (APIs) within OpenVMS, it does change the use of the PCB in such a way that some existing privileged code may be impacted.

Kernel threads allow a multithreaded process to execute code flows independently on more than one CPU at a time. This allows a threaded application to make better use of multiple CPUs in an SMP system. DECthreads uses these independent execution contexts as virtual CPUs and schedules application threads on them. OpenVMS then schedules the execution contexts (kernel threads) onto physical CPUs. By providing a callback mechanism from the OpenVMS scheduler to the DECthreads thread scheduler, scheduling latencies inherent in user-mode-only thread managers are greatly reduced. OpenVMS informs DECthreads when a thread has blocked in the kernel. Using this information, DECthreads can then opt to schedule some other ready thread.

For more information about kernel threads, refer to the Bookreader version of the VSI OpenVMS Programming Concepts Manual and Chapter 6, "Kernel Threads Process Structure" in this guide.

1.3. Quick Description of OpenVMS Industry Standard 64

OpenVMS IA-64 8.2 has a 64-bit model and basic system functions that are similar to OpenVMS Alpha. OpenVMS Alpha and OpenVMS IA-64 are produced from a single-source code base. OpenVMS IA-64 has the same look and feel as OpenVMS Alpha. Minor changes to the operating system were made to accommodate the architecture, but the basic structure and capabilities of the operating system are the same.

1.4. How to Use This Guide

Read Part I to learn about the changes that might be required for privileged-code applications to run on OpenVMS Alpha Version 7.0 and how to enhance customer-written system services and device drivers with OpenVMS Version 7.0 features.

Refer to Part II for information about changes that might be required for privileged-code applications to run on OpenVMS IA-64. In most cases, you can change your code so that it is common code with OpenVMS Alpha.

Refer to the Appendixes for more information about some of the data structures and routines mentioned throughout this guide.

Chapter 2. Upgrading Privileged Software to OpenVMS Alpha Version 7.0

The new features provided in OpenVMS Alpha Version 7.0 have required corresponding changes in internal system interfaces and data structures. These internal changes might require changes in some privileged software.

This chapter contains recommendations for upgrading privileged-code applications to ensure that they run on OpenVMS Alpha Version 7.0. Once your application is running on OpenVMS Alpha Version 7.0, you can enhance it as described in Part II.

2.1. Recommendations for Upgrading Privileged-Code Applications

Recompile and relink your application to identify almost all of the places where source changes will be necessary. Some changes can be identified by inspection.

If you encounter compile-time or link-time warnings or errors, you must make the source-code changes required to resolve them.

See Section 2.1.1, ''Summary of Infrastructure Changes'' for descriptions of the infrastructure changes that can affect your applications and more information about how to handle them.

Refer to Chapter 3, "Replacements for Removed Privileged Symbols" for information about the data structure fields, routines, macros, and system data cells obviated by OpenVMS Alpha Version 7.0 that might affect privileged-code applications.

Once your application recompiles and relinks without errors, you can enhance it to take advantage of the OpenVMS Alpha Version 7.0 features described in Chapter 2, "Upgrading Privileged Software to OpenVMS Alpha Version 7.0" and Chapter 3, "Replacements for Removed Privileged Symbols".

2.1.1. Summary of Infrastructure Changes

Page tables have moved from the balance set slots to page table space. (Compile and link the application.)

The global page table has moved from S0/S1 space to S2 space. (Compile and link the application.)

The PFN database has moved from S0/S1 space to S2 space. (Compile C applications. Inspect MACRO applications for changes that might not cause warning messages. Link all applications.)

PFN database entry format has changed. (Compile and link the application.)

Routines MMG$IOLOCK and MMG$UNLOCK are obsolete and are replaced by MMG_STD$IOLOCK_BUF and DIOBM. (Compile and link the application.)

A buffer locked for direct I/O is now described by SVAPTE, BOFF, BCNT, and a DIOBM.

Be aware of code that clears IRP$L_SVAPTE to keep a buffer locked even after the IRP is reused or deleted. (Inspect the code for changes.)

A single IRPE can only be used to lock down a single region of pages. (Compile and link the application.)

Some assumptions about I/O structure field adjacencies may no longer be true; for example, IRP$L_QIO_P1 and IRP$L_QIO_P2 are now more than 4 bytes apart. (Compile, link, inspect the code.)

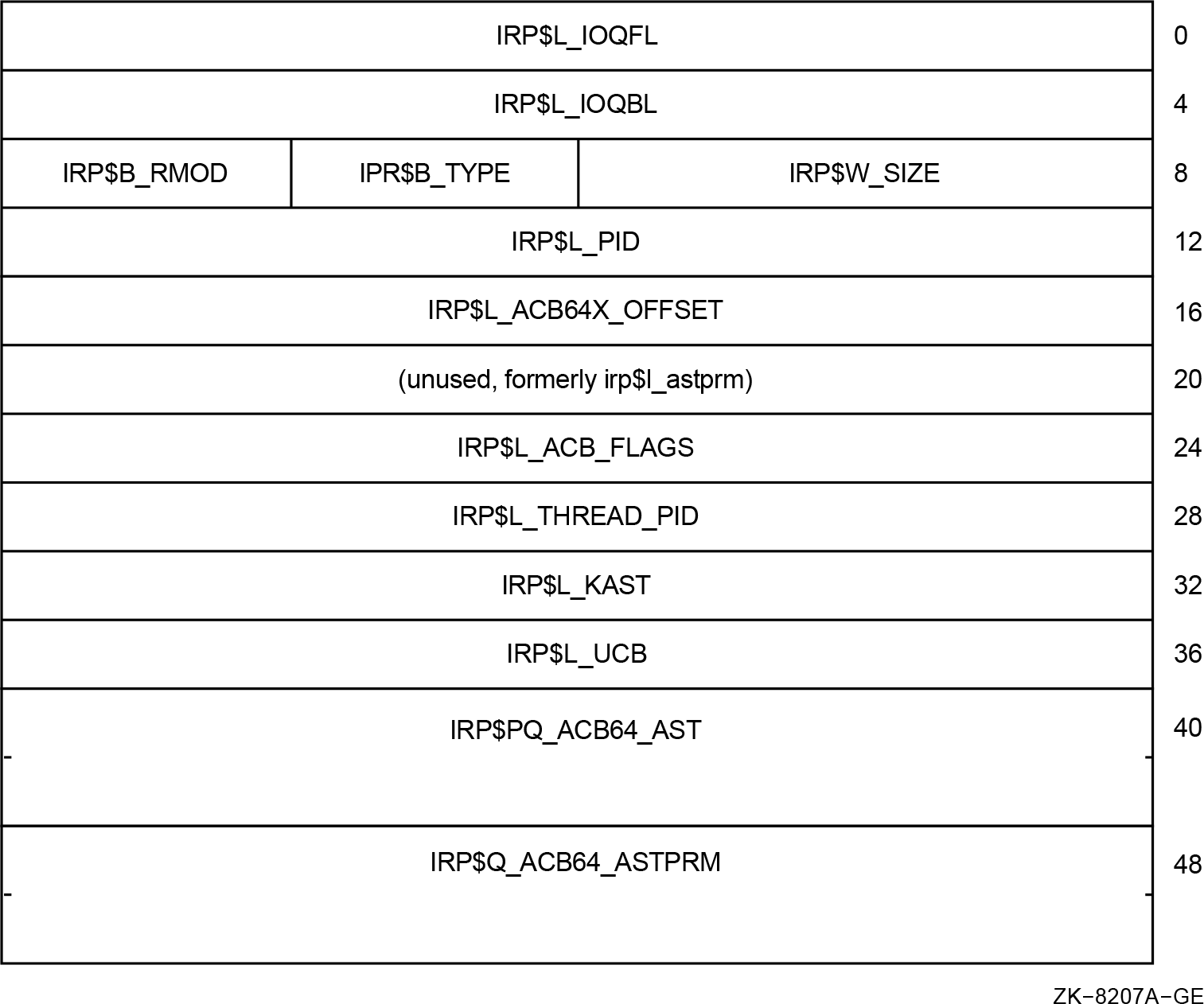

The IRP$L_AST, IRP$L_ASTPRM, and IRP$L_IOSB cells have been removed. (Compile and link the application.)

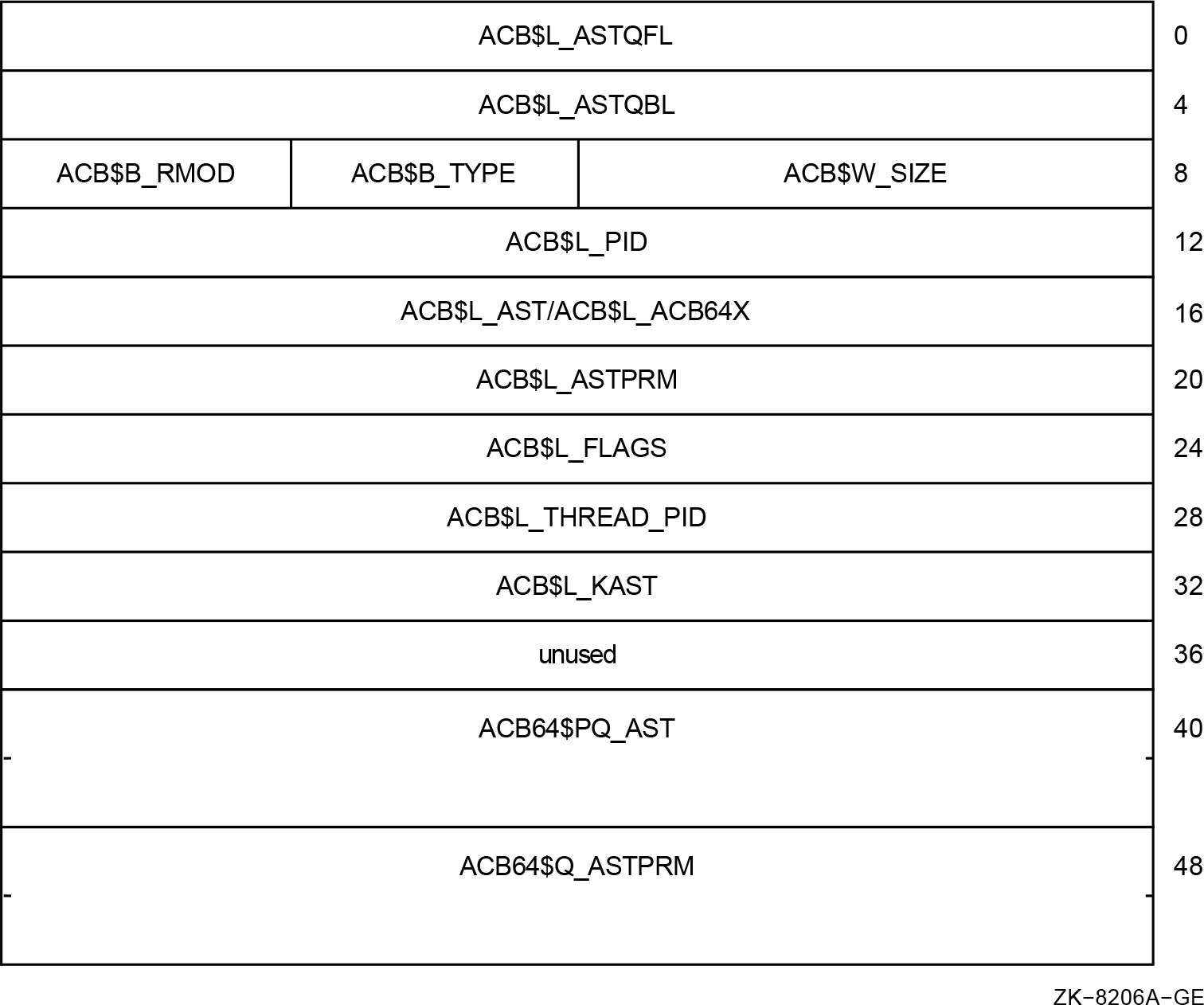

There are now two types of ACBs; an IRP is always in ACB64 format. (Compile, link, inspect the code.)

MMG$SVAPTECHK can no longer be used for P0/P1 addresses. In addition, P2/S2 addresses are not allowed; only S0/S1 addresses are supported. (Inspect the code.)

Two types of buffer objects; buffer objects can be mapped into S2 space. (Inspect the code.)
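The field-adjacency item in the summary above is the kind of change that may compile cleanly and only show up on inspection. The following is a minimal sketch, assuming a hypothetical stand-in structure (`demo_irp` and its members are illustrative and are not the real IRP layout), of taking the distance between two cells from the compiler rather than hard-coding 4 bytes:

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for an I/O packet; NOT the real IRP layout. */
struct demo_irp {
    int64_t qio_p1;   /* first parameter cell, widened to 64 bits */
    int64_t qio_p2;   /* second parameter cell */
};

/* Distance in bytes between the two cells, as laid out by the compiler. */
static size_t demo_param_stride(void)
{
    return offsetof(struct demo_irp, qio_p2) -
           offsetof(struct demo_irp, qio_p1);
}

/* Robust access: name the field instead of stepping from its neighbor. */
static int64_t demo_get_p2(const struct demo_irp *irp)
{
    return irp->qio_p2;
}
```

Code that reaches the second cell by adding a constant to the address of the first is the pattern to look for when inspecting MACRO or C sources.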

Important

All device drivers, VCI clients, and inner-mode components must be recompiled and relinked to run on OpenVMS Alpha Version 7.0.

2.1.2. Changes Not Identified by Warning Messages

Pointers to a PFN database entry are now 64 bits wide. If you save or restore them, you must preserve the full 64 bits of these pointers.

The MMG[_STD]$SVAPTECHK routine can handle only S0/S1 addresses. If you pass it an address in any other space, such as P0, it will declare a bugcheck.

The various SCH$ routines that put a process (now kernel thread) into a wait state now require the KTB instead of the PCB. (This is not a 64-bit change, but it could affect OpenVMS Alpha Version 7.0 device drivers.)
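The first item above, preserving all 64 bits of a pointer, can be illustrated with a short sketch. The function names and the example address are assumptions made for illustration only; the point is that a save and restore through a 32-bit cell silently changes an address that lies above the 32-bit range.

```c
#include <stdint.h>

/* Wrong: squeezes a 64-bit address through a 32-bit cell. */
static uint64_t demo_save_restore_32(uint64_t entry_va)
{
    uint32_t saved = (uint32_t)(entry_va & 0xFFFFFFFFu); /* high half lost */

    /* Restore with sign extension, as 32-bit system addresses were. */
    if (saved & 0x80000000u)
        return 0xFFFFFFFF00000000ull | saved;
    return saved;
}

/* Right: the cell that holds the pointer is 64 bits wide. */
static uint64_t demo_save_restore_64(uint64_t entry_va)
{
    uint64_t saved = entry_va;
    return saved;
}
```

For an arbitrary address such as 0xFFFFFFFE00002000, the 32-bit version returns 0x2000, which no longer refers to the same location, and no compiler warning is guaranteed.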

2.2. I/O Changes

This section describes OpenVMS Alpha Version 7.0 changes to the I/O subsystem that might require source changes to device drivers.

2.2.1. Impact of IRPE Data Structure Changes

As described in Section A.9, ''I/O Request Packet Extension (IRPE)'', the I/O Request Packet Extension (IRPE) structure now manages a single additional locked-down buffer instead of two. The general approach to deal with this change is to use a chain of additional IRPE structures.

Before OpenVMS Alpha Version 7.0, a buffer locked for direct I/O was completely described by the irp$l_svapte, irp$l_boff, and irp$l_bcnt values. For example, it is not uncommon for an IRPE to be used in this fashion:

1. The second buffer that will be eventually associated with the IRPE is locked first by calling EXE_STD$READLOCK with the IRP.

2. The irp$l_svapte, irp$l_boff, and irp$l_bcnt values are copied from the IRP into the IRPE. The irp$l_svapte cell is then cleared. The locked region is now completely described by the IRPE.

3. The first buffer is locked by calling EXE_STD$READLOCK with the IRP again.

4. A driver-specific error callback routine is required for the EXE_STD$READLOCK calls. This error routine calls MMG_STD$UNLOCK to unlock any region associated with the IRP and deallocates the IRPE.

This approach no longer works correctly. As described in Appendix A, "Data Structure Changes", the DIOBM structure that is embedded in the IRP will be needed as well. Moreover, it may not be sufficient to simply copy the DIOBM from the IRP to the IRPE. In particular, the irp$l_svapte may need to be modified if the DIOBM is moved.

Instead, lock the second buffer using the IRPE itself, as in the following fragment. The numbered comments correspond to the notes that follow the code.

    irpe->irpe$b_type = DYN$C_IRPE;                         /* 1 */
    irpe->irpe$l_driver_p0 = (int) irp;                     /* 2 */

    status = exe_std$readlock( irp, pcb, ucb, ccb,          /* 3 */
                               buf1, buf1_len, lock_err_rtn );
    if( !$VMS_STATUS_SUCCESS(status) ) return status;       /* 4 */

    irpe->irpe$b_rmod = irp->irp$b_rmod;                    /* 5 */

    status = exe_std$readlock( (IRP *)irpe, pcb, ucb, ccb,  /* 6 */
                               buf2, buf2_len, lock_err_rtn );
    if( !$VMS_STATUS_SUCCESS(status) ) return status;

1. The IRPE needs to be explicitly identified as an IRPE because the error callback routine depends on being able to distinguish an IRP from an IRPE.

2. The IRPE needs to contain a pointer to the original IRP for this I/O request for potential use by the error callback routine. Here, a driver-specific cell in the IRPE is used.

3. The first buffer is locked using the IRP.

4. If EXE_STD$READLOCK cannot lock the entire buffer into memory, the error callback routine is invoked (with the IRP in this case), and the fragment returns the failure status to its caller.

5. The caller's access mode must be copied into the IRPE in preparation for locking the second buffer using the IRPE.

6. The second buffer is locked using the IRPE. If this fails, the error callback routine LOCK_ERR_RTN is called with the IRPE.

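The error callback routine can be written as follows. The numbered comments correspond to the notes that follow the code.

    void lock_err_rtn (IRP *const lock_irp,                 /* 1 */
                       PCB *const pcb, UCB *const ucb, CCB *const ccb,
                       const int errsts,
                       IRP **real_irp_p )                   /* 2 */
    {
        IRP *irp;

        if( lock_irp->irp$b_type == DYN$C_IRPE )
            irp = (IRP *) ((IRPE *)lock_irp)->irpe$l_driver_p0;   /* 3 */
        else
            irp = lock_irp;

        exe_std$lock_err_cleanup (irp);                     /* 4 */

        *real_irp_p = irp;                                  /* 5 */

        return;
    }

1. The lock_irp parameter can be either an IRP or an IRPE, depending on which structure was passed to EXE_STD$READLOCK.

2. Before returning from this error callback routine, you must provide the original IRP via the real_irp_p parameter.

3. If this routine has been passed an IRPE, a pointer to the original IRP is obtained from the irpe$l_driver_p0 cell.

4. The new EXE_STD$LOCK_ERR_CLEANUP routine does all the needed unlocking and deallocation of IRPEs.

5. Provide the address of the original IRP to the caller.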

2.2.2. Impact of MMG_STD$IOLOCK, MMG_STD$UNLOCK Changes

The interface changes to the MMG_STD$IOLOCK and MMG_STD$UNLOCK routines are described in Appendix B, "I/O Support Routine Changes". The general approach to these changes is to use the corresponding replacement routines and the new DIOBM structure.

2.2.2.1. Direct I/O Functions

OpenVMS device drivers that perform data transfers using direct I/O functions do so by locking the buffer into memory while still in process context, that is, in a driver FDT routine. The PTE address of the first page that maps the buffer is obtained and the byte offset within the page to the start of the buffer is computed. These values are saved in the IRP (irp$l_svapte and irp$l_boff). The rest of the driver then uses values in the irp$l_svapte and irp$l_boff cells and the byte count in irp$l_bcnt in order to perform the transfer. Eventually, when the transfer has completed and the request returns to process context for I/O post-processing, the buffer is unlocked using the irp$l_svapte value and not the original process buffer address.

To support 64-bit addresses on a direct I/O function, one only needs to ensure the proper handling of the buffer address within the FDT routine.

Almost all device drivers that perform data transfers via a direct I/O function use OpenVMS-supplied FDT support routines to lock the buffer into memory. Because these routines obtain the buffer address either indirectly from the IRP or directly from a parameter that is passed by value, the interfaces for these routines can easily be enhanced to support 64-bit wide addresses.

Moving the process page tables into page table space affects the use of the 32-bit irp$l_svapte cell by device drivers prior to OpenVMS Alpha Version 7.0. In general, there are two problems:

- It takes a full 64 bits to address a process PTE in page table space.

- The 64-bit page table space address for a process PTE is only valid when in the context of that process. This is also known as the "cross-process PTE problem."

In most cases, both of these PTE access problems are solved by copying the PTEs that map the

buffer into nonpaged pool and setting irp$l_svapte to point to the

copies. This copy is done immediately after the buffer has been successfully

locked. A copy of the PTE values is acceptable because device drivers only read

the PTE values and are not allowed to modify them. These PTE copies are held in

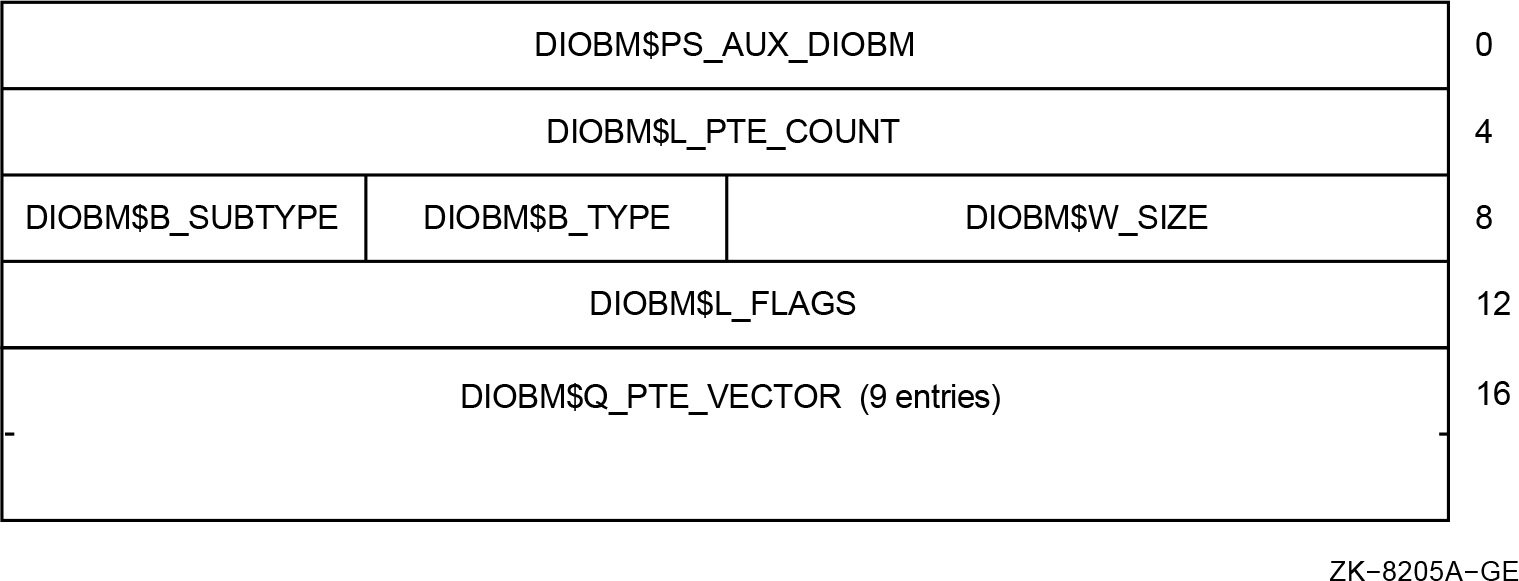

a new nonpaged pool data structure, the Direct I/O Buffer Map

(DIOBM) structure. A standard DIOBM structure (also known

as a fixed-size primary DIOBM) contains enough room for a vector of 9

(DIOBM$K_PTECNT_FIX) PTE values. This is sufficient for a buffer size

up to 64K bytes on a system with8 KB pages. It is expected that most I/O

requests are handled by this mechanism and that the overhead to copy a small

number of PTEs is acceptable, especially given that these PTEs have been

recently accessed to lock the pages.
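The figure of 9 PTEs for a 64 KB buffer follows from page arithmetic: a 64 KB buffer that does not start on a page boundary touches 9 pages of 8 KB. The following is a minimal sketch of that calculation. The `demo_` names and constants are illustrative assumptions that mirror the values given in the text; they are not OpenVMS definitions.

```c
#include <stdint.h>

#define DEMO_PAGE_SIZE   8192u  /* 8 KB pages, as in the text */
#define DEMO_PTECNT_FIX  9u     /* mirrors the DIOBM$K_PTECNT_FIX value */

/* Number of pages (and therefore PTEs) touched by a buffer that starts
   boff bytes into its first page and is bcnt bytes long. */
static uint32_t demo_pte_count(uint32_t boff, uint32_t bcnt)
{
    if (bcnt == 0)
        return 0;
    return (uint32_t)(((uint64_t)boff + bcnt + DEMO_PAGE_SIZE - 1)
                      / DEMO_PAGE_SIZE);
}

/* Nonzero if the PTE copies fit in a fixed-size DIOBM. */
static int demo_fits_fixed_diobm(uint32_t boff, uint32_t bcnt)
{
    return demo_pte_count(boff, bcnt) <= DEMO_PTECNT_FIX;
}
```

A page-aligned 64 KB buffer needs 8 PTEs, and the same buffer starting at any nonzero byte offset needs 9, so both cases fit the fixed-size vector.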

The standard IRP contains an embedded fixed-size DIOBM structure. When the PTEs that map a buffer fit into the embedded DIOBM, the irp$l_svapte cell is set to point to the start of the PTE copy vector within the embedded DIOBM structure in that IRP.

If the buffer requires more than 9 PTEs, then a separate, variably sized "secondary" DIOBM structure is allocated to hold the PTE copies. If such a secondary DIOBM structure is needed, it is pointed to by the original, or "primary" DIOBM structure. The secondary DIOBM structure is deallocated during I/O post-processing when the buffer pages are unlocked. In this case, the irp$l_svapte cell is set to point into the PTE vector in the secondary DIOBM structure. The secondary DIOBM requires only 8 bytes of nonpaged pool for each page in the buffer. The allocation of the secondary DIOBM structure is not charged against the process BYTLM quota, but it is controlled by the process direct I/O limit (DIOLM). This is the same approach used for other internal data structures that are required to support the I/O, including the kernel process block, kernel process stack, and the IRP itself.

However, as the size of the buffer increases, the run-time overhead to copy the PTEs into the DIOBM becomes noticeable. At some point it becomes less expensive to create a temporary window in S0/S1 space to the process PTEs that map the buffer. The PTE window method has a fixed cost, but the cost is relatively high because it requires PTE allocation and TB invalidates. For this reason, the PTE window method is not used for moderately sized buffers.

The transition point from the PTE copy method with a secondary DIOBM to the PTE window method is determined by a new system data cell, ioc$gl_diobm_ptecnt_max, which contains the maximum desirable PTE count for a secondary DIOBM. The PTE window method will be used if the buffer is mapped by more than ioc$gl_diobm_ptecnt_max PTEs.
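The choice among the three methods can be summarized as a comparison of the PTE count against two thresholds. The sketch below is an illustration only: the enum, the function names, and the `ptecnt_max` parameter (standing in for the value of ioc$gl_diobm_ptecnt_max) are assumptions, and the sketch does not model a fallback caused by a failed pool allocation.

```c
#include <stdint.h>

#define DEMO_PTECNT_FIX 9u   /* capacity of the fixed-size DIOBM in the text */

enum demo_pte_method {
    DEMO_EMBEDDED_DIOBM,   /* PTE copies fit in the DIOBM inside the IRP */
    DEMO_SECONDARY_DIOBM,  /* PTE copies go to a separately allocated DIOBM */
    DEMO_PTE_WINDOW        /* temporary S0/S1 window onto the process PTEs */
};

/* ptecnt_max stands in for the value of ioc$gl_diobm_ptecnt_max. */
static enum demo_pte_method demo_choose_method(uint32_t pte_count,
                                               uint32_t ptecnt_max)
{
    if (pte_count <= DEMO_PTECNT_FIX)
        return DEMO_EMBEDDED_DIOBM;
    if (pte_count <= ptecnt_max)
        return DEMO_SECONDARY_DIOBM;
    return DEMO_PTE_WINDOW;   /* more than ptecnt_max PTEs */
}

/* Pool needed for the PTE copies alone: 8 bytes per page in the buffer. */
static uint64_t demo_secondary_pte_bytes(uint32_t pte_count)
{
    return 8ull * pte_count;
}
```

With a hypothetical threshold of 100, a buffer mapped by 10 PTEs would use a secondary DIOBM holding 80 bytes of PTE copies, and one mapped by 101 PTEs would use the window.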

When a PTE window is used, irp$l_svapte is set to the S0/S1 virtual address in the allocated PTE window that points to the first PTE that maps the buffer. This S0/S1 address is computed by taking the S0/S1 address that is mapped by the first PTE allocated for the window and adding the byte offset within page of the first buffer PTE address in page table space. A PTE window created this way is removed during I/O post-processing.
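The address computation described above is a page-base-plus-offset calculation. A minimal sketch, assuming 8 KB pages and hypothetical parameter names (`window_va` is the page-aligned S0/S1 address mapped by the first window PTE, in its 32-bit form; `first_pte_va` is the 64-bit page table space address of the first buffer PTE):

```c
#include <stdint.h>

#define DEMO_PAGE_SIZE 8192u   /* 8 KB pages */

/* 32-bit value for the svapte cell when a PTE window is in use:
   window page base plus the byte-within-page of the first buffer PTE. */
static uint32_t demo_window_svapte(uint32_t window_va, uint64_t first_pte_va)
{
    uint32_t byte_offset = (uint32_t)(first_pte_va & (DEMO_PAGE_SIZE - 1));
    return window_va + byte_offset;
}
```

Only the low 13 bits of the 64-bit PTE address survive into the result, which is why the value fits a 32-bit cell and stays valid outside the context of the requesting process.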

The PTE window method is also used if the attempt to allocate the required secondary DIOBM structure fails due to insufficient contiguous nonpaged pool. With an 8 KB page size, the PTE window requires a set of contiguous system page table entries equal to the number of 8 MB regions in the buffer plus 1. Failure to create a PTE window as a result of insufficient SPTEs is unusual. However, in the unlikely event of such a failure, if the process has not disabled resource wait mode, the $QIO request is backed out and the requesting process is put into a resource wait state for nonpaged pool (RSN$_NPDYNMEM). When the process is resumed, the I/O request is retried. If the process has disabled resource wait mode, a failure to allocate the PTE window results in the failure of the I/O request.

When the PTE window method is used, the level-3 process page table pages that contain the PTEs that map the user buffer are locked into memory as well. However, these level-3 page table pages are not locked when the PTEs are copied into nonpaged pool or when the SPT window is used.

irp$l_svapte by one of the

previously described three methods. The OpenVMS-supplied FDT support routines

EXE_STD$MODIFYLOCK, EXE_STD$READLOCK, and EXE_STD$WRITELOCK use the

IOC_STD$FILL_DIOBM routine in the following way:- The buffer is locked into memory by calling the new MMG_STD$IOLOCK_BUF routine. This routine returns a 64-bit pointer to the PTEs and replaces the obsolete MMG_STD$IOLOCK routine.

status = mmg_std$iolock_buf (buf_ptr, bufsiz, is_read, pcb, &irp->irp$pq_vapte, &irp->irp$ps_fdt_context->fdt_context$q_qio_r1_value);For more information about this routine, see Section B.17, ''MMG_STD$IOLOCK, MMG$IOLOCK, MMG_STD$IOLOCK_BUF''.

- A value for the 32-bit

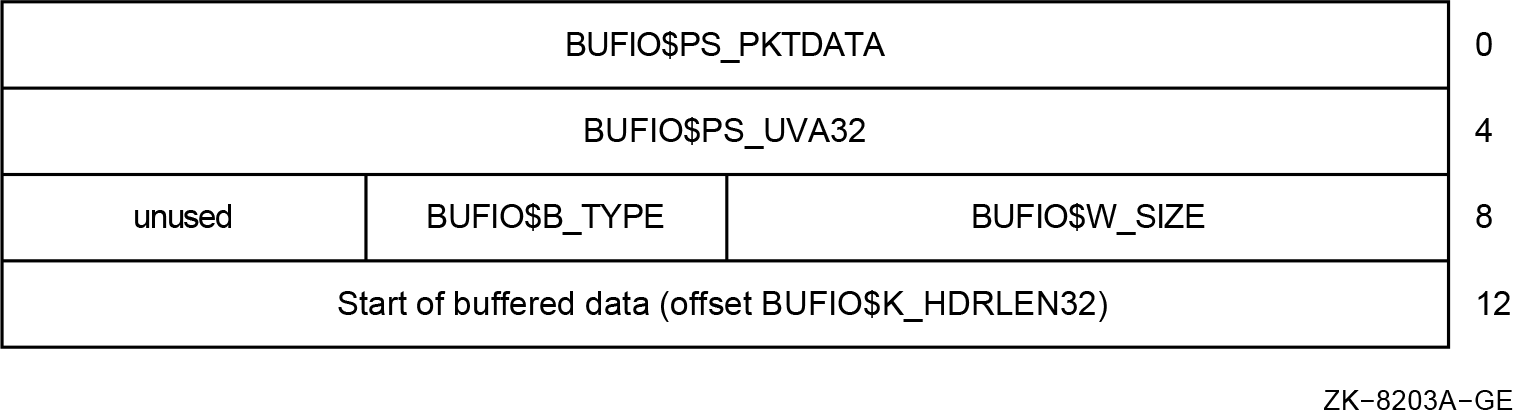

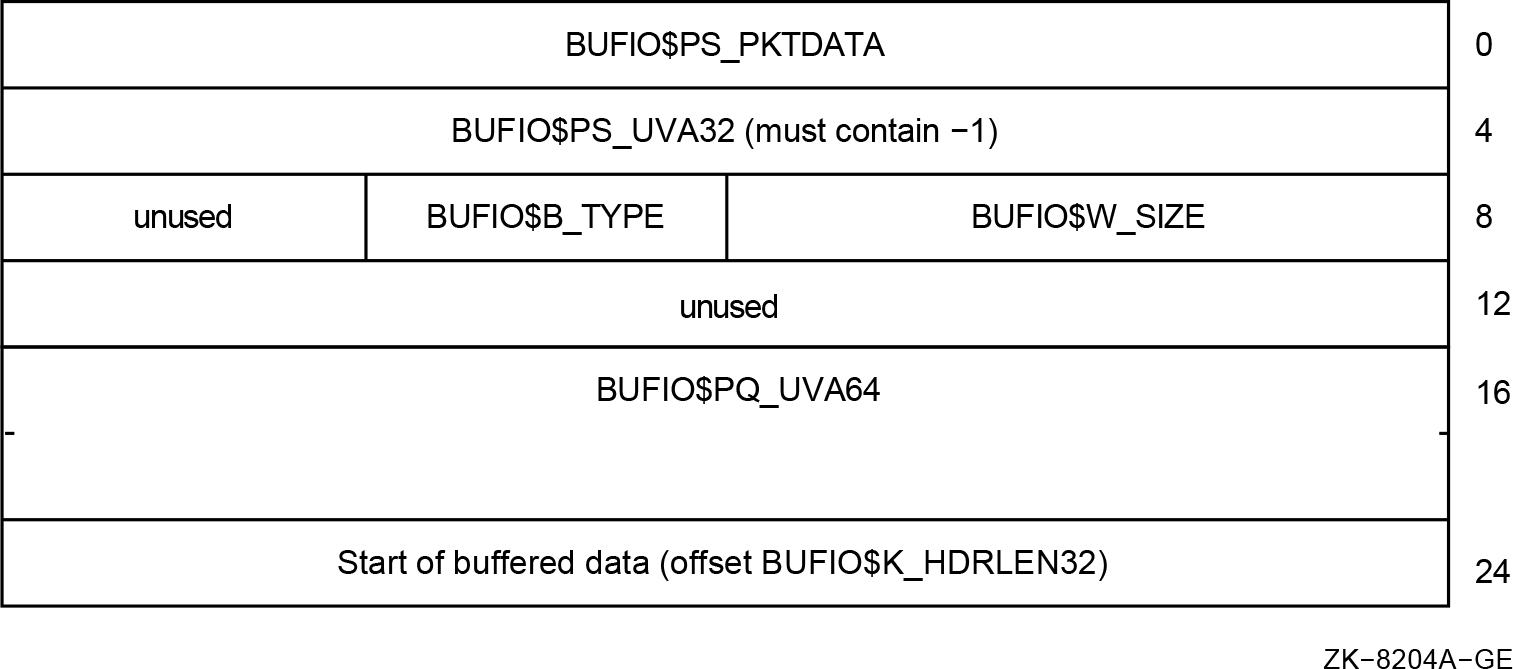

irp$l_svaptecell is derived by calling the new IOC_STD$FILL_DIOBM routine with a pointer to the embedded DIOBM in the IRP, the 64-bit pointer to the PTEs that was returned by MMG_STD$IOLOCK_BUF, and the address of theirp$l_svaptecell.status = ioc_std$fill_diobm (&irp->irp$r_diobm, irp->irp$pq_vapte, pte_count, DIOBM$M_NORESWAIT, &irp->irp$l_svapte);The DIOBM structure is fully described in Section A.6, ''Direct I/O Buffer Map (DIOBM)'' and this routine is described in Section B.10, ''IOC_STD$FILL_DIOBM''.

Device drivers that call MMG_STD$IOLOCK directly will need to examine their use of the returned values and might need to call the IOC_STD$FILL_DIOBM routine.

2.2.3. Impact of MMG_STD$SVAPTECHK Changes

Prior to OpenVMS Alpha Version 7.0, the MMG_STD$SVAPTECHK and MMG$SVAPTECHK routines computed a 32-bit svapte for either a process or system space address. As of OpenVMS Alpha Version 7.0, these routines are restricted to an S0/S1 system space address and no longer accept an address in P0/P1 space. The MMG_STD$SVAPTECHK and MMG$SVAPTECHK routines declare a bugcheck for an input address in P0/P1 space. These routines return a 32-bit system virtual address through the SPT window for an input address in S0/S1 space.
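Because an address outside S0/S1 space now leads to a bugcheck, privileged code may want to confirm the address range before calling these routines. The sketch below is an illustration only, with a hypothetical helper name. It assumes the conventional 64-bit form of S0/S1 space, that is, sign-extended 32-bit system addresses from FFFFFFFF.80000000 through FFFFFFFF.FFFFFFFF.

```c
#include <stdint.h>

/* Nonzero if va lies in S0/S1 space (sign-extended 32-bit system space). */
static int demo_is_s0s1(uint64_t va)
{
    return va >= 0xFFFFFFFF80000000ull;
}
```

A P0 address such as 0x10000 fails this test, as does an address in S2 space below FFFFFFFF.80000000, so neither should be passed to the routines described above.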

The MMG_STD$SVAPTECHK and MMG$SVAPTECHK routines are used by a number of OpenVMS Alpha device drivers and privileged components. In most instances, no source changes are required because the input address is in nonpaged pool.

The 64-bit process-private virtual address of the level 3 PTE that maps a P0/P1 virtual address can be obtained using the new PTE_VA macro. Unfortunately, this macro is not a general solution because it does not address the cross-process PTE access problem. Therefore, the necessary source changes depend on the manner in which the svapte output from MMG_STD$SVAPTECHK is used.

The INIT_CRAM routine uses the MMG$SVAPTECHK routine in its computation of the physical address of the hardware I/O mailbox structure within a CRAM that is in P0/P1 space. If you need to obtain a physical address, use the new IOC_STD$VA_TO_PA routine.

If you call MMG$SVAPTECHK and IOC$SVAPTE_TO_PA, use the new IOC_STD$VA_TO_PA routine instead.

The PTE address in dcb$l_svapte must be expressible using 32 bits and must be valid regardless of process context. Fortunately, the caller's address is within the buffer that was locked down earlier in the CONV_TO_DIO routine via a call to EXE_STD$WRITELOCK, and the EXE_STD$WRITELOCK routine derived a value for the irp$l_svapte cell using the DIOBM in the IRP. Therefore, instead of calling the MMG$SVAPTECHK routine, the BUILD_DCB routine has been changed to call the new routine EXE_STD$SVAPTE_IN_BUF, which computes a value for the dcb$l_svapte cell based on the caller's address, the original buffer address in the irp$l_qio_p1 cell, and the address in the irp$l_svapte cell.

2.2.4. Impact of PFN Database Entry Changes

There are changes to the use of the PFN database entry cells containing the page reference count and back link pointer.

For more information, see Section 2.3.6, ''Format of PFN Database Entry''.

2.2.5. Impact of IRP Changes

All source code references to the irp$l_ast, irp$l_astprm, and irp$l_iosb cells have been changed. These IRP cells were removed and replaced by new cells.

For more information, see Appendix A, "Data Structure Changes".

2.3. General Memory Management Infrastructure Changes

This section describes OpenVMS Alpha Version 7.0 changes to the memory management subsystem that might affect privileged-code applications.

For complete information about OpenVMS Alpha support for 64-bit addresses, see the OpenVMS Alpha Guide to 64-Bit Addressing and VLM Features.

2.3.1. Location of Process Page Tables

The process page tables no longer reside in the balance slot. Each process references its own page tables within page table space using 64-bit pointers.

The following macros locate or map PTEs:

- PTE_VA: Returns the level 3 PTE address of the input VA.

- L2PTE_VA: Returns the level 2 PTE address of the input VA.

- L1PTE_VA: Returns the level 1 PTE address of the input VA.

- MAP_PTE: Returns the address of the PTE through a system space window.

- UNMAP_PTE: Clears the mapping of the PTE through the system space window.

Note that use of MAP_PTE and UNMAP_PTE requires the caller to hold the MMG spinlock across the use of these macros and that UNMAP_PTE must be invoked before another MAP_PTE can be issued.
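To give a sense of what a macro such as PTE_VA has to compute, the sketch below splits a virtual address into its page number and derives the byte offset of the corresponding level 3 PTE from the base of page table space. It is not the macro's definition. It assumes 8 KB pages, 8-byte PTEs, and a 43-bit virtual address, and every name in it is hypothetical.

```c
#include <stdint.h>

#define DEMO_PAGE_SHIFT   13u   /* 8 KB pages */
#define DEMO_VA_BITS      43u   /* assumed virtual address width */
#define DEMO_PTE_SIZE     8u    /* bytes per PTE */

/* Virtual page number: address bits <42:13>. */
static uint64_t demo_vpn(uint64_t va)
{
    uint64_t va_mask = (1ull << DEMO_VA_BITS) - 1;
    return (va & va_mask) >> DEMO_PAGE_SHIFT;
}

/* Byte offset of the level 3 PTE from the base of page table space. */
static uint64_t demo_l3pte_offset(uint64_t va)
{
    return demo_vpn(va) * DEMO_PTE_SIZE;
}

/* Index of that PTE within its page table page (1024 PTEs per page). */
static uint32_t demo_l3_index(uint64_t va)
{
    return (uint32_t)(demo_vpn(va) & 0x3FFu);
}
```

Adding the offset to the base of page table space yields a full 64-bit address, which is why these PTE addresses cannot be held in 32-bit cells.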

2.3.2. Interpretation of Global and Process Section Table Index

As of OpenVMS Alpha Version 7.0, the global and process section table indexes, stored primarily in the PHD and PTEs, have different meanings. The section table index is now a positive index into the array of section table entries found in the process section table or in the global section table. The first section table index in both tables is now 1.

To locate an entry in the process section table from its section table index:

1. Add the PHD address to the value in PHD$L_PST_BASE_OFFSET.

2. Multiply the section table index by SEC$C_LENGTH.

3. Subtract the result of Step 2 from the result of Step 1.
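The three steps above amount to one line of address arithmetic. The following sketch uses hypothetical parameter names, and the caller supplies the values that the text takes from PHD$L_PST_BASE_OFFSET and SEC$C_LENGTH; the numbers in the usage note are arbitrary and are not real OpenVMS values.

```c
#include <stdint.h>

/* Address of a section table entry, following the three steps in the text. */
static uint64_t demo_pst_entry_va(uint64_t phd_va,
                                  uint32_t pst_base_offset, /* PHD$L_PST_BASE_OFFSET value */
                                  uint32_t sec_index,       /* positive index, first is 1 */
                                  uint32_t sec_length)      /* SEC$C_LENGTH value */
{
    uint64_t table_base  = phd_va + pst_base_offset;           /* step 1 */
    uint64_t index_bytes = (uint64_t)sec_index * sec_length;   /* step 2 */
    return table_base - index_bytes;                           /* step 3 */
}
```

For example, with an arbitrary PHD address of 0x10000, a base offset of 0x4000, and an assumed entry length of 40 bytes, index 1 yields 0x13FD8 and index 2 yields an address 40 bytes lower.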

2.3.3. Location of Process and System Working Set Lists

The base address of the working set list can no longer be found within the process PHD or the system PHD. To obtain the address of the process working set list, use the 64-bit data cell CTL$GQ_WSL. To obtain the address of the system working set list, use the 64-bit data cell MMG$GQ_SYSWSL.

Note that pointers to working set list entries must be 64-bit addresses in order to be compatible with future versions of OpenVMS Alpha after Version 7.0.

2.3.4. Size of a Working Set List Entry

Each working set list entry (WSLE) residing in the process or the system working set list is now 64 bits in size. Thus, a working set list index must be interpreted as an index into an array of quadwords instead of an array of longwords, and working set list entries must be interpreted as 64 bits in size. Note that the layout of the low bits in the WSLE is unchanged.
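The practical effect on index arithmetic is a change of scale factor from 4 to 8. A minimal sketch with hypothetical function names, where the caller supplies the working set list base address:

```c
#include <stdint.h>

/* Address of a WSLE when entries are quadwords (Version 7.0 and later). */
static uint64_t demo_wsle_va(uint64_t wsl_base_va, uint32_t wsl_index)
{
    return wsl_base_va + (uint64_t)wsl_index * sizeof(uint64_t);
}

/* The older longword scaling, shown only for contrast. */
static uint64_t demo_wsle_va_longword(uint64_t wsl_base_va, uint32_t wsl_index)
{
    return wsl_base_va + (uint64_t)wsl_index * sizeof(uint32_t);
}
```

Code that still scales the index by 4 lands in the middle of the wrong entry for every index other than 0.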

2.3.5. Location of Page Frame Number (PFN) Database

Due to the support for larger physical memory systems in OpenVMS Alpha Version 7.0, the PFN database has been moved to S2 space, which can only be accessed with 64-bit pointers. Privileged routine interfaces within OpenVMS Alpha that pass PFN database entry addresses by reference have been renamed to force compile-time or link-time errors.

Privileged code that references the PFN database must be inspected and possibly modified to ensure that 64-bit pointers are used.

2.3.6. Format of PFN Database Entry

The offset PFN$L_REFCNT in the PFN database entry has been replaced with a different-sized offset that is packed together with other fields in the PFN database.

Use the following macros to change the reference count:

- INCREF: Increments the PFN's reference count.

- DECREF: Decrements the PFN's reference count.

As of OpenVMS Alpha Version 7.0, the offset PFN$L_PTE in the PFN database entry has been replaced with a new PTE backpointer mechanism. This mechanism can support page table entries that reside in 64-bit virtual address space.

The following macros replace direct references to PFN$L_PTE:

- ACCESS_BACKPOINTER: Accepts a PFN database entry address, and returns a virtual address at which you may access the PTE that maps that PFN.

- ESTABLISH_BACKPOINTER: Replaces a write of a PTE address to PFN$L_PTE.

- TEST_BACKPOINTER: Replaces a test for zero in PFN$L_PTE.

Note that pointers to PFN database entries must be 64 bits.

2.3.7. Process Header WSLX and BAK Arrays

Prior to OpenVMS Alpha Version 7.0, the process header contained two internal arrays of information that were used to help manage the balance slot contents (specifically, page table pages) during process swapping. These two arrays, the working set list index (WSLX) and backing storage (BAK) arrays, are no longer required for page table pages.

The swapper process now uses the upper-level page table entries and the working set list itself to manage the swapping of page table pages. A smaller version of the BAK array, now used only for backing storage information for balance slot pages, is located at the end of the fixed portion of the process header at the offset PHD$Q_BAK_ARRAY.

2.3.8. Free S0/S1 System Page Table Entry List

The format of a free page table entry in S0/S1 space has been changed to use index values from the base of page table space instead of the base of S0/S1 space. The free S0/S1 PTE list also uses page table space index values. The list header has been renamed to LDR$GQ_FREE_S0S1_PT.
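The relationship between a page table space index and a PTE address can be sketched as follows. This is a minimal illustration in portable C that assumes each PTE is a quadword (8 bytes) and that the index counts PTEs from the base of page table space. The function names and the base value passed in are hypothetical; on a running system the base is chosen during bootstrap.

```c
#include <stdint.h>

#define PTE_SIZE_BYTES 8u   /* each PTE is a quadword */

/* Convert an index from the base of page table space to a PTE address. */
static uint64_t pte_va_from_index(uint64_t pt_base, uint64_t index)
{
    return pt_base + index * PTE_SIZE_BYTES;
}

/* Convert a PTE address back to an index from the base of page table space. */
static uint64_t pte_index_from_va(uint64_t pt_base, uint64_t pte_va)
{
    return (pte_va - pt_base) / PTE_SIZE_BYTES;
}
```

Code that walked the old free list by adding an index to the base of S0/S1 space would, under this model, add the scaled index to the base of page table space instead.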

2.3.9. Location of the Global Page Table

In order to support larger global sections and larger numbers of global sections in OpenVMS Alpha Version 7.0, the global page table has been moved to S2 space, which can be accessed only with 64-bit pointers.

Privileged code that references entries within GPT must be inspected and possibly modified to ensure that 64-bit pointers are used.

2.3.10. Free Global Page Table Entry List

The format of the free GPT entry has been changed to use index values from the base of the global page table instead of using the free pool list structure. The free GPT entry format is now similar to the free S0/S1 PTE format.

Note that pointers to GPT entries must be 64 bits.

2.3.11. Region Descriptor Entries (RDEs)

As of OpenVMS Alpha Version 7.0, each process virtual addressing region is described by a region descriptor entry (RDE). The program region (P0) and control region (P1) have region descriptor entries that contain attributes of the region and describe the current state of the region. The program region RDE is located at offset PHD$Q_P0_RDE within the process's PHD. The control region RDE is located at offset PHD$Q_P1_RDE, also within the process's PHD.

Many internal OpenVMS Alpha memory management routines accept a pointer to the region's RDE associated with the virtual address also passed to the routine.

$lookup_rde_va — Returns the address of the RDE given a virtual address.

$lookup_rde_id — Returns the address of the RDE given the region id.

2.4. Kernel Threads Changes

This section describes the OpenVMS Alpha kernel threads features that might require changes to privileged-code applications.

2.4.1. The CPU$L_CURKTB Field

The CPU$L_CURKTB field in the CPU databases contains the current kernel thread executing on that CPU. If kernel-mode code owns the SCHED spinlock, then the current KTB address can be obtained from this field. Before the implementation of kernel threads, this field contained the current PCB address.

2.4.2. Mutex Locking

No changes are necessary to kernel-mode code that locks mutexes. All of the SCH$LOCK*, SCH$UNLOCK*, and SCH$IOLOCK* routines determine the correct kernel thread if it must wait because the mutex is already owned.

2.4.3. Scheduling Routines

- EXE$KERNEL_WAIT

- EXE$KERNEL_WAIT_PS

- SCH$RESOURCE_WAIT

- SCH$RESOURCE_WAIT_PS

- SCH$RESOURCE_WAIT_SETUP

- SCH$CHSE

- SCH$CHSEP

- SCH$POSTEF

- SCH$WAIT_PROC

- SCH$UNWAIT

- RPTEVT macro

- SCH$REPORT_EVENT

- SCH$CHANGE_CUR_PRIORITY

- SCH$REQUIRE_CAPABILITY

- SCH$RELEASE_CAPABILITY

- SCH$WAKE

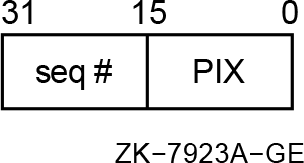

2.4.4. New MWAIT State

A thread that is waiting for ownership of the inner-mode semaphore may be put into MWAIT. The KTB$L_EFWM field contains a process-specific MWAIT code. The low word of the field contains RSN$_INNER_MODE, and the upper word contains the process index from the PID.
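The layout of the MWAIT code can be sketched as a pair of 16-bit halves packed into a longword. The following portable C example is illustrative only: the constant `HYPOTHETICAL_RSN_INNER_MODE` is a placeholder and does not reflect the real value of RSN$_INNER_MODE, and the helper names are invented for this sketch.

```c
#include <stdint.h>

/* Placeholder only; NOT the real value of RSN$_INNER_MODE. */
#define HYPOTHETICAL_RSN_INNER_MODE 0x0011u

/* Build the code: resource number in the low word,
 * process index in the upper word. */
static uint32_t make_inner_mode_mwait_code(uint16_t process_index)
{
    return ((uint32_t)process_index << 16) | HYPOTHETICAL_RSN_INNER_MODE;
}

/* Extract the resource number from the low word. */
static uint16_t mwait_resource(uint32_t efwm)
{
    return (uint16_t)(efwm & 0xFFFFu);
}

/* Extract the process index from the upper word. */
static uint16_t mwait_process_index(uint32_t efwm)
{
    return (uint16_t)(efwm >> 16);
}
```

Code that inspects KTB$L_EFWM for a thread in MWAIT could use a similar split to distinguish an inner-mode semaphore wait from other resource waits.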

2.4.5. System Services Dispatching

The system services dispatcher has historically passed the PCB address to the inner-mode services. This is still true with kernel threads. The current KTB is not passed to the services.

2.4.6. Asynchronous System Traps (ASTs)

The ACB$L_PID field in the ACB should represent the kernel thread to which the AST is targeted. All other AST context is the same.

Inner-mode ASTs can be delivered on whichever kernel thread is currently in inner mode. ASTs that have the ACB$V_THREAD_SAFE bit set are always delivered to the targeted thread, regardless of other inner-mode activity. Use this bit with extreme care. A thread-safe AST that targets a kernel thread that has been deleted is delivered to the initial thread instead.

2.4.7. TB Invalidation and Macros

TBI_DATA_64

TBI_SINGLE

TBI_ALL

| Keyword | Value | Meaning |
|---|---|---|
| ADDR | = The virtual address to be invalidated. The address can be either a 64-bit VA or a sign-extended 32-bit VA. For MACRO-32, the address must be specified in a register. | |
| ENVIRON | = THIS_CPU_ONLY | Indicates that this invocation of TBIS is to be executed strictly within the context of the local CPU only. Thus, no attempt is made whatsoever to extend the TBIS request to any CPU or other "processor" that might exist within the system. |
| | = ASSUME_PRIVATE | Indicates that this is a threads environment and that the address should be treated as a private address and not be checked. Therefore, in an SMP environment, it is necessary to do the invalidate to other CPUs that are running a kernel thread from this process. This argument is used for system space addresses that should be treated as private to the process. |
| | = ASSUME_SHARED | Indicates that this invocation of TBIS should be broadcast to all other CPUs in the system. ASSUME_SHARED is the opposite of THIS_CPU_ONLY. |
| | = LOCAL | This is now obsolete and generates an error. |
| | = anything other than the above | Forces the TB invalidate to be extended to all components of the system that may have cached PTEs. |
| PCBADDR | = Address of current process control block. Default is NO_PCB, which means that a PCB address does not need to be specified. The default is R31 for the MACRO-32 macros. | This argument must be specified if the address to be invalidated is process-private (either ENVIRON=ASSUME_PRIVATE or no keyword for the ENVIRON qualifier was specified). |

| Keyword | Value | Meaning |
|---|---|---|
| ENVIRON | = THIS_CPU_ONLY | Indicates that this invocation of TBI_ALL is to be executed strictly within the context of the local CPU. No attempt is made to extend the TBIA request to any CPU or other "processor" that might exist within the system. |
| | = LOCAL | This is now obsolete and generates an error. |
| | = anything other than the above | Forces the TB invalidate to be extended to all components of the system that may have cached PTEs. |

2.4.8. New PCB/KTB Fields

| Field | Meaning |
|---|---|
| PCB$K_MAX_KT_COUNT | Maximum number of kernel threads |
| PCB$L_ACTIVE_CPUS | CPUs owned by this process |
| PCB$L_TQUANTUM | Per-user thread quantum |
| PCB$L_MULTITHREAD | Maximum thread count |
| PCB$L_KT_COUNT | Current thread count |
| PCB$L_KT_HIGH | Highest KTB vector entry used |
| PCB$L_KTBVEC | KTB vector address |
| PCB$L_IM_ASTQFL_SPK | Special kernel AST queue forward link (head) |
| PCB$L_IM_ASTQBL_SPK | Special kernel AST queue back link (tail) |
| PCB$L_IM_ASTQFL_K | Kernel AST queue forward link (head) |
| PCB$L_IM_ASTQBL_K | Kernel AST queue back link (tail) |
| PCB$L_IM_ASTQFL_E | Executive AST queue forward link (head) |
| PCB$L_IM_ASTQBL_E | Executive AST queue back link (tail) |
| PCB$L_INITIAL_KTB | Initial KTB, overlays KTB$L_PCB |
| KTB$L_PCB | PCB address, overlays PCB$L_INITIAL_KTB |
| KTB$L_FRED | Address of FRED block |
| KTB$L_PER_KT_AREA | Address of per-kernel thread data area |
| KTB$L_TQUANT | Remaining per-user thread quantum |
| KTB$L_QUANT | Remaining per-kernel thread quantum |
| KTB$L_TM_CALLBACKS | Address of thread manager callback vector |

2.4.9. CTL$AL_STACK and CTL$AL_STACKLIM

The two arrays containing stack bounds information now hold quadword entries and have been renamed CTL$AQ_STACK and CTL$AQ_STACKLIM. They are still indexed by access mode.

| Array | Meaning |
|---|---|
| PKTA$Q_STACK | STACK pointer array |
| PKTA$Q_STACKLIM | STACK limit pointer array |
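The following portable C sketch models a pair of quadword arrays indexed by access mode and uses them for a bounds check. The structure and function names are hypothetical. The sketch also assumes a downward-growing stack whose base entry holds the high address and whose limit entry holds the low address; verify that assumption against the system definitions before relying on it.

```c
#include <stdbool.h>
#include <stdint.h>

/* Access modes, used as array indexes. */
enum access_mode {
    MODE_KERNEL = 0,
    MODE_EXEC   = 1,
    MODE_SUPER  = 2,
    MODE_USER   = 3,
    MODE_COUNT  = 4
};

/* Hypothetical model of the two stack bounds arrays: quadword entries. */
struct stack_bounds {
    uint64_t stack[MODE_COUNT];     /* assumed: high end of each stack */
    uint64_t stacklim[MODE_COUNT];  /* assumed: low limit of each stack */
};

/* Return true if sp lies within the bounds recorded for the given mode. */
static bool sp_within_bounds(const struct stack_bounds *b,
                             enum access_mode mode, uint64_t sp)
{
    return sp >= b->stacklim[mode] && sp <= b->stack[mode];
}
```

The point of the sketch is the element width: code that read these arrays as longwords would both truncate the addresses and index the wrong entries.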

2.4.10. Floating-Point Register and Execution Data Blocks (FREDs)

The FRED is defined by FREDDEF. The KTB$L_FRED field in the KTB points to the FRED block. The section of the PHD that contains the HWPCB and floating-point register save area for the initial thread is identical to the layout of the FRED. Therefore, no distinction is needed between the initial thread and other threads when accessing this data.

2.5. Registering Images That Have Version Dependencies

Note

- BOD

- CDRP

- CXB

- DCBE

- FDT

- IRP

- IRPE

- PFN

- PHD

- UCB

- VCRP

The need for change in any image (including device drivers, as well as privileged applications linked against SYS$BASE_IMAGE.EXE) is normally detected by a system version check. That check is designed to prevent an application that may need change from producing incorrect results or causing system failures.

The version checks do not necessarily mean that the applications require any change. VSI recommends that you perform some analysis to determine compatibility for privileged images before you run them on Version 7.0 systems.

OpenVMS Alpha Version 7.0 provides an Image Registry facility that may obviate the need for relinking images when you upgrade from previous versions of OpenVMS Alpha. The Image Registry is a central registry of images (including layered products, customer applications, and third-party software) that have version dependencies but have been identified as being compatible with the OpenVMS operating system software. The products in the registry are exempted from version checking.

The Image Registry facility has several benefits, particularly when you have only image files, not source or object files. In addition, it eases version compatibility problems on mixed-version clusters because the same images can be used on all nodes. It also simplifies the addition of third-party software and device drivers to the system.

The registry is a file that contains registered images. These images include main images (images that you can run directly), shared libraries, and device drivers that are identified by name, the image identification string, and the link time of the image. The registered images bypass normal system version checking in the INSTALL, system image loader, and image activator phases. With the Image Registry facility, images for different versions of applications can be registered independently.
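The matching rule described above can be modeled as a lookup keyed on three attributes. The sketch below is a conceptual illustration in portable C only. The structure, field names, and functions are hypothetical, and the actual registry file format and matching logic are not described in this guide.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical in-memory model of one registered image. */
struct registry_entry {
    const char *name;       /* image name */
    const char *ident;      /* image identification string */
    uint64_t    link_time;  /* link time of the image */
};

/* Return true if an image with this exact name, identification string,
 * and link time appears in the registry. */
static bool is_registered(const struct registry_entry *reg, size_t count,
                          const char *name, const char *ident,
                          uint64_t link_time)
{
    for (size_t i = 0; i < count; i++) {
        if (strcmp(reg[i].name, name) == 0 &&
            strcmp(reg[i].ident, ident) == 0 &&
            reg[i].link_time == link_time) {
            return true;
        }
    }
    return false;
}

/* A registered image bypasses the version check; any other image
 * must pass it. */
static bool may_proceed(bool version_check_passed, bool registered)
{
    return registered || version_check_passed;
}
```

Because the link time is part of the key in this model, relinking an image would produce a different entry, which is consistent with registering different versions of an application independently.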

Images linked as part of installation need not be registered because they match the version of the running system. However, linking during installation cannot ensure the absence of system version dependencies.

2.5.1. Version Identification (ID) Number Change to Three Subsystems

The OpenVMS executive defines 18 logical subsystems. Each of these subsystems contains its own version identification (ID) number. This modularization makes it possible for OpenVMS releases to include changes to a portion of the executive, impacting only those privileged programs that use that portion of the executive.

- I/O

- Memory Management

- Process Scheduling

Developers should check privileged code (that is, any image linked against the system symbol table SYS$BASE_IMAGE.EXE) to determine whether the image is affected by the changes to the subsystems. If the code is affected, the developer should make any necessary changes.

Chapter 3. Replacements for Removed Privileged Symbols

This chapter describes the closest equivalent mechanism to a number of internal routines, data structure cells, and system data cells that have been removed in OpenVMS Alpha Version 7.0.

Important

The internal data structure fields, routines, macros, and data cells described in this chapter should not be interpreted as being part of the documented interfaces that drivers or other privileged software should routinely depend on.

If you were using the removed mechanism correctly, this chapter will assist you in using the closest equivalent in OpenVMS Alpha Version 7.0. However, you should not use this as an opportunity to start using these mechanisms. Doing so is likely to increase the work required to maintain compatibility of your privileged software with future releases of OpenVMS.

3.1. Removed Data Structure Fields

| Removed Field | Replacement | Comments |
|---|---|---|
| BOD$L_BASEPVA | BOD$PQ_BASEPVA | 64-bit process virtual address of buffer mapped by the buffer object. See Appendix A, "Data Structure Changes". |
| CDRP$L_AST | cdrp$pq_acb64_ast | Increased to a quadword and renamed. |
| CDRP$L_ASTPRM | CDRP$Q_A | |
| CDRP$L_IOSB | CDRP$PQ_IOSB | |
| CPT$L_IOVA | CPT$PQ_IOVA | Increased to a quadword and renamed. |
| DMP$M_BITS_12_15 | Still have this field. | Same value. |
| DMP$S_BITS_12_15 | Still have this field. | Same value. |
| DMP$V_BITS_12_15 | Still have this field. | Same value. |
| DYN$C_F64_F64DATA | TBS—Dollar | |
| DYN$C_NET_TIM_TEB | DYN$C_NET_TIM_NTEB | Renamed because the DECnet structure it indicates (network timer element block) was renamed from TEB to NTEB. |
| FDT_CONTEXT$L_QIO_R1_VALUE | FDT_CONTEXT$Q_QIO_R1_VALUE | |
| IRP$L_AST | IRP$PQ_ACB64_AST | Removed to ensure that any references to the $QIO astadr via a 32-bit address and astprm as a 32-bit value are detected at compile-time or link-time. |
| IRP$L_ASTPRM | IRP$Q_ACB64_ASTPRM | Removed to ensure that any references to the $QIO astadr via a 32-bit address and astprm as a 32-bit value are detected at compile-time or link-time. |
| IRP$L_IOSB | IRP$PQ_IOSB | Removed to ensure that any reference to the $QIO iosb via a 32-bit address is detected at compile-time or link-time. |
| IRPE$L_BCNT1 | IRPE$L_BCNT | |
| IRPE$L_BCNT2 | None. | Removed. |
| IRPE$L_BOFF1 | IRPE$L_BOFF | |
| IRPE$L_BOFF2 | None. | Removed. |
| IRPE$L_SVAPTE1 | IRPE$L_SVAPTE | |
| IRPE$L_SVAPTE2 | None. | Removed. |
| LCKCTX$L_CPLADR | LCKCTX$PQ_CPLADR | Increased in length to quadword. |
| LCKCTX$L_CPLPRM | LCKCTX$Q_CPLPRM | Increased in length to quadword. |
| LCKCTX$L_CR3 | LCKCTX$Q_CR3 | Increased in length to quadword. |
| LCKCTX$L_CR4 | LCKCTX$Q_CR4 | Increased in length to quadword. |
| LCKCTX$L_CR5 | LCKCTX$Q_CR5 | Increased in length to quadword. |
| LCKCTX$L_CRETADR | LCKCTX$PQ_CREADR | Increased in length to quadword. |
| LCKCTX$L_CTX_PRM1 | LCKCTX$Q_CTX_PRM1 | Increased in length to quadword. |
| LCKCTX$L_CTX_PRM2 | LCKCTX$Q_CTX_PRM2 | Increased in length to quadword. |
| LCKCTX$L_CTX_PRM3 | LCKCTX$Q_CTX_PRM3 | Increased in length to quadword. |
| LCKCTX$L_RET1 | LCKCTX$PQ_RET1 | Increased in length to quadword. |
| LCKCTX$L_TMP1 | LCKCTX$Q_TMP1 | Increased in length to quadword. |
| LKB$C_ACBLEN | Removed. | |
| LKB$K_ACBLEN | Removed. | |
| LKB$L_AST | LKB$PQ_AST | Increased in length to quadword. |
| LKB$L_ASTPRM | LKB$Q_ASTPRM | Increased in length to quadword. |
| LKB$L_BLKASTADR | LKB$PQ_CPLASTADR | Increased in length to quadword. |
| LKB$L_CPLASTADR | LKB$PQ_CPLASTADR | Increased in length to quadword. |
| LKB$L_LKSB | LKB$PQ_LKSB | Increased in length to quadword. |
| LKB$L_OLDASTPRM | LKB$Q_OLDASTPRM | Increased in length to quadword. |
| LKB$L_OLDBLKAST | LKB$PQ_OLDBLKAST | Increased in length to quadword. |
| LMB$C_GBL | No name change. | Value changed from 2 to 3. |
| LMB$C_PROCESS | No name change. | Value changed from 3 to 4. |
| LMB$C_S0 | LMB$C_S0S1 | Value = 1 |
| LMB$C_SPT | LMB$C_SPTW | Not guaranteed to be in a dump. |
| LMB$L_BAD_MEM_END | LMB$PQ_BAD_MEM_END | Supports a 64-bit address. |
| LMB$L_BAD_MEM_START | LMB$PQ_BAD_MEM_START | Supports a 64-bit address. |
| LMB$L_HOLE_START_VA | LMB$PQ_BAD_MEM_START | Supports a 64-bit address. |
| LMB$L_HOLE_TOTAL_PAGES | LMB$Q_HOLE_TOTAL_PAGES | Supports a 64-bit address. |
| MMG$C_PTSPACE_OFFSET MMG$K_PTSPACE_OFFSET | MMG$GL_L1_INDEX | Compile-time constant that defined a fixed base address for page table address space. This has been replaced by a run-time mechanism that chooses a base address for page table address space during bootstrap, with the index of the level 1 page table entry used to map the page tables stored in the new data cell. |
| PCB$L_ADB_LINK | None | Supported a feature that was never implemented. |
| PCB$L_PSX_ACTPRM | PCB$Q_PSX_ACTPRM | Increased in length to quadword. |
| PCB$L_TOTAL_EVTAST | None | Supported a feature that was never implemented. |
| PFN$C_ENTRY_SHIFT_SIZE | None | The size of a single PFN database entry was formerly a power of two. As of Version 7.0, that is no longer true and the symbol was deleted. |
| PFN$L_PTE | ACCESS_BACKPOINTER ESTABLISH_BACKPOINTER TEST_BACKPOINTER | This offset in the PFN database was replaced with a new PTE backpointer mechanism that is capable of supporting page table entries that reside in 64-bit virtual address space. Any code that formerly touched PFN$L_PTE must be recoded to use one of the following macros supplied in LIB.MLB. ACCESS_BACKPOINTER accepts a PFN database entry address and returns a virtual address at which you may access the PTE that maps that PFN; this replaces a fetch of a SVAPTE from PFN$L_PTE, which would subsequently be used as an operand for a memory read or write instruction. ESTABLISH_BACKPOINTER replaces a write of a SVAPTE to PFN$L_PTE. TEST_BACKPOINTER replaces a test for zero in PFN$L_PTE. |
| PFN$L_REFCNT | INCREF DECREF | This offset in the PFN database was replaced with a differently sized offset that is packed together with other fields in the PFN database. The supplied macro INCREF should be used to replace any existing increment of the value in PFN$L_REFCNT, while DECREF should be used to replace any existing decrement. |
| PFN$L_WSLX | PFN$L_WSLX_QW | This offset was renamed to reflect a fundamental change in working set list indexes. Prior to Version 7.0, the working set list index (WSLX) was a longword index. The WSLX has become a quadword index as of Version 7.0; therefore, the name of the offset was changed to focus attention on existing code that must be changed to view the value stored at this offset as a quadword index rather than as a longword index. |
| PHD$C_PHDPAGCTX | None | Supported a feature that was never implemented. |
| PHD$L_BAK | PHD$L_BAK_ARRAY | PHD$L_BAK contained an offset to an internally maintained array that was used to support swapping of the balance slot contents. As of Version 7.0, the implementation of this array changed to better accommodate the balance slot contents. PHD$L_BAK was replaced by PHD$L_BAK_ARRAY, which is the symbolic offset from the start of the process header to where this array begins. |
| PHD$L_L2PT_VA | L2PTE_VA | This process header offset formerly contained the system space address of the process's level 2 page table page that was used to map P0 and P1 spaces. As of Version 7.0, the page tables no longer reside in the balance slot, and a process is no longer limited to having only one level 2 page table page. This offset was used to derive addresses of level 2 page table entries. Use the L2PTE_VA macro to derive from a given VA the address of the level 2 PTE that maps that VA. |
| PHD$L_L3PT_VA PHD$L_L3PT_VA_P1 | PTE_VA | These process header offsets formerly contained the system space addresses of the bases of the P0 and P1 page tables that resided in the process's balance slot. As of Version 7.0, the page tables no longer reside in the balance slot, and the conceptual overlap of the P0 and P1 page tables in virtual memory no longer exists. Use the PTE_VA macro to derive from a given VA the address of the level 3 PTE that maps that VA. |
| PHD$L_P0LENGTH | None | Different page table layout. |
| PHD$L_P1LENGTH | None | Different page table layout. |
| PHD$L_PSTBASMAX | PHD$L_PST_BASE_MAX | Contains new-style section index. |
| PHD$L_PSTBASOFF | PHD$L_PST_BASE_OFFSET | Name changed. |
| PHD$L_PSTFREE | PHD$L_PST_FREE | Contains new-style section index. |
| PHD$L_PSTLAST | PHD$L_PST_LAST | Contains new-style section index. |
| PHD$L_PTWSLELCK PHD$L_PTWSLEVAL | PFN database | These process header offsets formerly contained internal bookkeeping information for managing page table pages for a process. These have been replaced by a bookkeeping mechanism that resides in the PFN database entries for page table pages. It is highly unlikely that anyone is affected by this change. |
| PHD$L_QUANT | KTB$L_QUANT | |
| PHD$L_WSL | CTL$GQ_WSL | You can no longer count on the WSL (data cell) following the PHD; use the pointer to the WSL in CTL$GQ_WSL instead. |
| PHD$L_WSLX | None | WSLX array is no longer in PHD as a result of the new swapper design. |
| PTE$L_COUNT | PTE$L_FREE_COUNT | Offset to the number of free PTEs in a free PTE structure. |
| PTE$L_LINK | PTE$Q_INDEX | Contains an index to the next free element in the free PTE list. The contents of the field is a quadword index off the base of page table space. Free system PTEs and free global PTEs are linked together in this manner. |
| PTE$M_SINGLE_SPTE | PTE$M_SINGLE_PTE | A mask or flag denoting whether a free element describes a single PTE or multiple PTEs. |
| PTE$V_SINGLE_SPTE | None | The contents of a free PTE element are AND'ed with PTE$M_SINGLE_PTE to determine whether the element describes a single PTE. |

3.2. Removed Routines

| Removed Routine | Replacement | Comments |
|---|---|---|
| MMG$ALCSTX | MMG_STD$ALCSTX | Returns new-style section index. |
| MMG$ALLOC_PFN_ALGND | MMG$ALLOC_PFN_ALGND_64 | MMG$ALLOC_PFN_ALGND_64 should not be called directly. Instead, use the ALLOCPFN macro. Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG$ALLOC_ZERO_ALGND | MMG$ALLOC_ZERO_ALGND_64 | MMG$ALLOC_ZERO_ALGND_64 should not be called directly. Instead, use the ALLOC_ZERO_PFN macro. Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG$CREPAG | MMG$CREPAG_64 MMG_STD$CREPAG_64 | Accepts 64-bit addresses and has 3 new inputs: RDE (R12), page file_cache (R13), and mmg_flags (R14). See mmg_routines.h for STD interface. |
| MMG$DALCSTX | MMG_STD$DALCSTX | Accepts new-style section index. |
| MMG$DECPTREF | MMG_STD$DECPTREF_PFNDB MMG_STD$DECPTREF_GPT | MMG$DECPTREF expected a 32-bit system space address of a PTE as an input parameter. Page table entries are now located in 64-bit addressable memory. This routine was replaced by two routines: MMG_STD$DECPTREF_PFNDB and MMG_STD$DECPTREF_GPT. MMG_STD$DECPTREF_PFNDB accepts as input a 64-bit virtual address of a PFN database entry for a page table, the reference count of which is to be decremented. MMG_STD$DECPTREF_GPT accepts as input a 64-bit virtual address of a global page table entry, which lies within a certain global page table page, of which a reference count must be decremented. |
| MMG$DECSECREF | MMG_STD$DECSECREF | Accepts new-style section index. |
| MMG$DECSECREFL | MMG_STD$DECSECREFL | Accepts new-style section index. |
| MMG$DELPAG | MMG$DELPAG_64 MMG_STD$DELPAG_64 | Accepts 64-bit addresses and has 2 new inputs, RDE (R12) and mmg_flags (R14). See mmg_routines.h for STD interface. |
| MMG$DELWSLEPPG | MMG_STD$DELWSLEPPG_64 | Replacement reflects a change in input from a 32-bit addressable system space address of a PTE to a 64-bit address of a PTE in page table space. Other argument changes may have occurred as well. |
| MMG$DELWSLEX | MMG_STD$DELWSLEX_64 | Replacement reflects a change in input from a 32-bit addressable system space address of a PTE to a 64-bit address of a PTE in page table space. Other argument changes may have occurred as well. |
| MMG$FREWSLX | MMG$FREWSLX_64 | Replacement reflects a change in input from a 32-bit addressable system space address of a PTE to a 64-bit address of a PTE in page table space. Other argument changes may have occurred as well. |
| MMG$GETGSNAM | MMG_STD$GETGSNAM | Converted to STD interface. (No prototype in mmg_routines.h.) |
| MMG$GSDSCAN | MMG_STD$GSDSCAN | Converted to STD interface. See mmg_routines.h for interface definition. |
| MMG$INCPTREF | MMG_STD$INCPTREF_64 | Replacement reflects a change in input from a 32-bit addressable system space address of a PTE to a 64-bit address of a PTE in page table space. Other argument changes may have occurred as well. |
| MMG$INIBLDPKT | None | This routine was used internally only. Its symbol has been removed from the base image. |
| MMG$ININEW_PFN | MMG_STD$ININEWPFN_64 | Replacement reflects a change in input from a 32-bit addressable system space address of a PTE to a 64-bit address of a PTE in page table space. Other argument changes may have occurred as well. |
| MMG$INIT_PGFLQUOTA | MMG_STD$INIT_PGFLQUOTA $INIT_PGFLQUOTA | Converted to STD interface. See mmg_functions.h for interface definition. |
| MMG$IN_REGION | MMG_STD$IN_REGION_64 $IN_REGION_64 | Converted to STD interface. See mmg_functions.h for interface definition. |
| MMG$IOLOCK | MMG_STD$IOLOCK_BUF | |
| MMG$LOCKPGTB | MMG_STD$LOCKPGTB_64 | Replacement reflects a change in input from a 32-bit addressable system space address of a PTE to a 64-bit address of a PTE in page table space. Other argument changes may have occurred as well. |
| MMG$MAKE_WSLE | MMG_STD$MAKE_WSLE_64 | Replacement reflects a change in input from a 32-bit addressable system space address of a PTE to a 64-bit address of a PTE in page table space. Other argument changes may have occurred as well. |
| MMG$MORE_PGFLQUOTA | MMG_STD$MORE_PGFLQUOTA $MORE_PGFLQUOTA | Converted to STD interface. See mmg_functions.h for interface definition. |
| MMG$MOVPTLOCK MMG$MOVPTLOCK1 | None | Page table locking redesign has obviated these routines. No replacement exists. |
| MMG$PTEINDX | None | Used internally only. Obviated by design as of Version 7.0. |
| MMG$PTEREF | MMG$PTEREF_64 | This replacement reflects a change in interface, including MMG_STD$PTEREF accepting a 64-bit virtual address as input. |
| MMG$PURGEMPL | MMG$PURGE_MPL | Renamed because the interface changed slightly. This is a JSB entry with arguments in R0-R2. It now accepts an additional argument in R3, the PTBR of the process owning the PTEs, for range-based requests. This request type also now accepts 64-bit PTE addresses rather than 32-bit SVAPTE addresses. |
| MMG$SUBSECREF | MMG_STD$DECSECREFL | Accepts new-style section index. |
| MMG$SUBSECREFL | MMG_STD$SUBSECREFL | Accepts new-style section index. |
| MMG$TBI_SINGLE_64 | TBI_SINGLE Macro | MMG$TBI_SINGLE_64 should not be called directly. Instead, use the TBI_SINGLE macro. |
| MMG$TRY_ALL | MMG_STD$TRY_ALL_64 | Converted to STD interface. See mmg_routines.h for interface definition. |
| MMG$ULKGBLWSL | None | This routine was used internally only. Its symbol has been removed from the base image. |
| MMG$UNLOCK | MMG_STD$IOUNLOCK_BUF | |
| MMG_STD$ALLOC_PFN | MMG_STD$ALLOC_PFN_64 | This routine should not be called directly. Instead, use the ALLOCPFN macro. Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG_STD$ALLOC_ZERO_PFN | MMG_STD$ALLOC_ZERO_PFN_64 | This routine should not be called directly. Instead, use the ALLOC_ZERO_PFN macro. Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG_STD$DALLOC_PFN | MMG_STD$DALLOC_PFN_64 | Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG_STD$DEL_PFNLST | MMG_STD$DEL_PFNLST_64 | Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG_STD$ININEW_PFN | MMG_STD$ININEWPFN_64 | Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG_STD$INS_PFNH | MMG_STD$INS_PFNH_64 | Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG_STD$INS_PFNT | MMG_STD$INS_PFNT_64 | Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG_STD$IOLOCK | MMG_STD$IOLOCK_BUF | |
| MMG_STD$PTEINDX | None | Used internally only. Obviated by design as of OpenVMS Alpha Version 7.0. |
| MMG_STD$REL_PFN | MMG_STD$REL_PFN_64 | Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG_STD$REM_PFN | MMG_STD$REM_PFN_64 | Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG_STD$REM_PFNH | MMG_STD$REM_PFNH_64 | Note that 64-bit virtual addresses are required to access PFN database entries. |
| MMG_STD$TBI_SINGLE_64 | TBI_SINGLE Macro | MMG_STD$TBI_SINGLE_64 should not be called directly. Instead, use the TBI_SINGLE macro. |
| MMG_STD$UNLOCK | MMG_STD$IOUNLOCK_BUF | |
| SWP$FILL_L1L2_PT | None | Removed. |

3.3. Removed Macros

This section lists the macros that have been removed as of OpenVMS Alpha Version 7.0.

3.3.1. Removed MACRO-32 Macros Formerly in SYS$LIBRARY:LIB.MLB

$VADEF — Moved to SYS$LIBRARY:STARLET.MLB

TBI_SINGLE_64 — MMG$TBI_SINGLE_64

3.3.2. C Header Files Removed From SYS$LIBRARY:SYS$LIB_C.TLB

msb_codec_reg.h

msb_reg.h

vadef.h — Moved to SYS$LIBRARY:SYS$STARLET_C.TLB

3.4. Removed System Data Cells

| Removed Cell | Replacement | Comments |
|---|---|---|
| CTL$AL_STACK | CTL$AQ_STACK | Arrays are now quadwords. |
| CTL$AL_STACKLIM | CTL$AQ_STACKLIM | Arrays are now quadwords. |
| EXE$GL_GPT | MMG$GQ_FREE_GPT | As of Version 7.0, free GPTEs are managed in the same manner as free system PTEs. Note that 64-bit virtual addresses are required to access GPTEs. |
| LDR$GL_FREE_PT | LDR$GQ_FREE_S0S1_PT | Contains the address of the start of the free S0/S1 PTE list. The format of the free PTEs has changed for Version 7.0. |
| MMG$GL_FRESVA | MMG$GQ_NEXT_FREE_S0S1_VA | |
| MMG$GL_GPTBASE | MMG$GQ_GPT_BASE | As of Version 7.0, free GPTEs are managed in the same manner as free system PTEs. Note that 64-bit virtual addresses are required to access GPTEs. |
| MMG$GL_MAXGPTE | MMG$GQ_MAX_GPTE | As of Version 7.0, free GPTEs are managed in the same manner as free system PTEs. Note that 64-bit virtual addresses are required to access GPTEs. |
| MMG$GL_P0_PTLEN | None | Obviated by the removal of the process page tables from the balance slot. |
| MMG$GL_PX_VPN_LENGTH | None | This data cell is obviated by the removal of the process page tables from the balance slot. |
| MMG$GL_RESERVED_SVA | MMG$GQ_WINDOW_VA | Increased in length to quadword. |
| MMG$GL_RESERVED_SVA2 | MMG$GQ_WINDOW2_VA | Increased in length to quadword. |
| MMG$GL_RESERVED_SVAPTE | MMG$GQ_WINDOW_PTE_PFN | 64-bit pointer to PFN field of first reserved PTE. |
| MMG$GL_RESERVED_SVAPTE2 | MMG$GQ_WINDOW2_PTE_PFN | 64-bit pointer to PFN field of second reserved PTE. |
| MMG$GL_SHARED_L2PT_PFN | None | This cell was deleted because it is possible to have more than one shared L2PT. That is, system space may span multiple L2PTs. |
| MMG$GL_SPT_L2PTE_BIAS | None | This cell was deleted because it is possible to have more than one shared L2PT. That is, system space may span multiple L2PTs. |
| MMG$GL_VA_TO_PX_VPN | None | This data cell has been completely obviated by the removal of the process page tables from the balance slot. |
| MMG$GL_ZERO_SVA | MMG$GQ_WINDOW_VA | Increased in length to quadword. |
| MMG$GL_ZERO_SVAPTE_PFN | MMG$GQ_WINDOW_PTE_PFN | 64-bit pointer to PFN field of reserved PTE. |
| MMG$GQ_PT_VA | MMG$GQ_PT_BASE | MMG$GQ_PT_VA was renamed to ensure that any code that had assumed a fixed location of page table space as a function of page size would be revisited. The location of page table space is now variable to meet the individual bootstrap needs of supporting Version 7.0, as well as being a function of the page size. |
| MPW$GW_HILIM | MPW$GL_HILIM | Increased in length to a longword. |
| MPW$GW_LOLIM | MPW$GL_LOLIM | Increased in length to a longword. |
| PFN$GB_LENGTH | None | |
| PFN$PL_DATABASE | PFN$PQ_DATABASE | The PFN database was moved to S2 space, which is only addressable with 64-bit pointers. |
| PHV$GL_REFCBAS | PHV$GL_REFCBAS_LW | The process header reference count vector has been promoted from an array of words to an array of longwords. |
| SGN$GL_PHDAPCNT | None | This cell was deleted as a result of moving the process page tables out of the balance slot. |
| SGN$GL_PHDP1WPAG | None | This cell was deleted as a result of moving the process page tables out of the balance slot. |
| SGN$GL_PHDRESPAG | None | This cell was deleted as a result of moving the process page tables out of the balance slot. |
| SGN$GL_PTPAGCNT | None | This cell was deleted as a result of moving the process page tables out of the balance slot. |
| SWP$GL_L1PT_SVAPTE | None | L1 page table now mapped virtually in page table space. |
| SWP$GL_L1PT_VA | None | L1 page table now mapped virtually in page table space. |
| SWP$GW_BAKPTE | None | This cell was deleted as a result of moving the process page tables out of the balance slot. |

Chapter 4. Modifying Device Drivers to Support 64-Bit Addressing

This chapter describes how to modify customer-written device drivers to support 64-bit addresses.

For more information about the data structures and routines described in this chapter, see Appendix A, "Data Structure Changes" and Appendix B, "I/O Support Routine Changes".

4.1. Recommendations for Modifying Device Drivers

Before you can modify a device driver to support 64-bit addresses, your driver must recompile and relink without errors on OpenVMS Alpha Version 7.0. See Chapter 2, "Upgrading Privileged Software to OpenVMS Alpha Version 7.0". If you are using OpenVMS-supplied FDT routines, supporting 64-bit addresses can be automatic or easily obtained. Device drivers written in C are usually easier to modify than drivers written in MACRO-32. Drivers using direct I/O are usually easier to modify than those using buffered I/O.

- Select the I/O functions for which you want to support 64-bit addresses.

- Follow your IRP$L_QIO_P1 value and promote all references to 64-bit addresses.

- Declare 64-bit support for the I/O function.

The remaining sections in this chapter provide more information about these recommendations.

4.2. Mixed Pointer Environment in C

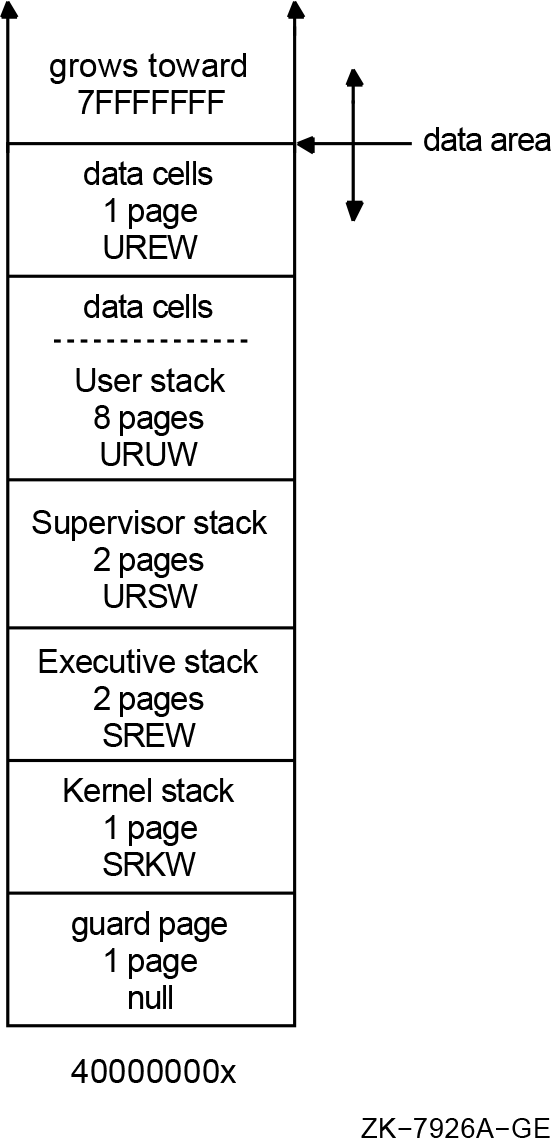

OpenVMS Alpha 64-bit addressing support for mixed pointers includes the following features:

- OpenVMS Alpha 64-bit virtual address space layout that applies to all processes. (There are no special 64-bit processes or 32-bit processes.)

- 64-bit pointer support for addressing the entire 64-bit OpenVMS Alpha address space layout, including P0, P1, and P2 address spaces and S0/S1, S2, and page table address spaces.

- 32-bit pointer compatibility for addressing P0, P1, and S0/S1 address spaces.

- Many new 64-bit system services that support P0, P1, and P2 space addresses.

- Many existing system services enhanced to support 64-bit addressing.

- 32-bit sign-extension checking for all arguments passed to system services that accept only 32-bit pointers.

- C and MACRO-32 macros for handling 64-bit addresses.
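The 32-bit sign-extension check mentioned in the list above can be illustrated in portable C. The following is a minimal sketch under stated assumptions, not the OpenVMS implementation: the function name `is_sign_extended_32` is hypothetical, and the check simply tests whether bits 63 through 31 of a 64-bit value are all zeros (P0 or P1 space) or all ones (S0/S1 space).

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical helper (not an OpenVMS routine): returns 1 if va can be
 * represented as a sign-extended 32-bit value, that is, if bits 63..31
 * are either all clear or all set. Returns 0 otherwise.
 */
static int is_sign_extended_32(uint64_t va)
{
    uint64_t upper = va >> 31;          /* bits 63..31, 33 bits in total */

    return upper == 0 || upper == 0x1FFFFFFFFULL;
}
```

With this helper, an address such as 0x0000000080000000 (the start of P2 space) is rejected because it cannot be held in a 32-bit pointer, while 0xFFFFFFFF80000000 (S0/S1 space) is accepted.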

- Compile your device driver using /POINTER_SIZE=32

    $ CC/STANDARD=RELAXED_ANSI89 -
         /INSTRUCTION=NOFLOATING_POINT -
         /EXTERN=STRICT -
         /POINTER_SIZE=32 -
         LRDRIVER+SYS$LIBRARY:SYS$LIB_C.TLB/LIBRARY

- #pragma __required_pointer_size 32|64

- 64-bit pointer types are defined by header files; for example:

    #include <far_pointers.h>
    VOID_PQ user_va;        /* 64-bit "void *" */
    ...
    #include <ptedef.h>
    PTE *   svapte;         /* 32-bit pointer to a PTE */
    PTE_PQ  va_pte;         /* Quadword pointer to a PTE */
    PTE_PPQ vapte_p;        /* Quadword pointer to a
                             * quadword pointer to a PTE */

- Pointer size truncation warning

    p0_va = p2_va;
    ^
    %CC-W-MAYLOSEDATA, In this statement, "p2_va" has a larger data size than "short pointer to char"
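As an illustration of the pragma listed above, the following sketch shows how a module compiled with /POINTER_SIZE=32 might declare a single 64-bit pointer type and then return to the previous pointer size. The type name `char_pq_sketch` and the function `sketch_pointer_size` are hypothetical. The guard on `__INITIAL_POINTER_SIZE` is an assumption made so that the fragment also compiles with compilers that lack pointer-size support, in which case the pragmas are skipped and the pointer has its native size.

```c
#include <assert.h>
#include <stddef.h>

#if defined(__VMS) && defined(__INITIAL_POINTER_SIZE) && __INITIAL_POINTER_SIZE
/* Pointer-size support is enabled: switch to 64-bit pointers temporarily. */
#pragma __required_pointer_size __save
#pragma __required_pointer_size 64
typedef char *char_pq_sketch;      /* hypothetical name: 64-bit "char *" */
#pragma __required_pointer_size __restore
#else
/* Assumption: no pointer-size support, so use the native pointer size. */
typedef char *char_pq_sketch;
#endif

/* Returns the size in bytes of the pointer type declared above. */
static size_t sketch_pointer_size(void)
{
    return sizeof(char_pq_sketch);
}
```

In a real driver, the types supplied by header files such as far_pointers.h are normally preferable to locally declared pointer types.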

4.3. $QIO Support for 64-Bit Addresses

$QIO[W] efn,chan,func,iosb,astadr,astprm,p1,p2,p3,p4,p5,p6

These services have a 64-bit friendly interface (as described in OpenVMS Alpha Guide to 64-Bit Addressing and VLM Features), which allows them to support 64-bit addresses.

| Argument | Prior Type | New Type | Description |
|---|---|---|---|
| efn | Unsigned longword | - | Event flag number. Unchanged. |
| chan | Unsigned word | - | Channel number. Unchanged. |
| func | Unsigned longword | - | I/O function code. Unchanged. |
| iosb | 32-bit pointer | 64-bit pointer | Pointer to a quadword I/O status block (IOSB). The IOSB format is unchanged. |
| astadr | 32-bit pointer | 64-bit pointer | Procedure value of the caller's AST routine. On Alpha systems, the procedure value is a pointer to the procedure descriptor. |
| astprm | Unsigned longword | Quadword | Argument value for the AST routine. |
| P1 | Longword | Quadword | Device-dependent argument. Often P1 is a buffer address. |
| P2 | Longword | Quadword | Device-dependent argument. Only the low-order 32 bits are used by system-supplied FDT routines that use P2 as the buffer size. |
| P3 | Longword | Quadword | Device-dependent argument. |
| P4 | Longword | Quadword | Device-dependent argument. |
| P5 | Longword | Quadword | Device-dependent argument. |
| P6 | Longword | Quadword | Device-dependent argument. Sometimes P6 is used to contain the address of a diagnostic buffer. |
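The treatment of P2 described in the table can be sketched in portable C. This is an illustration only: the structure `qio_args_sketch` and the function `buffer_size_from_p2` are hypothetical names, not OpenVMS definitions. The sketch shows that when only the low-order 32 bits of a quadword P2 are honored, any high-order bits supplied by the caller do not affect the buffer size.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical container for the six device-dependent $QIO arguments,
 * each of which is now a quadword. Not an OpenVMS data structure.
 */
struct qio_args_sketch {
    uint64_t p1;                /* often a buffer address */
    uint64_t p2;                /* often a buffer size    */
    uint64_t p3, p4, p5, p6;    /* device dependent       */
};

/*
 * Returns the buffer size as seen by a routine that honors only the
 * low-order 32 bits of P2.
 */
static uint32_t buffer_size_from_p2(const struct qio_args_sketch *args)
{
    return (uint32_t)(args->p2 & 0xFFFFFFFFULL);
}
```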

SS$_NOT64DEVFUNC 64-bit address not supported by device for this function

This condition value is returned under the following circumstances:

- The caller has specified a 64-bit virtual address in the P1 device-dependent argument, but the device driver does not support 64-bit addresses with the requested I/O function.

- The caller has specified a 64-bit address for a diagnostic buffer, but the device driver does not support 64-bit addresses for diagnostic buffers.

- Some device drivers may also return this condition value when 64-bit buffer addresses are passed using the P2 through P6 arguments and the driver does not support a 64-bit address with the requested I/O function.
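The P1 case described above can be sketched as a simple decision in portable C. This is a hedged illustration, not the $QIO implementation: the status names, their numeric values, and the functions `needs_64_bits` and `check_p1` are all hypothetical. The sketch rejects a request only when P1 cannot be expressed as a sign-extended 32-bit value and the driver has not declared 64-bit support for the requested function.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Hypothetical status values for this sketch only; the real condition
 * values are defined by OpenVMS and are not reproduced here.
 */
enum sketch_status { SKETCH_NORMAL = 1, SKETCH_NOT64DEVFUNC = 2 };

/* Nonzero if va needs more than a sign-extended 32-bit representation. */
static int needs_64_bits(uint64_t va)
{
    uint64_t upper = va >> 31;          /* bits 63..31 */

    return !(upper == 0 || upper == 0x1FFFFFFFFULL);
}

/*
 * Accept the request unless P1 is a 64-bit address and the driver has
 * not declared 64-bit support for the requested function.
 */
static enum sketch_status check_p1(uint64_t p1, int func_supports_64)
{
    if (needs_64_bits(p1) && !func_supports_64)
        return SKETCH_NOT64DEVFUNC;
    return SKETCH_NORMAL;
}
```

Under these assumptions, a P2 space buffer address is accepted only for functions that declare 64-bit support, while P0, P1, and S0/S1 addresses are accepted for every function.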

For more information about the $QIO, $QIOW, and $SYNCH system services, see the VSI OpenVMS System Services Reference Manual: GETUTC–Z.

4.4. Declaring Support for 64-Bit Addresses in Drivers

Device drivers declare that they can support a 64-bit address by individual function. The details vary depending on the language used to code the initialization of the driver's Function Decision Table.

4.4.1. Drivers Written in C

Drivers written in C use the ini_fdt_act macro to initialize an FDT entry for an I/O function. This macro uses the DRIVER$INI_FDT_ACT routine. Both the macro and the routine have been enhanced for OpenVMS Alpha Version 7.0.

ini_fdt_act (fdt, func, action, bufflag)

Before OpenVMS Alpha Version 7.0, the bufflag parameter had to be one of the following:

| Value | Description |
|---|---|
| BUFFERED | The specified function is buffered. |
| NOT_BUFFERED | The specified function is direct. This is a synonym for DIRECT. |
| DIRECT | The specified function is direct. This is a synonym for NOT_BUFFERED. |

The bufflag parameter has been enhanced to include the declaration of 64-bit support by allowing three additional values:

| Value | Description |
|---|---|
| BUFFERED_64 | The specified function is buffered and supports a 64-bit address in the p1 parameter. |
| NOT_BUFFERED_64 | The specified function is direct and supports a 64-bit address in the p1 parameter. This is a synonym for DIRECT_64. |
| DIRECT_64 | The specified function is direct and supports a 64-bit address in the p1 parameter. This is a synonym for NOT_BUFFERED_64. |

If a driver does not support a 64-bit address on any of its functions, there is no need to change its use of the ini_fdt_act macro.

    ini_fdt_act (&driver$fdt, IO$_SENSEMODE, my_sensemode_fdt, BUFFERED);
    ini_fdt_act (&driver$fdt, IO$_SETMODE, my_setmode_fdt, BUFFERED);
    ini_fdt_act (&driver$fdt, IO$_READVBLK, acp_std$readblk, DIRECT_64);
    ini_fdt_act (&driver$fdt, IO$_READLBLK, acp_std$readblk, DIRECT_64);

The interpretation of the bufflag parameter to the DRIVER$INI_FDT_ACT routine has been enhanced to support the new values and the setting of the 64-bit support mask in the FDT data structure.
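The idea of a per-function 64-bit support mask can be sketched in portable C. This is a simplified model under stated assumptions, not the OpenVMS FDT layout or the DRIVER$INI_FDT_ACT routine: the names `sketch_fdt`, `sketch_bufflag`, and `sketch_ini_fdt_act` are hypothetical, and the model assumes function codes in the range 0 through 63 so that each function maps to one bit of a quadword mask.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-ins for this sketch; not the OpenVMS definitions. */
enum sketch_bufflag {
    SK_BUFFERED, SK_DIRECT, SK_BUFFERED_64, SK_DIRECT_64
};

typedef int (*sketch_action)(void);

struct sketch_fdt {
    uint64_t buffered_mask;     /* bit n set: function n is buffered       */
    uint64_t addr64_mask;       /* bit n set: function n accepts 64-bit P1 */
    sketch_action action[64];   /* one action routine per function code    */
};

/*
 * Record an action routine for function code func (0..63) and update
 * both masks according to flag. Returns 0 on success, -1 on bad input.
 */
static int sketch_ini_fdt_act(struct sketch_fdt *fdt, unsigned func,
                              sketch_action action, enum sketch_bufflag flag)
{
    uint64_t bit;

    if (fdt == NULL || func > 63)
        return -1;

    bit = (uint64_t)1 << func;
    fdt->action[func] = action;

    if (flag == SK_BUFFERED || flag == SK_BUFFERED_64)
        fdt->buffered_mask |= bit;
    else
        fdt->buffered_mask &= ~bit;

    if (flag == SK_BUFFERED_64 || flag == SK_DIRECT_64)
        fdt->addr64_mask |= bit;
    else
        fdt->addr64_mask &= ~bit;

    return 0;
}
```

In this model, a function declared with a plain BUFFERED or DIRECT style flag leaves its bit clear in the 64-bit mask, which corresponds to a driver that does not need to change its existing declarations.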

4.4.2. Drivers Written in MACRO-32

As of OpenVMS Alpha Version 7.0, drivers written in MACRO-32 use a new FDT_64 macro to declare the set of I/O functions for which the driver supports 64-bit addresses. The use of the FDT_64 macro is similar to the use of the existing FDT_BUF macro. If a driver does not support a 64-bit address on any of its functions, there is no need to use the new FDT_64 macro.

    FDT_INI MY_FDT
    FDT_BUF <SENSEMODE,SETMODE>
    FDT_64  <READVBLK,READLBLK>
    FDT_ACT ACP_STD$READBLK, <READVBLK,READLBLK>

4.4.3. Drivers Written in BLISS

As of OpenVMS Alpha Version 7.0, drivers written in BLISS-32 and BLISS-64 use a new optional keyword parameter, FDT_64, to the existing FDTAB macro to declare the set of I/O functions that support 64-bit addresses. The use of the new FDT_64 parameter is similar to the use of the existing FDT_BUF parameter. If a driver does not support a 64-bit address on any of its functions, there is no need to use the new FDT_64 parameter.

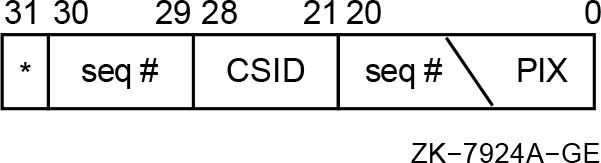

FDTAB (