Programming Concepts Manual, Volume I

- Operating System and Version:

- VSI OpenVMS IA-64 Version 8.4-1H1 or higher

VSI OpenVMS Alpha Version 8.4-2L1 or higher

VSI OpenVMS x86-64 Version 9.2-1 or higher

Preface

1. About VSI

VMS Software, Inc. (VSI) is an independent software company licensed by Hewlett Packard Enterprise to develop and support the OpenVMS operating system.

2. Intended Audience

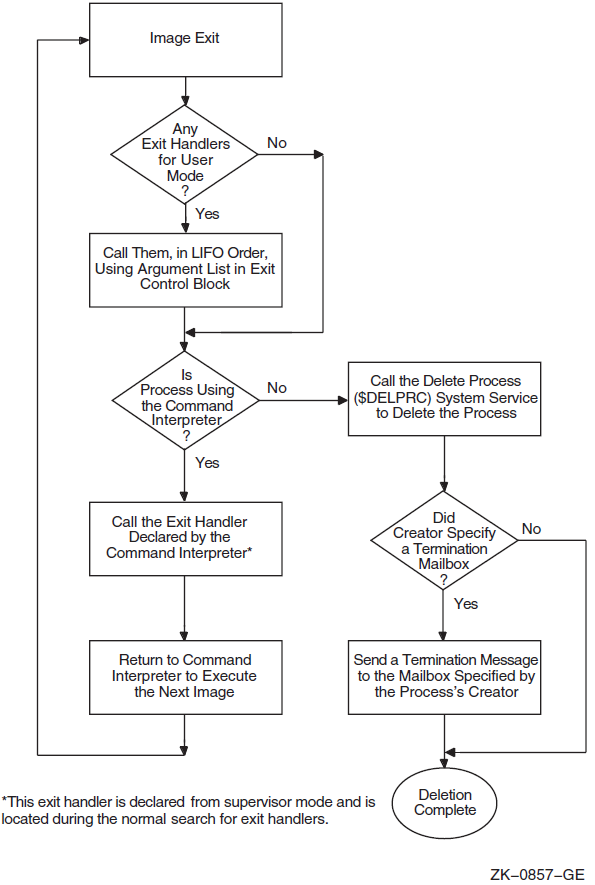

This manual is intended for system and application programmers. It presumes that its readers have some familiarity with the VSI OpenVMS programming environment.

3. Document Structure

Chapter 1 describes the structure of the two-volume manual, and offers an introduction to the OpenVMS operating system and to the tools that are available in the programming environment.

Chapter 2 defines the two types of processes, and describes what constitutes the context of a process, and the modes of execution of a process. It also describes kernel threads and the kernel threads process structure.

Chapter 3 describes communication within a process and between processes.

Chapter 4 describes how to use the creation and control of a process or kernel thread for programming tasks. It also describes how to gather information about a process or kernel thread and how to synchronize a program by using time.

Chapter 5 describes overview concepts of symmetric multiprocessing (SMP) systems.

Chapter 6 describes synchronization concepts and the differences between synchronization techniques on VAX systems, Alpha systems, and I64 systems. It presents methods of synchronization such as event flags, asynchronous system traps (ASTs), parallel processing RTLs, and process priorities, and the effects of kernel threads upon synchronization. It also describes how to use synchronous and asynchronous system services, and how to write applications in a multiprocessing environment.

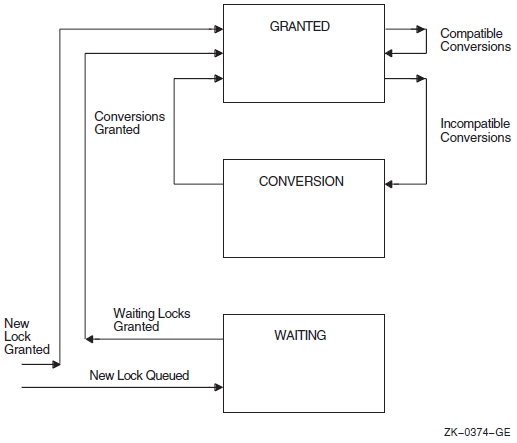

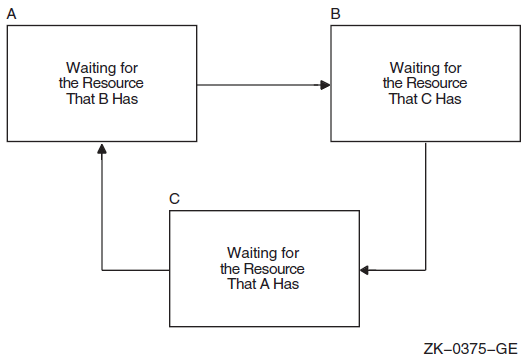

Chapter 7 describes the use of the lock manager system services to synchronize access to shared resources. This chapter presents the concept of resources and locks; it also describes the use of the SYS$ENQ and SYS$DEQ system services to queue and dequeue locks.

Chapter 8 describes how to use asynchronous system traps (ASTs). It describes access modes and service routines for ASTs and how ASTs are declared and delivered. It also describes the effects of kernel threads on AST delivery.

Chapter 9 describes the OpenVMS Condition Handling facility. It describes VAX system, Alpha system, and I64 system exceptions, arithmetic exceptions, and Alpha and I64 system unaligned access traps. It describes the condition value field, exception dispatcher, signaling, and the argument list passed to a condition handler. Additionally, types of condition handlers and various types of actions performed by them are presented. This chapter also describes how to write and debug a condition handler, and how to use an exit handler.

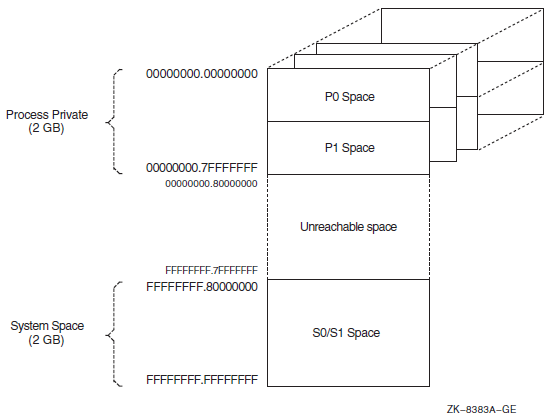

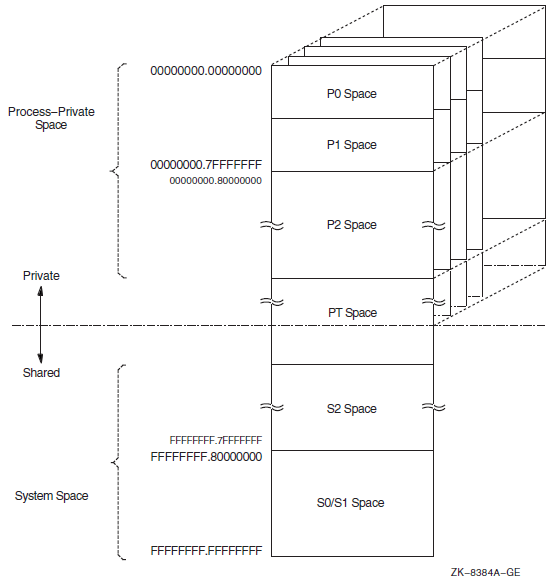

Chapter 10 describes the 32-bit and 64-bit use of virtual address space.

Chapter 11 describes all the services, routines, tools, and programs that support 64-bit addressing.

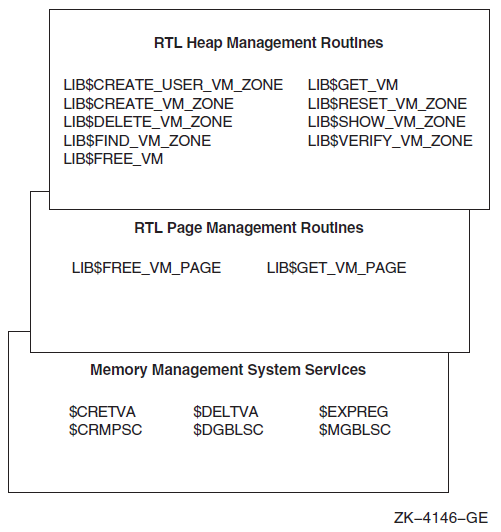

Chapter 12 describes system services and RTLs of Alpha and I64 systems to manage memory. It describes the page size and layout of virtual address space on Alpha and I64 systems. This chapter also describes how to add virtual address space, adjust working sets, control process swapping, and create and manage sections on Alpha and I64 systems.

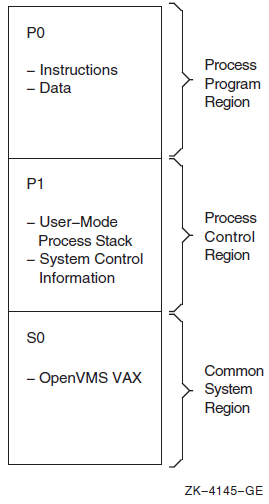

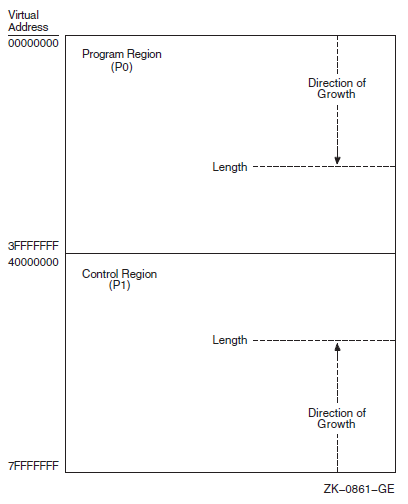

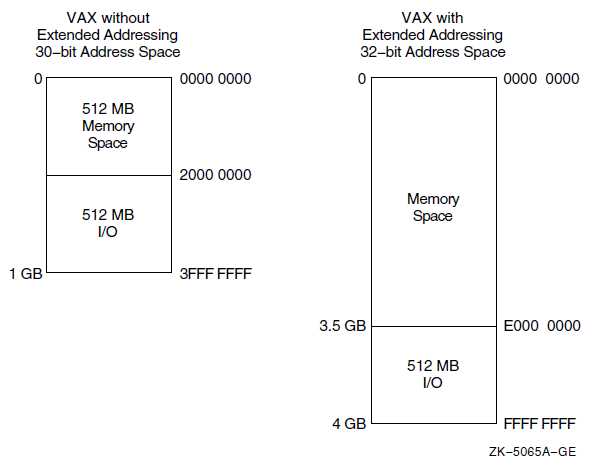

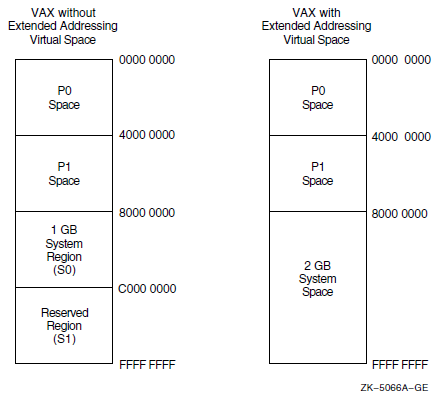

Chapter 13 describes the of system services and RTLs of VAX systems to manage memory. It describes the page size and layout of virtual address space on VAX systems. This chapter also describes how to add virtual address space, adjust working sets, control process swapping, and create and manage sections on VAX systems.

Chapter 14 describes how to use RTLs to allocate and free pages and blocks of memory, and how to use RTLs to create, manage, and debug virtual memory zones.

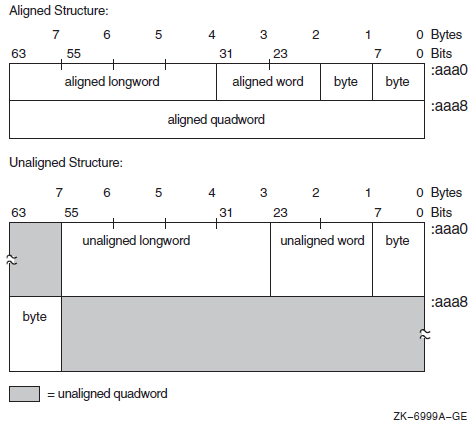

Chapter 15 describes the importance and techniques of instruction and data alignment.

- Chapter 16 describes the VLM memory management features, such as the following:

- Memory-resident global sections

- Fast I/O and buffer objects for global sections

- Shared page tables

- Expandable global page table

- Reserved memory registry

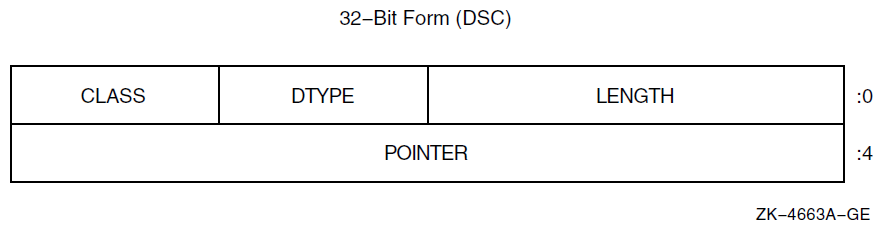

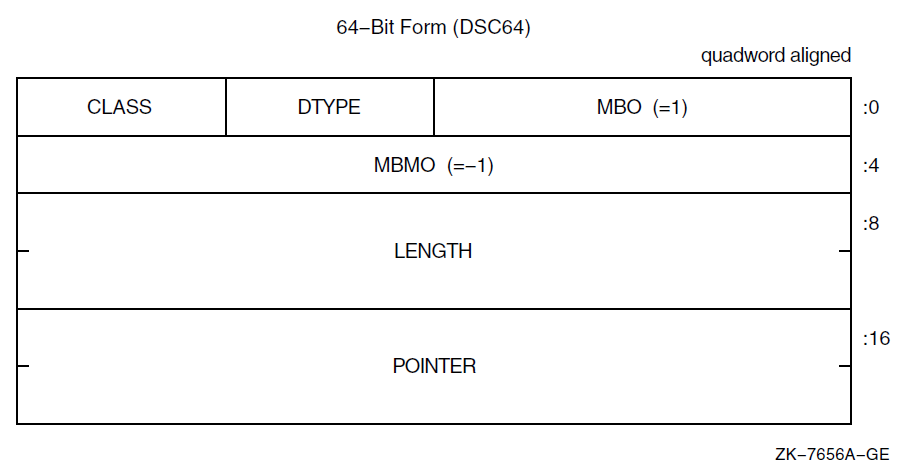

Appendix A describes the C language macros for manipulating 64-bit addresses, for checking the sign extension of the low 32 bits of 64-bit values, and for checking descriptors for the 64-bit format.

Appendix B illustrates writing a program with a 64-bit region that was created and deleted by system services.

Appendix C demonstrates the memory management VLM features described in Chapter 16.

4. Related Documents

For a detailed description of each run-time library and system service routine mentioned in this manual, see the OpenVMS Run-Time Library documentation and the VSI OpenVMS System Services Reference Manual.

VSI OpenVMS DCL Dictionary

VSI OpenVMS User's Manual

VSI OpenVMS Guide to OpenVMS File Applications

VSI OpenVMS Guide to System Security

OpenVMS Record Management Services documentation

VSI OpenVMS Utility Routines Manual

VSI OpenVMS I/O User's Reference Manual

5. VSI Encourages Your Comments

You may send comments or suggestions regarding this manual or any VSI document by sending electronic mail to the following Internet address: <docinfo@vmssoftware.com>. Users who have VSI OpenVMS support contracts through VSI can contact <support@vmssoftware.com> for help with this product.

6. OpenVMS Documentation

The full VSI OpenVMS documentation set can be found on the VMS Software Documentation webpage at https://docs.vmssoftware.com.

7. Typographical Conventions

The following conventions are used in this manual:

| Convention | Meaning |

|---|---|

Ctrl/x | A sequence such as

Ctrl/x

indicates that you must hold down the key labeled Ctrl while you

press another key or a pointing device button. |

PF1

x | A sequence such as PF1

x indicates that you

must first press and release the key labeled PF1 and then press and

release another key (x)

or a pointing device button. |

| Enter | In examples, a key name in bold indicates that you press that key. |

… |

A horizontal ellipsis in examples indicates one of the

following possibilities:

|

. . . | A vertical ellipsis indicates the omission of items from a code example or command format; the items are omitted because they are not important to the topic being discussed. |

| ( ) | In command format descriptions, parentheses indicate that you must enclose choices in parentheses if you specify more than one. |

| [ ] | In command format descriptions, brackets indicate optional choices. You can choose one or more items or no items. Do not type the brackets on the command line. However, you must include the brackets in the syntax for directory specifications and for a substring specification in an assignment statement. |

| | | In command format descriptions, vertical bars separate choices within brackets or braces. Within brackets, the choices are optional; within braces, at least one choice is required. Do not type the vertical bars on the command line. |

| { } | In command format descriptions, braces indicate required choices; you must choose at least one of the items listed. Do not type the braces on the command line. |

| bold type | Bold type represents the name of an argument, an attribute, or a reason. In command and script examples, bold indicates user input. Bold type also represents the introduction of a new term. |

| italic type | Italic type indicates important information, complete titles of manuals, or variables. Variables include information that varies in system output (Internal error number), in command lines (/PRODUCER=name), and in command parameters in text (where dd represents the predefined code for the device type). |

| UPPERCASE TYPE | Uppercase type indicates a command, the name of a routine, the name of a file, or the abbreviation for a system privilege. |

Example |

This typeface indicates code examples, command examples, and interactive screen displays. In text, this type also identifies website addresses, UNIX commands and pathnames, PC-based commands and folders, and certain elements of the C programming language. |

| – | A hyphen at the end of a command format description, command line, or code line indicates that the command or statement continues on the following line. |

| numbers | All numbers in text are assumed to be decimal unless otherwise noted. Nondecimal radixes—binary, octal, or hexadecimal—are explicitly indicated. |

Chapter 1. Overview of Manuals and Introduction to Development on OpenVMS Systems

This chapter describes the structure of this two-volume manual. This chapter also provides an overview of the OpenVMS operating system, its components, and the tools in programming software.

1.1. Overview of the Manual

|

Volume |

Part |

Description |

|---|---|---|

|

Volume I | ||

|

Part I |

Process and Synchronization. Describes the creation, communication, and control of processes. It also describes symmetric multiprocessing (SMP), and the synchronizing of data access, programming operations, and access to resources. | |

|

Part II |

Interrupts and Condition Handling. Describes the use of asynchronous system traps (ASTs), and the use of routines and services for handling conditions. | |

|

Part III |

Addressing and Memory Management. Describes 32-bit and 64-bit address space, and the support offered for 64-addressing. It also provides guidelines for 64-bit application programming interfaces (APIs); and Alpha, I64, VAX, and VLM memory management with run-time routines for memory management, and alignment on OpenVMS Alpha, VAX, and I64 systems. | |

|

Part IV |

Appendixes: Macros and Examples of 64-Bit Programming. Describes the macros used in 64-bit programming, along with two examples of 64-bit programming. | |

|

Volume II | ||

|

Part I |

OpenVMS Programming Interfaces: Calling a System Routine. Describes the basic calling format for OpenVMS routines and system services. It also describes the STARLET structures and definitions for C programmers. | |

|

Part II |

I/O, System and Programming Routines. Describes the I/O operations, and the system and programming routines used by run-time libraries and system services. | |

|

Part III |

Generic Macros for Calling System Services. Describes in appendixes the generic macros used for calling system services, OpenVMS data types, and the distributed name services on OpenVMS VAX systems. | |

1.2. Overview of the OpenVMS Operating System

The OpenVMS operating system is a highly flexible, general-purpose, multiuser system that supports the full range of computing capabilities, providing the high integrity and dependability of commercial-strength systems along with the benefits of open, distributed client/server systems.

OpenVMS operating systems can be integrated with systems from different vendors in open systems computing environments. OpenVMS supports software that conforms to international standards for an open environment. These industry-accepted, open standards specify interfaces and services that permit applications and users to move between systems and allow applications on different systems to operate together.

The OpenVMS operating system configuration includes OpenVMS integrated software, services and routines, applications, and networks. The system supports all styles of computing, from time-sharing to real-time processing to transaction processing. OpenVMS systems configured with optional software support distributed computing capabilities and can function as servers in multivendor client/server configurations.

The OpenVMS operating system is designed to provide software compatibility across all the processors on which it runs.

The following sections describe the components of the OpenVMS operating system, give a general overview of the system software, and describe the various styles of computing that OpenVMS software supports. The sections also summarize the basic ways in which OpenVMS software can be configured and connected to other software, and the hardware platforms and processors on which the OpenVMS software runs.

1.3. Components of the OpenVMS Operating System

The OpenVMS operating system is a group of software programs (or images) that control computing operations. The base operating system is made up of core components and an array of services, routines, utilities, and related software. The OpenVMS operating system serves as the foundation from which all optional software products and applications operate. The services and utilities in the base OpenVMS operating system support functions such as system management, data management, and program development. Other integrated software that adds value to the system provides functions such as clustering and volume shadowing.

Optional software products, including application programs developed by OpenVMS programmers and other programmers, run on the core operating system. The OpenVMS system supports a powerful, integrated development environment with a wide selection of software development tools. Application programs written in multiple languages provide computational, data-processing, and transaction-processing capabilities.

Compatibility Between Software Versions

User-mode programs and applications created under earlier versions of OpenVMS VAX, OpenVMS Alpha, and OpenVMS I64 run under subsequent versions with no change.

Command procedures written under one version of OpenVMS continue to run under newer versions of the software.

Most user-mode OpenVMS VAX sources can be recompiled, relinked, and run on an OpenVMS Alpha and OpenVMS I64 system without modification. Code that explicitly relies on the VAX architecture requires modification.

Most OpenVMS Alpha images run under translation on OpenVMS I64.

Translation is available for OpenVMS VAX applications without sources or that you do not want to recompile.

1.3.1. OpenVMS Systems on Multiple Platforms

A complex instruction set computer (CISC) architecture based on the VAX architecture.

A reduced instruction set computer (RISC) architecture based on the Alpha architecture.

The explicitly parallel instruction computing (EPIC) architecture used by Itanium systems.

1.3.1.1. System Compatibility and Program Portability Across Platforms

The OpenVMS Alpha and OpenVMS I64 operating systems are compatible with OpenVMS VAX systems in terms of user, system manager, and programmer environments. For general users and system managers, OpenVMS Alpha and OpenVMS I64 have the same interfaces as OpenVMS VAX. Virtually all OpenVMS VAX system management utilities, command formats, and tasks are identical in the OpenVMS Alpha and OpenVMS I64 environments. Mixed-architecture and mixed-version clusters that contain both Alpha systems and VAX systems are supported.

1.3.2. OpenVMS Computing Environments

Open system capabilities

Distributed processing capabilities

Production system capabilities

System and network management capabilities

OpenVMS software capabilities include both the standardized features of open systems computing and the commercial-strength functionality of traditional OpenVMS systems. System and network management software provides for control of heterogeneous, integrated environments.

The following sections describe the capabilities supported in OpenVMS computing environments and summarize the software resources available in each kind of environment.

1.3.2.1. Open System Capabilities

OpenVMS offers the benefits of an open system environment, which permits both applications and users to move between systems. In addition, applications on different open systems can operate together.

The OpenVMS operating system makes available a set of services in an open domain, while still offering its traditional high-integrity computing services. Incorporation of open computing capabilities enhances the traditional feature-rich OpenVMS environment.

Software in the OpenVMS open systems environment enables the development and use of portable applications and consistent user interfaces and also permits systems to operate together. The keys to openness of OpenVMS systems are standard programming interfaces, standardized user interfaces, and standard protocols.

1.3.2.2. Application Portability

Applications can be written once and run on other open platforms that support the standards used in the applications.

Users can access the wide range of applications available on open platforms.

Applications can be supplied by different vendors.

Applications that are developed on the three supported platforms and conform to open standards can be easily ported to other systems that conform to the same standard interfaces. Applications written in ISO and ANSI languages are portable to other systems. In addition, the Open Group/Motif graphical user interface supports application portability.

1.3.2.2.1. Other Application Portability Features

Applications written in ISO/ANSI languages are easily portable to other platforms that support them. OpenVMS VAX, OpenVMS Alpha, and OpenVMS I64 provide support for such languages as C, COBOL, and Fortran.

1.3.3. Distributed Computing Capabilities

In a distributed computing environment, an application is distributed over two or more systems or processors, each of which has its own autonomous operating environment. A distributed application is composed of separate modules, running on different systems, that communicate with each other by passing data between modules or by sharing access to files or databases. A distributed application must be able to coordinate its activities over a dispersed operating environment.

The distributed computing environment can consist of software located either in a single box or a single room or can comprise a worldwide network of computers. The systems in the distributed configuration can be uniprocessor, multiprocessor, or OpenVMS Cluster systems; systems from different vendors can be included in the same configuration.

1.3.3.1. Client/Server Style of Computing

A server is any system that provides a service or resource to other systems.

The client is the system requesting the service.

This style of computing allows each portion of a distributed application to run in its own optimal environment. The whole application does not have to run on one centralized system (such as a mainframe system), but enterprisewide cohesiveness can still be maintained. For example, individuals or local offices, using their own computers and running software appropriate to their needs, can be linked to large computers or OpenVMS Cluster systems in a network. A distributed computing system can function as though it were a single system that connects all parts of an enterprise. The client can have transparent access to the integrated resources of the enterprise.

Any system can be a client or a server, and some systems may include both client software for certain applications and server software for other applications. Servers can be connected to many clients, and a client can be connected to more than one server at a time. (Client and server relationships may change frequently: at times it may not be possible to tell which is the client and which is the server.) In some cases, the application is stored on the server and run on the client, using the resources of the client. The user, who does not need to know what system is serving the application, can function in a familiar, local environment.

1.3.3.2. OpenVMS Client/Server Capabilities

OpenVMS systems support a wide variety of client/server configurations. Clients requiring resources can be personal computers, workstations, point-of-sale devices, OpenVMS systems, or systems from other vendors that are running the appropriate client software. Users on client systems can use character-cell terminals or windowing desktops.

Servers fulfilling clients' requests can be located on OpenVMS systems or other operating systems running appropriate server software. OpenVMS servers, for example, can provide file access, printing, application services, communication services, and computing power as application engines to clients on desktop devices or in laboratories or factories. Client/server configurations permit the commercial-strength capabilities of OpenVMS host systems to be integrated with the personal-computing capabilities of desktop systems.

Middleware, which runs on OpenVMS and other systems from multiple vendors, can be used to tie together clients and servers. Middleware integrates various client and server systems through application, communication, data interchange, and multivendor support. Complex information-sharing environments involving PC clients and operating system servers are supported.

An essential feature of the OpenVMS operating system is its support of a rich environment for developing software application programs. The programming software integrated in the OpenVMS system provides the tools required to effectively develop new software applications. You also have the option of using additional powerful tools to enhance the productivity of software development in the OpenVMS environment.

The following sections summarize the primary program development features available on all three supported platforms. The sections also introduce the OpenVMS programming environment and present brief functional descriptions of the OpenVMS programming tools.

1.4. The OpenVMS Programming Environment

The OpenVMS system supports a flexible programming environment that offers a wide range of tools and resources to support efficient program development. This robust OpenVMS programming environment permits the development of mixed-language application programs and portable programs, as well as application programs with distributed functions that run in client/server environments. This environment also provides tools that allow you to use the web and other information technologies.

Creating, controlling, and deleting processes

Communicating with other components

Sharing resources

Implementing input/output procedures

Using security features

Managing memory

Managing files

Synchronizing events

Providing for condition handling

Calling utility routines

Language compilers, interpreters, and assemblers

Linkers and debuggers

Text processors and other program development utilities

Callable system routines such as run-time routines, system services, and other utility routines

Record Management Services (RMS) routines and utilities

Optional software development tools that run on the OpenVMS system enhance programmer productivity, saving programming time and promoting the development of error-free code. OpenVMS supports optional integrated software products that enhance program development capabilities in an organization. These software development products can make use of middleware services that facilitate the development of applications for multivendor networks and for web-enabling tools to help develop client/server applications.

Provide web access to data

Provide web access to applications

Provide web tools for commercial web servers

Provide other web tools including freeware

Middleware products and capabilities include Distributed Computing Environment (DCE), COM for OpenVMS, and Reliable Transaction Router for OpenVMS. Web-enabling tools include DECforms Web Connector, and TP Web Connector.

1.4.1. Programming to Standards

Coding of programs for the OpenVMS environment and for other environments involves conforming to software development standards. OpenVMS standards that define modular programming techniques and procedure calling and condition handling practices pertain to applications specific to OpenVMS. IEEE and international standards apply to applications developed on OpenVMS that are designed to run on other systems as well as on OpenVMS.

1.4.1.1. Common Environment for Writing Code

OpenVMS software programmers can write code in a common environment, following standard OpenVMS modular programming practices. This standard approach establishes the minimum criteria necessary to ensure the correct interface at the procedure level between software written by different programmers. If all programmers coding OpenVMS applications follow this standard approach, modular procedures added to a procedure library will not conflict with other procedures in the library. Standard modular programming practices apply to OpenVMS programs that have a public entry point. For details of this standard approach, see the Guide to Creating OpenVMS Modular Procedures.

1.4.1.2. Common Language Environment

The OpenVMS system supports a common language environment, which permits using a mixture of languages in programming. A program written in any of the programming languages supported by OpenVMS can contain calls to procedures written in other supported languages. Mixed-language programming is possible because all supported languages adhere to the OpenVMS calling standard. This standard describes the techniques used by all supported languages for invoking routines and passing data between them. It also defines the mechanisms that ensure consistency in error and exception handling routines, regardless of the mix of programming languages. Information about the calling standard appears in the VSI OpenVMS Calling Standard, and descriptions of how to use the calling interface are given in VSI OpenVMS Programming Concepts Manual, Volume II.

1.5. OpenVMS Programming Software

This section describes the integrated programming tools available on the OpenVMS operating system to help implement software development.

The phases of a typical software development life cycle can include proposal of the concept; formulation of requirements and specifications for the software product; design, implementation, and testing of the software; and integration and maintenance of the product. Implementing the software product involves building and modifying source code modules and compiling, linking, and executing the resulting images. Testing involves refining code to optimize performance.

|

Type of Software |

OpenVMS Software Components |

|---|---|

|

Text processors |

|

|

Major programming utilities |

|

|

Other program development utilities |

|

|

Callable system routines |

|

The commands used to invoke some of the programming utilities (for example, linker, debugger, LIBRARIAN) vary slightly for the three supported platforms.

1.5.1. Creating Program Source Files

OpenVMS text-processing utilities can be used to create and modify program source files. The DEC Text Processing Utility (DECTPU) is a high-performance text processor that can be used to create text-editing interfaces such as EVE. DECTPU includes a high-level procedure language with its own compiler and interpreter, as well as the customizable EVE editing interface. DECTPU features multiple buffers, windows, and subprocesses, and provides for text processing in batch mode. The EDT editor is an interactive text editor that provides editing in keypad and line modes. EDT supports multiple buffers, startup command files, and journaling. In general, the EVE editing interface offers more capability than EDT for complex editing tasks.

The vi editor is a display-oriented interactive text editor used in the POSIX for OpenVMS environment. POSIX also supports the ed and ex editors.

Other optional tools for creating source files on OpenVMS systems are available separately or as part of the VSI software development environment. The Language-Sensitive Editor/Source Code Analyzer for OpenVMS (LSE/SCA) provides a multilanguage, multivendor editor for program development and maintenance and also supplies cross-referencing features and the capability to analyze source code.

1.5.2. Creating Object Files

OpenVMS supports a variety of optional language compilers, interpreters, and assemblers that translate source code to object code (in the form of object modules). These language implementations adhere to industry standards, including ISO, ANSI, and X/Open standards as well as U.S. Federal Information Processing Standards (FIPS) and Military Standards (MIL-STD), as applicable.

| Language | Characteristics |

|---|---|

|

VSI Ada |

Complete production-quality implementation of Ada language; fully conforms to ANSI and MIL-STD standards; has Ada validation. |

|

VAX APL |

Interpreter with built-in editor, debugger, file system, communication facility. |

|

VAX BASIC |

Either an interpreter or a compiler; fully supported by the OpenVMS debugger; fully reentrant code. |

|

VSI BASIC for OpenVMS |

An optimizing compiler; highly compatible with VAX BASIC; no environment or interpreter support; also available on I64. |

|

BLISS-32 for OpenVMS |

Advanced set of language features supporting development of modular software according to structured programming concepts; also available on I64. |

|

BLISS-64 for OpenVMS |

Development of modular software support for 64-bit programs; not available on VAX. |

|

VAX C |

Full implementation of C programming language with added features for performance enhancement in the OpenVMS environment. |

|

VSI C for OpenVMS Alpha and I64 systems |

Compliant with ANSI/ISO C International Standard with VSI extensions; includes standard-conformance checking and many optional code-quality and portability diagnostics; supports 64-bit virtual addressing; generates optimized and position-independent code. |

|

VSI C++ for OpenVMS Alpha and I64 systems |

Compliant with ANSI/ISO C++ International Standard with VSI extensions; supports the ARM, GNU, and MS dialects; supports 64-bit virtual addressing; generates highly optimized object code; facilitates object-oriented program design. |

|

VSI COBOL for OpenVMS |

Compliant with ANSI-standard COBOL; includes as enhancements screen-handling, file-sharing, and report-writing facilities; is supported on I64. |

|

VAX DIBOL |

For interactive data processing; includes a compiler, debugger, and utility programs for data handling, data storing, and interprogram communication. |

|

VSI Fortran 77 for OpenVMS VAX |

Extended implementation of full language FORTRAN-77, conforming to American National Standard FORTRAN, ANSI X3.9-1978. It includes optional support for programs conforming to ANSI X3.9-1966 (FORTRAN IV) and meets Federal Information Processing Standard Publication FIPS-69-1 and MIL-STD-1753. |

|

VSI Fortran for OpenVMS |

ANSI-standard Fortran 90 and Fortran 95 optimizing compiler; available on I64 and Alpha systems. |

|

VAX MACRO |

Assembly language for programming the VAX computer under the OpenVMS operating system; uses all OpenVMS resources; supports large instruction set enabling complex programming statements. |

|

MACRO-32 Compiler |

Available on OpenVMS I64 and Alpha systems to port existing VAX MACRO code to an Alpha or I64 system. |

|

MACRO-64 Assembler |

Available on OpenVMS Alpha systems; a RISC assembly language that provides precise control of instructions and data. |

|

VSI Pascal for OpenVMS |

ANSI-standard Pascal features and language extensions that go beyond the standard; available on VAX, Alpha, and I64. |

1.5.3. Creating Runnable Programs

After a program source file is coded, it must be compiled or assembled into object modules by a language processor and then linked. The OpenVMS Linker binds the object modules into an image that can be executed on the OpenVMS operating system.

The linker processes object modules and shareable image files, as well as symbol table files, library files, and options files (used to manage the linking operation and simplify the use of complex, repetitious linker operations). The most common output of the linker is an executable image of the program. The linker can also produce a shareable image, a system image, an image map, or a symbol table file to be used by other programs being linked. Certain linking tasks, such as creating shareable images, are performed differently on OpenVMS VAX than on OpenVMS Alpha and OpenVMS I64 systems.

The Librarian utility provides for efficient storage in central, easily accessible files of object modules, image files, macros, help text, or other record-oriented information.

1.5.4. Testing and Debugging Programs

The debugger allows users to trace program execution and to display and modify register contents using the same symbols as are in the source code.

The OpenVMS Debugger (debugger), which debugs user-mode code.

TheDelta/XDelta Debugger (DELTA/XDELTA), which debugs code in other modes as well as user mode. (The DELTA debugger has not yet been ported to the OpenVMS I64 operating system).

The OpenVMS symbolic debugger is more useful than DELTA/XDELTA for most programs: the symbolic commands entered using different interfaces (keypad, command line, or file of commands) display source code lines on the screen, have more descriptive error messages, and provide help information.

Control program execution on a line-by-line basis or at a user-specified breakpoint

Display breakpoints, tracepoints, watchpoints, active routine calls, stack contents, variables, symbols, source code, and source directory search list

Define symbols

Create key definitions

Change values in variables

Evaluate a language or address expression

Create or execute debugger command procedures

The OpenVMS symbolic debugger provides enhanced support for programs that have multiple threads of execution within an OpenVMS process, including any program that uses POSIX Threads Library for developing real-time applications.

The debugger has been modified to support debugging of programs that contain 64-bit data addresses.

An additional debugger utility is available only on an OpenVMS Alpha system: the OpenVMS Alpha System-Code Debugger, which can be used to debug non-pageable system code and device drivers. The system-code debugger is a symbolic debugger that lets the user employ the familiar OpenVMS Debugger interface to observe and manipulate system code interactively as it executes. The system-code debugger can display the source code where the software is executing and allows the user to advance by source line.

Control the system software's execution, stopping at points of interest, resuming execution, intercepting fatal exceptions, and so on

Trace the execution path of the system software

Monitor exception conditions

Examine and modify the value of variables

In some cases, test the effect of modifications without having to edit the source code, recompile, and relink

You can use the OpenVMS Alpha System-Code Debugger to debug code written in the following languages: C, BLISS, and MACRO. Information about using the system-code debugger and how it differs from the OpenVMS Debugger is given in Writing OpenVMS Alpha Device Drivers in C.

1.5.4.1. Special Modes of Operation for Debugging

MULTIPROCESSING

POOLCHECK

BUGCHECKFATAL

SYSTEM_CHECK

MULTIPROCESSING is useful for debugging privileged code that uses spinlocks, such as device driver code. POOLCHECK is useful for investigating frequent and inexplicable failures in a system. When POOLCHECK is enabled, pool-checking routines execute whenever pool is deallocated or allocated. BUGCHECKFATAL is useful for debugging the executive. SYSTEM_CHECK turns on the previous three system parameters and also activates other software that aids in detecting problems. It enables a number of run-time consistency checks on system operation and records some trace information.

If you are using one of these special modes, for example, to debug a device driver or other complex application, under certain conditions generally related to high I/O loads, it is possible to incur a CPUSPINWAIT bugcheck. To prevent a CPUSPINWAIT bugcheck, use either the system default settings for these system parameters, or reduce the loading of the system.

If you have reason to change the default settings, you can reduce the likelihood of encountering a problem by setting the SMP_LNGSPINWAIT system parameter to a value of 9000000.

1.5.5. Using Other Program Development Utilities

| Utility | Function |

|---|---|

|

Command Definition Utility (CDU) |

Enables an application developer to create commands with a syntax similar to DIGITAL Command Language (DCL) commands. |

|

Message utility |

Permits user to create application messages to supplement the OpenVMS system messages. |

|

Patch utility? |

Permits users to make changes (in the form of patches) to an image or data file. If the change was made to an image, the new version can then be run without recompiling or relinking. |

|

SUMSLP utility |

Supplies batch-oriented editor used to make several updates to a single source file; one update program can be applied to all versions of a file. |

|

National character set utility |

Permits users to define non-ASCII string collating sequences and to define conversion functions; allows an RMS indexed file to be collated using user-specified collating sequences. |

|

System Dump Analyzer utility |

Determines the cause of system failures; reads the crash dump file and formats and displays it; also used to diagnose root causes that lead to an error. |

1.5.6. Managing Software Development Tasks

VSI Code Management System (CMS) for OpenVMS provides an efficient method of storing project files (such as documents, object files, and other records) and tracking all changes to these files.

VSI Module Management System (MMS) for OpenVMS automates building of software applications.

1.6. Using Callable System Routines

OpenVMS provides extensive libraries of prewritten and debugged routines that can be accessed by programs. Libraries specific to the supported platforms supply commonly needed routines optimized for the OpenVMS environment; these libraries include run-time library routines, system services, utility routines, and RMS services. These libraries are described in this section.

1.6.1. Using the POSIX Threads Library Routines

OpenVMS includes a user-mode, multithreading capability called POSIX Threads Library. POSIX Threads Library provides a POSIX 1003.1-1996 standard style threads interface. Additionally, POSIX Threads Library provides an interface that is the OpenVMS implementation of Distributed Computing Environment (DCE) threads as defined by The Open Group.

POSIX Threads Library is a library of run-time routines that allows the user to create multiple threads of execution within a single address space. With POSIX Threads Library Kernel Threads features enabled, POSIX Threads Library provides for concurrent processing across all CPUs by allowing a multithreaded application to have a thread executing on every CPU (on both symmetric and asymmetric multiprocessor systems). Multithreading allows computation activity to overlap I/O activity. Synchronization elements, such as mutexes and condition variables, are provided to help ensure that shared resources are accessed correctly. For scheduling and prioritizing threads, POSIX Threads Library provides multiple scheduling policies. For debugging multithreaded applications, POSIX Threads Library is supported by the OpenVMS Debugger. POSIX Threads Library also provides Thread Independent Services (TIS), which assist in the development of threadsafe APIs.

On OpenVMS Alpha and OpenVMS I64 systems, POSIX threads provide support to accept 64-bit parameters.

General threads

Object attributes

Mutex

Condition variable

Thread context

Thread cancellation

Thread priority and scheduling

Debugging

For more information about threads, see the Guide to POSIX Threads Library.

1.6.2. Using OpenVMS Run-Time Library Routines

The OpenVMS Run-Time Library (RTL) is a set of language-independent procedures for programs to be run specifically in the OpenVMS environment. RTL routines establish a common run-time environment for application programs written in any language supported in the OpenVMS common language environment. RTL procedures adhere to the OpenVMS calling standard and can be called from any program or program module in a language supported by OpenVMS (see Section 1.5.2).

|

Routine |

Description |

|---|---|

|

LIB$ routines |

Library routines that perform generally needed system functions such as resource allocation and common I/O procedures; provide support for 64-bit virtual addressing on Alpha and I64 systems. |

|

MTH$ routines? |

Math routines that perform arithmetic, algebraic, and trigonometric functions. |

|

DPML$ routines |

Portable Mathematics Library for OpenVMS Alpha and OpenVMS I64; a set of highly accurate mathematical functions. |

|

OTS$ routines |

Language-independent routines that perform tasks such as data conversion. |

|

SMG$ routines |

Screen management routines used in the design of complex images on a video screen. |

|

STR$ routines |

String manipulation routines. |

In addition, language-specific RTL routines support procedures in Ada, BASIC, C, COBOL, Fortran, Pascal, and PL/I (VAX only) as well as in POSIX C. VSI C RTL routines support 64-bit programming on OpenVMS Alpha and OpenVMS I64 systems.

CXML is a collection of mathematical routines optimized for Alpha systems. These subroutines perform numerically intensive operations that occur frequently in engineering and scientific computing, such as linear algebra and signal processing. CXML can help reduce the cost of computation, enhance portability, and improve productivity.

1.6.3. Using OpenVMS System Services

OpenVMS system services are procedures that control resources available to processes, provide for communication among processes, and perform basic operating system functions such as I/O coordination. Application programs can call OpenVMS system services to perform the same operations that the system services provide for the OpenVMS operating system (for example, creating a process or subprocess).

At run time, an application program calls a system service and passes control of the process to it. After execution of the system service, the service returns control to the program and also returns a condition value. The program analyzes the condition value, determines the success or failure of the system service call, and alters program execution flow as required.

| Service Group | Function |

|---|---|

|

Security |

Provides mechanisms to enhance and control system security |

|

Event flag |

Clears, sets, and reads event flags; places process in wait state until flags are set |

|

AST |

Controls handling of software interrupts called asynchronous system traps (ASTs) |

|

Logical names |

Provide a generalized logical name service |

|

Input/output |

Performs input and output operations directly at the device driver level, bypassing RMS |

|

Process control |

Creates, deletes, and controls the execution of processes (on a clusterwide basis); permits a process on one node to request creation of a detached process on another node |

|

Process information |

Provides information about processes |

|

Timer and time conversion |

Permits scheduling of program events at specific times or time intervals; supplies binary time values |

|

Condition handling |

Designates condition-handling procedures that gain control when an exception/condition occurs |

|

Memory management |

Permits control of an application program's virtual address space |

|

Change mode |

Changes the access mode of a process |

|

Lock management |

Permits cooperating processes to synchronize their access to shared resources |

|

DECdtm services |

Provide for complete and consistent execution of distributed transactions and for data integrity |

|

Cluster event notification? |

Requests notification when an OpenVMS Cluster configuration event occurs |

OpenVMS I/O system services perform logical, physical, and virtual I/O and network operations, and queue messages to system processes. The $QIO system service provides a direct interface to the operating system's I/O routines. These services are available from within most programming languages supported by OpenVMS and can be used to perform low-level I/O operations efficiently with a minimal amount of system overhead for time-critical applications.

On OpenVMS Alpha and OpenVMS I64 systems, new system services provide access to 64-bit virtual address space for process private use. Additionally, new system services are available to provide high CPU performance and improved symmetric multiprocessing (SMP) scaling of I/O operations. These services exhibit high-performance gains over the $QIO service.

DECdtm services ensure consistent execution of applications on the OpenVMS operating system. In transaction processing applications, many users may be simultaneously making inquiries and updating a database. The distributed transaction processing environment typically involves communication between networked systems at different locations. DECdtm services coordinate distributed transactions by using the two-phase commit protocol and implementing special logging and communication techniques. DECdtm services ensure that all parts of a transaction are completed or the transaction is aborted.

1.6.4. Using OpenVMS Utility Routines

|

Routine |

Utility/Facility |

|---|---|

|

ACL$ |

Access control list editor (ACL editor) |

|

CLI$ |

Command Definition Utility (CDU) |

|

CONV$ |

Convert and Convert/Reclaim utilities (CONVERT and CONVERT/RECLAIM) |

|

DCX$ |

Data Compression/Expansion facility (DCX) |

|

EDT$ |

EDT editor |

|

FDL$ |

File Definition Language utility (FDL) |

|

LBR$ |

Librarian utility (LIBRARIAN) |

|

LGI$ |

LOGINOUT routines |

|

MAIL$ |

Mail utility (MAIL) |

|

NCS$ |

National Character Set utility (NCS) |

|

PSM$ |

Print Symbiont Modification facility (PSM) |

|

SMB$ |

Symbiont/Job-Controller Interface facility (SMB) |

|

SOR$ |

Sort/Merge utility (SORT/MERGE) |

|

TPU$ |

DEC Text Processing Utility (DECTPU) |

You can use an optional, portable library of user-callable routines to perform high-performance sorting on OpenVMS Alpha systems. The high-performance sort supports a subset of the functionality present on the OpenVMS Sort/Merge utility, using the callable interface to the SOR$ routine. The high-performance sort/merge provides better performance for most sort and merge operations.

1.7. Programming User Interfaces

User interfaces to the OpenVMS VAX, OpenVMS Alpha, and OpenVMS I64 operating systems include the DCL interface and the optional DECwindows Motif for OpenVMS graphical user interface. Another user interface is through electronic forms.

You can use DCL commands to invoke program development software (compilers, editors, linkers) and to run and control execution of programs. You can use DCL command procedures to perform repetitious operations in software development.

The Command Definition Utility (CDU) enables application developers to create DCL-level commands with a syntax similar to OpenVMS DCL commands. Using CDU, the developer can create applications with user interfaces similar to those of operating system applications. The Message utility permits an application developer to create application messages to supplement the system messages supplied by the OpenVMS operating system.

The DECwindows Motif for OpenVMS software provides a consistent user interface for developing software applications and includes an extensive set of programming libraries and tools. DECwindows Motif for OpenVMS supports both the OSF/Motif standards-based graphical user interface and the X user interface (XUI) in a single run-time and development environment. DECwindows Motif requires a DECwindows X11 display server (device driver and fonts) that supports the portable compiled format (PCF), permitting use of vendor-independent fonts.

A user interface toolkit composed of graphical user interface objects (widgets and gadgets); widgets provide advanced programming capabilities that permit users to create graphic applications; gadgets, similar to widgets, require less memory to create labels, buttons, and separators

A user interface language to describe visual aspects of objects (menus, labels, forms) and to specify changes resulting from user interaction

The OSF/Motif Window Manager, which allows users to customize the interface

Standard X Window System libraries such as Xlib and the intrinsics

Libraries needed to support the current base of XUI applications

OSF/Motif toolkit support for developing applications using the Motif user interface style

VSI libraries that give users capabilities beyond the standards

1.8. Optional VSI Software Development Tools

VSI supplies optional software development tools for the OpenVMS environment, such as DECset. DECset is a set of tools that supports software coding, testing, and maintenance of applications and data. These tools can be used individually or as part of the optional VSI software development environment.

1.9. Managing Data

The basic OpenVMS tool for transparent, intuitive management of data is the Record Management Services (RMS) subsystem. RMS is a collection of routines that gives programmers a device-independent method for storing, retrieving, and modifying data for their application. RMS also provides extensive protection and reliability features to ensure data integrity.

RMS is a higher level interface to the file system and OpenVMS I/O subsystem. It is used by all products that run on OpenVMS VAX, OpenVMS Alpha, and OpenVMS I64 for file and record operations. A subset of RMS services permits network file operations that are generally transparent to the user.

On OpenVMS Alpha and OpenVMS I64 systems, RMS supports I/O operations to and from 64-bit addressable space.

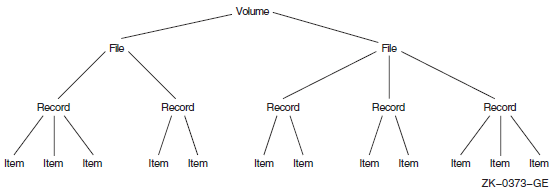

1.9.1. RMS Files and Records

RMS supports a variety of file organizations, record formats, and record-access modes. RMS supports sequential, relative, and indexed disk file organizations, and fixed- and variable-length records. It supports a number of record-access modes: sequential, by key value, by relative record number, or by record file address. RMS is designed primarily for mass storage devices (disks and tapes), but also supports unit-record devices such as terminals or printers.

Creating new files, accessing existing files, extending disk space for files, closing files, and obtaining file characteristics

Getting, locating, inserting, updating, and deleting records in files

RMS promotes safe and efficient file sharing by providing multiple access modes, automatic record locking when applicable, and optional buffer sharing by multiple processes.

1.9.2. RMS Utilities

RMS file utilities allow users to analyze the internal structure of an RMS file and to determine the most appropriate set of parameters to tune an RMS file. RMS utilities can also be used to create, efficiently load, and reclaim space in an RMS file.

Analyze/RMS_File utility

File Definition Language utilities (Create/FDL and Edit/FDL)

Convert and Convert/Reclaim utilities

The Analyze/RMS_File utility allows the programmer to analyze the internal structure of an OpenVMS RMS file and generate a report on its structure and use, as well as interactively explore the file's structure. The utility can generate an FDL file from an RMS file for use with the Edit/FDL utility to optimize the data file.

File Definition Language (FDL) is a special-purpose language for specifying file characteristics; it is useful with higher level languages or for ensuring that files are properly tuned. FDL makes use of RMS control blocks: the file access block (FAB), the record access block (RAB), and the extended attribute block (XAB).

The Edit/FDL utility creates a new FDL file according to user specifications. The Create/FDL utility uses the specifications of an existing FDL file to create a new empty data file.

You can use the Convert utility to copy records from one file to another, while changing the record format and file organization, and to append records to an existing file. The Convert/Reclaim utility reclaims empty bucket space in an indexed file to allow new records to be written to it.

Part I. Process and Synchronization

This part describes the creation, communication, and control of processes. It also describes symmetric multiprocessing (SMP), and the synchronizing of data access, programming operations, and access to resources.

Chapter 2. Process Creation

This chapter describes process creation and the different types of processes. It also describes kernel threads and the kernel threads process structure.

2.1. Process Types

A process is the environment in which an image executes. Two types of processes can be created with the operating system: spawned subprocesses or detached processes.

A spawned subprocess is dependent on the process that created it (its parent), and receives a portion of its parent process's resource quotas. The system deletes the spawned subprocess when the parent process exits.

A detached process is independent of the process that created it. The process the system creates when you log in is, for example, a detached process. If you want a created process to continue after the parent exits, or not to share resources with the parent, use a detached process.

|

Characteristic |

Subprocess |

Detached Process |

|---|---|---|

|

Privileges |

Received from creating process. |

Specified by creating process. |

|

Quotas and limits |

Some shared with creating process. |

Specified by creating process, but not shared with creating process. |

|

User authorization file |

Used for information not given by creating process. |

Used for most information not given by creating process. |

|

User identification code |

Received from creating process. |

Specified by creating process. |

|

Restrictions |

Exist as long as creating process exists. |

None. |

|

How created |

SYS$CREPRC, or LIB$SPAWN from another process. |

SYS$CREPRC from another process. |

|

When deleted |

When creating process exits, or at image exit or logout, depending on whether a CLI is present. |

At image exit or logout, depending on whether a CLI is present. |

|

Command language interpreter (CLI) present |

Usually not if created with SYS$CREPRC; always yes if spawned. |

Usually present, but not necessarily. |

2.2. Execution Context of a Process

Image that the process is executing

Input and output streams for the image executing in the process

Disk and directory defaults for the process

System resource quotas and user privileges available to the process

When the system creates a detached process as the result of a login, it uses the system user authorization file (SYSUAF.DAT) to determine the process's execution context.

The process created for you executes the image LOGINOUT.

The terminal you are using is established as the input, output, and error stream device for images that the process executes.

Your disk and directory defaults are taken from the user authorization file.

The resource quotas and privileges you have been granted by the system manager are associated with the created process.

A command language interpreter (CLI) is mapped into the created process.

2.3. Modes of Execution of a Process

Interactive—Receives input from a record-oriented device, such as a terminal or mailbox

Batch—Is created by the job controller and is not interactive

Network—Is created by the network ancillary control program (ACP)

Other—Is not running in any of the other modes (for example, a spawned subprocess where input is received from a command procedure)

2.4. Creating a Subprocess

You can create a subprocess using the LIB$SPAWN run-time library routines, the SYS$CREPRC system service, or the C system() call. A subprocess created with LIB$SPAWN is called a spawned subprocess.

2.4.1. Naming a Spawned Subprocess

As of OpenVMS Version 7.3-1, the way OpenVMS names spawned

subprocesses was changed to improve performance. Prior to OpenVMS

Version 7.3-1, if no process name was supplied, the system

constructed a name by appending _n to the user

name, where n was the next available nonduplicate

integer for any process currently in the system. For example, the

first spawned process from the SYSTEM would be called SYSTEM_1, the

second, SYSTEM_2, and so on. The next available number was chosen as

soon as a gap was found.

With OpenVMS Version 7.3-1, the default-constructed process name for subprocesses was changed. Instead of searching incrementally for the next unique number, a random number is chosen to append to the user name. Therefore, the first processes that are spawned from user SYSTEM might be SYSTEM_154, SYSTEM_42, SYSTEM_87, and so on. This procedure results in a very high probability of finding a unique name on the first try, because it is unlikely that the same number is already in use. This procedure greatly reduces the cost of process creation, and applications that rely on spawned subprocesses might see a dramatic performance improvement with this change.

However, some applications might rely on the prior method of assigning subprocess names. The DCL_CTLFLAGS parameter, a bitmask used to alter default behavior for certain commands on a systemwide basis, is available to allow you to configure the system as necessary. The low bit of the bitmask is defined, and it controls the default process-name assignment for a subprocess created using the SPAWN command or LIB$SPAWN routine.

If the bit is clear, the new behavior (beginning with OpenVMS Version 7.3-1) is used. If you do not specify a process name, the system assigns the user name with a random number suffix. This is the default setting.

If the bit is set, the old behavior is used. If you do not specify a process name, the system assigns the user name with the next available number.

2.4.2. Using LIB$SPAWN to Create a Spawned Subprocess

The LIB$SPAWN routine enables you to create a subprocess and to set some context options for the new subprocess. LIB$SPAWN creates a subprocess with the same priority as the parent process (generally priority 4). The format for LIB$SPAWN is:

LIB$SPAWN ([command_string],[input_file],[output_file],[flags],[process-name], [process_id],[completion_status],[completion_efn],[completion_astadr], [completion_astarg],[prompt],[cli])

For complete information on using each argument, refer to the LIB$SPAWN routine in VSI OpenVMS RTL Library (LIB$) Manual.

Specifying a Command String

Use the command_string argument to specify a

single DCL command to execute once the subprocess is initiated. You

can also use this argument to execute a command procedure that, in

turn, executes several DCL commands

(@command_procedure_name).

Redefining SYS$INPUT and SYS$OUTPUT

Use the input_file and

output_file arguments to specify

alternate input and output devices for

SYS$INPUT and

SYS$OUTPUT. Using alternate values

for SYS$INPUT and

SYS$OUTPUT can be particularly

useful when you are synchronizing processes that are executing

concurrently.

Passing Parent Process Context Information to the Subprocess

Use the flags argument to specify which

characteristics of the parent process are to be passed on to the

subprocess. With this argument, you can reduce the time required to

create a subprocess by passing only a part of the parent's context.

You can also specify whether the parent process should continue to

execute (execute concurrently) or wait until the subprocess has

completed execution (execute in line).

After the Subprocess Completes Execution

Use the completion_status,

completion_efn, and

completion_astadr arguments to

specify the action to be taken when the subprocess completes

execution (send a completion status, set a local event flag, or

invoke an AST procedure). For more information about event flags and

ASTs, refer to Chapter 8.

The LIB$SPAWN routine and SPAWN command do not return a completion status code of 0 from a subprocess command procedure.

The LIB$SPAWN routine can fail in a detached process as well, because it is dependent upon and requires the presence of a command language interpreter (CLI), such as DCL. Without a CLI present in the current process, this call fails with a"NOCLI, no CLI present to perform function" error. Note that a detached process may not have a CLI present.

You can use SYS$CREPRC in place of LIB$SPAWN; though with SYS$CREPRC the context of the parent process (symbols and logical names) is not propagated into the subprocess.

When using LIB$SPAWN asynchronously (with CLI$M_NOWAIT), you have to synchronize completion. For if the parent process should exit, all subprocesses exit, potentially resulting in an unexpected series of failures of all subprocesses of the exiting parent process.

Specifying an Alternate Prompt String

Use the prompt argument to specify a prompt

string for the subprocess.

Specifying an Alternate Command Language Interpreter

Use the cli argument to specify a command

language interpreter for the subprocess.

Examples of Creating Subprocesses

! Declare status and library routine INTEGER STATUS, LIB$SPAWN STATUS = LIB$SPAWN ('@COMMANDS')

#include <descrip.h> #include <lib$routines.h> #include <ssdef.h> #include <stsdef.h> main() { int RetStat; $DESCRIPTOR( CmdDsc, "@COMMANDS" ); RetStat = lib$spawn( &CmdDsc ); if (!$VMS_STATUS_SUCCESS( RetStat )) return RetStat; return SS$_NORMAL; }

! Mask for LIB$SPAWN INTEGER MASK EXTERNAL CLI$M_NOCLISYM, 2 CLI$M_NOLOGNAM, 2 CLI$M_NOKEYPAD ! Declare status and library routine INTEGER STATUS, LIB$SPAWN ! Set mask and call LIB$SPAWN MASK = %LOC(CLI$M_NOCLISYM) .OR. 2 %LOC(CLI$M_NOLOGNAM) .OR. 2 %LOC(CLI$M_NOKEYPAD) STATUS = LIB$SPAWN ('@COMMANDS.COM', 2 ,, 2 MASK)

#include <clidef.h> #include <descrip.h> #include <lib$routines.h> #include <ssdef.h> #include <stsdef.h> main() { int RetStat; int FlagsMask = CLI$M_NOCLISYM | CLI$M_NOLOGNAM | CLI$M_NOKEYPAD; $DESCRIPTOR( CmdDsc, "@COMMANDS.COM" ); RetStat = lib$spawn( &CmdDsc, 0, 0, &FlagsMask ); if (!$VMS_STATUS_SUCCESS( RetStat )) return RetStat; return SS$_NORMAL; }

! Mask for LIB$SPAWN EXTERNAL CLI$M_NOWAIT ! Declare status and library routine INTEGER STATUS, LIB$SPAWN STATUS = LIB$SPAWN ('RUN $DISK1:[USER.MATH]CALC', ! Image 2 'DATA84.IN', ! Input 2 'DATA84.RPT', ! Output 2 %LOC(CLI$M_NOWAIT)) ! Concurrent

#include <clidef.h> #include <descrip.h> #include <lib$routines.h> #include <ssdef.h> #include <stsdef.h> main() { int RetStat; int FlagsMask = CLI$M_NOWAIT; $DESCRIPTOR( CmdDsc, "RUN $DISK1:[USER.MATH]CALC" ); $DESCRIPTOR( InpDsc, "DATA84.IN" ); $DESCRIPTOR( OutDsc, "DATA84.RPT" ); RetStat = lib$spawn( &CmdDsc, &InpDsc, &OutDsc, &FlagsMask ); if (!$VMS_STATUS_SUCCESS( RetStat )) return RetStat; return SS$_NORMAL; }

2.4.3. Using the C system() Call

#include <ssdef.h>

#include <stdio.h>

#include <stdlib.h>

main()

{

printf("calling system() \n");

system("show system");

printf("done\n");

return SS$_NORMAL;

}This example shows the use of the system() call to spawn a DCLSHOW SYSTEM command; it subsequently returns and the execution of the main() image continues.

2.4.4. Using SYS$CREPRC to Create a Subprocess

The Create Process (SYS$CREPRC) system

service creates both subprocesses and detached processes. This

section discusses creating a subprocess; Section 2.5 describes creating a detached

process. When you call the SYS$CREPRC

system service to create a process, you define the context by

specifying arguments to the service. The number of subprocesses a

process can create is controlled by its

PQL$_PRCLM subprocess quota, an

individual quota description under the quota

argument.

Though SYS$CREPRC does not set many context values for the subprocess by default, it does allow you to set many more context values than LIB$SPAWN. For example, you cannot specify separate privileges for a subprocess with LIB$SPAWN directly, but you can with SYS$CREPRC.

By default, SYS$CREPRC creates a subprocess rather than a detached process. The format for SYS$CREPRC is as follows:

SYS$CREPRC ([pidadr] ,[image] ,[input] ,[output] ,[error] ,[prvadr] ,[quota], [prcnam] ,[baspri] ,[uic] ,[mbxunt] ,[stsflg] ,[itemlst] ,[node])

Ordinarily, when you create a subprocess, you need only assign it an image to execute and, optionally, the SYS$INPUT, SYS$OUTPUT, and SYS$ERROR devices. The system provides default values for the process's privileges, resource quotas, execution modes, and priority. In some cases, however, you may want to define these values specifically. The arguments to the SYS$CREPRC system service that control these characteristics follow. For details, see the descriptions of arguments to the SYS$CREPRC system service in the VSI OpenVMS System Services Reference Manual.

The default values passed into the subprocess might not be complete enough for your use. The following sections describe how to modify these default values with SYS$CREPRC.

Redefining SYS$INPUT, SYS$OUTPUT, and SYS$ERROR

Use the input, output,

and error arguments to specify alternate

input, output, and error devices for

SYS$INPUT,

SYS$OUTPUT, and

SYS$ERROR. Using alternate values

for SYS$INPUT,

SYS$OUTPUT, and

SYS$ERROR can be particularly

useful when you are synchronizing processes that are executing

concurrently. By providing alternate equivalence names for the

logical names SYS$INPUT,

SYS$OUTPUT, and

SYS$ERROR, you can place these

logical name/equivalence name pairs in the process logical name

table for the created process.

#include <descrip.h>

#include <ssdef.h>

#include <starlet.h>

#include <stdio.h>

#include <stsdef.h>

// Comment syntax here assumes compiler support

main()

{

int RetStat;

$DESCRIPTOR(input,"SUB_MAIL_BOX"); // Descriptor for input stream

$DESCRIPTOR(output,"COMPUTE_OUT"); // Descriptor for output and error

$DESCRIPTOR(image,"COMPUTE.EXE"); // Descriptor for image name

// Create the subprocess

RetStat = sys$creprc( 0, // process id

&image, // image

&input,  // input SYS$INPUT device

&output,

// input SYS$INPUT device

&output,  // output SYS$OUTPUT device

&output,

// output SYS$OUTPUT device

&output,  // error SYS$ERROR device

0,0,0,0,0,0,0);

if (!$VMS_STATUS_SUCCESS( RetStat ))

return RetStat;

return SS$_NORMAL;

}

}

// error SYS$ERROR device

0,0,0,0,0,0,0);

if (!$VMS_STATUS_SUCCESS( RetStat ))

return RetStat;

return SS$_NORMAL;

}

} The | |

The | |

The |

The SYS$CREPRC system service does not provide default equivalence names for the logical names SYS$INPUT, SYS$OUTPUT, and SYS$ERROR. If none are specified, any entries in the group or system logical name tables, if any, may provide equivalences. If, while the subprocess executes, it reads or writes to one of these logical devices and no equivalence name exists, an error condition results.

The SYS$CREPRC system service also does not provide default equivalence names for the logical names SYS$LOGIN, SYS$LOGIN_DEVICE, and SYS$SCRATCH. These logical names are available to the created process only when the specified image is LOGINOUT, and when the PRC$M_NOUAF flag is not set.

Use the Get Device/Volume Information (SYS$GETDVIW) system service to obtain the device name for the logical name SYS$INPUT, SYS$OUTPUT, or SYS$ERROR.

Specify the address of the descriptor returned by the SYS$GETDVIW service when you specify the

input,output, orerrorargument to the SYS$CREPRC system service.

#include <descrip.h> #include <dvidef.h> #include <efndef.h> #include <lib$routines.h> #include <ssdef.h> #include <starlet.h> #include <stdio.h> #include <stsdef.h> // Comment syntax used here assumes compiler support main() { #define MAXTERMLEN 64 #define MAXITMLST 3 char TermName[MAXTERMLEN]; int BasPri = 4; int RetStat; int TermLen; unsigned short int IOSB[4]; // ItemList data structures used to acquire device name int i; struct { unsigned short int BufLen; unsigned short int ItmCod; void *BufAdr; void *BufRLA; } ItmLst[MAXITMLST]; // Descriptors for sys$getdviw call $DESCRIPTOR( SysInput, "SYS$INPUT" ); // Descriptors for sys$creprc call $DESCRIPTOR( ImageDesc,"SYS$SYSTEM:LOGINOUT.EXE"); struct dsc$descriptor TermDesc = { MAXTERMLEN, DSC$K_DTYPE_T, DSC$K_CLASS_S, TermName }; // Assign values to the item list i = 0; ItmLst[i].BufLen = MAXTERMLEN; ItmLst[i].ItmCod = DVI$_DEVNAM; ItmLst[i].BufAdr = &TermName; ItmLst[i++].BufRLA = &TermLen; ItmLst[i].BufLen = 0; ItmLst[i].ItmCod = 0; ItmLst[i].BufAdr = NULL; ItmLst[i++].BufRLA = NULL; // Acquire the terminal device name RetStat = sys$getdviw( EFN$C_ENF, // no event flag needed here 0, // Channel (not needed here) &SysInput, // Device Name ItmLst, // item list IOSB, // Address of I/O Status Block 0,0,0); if (!$VMS_STATUS_SUCCESS( RetStat )) lib$signal( RetStat ); if (!$VMS_STATUS_SUCCESS( IOSB[0] )) lib$signal( IOSB[0] ); // Create the subprocess RetStat = sys$creprc( 0, &ImageDesc, // The image to be run &TermDesc, // Input (SYS$INPUT device) &TermDesc, // Output (SYS$OUTPUT device) &TermDesc, // Error (SYS$ERROR device) 0,0,0, &BasPri, // Process base priority 0,0,0); if (!$VMS_STATUS_SUCCESS( RetStat )) lib$signal( RetStat ); return SS$_NORMAL; }

Use OpenVMS RMS to open the device for reading or writing, or both.

Use the Assign I/O Channel (SYS$ASSIGN) system service to assign an I/O channel to the device for input/output operations.

int RetStat;

unsigned short int IOchan;

$DESCRIPTOR(DevNam,"SYS$OUTPUT");

.

.

.

RetStat = sys$assign( &DevNam, /* Device name */

&IOchan, /* Channel */

0, 0, 0);

if ($!VMS_STATUS_SUCCESS( RetStat ))

return RetStat;For more information about channel assignment for I/O operations, see VSI OpenVMS Programming Concepts Manual, Volume II.

Setting Privileges

Set different privileges by defining the privilege list for the

subprocess using the prvadr argument. This is

particularly useful when you want to dedicate a subprocess to

execute privileged or sensitive code. If you do not specify this

argument, the privileges of the calling process are used. If you

specify the prvadr argument, only the

privileges specified in the bit mask are used; the privileges of the

calling process are not used. For example, a creating process has

the user privileges GROUP and TMPMBX. It creates a process,

specifying the user privilege TMPMBX. The created process receives

only the user privilege TMPMBX; it does not have the user privilege

GROUP.

If you need to create a process that has a privilege that is not one of the privileges of your current process, you must have the user privilege SETPRV.

unsigned int PrivQuad[2] = { (PRV$M_GRPNAM | PRV$M_GROUP), 0};

// could also use: __int64 PrivQuad = PRV$M_GRPNAM | PRV$M_GROUP;Setting Process Quotas

Set different process quotas by defining the quota list of system

resources for the subprocess using the quota

argument. This option can be useful when managing a subprocess to

limit use of system resources (such as AST usage, I/O, CPU time,

lock requests, and working set size and expansion). If you do not

specify this argument, the system defines default quotas for the

subprocess.

#pragma environment save

#pragma nomember_alignment

struct

{

unsigned char pql_code;

unsigned long int pql_value;

} pql[] =

{

{ PQL$_ASTLM, 600 },

{ PQL$_BIOLM, 100 },

{ PQL$_BYTLM, 131072 },

{ PQL$_CPULM, 0 },

{ PQL$_DIOLM, 100 },

{ PQL$_FILLM, 50 },

{ PQL$_PGFLQUOTA, 40960 },

{ PQL$_PRCLM, 16 },

{ PQL$_TQELM, 600 },

{ PQL$_WSDEFAULT, 512 },

{ PQL$_WSQUOTA, 2048 },

{ PQL$_ENQLM, 600 },

{ PQL$_WSEXTENT, 4096 },

{ PQL$_JTQUOTA, 4096 },

{ PQL$_LISTEND, 0 }

};

#pragma environment restoreFor more information about process quotas and process quota lists, see Section 2.6.

Setting the Subprocess Priority

Set the subprocess priority by setting the base execution priority

with the baspri argument. If you do not set

the subprocess priority, the priority defaults to 2 for MACRO and

BLISS and to 0 for all other languages. If you want a subprocess to

have a higher priority than its creator, you must have the user

privilege ALTPRI to raise the priority level.

Specifying Additional Processing Options

Enable and disable parent and subprocess wait mode, control process

swapping, control process accounting, control process dump

information, control authorization checks, and control working set

adjustments using the stsflg argument. This

argument defines the status flag, a set of bits that controls some

execution characteristics of the created process, including resource

wait mode and process swap mode.

Defining an Image for a Subprocess to Execute

image argument to provide the process

with the name of an image to execute. For example, the following

lines of C create a subprocess to execute the image named

CARRIE.EXE:$DESCRIPTOR(image,"CARRIE"); . . . RetStat = sys$creprc(0, &image, ...);

In this example, only a file name is specified; the service uses current disk and directory defaults, performs logical name translation, uses the default file type .EXE, and locates the most recent version of the image file. When the subprocess completes execution of the image, the subprocess is deleted. Process deletion is described in Chapter 4.

2.4.4.1. Disk and Directory Defaults for Created Processes

When you use the SYS$CREPRC system service to create a process to execute an image, the system locates the image file in the default device and directory of the created process. Any created process inherits the current default device and directory of its creator.

If a created process runs an image that is not in its default directory, you must identify the directory and, if necessary, the device in the file specification of the image to be run.

There is no way to define a default device or directory for the created process that is different from that of the creating process in a call to SYS$CREPRC. The created process can, however, define an equivalence for the logical device SYS$DISK by calling the Create Logical Name ($CRELNM) system service.

If the process is a subprocess, you, in the creating process, can define an equivalence name in the group logical name table, job logical name table, or any logical name table shared by the creating process and the subprocess. The created process then uses this logical name translation as its default directory. The created process can also set its own default directory by calling the OpenVMS RMS default directory system service, SYS$SETDDIR.

Make a call to SYS$SETDDIR to change its own default directory.

Make a call to SYS$CREPRC to create the new process.

Make a call to SYS$SETDDIR to change its own default directory back to the default directory it had before the first call to SYS$SETDDIR.

The creating process now has its original default directory. The new process has the different default directory that the creating process had when it created the new process. If the default device is to change, you must also redefine the SYS$DISK logical name. For details on how to call SYS$SETDDIR, see the VSI OpenVMS System Services Reference Manual.

2.5. Creating a Detached Process

The creation of a detached process is primarily a task the operating system performs when you log in. In general, an application creates a detached process only when a program must continue executing after the parent process exits. To do this, you should use the SYS$CREPRC system service.

You can use the uic argument to the

SYS$CREPRC system service to define whether

a process is a subprocess or a detached process. The

uic argument provides the created process

with a user identification code (UIC). If you omit the

uic argument, the

SYS$CREPRC system service creates a

subprocess that executes under the UIC of the creating process. If you

specify a uic argument with the same UIC as the

creating process, the system service creates a detached process with the