Volume Shadowing Guide

Operating System and Version:

- VSI OpenVMS IA-64 Version 8.4-1H1 or higher
- VSI OpenVMS Alpha Version 8.4-2L1 or higher
- VSI OpenVMS x86-64 Version 9.2-2 or higher

Preface

This manual explains how to use VSI Volume Shadowing for OpenVMS to replicate data transparently on multiple disks and to provide high data availability.

1. About VSI

VMS Software, Inc. (VSI) is an independent software company licensed by Hewlett Packard Enterprise to develop and support the OpenVMS operating system.

2. Intended Audience

This book is intended for system managers and system users who want to:

- Understand how Volume Shadowing for OpenVMS works
- Configure shadowed data storage subsystems to maximize data availability
- Set up and manage shadow sets
- Enhance shadow set performance

Although you do not need any previous volume shadowing experience to use the volume shadowing software or this documentation, you do need to be familiar with the OpenVMS operating system, the OpenVMS Mount utility or OpenVMS system services, and setting system parameters.

3. Document Structure

| Chapter | Contents |

|---|---|

| Chapter 1, "Introduction to Volume Shadowing for OpenVMS" | Introduces Volume Shadowing for OpenVMS and describes how it provides high data availability. |

| Chapter 2, "Configuring Your System for High Data Availability" | Illustrates various shadow set configurations. |

| Chapter 3, "Preparing to Use Volume Shadowing" | Describes how to set up a volume shadowing environment, including information about setting shadowing system parameters, booting a system that uses a system disk in a shadow set, and booting satellite nodes from a shadowed system disk. |

| Chapter 4, "Creating and Managing Shadow Sets Using DCL Commands" | Describes how to use DCL commands to create, mount, dismount,

and dissolve shadow sets. The chapter also describes how to use

the SHOW DEVICESSHOW DEVICES

command, the System Dump Analyzer, and the F$GETDVI lexical

function to obtain information about shadow sets on a running

system. |

| Chapter 5, "Creating and Managing Shadow Sets with System Services" | Describes how to use the OpenVMS system services in a user-written program to create and manage shadow sets. The chapter also describes how to use the $GETDVI system service to obtain information about shadow sets. |

| Chapter 6, "Ensuring Shadow Set Consistency" | Describes how the copy and merge operations maintain data consistency and availability during changes in shadow set membership. |

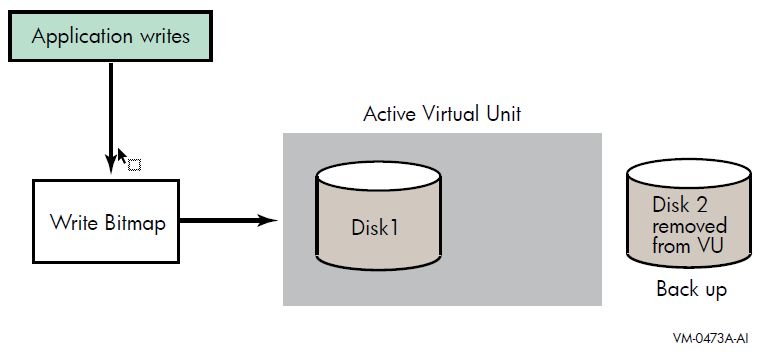

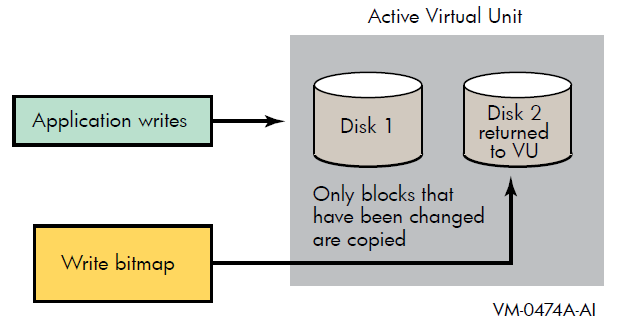

| Chapter 7, "Using Minicopy for Backing Up Data" | Describes how the minicopy operation can be used, in a carefully controlled environment, to reduce the time required for a member to be returned to a shadow set. Typically, the member is removed for backing up data. |

| Chapter 8, "Host-Based Minimerge (HBMM)" | Describes how to use host-based minimerge (HBMM) to shorten the time of a merge operation. |

| Chapter 9, "Performing System Management Tasks on Shadowed Systems" | Describes how to perform system management tasks on shadow sets, including performing backup and upgrade operations, performing shadowing operations in OpenVMS Cluster systems, and handling crash dumps on the shadow set. |

| Chapter 10, "Performance Information for Volume Shadowing" | Includes helpful information and guidelines for achieving better performance from shadow sets. |

| Appendix A, "Messages" | Lists messages related to volume shadowing that are returned by the Mount utility and the VOLPROC, shadow server, and OPCOM facilities. |

4. Related Documents

The following documents contain information related to this manual:

- VSI OpenVMS License Management Utility Guide
- VSI OpenVMS Cluster Systems Manual
- Guidelines for OpenVMS Cluster Configurations
- VSI OpenVMS DCL Dictionary: A–M
- VSI OpenVMS DCL Dictionary: N–Z
- VSI OpenVMS System Manager's Manual, Volume 1: Essentials
- VSI OpenVMS System Manager's Manual, Volume 2: Tuning, Monitoring, and Complex Systems
- VSI OpenVMS System Management Utilities Reference Manual, Volume 1: A–L
- VSI OpenVMS System Management Utilities Reference Manual, Volume 2: M–Z
- VSI OpenVMS System Analysis Tools Manual
- VSI OpenVMS System Services Reference Manual: A–GETUAI
- VSI OpenVMS System Services Reference Manual: GETUTC–Z

5. VSI Encourages Your Comments

You may send comments or suggestions regarding this manual or any VSI document by sending electronic mail to the following Internet address: <docinfo@vmssoftware.com>. Users who have VSI OpenVMS support contracts through VSI can contact <support@vmssoftware.com> for help with this product.

6. OpenVMS Documentation

The full VSI OpenVMS documentation set can be found on the VMS Software Documentation webpage at https://docs.vmssoftware.com.

7. Typographical Conventions

| Convention | Meaning |
|---|---|
| Ctrl/x | A sequence such as Ctrl/x indicates that you must hold down the key labeled Ctrl while you press another key or a pointing device button. |
| PF1 x | A sequence such as PF1 x indicates that you must first press and release the key labeled PF1 and then press and release another key or a pointing device button. |
| Return | In examples, a key name enclosed in a box indicates that you press a key on the keyboard. (In text, a key name is not enclosed in a box.) |
| ... | A horizontal ellipsis in examples indicates one of the following possibilities: additional optional arguments in a statement have been omitted; the preceding item or items can be repeated one or more times; or additional parameters, values, or other information can be entered. |
| . . . | A vertical ellipsis indicates the omission of items from a code example or command format; the items are omitted because they are not important to the topic being discussed. |
| ( ) | In command format descriptions, parentheses indicate that you must enclose the options in parentheses if you choose more than one. |
| [ ] | In command format descriptions, brackets indicate optional choices. You can choose one or more items or no items. Do not type the brackets on the command line. However, you must include the brackets in the syntax for OpenVMS directory specifications and for a substring specification in an assignment statement. |
| \| | In command format descriptions, vertical bars separate choices within brackets or braces. Within brackets, the choices are options; within braces, at least one choice is required. Do not type the vertical bars on the command line. |
| { } | In command format descriptions, braces indicate required choices; you must choose at least one of the items listed. Do not type the braces on the command line. |
| bold text | This typeface represents the introduction of a new term. It also represents the name of an argument, an attribute, or a reason. |
| italic text | Italic text indicates important information, complete titles of manuals, or variables. Variables include information that varies in system output (Internal error number), in command lines (/PRODUCER=name), and in command parameters in text (where dd represents the predefined code for the device type). |
| UPPERCASE TEXT | Uppercase text indicates a command, the name of a routine, the name of a file, or the abbreviation for a system privilege. |
| Monospace type | Monospace type indicates code examples and interactive screen displays. In the C programming language, monospace type in text identifies the following elements: keywords, the names of independently compiled external functions and files, syntax summaries, and references to variables or identifiers introduced in an example. |
| Bold monospace type | Bold monospace type indicates DCL commands and qualifiers that can be entered at the command prompt. |
| - | A hyphen at the end of a command format description, command line, or code line indicates that the command or statement continues on the following line. |
| numbers | All numbers in text are assumed to be decimal unless otherwise noted. Nondecimal radixes (binary, octal, or hexadecimal) are explicitly indicated. |

Chapter 1. Introduction to Volume Shadowing for OpenVMS

This chapter introduces VSI Volume Shadowing for OpenVMS and describes how volume shadowing, sometimes referred to as disk mirroring, achieves high data availability.

1.1. Overview

VSI Volume Shadowing for OpenVMS ensures that data is available to your applications and end users by duplicating data on multiple disks. Because the same data is recorded on multiple disk volumes, if one disk fails, the remaining disk or disks can continue to service I/O requests. VSI Volume Shadowing for OpenVMS is available, with all of its features, on VSI OpenVMS Integrity server, OpenVMS Alpha, and OpenVMS x86 systems.

An implementation of RAID 1 (redundant arrays of independent disks) technology, Volume Shadowing for OpenVMS prevents a disk device failure from interrupting system and application operations. By duplicating data on multiple disks, volume shadowing transparently prevents your storage subsystems from becoming a single point of failure, whether the failure is caused by media deterioration, a communication path failure, or a controller or device failure.

Any device that OpenVMS designates as a disk class device can be used in a shadow set.

You can mount up to six compatible disk volumes, including the system disk, to form a shadow set.

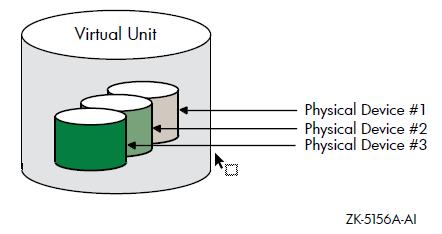

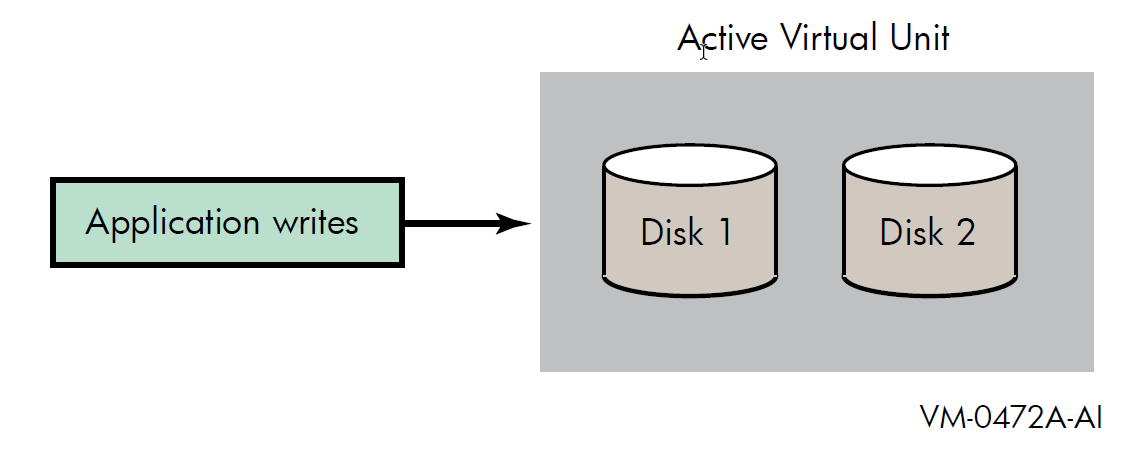

Each disk in the shadow set is a shadow set member. Volume Shadowing for OpenVMS logically binds the shadow set disks together and represents them as a single virtual device called a virtual unit, as shown in Figure 1.1, ''Virtual Unit''. As a result, the multiple members of the shadow set, represented by the virtual unit, appear to applications and users as a single, highly available disk.

Note that the terms disk and device are used interchangeably throughout this manual to refer to a disk volume. A disk volume is a disk that has been prepared for use by placing a new file structure on it.

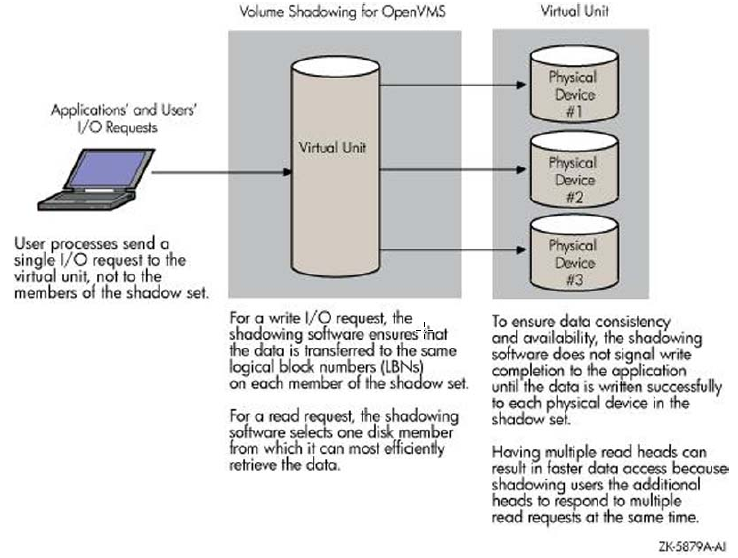

Figure 1.2, ''Elements of a Shadow Set'' shows how Volume Shadowing for OpenVMS propagates data through the virtual unit to three individual shadow set members.

Note

Remember that volume shadowing protects against hardware problems that cause a disk volume to be a single point of failure for both applications and systems that use the disk. Volume shadowing does not provide for recovery from software-related incidents, such as the accidental deletion of files or errant software corrupting the contents of a disk file. Do not use volume shadowing as a substitute for regular backup or journaling.

Applications and users read and write data to and from a shadow set using the same commands and program language syntax and semantics that are used for non-shadowed I/O operations. System managers manage and monitor shadow sets using the same commands and utilities they use for non-shadowed disks. The only difference is that access is through the virtual unit, not through the individual disks.

1.2. Volume Shadowing Tasks and Operations

The primary volume shadowing operations used to create shadow sets and to maintain consistent data on each member are mount, copy, minicopy, merge, and minimerge. When these operations are in progress, the system continues to process read and write requests, thus providing continuous availability.

All volume shadowing operations, except for merges and minimerges, are under the control of the system manager. Merges and minimerges are started automatically by the volume shadowing software if a hardware or software failure occurs that could affect the consistency of the data on the shadow set members. However, you can control the order of these merges by assigning different priorities to the shadow sets, as described in Section 4.9, ''Prioritizing Merge and Copy Operations''. You can also change the default delays that affect merges and copies with the SHADOW_PSM_DLY and SHADOW_REC_DLY system parameters that are described in Table 3.1, ''Volume Shadowing Parameters''.

System managers turn on volume shadowing with the SHADOWING system parameter. They can control the number of concurrent merge or copy operations on a given node with the SHADOW_MAX_COPY system parameter. These volume shadowing system parameters, and all other system parameters used with volume shadowing, are described in Section 3.3, ''Volume Shadowing Parameters'' and in Section 3.4, ''Bitmap System Parameters''.

Volume Shadowing for OpenVMS is never invoked directly. Instead, you invoke the DCL commands MOUNT and DISMOUNT. The MOUNT command works with the volume shadowing software to create shadow sets. The DISMOUNT command works with the volume shadowing software to remove shadow set members and to dissolve entire shadow sets.

Host-based minimerge (HBMM) enables minimerge operations on Fibre Channel and SCSI disk devices.

OpenVMS also provides a programming interface for creating and managing shadow sets with the $MOUNT, $DISMOU, and $GETDVI system services. This programming interface is described in Chapter 5, "Creating and Managing Shadow Sets with System Services".

| Task | Operation | Software Used |
|---|---|---|
| Create a shadow set. | Copy | MOUNT command |
| Remove a member from a shadow set. | Dismount a disk | DISMOUNT command |
| Dissolve a shadow set. | Dismount the shadow set (specify its virtual unit name) | DISMOUNT command |
| Ensure that the data is identical on all shadow set members in the event of a hardware failure. | Merge or minimerge | Shadowing software does this automatically when it detects a hardware or software failure. If an HSJ or HSC controller is present in the configuration, a minimerge might be done. |
| Return a dismounted shadow set member to the shadow set. | Copy or minicopy | MOUNT command |

1.3. Hardware Environment

Volume Shadowing for OpenVMS does not depend on specific hardware in order to operate. All shadowing functions can be performed on OpenVMS Integrity server, OpenVMS Alpha, and OpenVMS x86 systems. The minimum hardware configuration is:

- One CPU
- One mass storage controller
- One of the following kinds of disk drives:
  - Serial Advanced Technology Attachment (SATA)
  - Small Computer System Interface (SCSI)
  - Fibre Channel

The following sections describe hardware support in general terms. For more information, see the most recent Volume Shadowing for OpenVMS Software Product Description (SPD), DO-VIBHAA-031.

1.3.1. Memory Requirements

24 KB per node is required on Integrity server systems, OpenVMS Alpha systems, and OpenVMS x86 systems. These requirements are in effect even if you do not use Volume Shadowing for OpenVMS, unless you change the default setting.

If this memory is not available, the node will not boot.

4.5 KB per shadow set per node is required.

This amount of memory is required before a bitmap can be created. If this memory is not available, the mount fails (that is, the shadow set is not mounted on the node), and the MOUNT command issues the following message:

%MOUNT-F-INSFMEM, insufficient dynamic memory

For every GB of storage of a shadow set member, 2.0 KB per node is required for the bitmaps for each shadow set mounted on a node. (Each shadow set can have up to six bitmaps; with HBMM support, a shadow set can have a maximum of 12 bitmaps.)

When calculating memory requirements, note that a two-member shadow set with 50 GB per member counts as 50 GB, not 100 GB.

For example, for a shadow set with 200 GB of storage per member, 400 KB of memory is required for its bitmaps for every node in the cluster. If this memory is not available on the node where the bitmap request occurs, the bitmap is not created.

If the master bitmap is created but sufficient memory is not available on another node on which the shadow set is subsequently mounted, a local bitmap is not created. If the WBM_OPCOM_LVL system parameter is set to 1 (the default) or 2, the following OPCOM message is displayed:

Unable to allocate local bitmap - running in degraded mode.

Writes from nodes without local bitmaps are registered with the node on which the shadow set is first mounted.

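For example, a node on which ten shadow sets are mounted, each with 50 GB of storage per member, requires the following memory:

- 24 KB per node (regardless of whether you use volume shadowing)
- 45 KB (10 shadow sets × 4.5 KB per unit mounted on the system)
- 1050 KB (50 GB × 2.1 KB per GB of disk size × 10 shadow sets)

Total: 1119 KB of memory required.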
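The arithmetic above can be reproduced with a short Python sketch. The constants are the figures stated in this section; the function names are hypothetical, and the sketch assumes every shadow set on the node has the same member size. The general rule quotes 2.0 KB per GB while the worked example uses 2.1 KB per GB, so the per-GB figure is a parameter. Treat the result as a planning estimate, not a measurement of what the system actually allocates.

```python
# Figures taken from the text of this section.
FIXED_KB_PER_NODE = 24.0   # required even when shadowing is not used
KB_PER_SHADOW_SET = 4.5    # required before a bitmap can be created


def bitmap_kb(gb_per_member: float, kb_per_gb: float = 2.0) -> float:
    """Bitmap memory (KB) that one shadow set needs on every node.

    gb_per_member is the size of ONE member: a two-member set with
    50 GB per member counts as 50 GB, not 100 GB.
    """
    return round(gb_per_member * kb_per_gb, 1)


def shadowing_memory_kb(num_sets: int, gb_per_member: float,
                        kb_per_gb: float = 2.0) -> float:
    """Estimated memory (KB) on one node for num_sets equally sized shadow sets."""
    per_set = num_sets * KB_PER_SHADOW_SET
    bitmaps = num_sets * gb_per_member * kb_per_gb
    # round() hides binary floating-point noise in the sum
    return round(FIXED_KB_PER_NODE + per_set + bitmaps, 1)


print(bitmap_kb(200))                    # 400.0, the 200 GB example
print(shadowing_memory_kb(10, 50, 2.1))  # 1119.0, the worked example
print(shadowing_memory_kb(10, 50))       # 1069.0 with the 2.0 KB per GB rule
```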

1.4. Supported Configurations

Volume Shadowing for OpenVMS provides data availability across the full range of configurations — from single nodes to large OpenVMS Cluster systems — so you can provide data availability where you require it most.

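For details of supported configurations, see the following Software Product Descriptions (SPDs):

- For the OpenVMS operating system: SPD DO-VIBHAA-005 for Integrity V8.4-1H1; SPD DO-DVASPQ-001 for Alpha V8.4-2L1
- For OpenVMS Cluster Software: SPD DO-VIBHAA-032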

If an individual disk volume is already mounted as a member of an active shadow set, the disk volume cannot be mounted as a standalone disk on another node.

1.4.1. Maximum Number of Shadow Sets

You can mount a maximum of 500 disks in shadow sets on a standalone system or in an OpenVMS Cluster system. Up to 10,000 single-member shadow sets are allowed on a standalone system or in an OpenVMS Cluster system. Dismounted shadow sets, unused shadow sets, and shadow sets with no write bitmaps allocated to them are included in this total. These limits are independent of controller and disk type. The shadow sets can be mounted as public or private volumes.

The SHADOW_MAX_UNIT system parameter specifies the maximum number of shadow sets that can exist on a node. For more information about SHADOW_MAX_UNIT, see Section 3.3.1, ''Guidelines for Using Volume Shadowing Parameters''.
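A planned layout can be checked against these limits before any disks are mounted. The following Python sketch is hypothetical planning code, not an OpenVMS utility. It assumes that the 500-disk limit applies to disks in multi-member shadow sets and that single-member shadow sets count separately against the 10,000 limit, because the text does not spell out how the two limits interact. It also does not model the per-node SHADOW_MAX_UNIT setting.

```python
from collections.abc import Sequence

MAX_MEMBERS_PER_SET = 6
MAX_DISKS_IN_MULTI_MEMBER_SETS = 500   # assumption: how the 500-disk limit is read
MAX_SINGLE_MEMBER_SETS = 10_000


def check_shadow_limits(member_counts: Sequence[int]) -> list[str]:
    """Return limit violations for a planned layout (empty list if it fits).

    member_counts[i] is the number of members planned for shadow set i.
    """
    problems: list[str] = []
    for i, count in enumerate(member_counts):
        if not 1 <= count <= MAX_MEMBERS_PER_SET:
            problems.append(f"set {i}: {count} members (allowed 1-{MAX_MEMBERS_PER_SET})")

    multi_disks = sum(count for count in member_counts if count > 1)
    singles = sum(1 for count in member_counts if count == 1)
    if multi_disks > MAX_DISKS_IN_MULTI_MEMBER_SETS:
        problems.append(f"{multi_disks} disks in multi-member sets "
                        f"(max {MAX_DISKS_IN_MULTI_MEMBER_SETS})")
    if singles > MAX_SINGLE_MEMBER_SETS:
        problems.append(f"{singles} single-member sets (max {MAX_SINGLE_MEMBER_SETS})")
    return problems


print(check_shadow_limits([2] * 250))  # []: exactly 500 disks
print(check_shadow_limits([2] * 251))  # one problem: 502 disks
print(check_shadow_limits([6, 7]))     # one problem: set 1 has 7 members
```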

1.4.2. Support for Six-Member Shadow Sets

VSI OpenVMS supports six-member shadow sets; earlier versions supported a maximum of three members per shadow set. Six-member shadow sets are useful for multisite disaster-tolerant configurations. In a three-site disaster-tolerant configuration that uses three-member shadow sets, each site holds only one member of each shadow set, so if two sites fail, the one member left at the surviving site becomes a single point of failure. With six-member shadow sets, you can place two members of a shadow set at each of the three sites, which provides high availability even after two sites fail.

For example:

    $ SHOW DEV DSA5678:
    Device                  Device           Error    Volume         Free  Trans Mnt
     Name                   Status           Count     Label        Blocks Count Cnt
    DSA5678:                Mounted              0  SIXMEMBER       682944     1   1
    $6$DKB0:      (WSC236)  ShadowSetMember      0  (member of DSA5678:)
    $6$DKB100:    (WSC236)  ShadowSetMember      0  (member of DSA5678:)
    $6$DKB200:    (WSC236)  ShadowSetMember      0  (member of DSA5678:)
    $6$DKB300:    (WSC236)  ShadowSetMember      0  (member of DSA5678:)
    $6$DKB400:    (WSC236)  ShadowSetMember      0  (member of DSA5678:)
    $6$DKB500:    (WSC236)  ShadowSetMember      0  (member of DSA5678:)
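The three-site reasoning above can be checked by enumerating every combination of two site failures. This Python sketch models a single shadow set as a count of members per site; the site names are hypothetical, and it considers only whole-site loss, not individual disk or controller failures.

```python
from itertools import combinations


def surviving_members(layout: dict[str, int], failed_sites: set[str]) -> int:
    """Members still reachable when every site in failed_sites is lost."""
    return sum(count for site, count in layout.items() if site not in failed_sites)


def worst_case_survivors(layout: dict[str, int], sites_lost: int) -> int:
    """Fewest members left over every way of losing `sites_lost` sites."""
    return min(
        surviving_members(layout, set(lost))
        for lost in combinations(layout, sites_lost)
    )


# Hypothetical site names; one shadow set spread over three sites.
three_member = {"SITE_A": 1, "SITE_B": 1, "SITE_C": 1}
six_member = {"SITE_A": 2, "SITE_B": 2, "SITE_C": 2}

for label, layout in (("three-member", three_member), ("six-member", six_member)):
    left = worst_case_survivors(layout, sites_lost=2)
    note = "single point of failure" if left == 1 else "still redundant"
    print(f"{label}: {left} member(s) left after two site failures ({note})")
```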

1.4.3. Shadowing System Disks

You can shadow system disks as well as data disks. Thus, a system disk need not be a single point of failure for any system that boots from that disk. System disk shadowing becomes especially important for OpenVMS Cluster systems that use a common system disk from which multiple computers boot. You cannot, however, shadow quorum disks: volume shadowing uses the OpenVMS distributed lock manager, and the quorum disk must be accessed before locking is enabled.

Integrity server, Alpha, and x86 systems can share data on shadowed data disks, but separate system disks are required — one for each architecture.

1.4.4. EFI Shell Precautions on Shadowed System Disks

On each Integrity server and x86 system disk, there can be up to two File Allocation Table (FAT) partitions that contain OpenVMS boot loaders, Extensible Firmware Interface (EFI) applications, and hardware diagnostics. The OpenVMS bootstrap partition and, when present, the diagnostics partition are mapped, respectively, to the following container files on the OpenVMS system disk:

- SYS$LOADABLE_IMAGES:SYS$EFI.SYS
- SYS$MAINTENANCE:SYS$DIAGNOSTICS.SYS

The contents of the FAT partitions appear as fsn: devices at the console EFI Shell> prompt. The fsn: devices can be modified directly by user command input at the EFI Shell> prompt and by EFI console or EFI diagnostic applications. Neither OpenVMS nor any EFI console environments that might share the system disk are notified of partition modifications; OpenVMS and console environments are unaware of console modifications. You must ensure the proper coordination and synchronization of the changes with OpenVMS and with any other EFI consoles that might be in use.

You must take precautions when modifying the console in configurations that use either or both of the following:

- OpenVMS host-based volume shadowing for the OpenVMS Integrity server and x86 system disk
- Shared system disks and parallel EFI console access across Integrity server and x86 environments sharing a common system disk

You must preemptively reduce the OpenVMS system disk environments to a single-member host-based volume shadow set or to a non-shadowed system disk, and you must externally coordinate access to avoid parallel EFI shell sessions, whenever you make shell-level modifications to the fsn: devices, such as:

- Installing or operating diagnostics within the diagnostics partition
- Allowing diagnostics in the partition (or running from removable media) to modify the boot or the diagnostic partition on an OpenVMS Integrity server or x86 system disk
- Modifying, directly or indirectly, the boot or the diagnostics partition within these environments from the EFI Shell> prompt

If you do not take these precautions, any modifications made within the fsn: device associated with the boot partition or the device associated with the diagnostic partition can be overwritten and lost immediately or after the next OpenVMS host-based volume shadowing full-merge operation.

For example, when the system disk is shadowed and changes are made by the EFI console shell to the contents of these container files on one of the physical members, the volume shadowing software is unaware that a write is done to a physical device. If the system disk is a multiple-member shadow set, you must make the same changes to all of the other physical devices that are the current shadow set members. If this is not done, when a full merge operation is next performed on that system disk, the contents of these files might regress. The merge operation might occur many days or weeks after any EFI changes are made.
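The regression described above can be illustrated with a toy Python model in which each member disk is a dictionary of blocks. It is a simplification: the real merge operation decides for itself which member supplies the data, whereas the sketch fixes the source to the member that did not receive the EFI change in order to show the failure case. The device names and helper functions are hypothetical.

```python
# Each member disk is modeled as {block number: contents}.
Members = dict[str, dict[int, str]]


def shadow_write(members: Members, lbn: int, data: str) -> None:
    """A write through the virtual unit reaches every member."""
    for disk in members.values():
        disk[lbn] = data


def efi_write(members: Members, member: str, lbn: int, data: str) -> None:
    """A write from the EFI Shell bypasses shadowing and reaches one member only."""
    members[member][lbn] = data


def full_merge(members: Members, source: str) -> None:
    """Simplified full merge: copy any differing block from `source` to the others."""
    for name, disk in members.items():
        if name == source:
            continue
        for lbn, data in members[source].items():
            if disk.get(lbn) != data:
                disk[lbn] = data


# Hypothetical two-member system disk; block 0 stands in for part of SYS$EFI.SYS.
members: Members = {"$1$DGA10:": {}, "$1$DGA20:": {}}
shadow_write(members, 0, "boot loader v1")
efi_write(members, "$1$DGA10:", 0, "boot loader v2")  # console change on one member
full_merge(members, source="$1$DGA20:")                # possibly weeks later
print(members["$1$DGA10:"][0])  # boot loader v1: the EFI change has been lost
```

Reducing the system disk to a single member before the console change, as this section requires, removes the second copy that a later merge could copy back over the change.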

Furthermore, if a full merge is active on the shadowed system disk, you must not make changes to either file using the console EFI shell.

To suspend a full merge operation that is in progress or to determine the membership of a shadow set, see Chapter 8, "Host-Based Minimerge (HBMM)".

The precautions are applicable only for the Integrity server and x86 system disks that are configured for host-based volume shadowing, or are configured and shared across multiple OpenVMS Integrity server and OpenVMS x86 systems.

1.4.5. Using Minicopy in a Mixed-Version OpenVMS Cluster System

To use the minicopy feature in a mixed-version OpenVMS Cluster system, every node in the cluster must use a version of OpenVMS that supports this feature.

1.4.6. Shadow Sets, Bound Volume Sets, and Stripe Sets

Shadow sets can also be constituents of a bound volume set or a stripe set. A bound volume set consists of one or more disk volumes that have been bound into a volume set by specifying the /BIND qualifier with the MOUNT command. Section 1.5, ''Shadowing Disks Across an OpenVMS Cluster System'' describes shadowing across OpenVMS Cluster systems. Chapter 10, "Performance Information for Volume Shadowing" contains more information about striping and how it relates to volume shadowing.

1.5. Shadowing Disks Across an OpenVMS Cluster System

The host-based implementation of volume shadowing allows disks that are connected to multiple physical controllers to be shadowed in an OpenVMS Cluster system. There is no requirement that all members of a shadow set be connected to the same controller. Controller independence allows you to manage shadow sets regardless of their controller connection or their location in the OpenVMS Cluster system and helps provide improved data availability and flexible configurations.

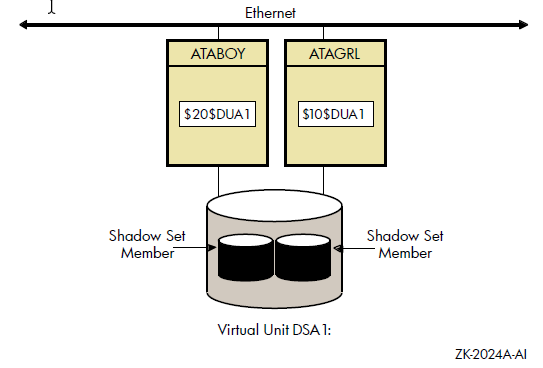

For clusterwide shadowing, members can be located anywhere in an OpenVMS Cluster system and served by MSCP servers across any supported OpenVMS Cluster interconnect, including the Ethernet, Digital Storage Systems Interconnect (DSSI), and Fiber Distributed Data Interface (FDDI). For example, OpenVMS Cluster systems using IPCI and wide area network services can be hundreds of miles apart, which further increases the availability and disaster tolerance of a system.

Figure 1.3, ''Shadow Sets Accessed Through the MSCP Server'' shows how shadow-set members are on line to local adapters located on different nodes. In the figure, a disk volume is local to each of the nodes ATABOY and ATAGRL. The MSCP server provides access to the shadow set members over the Ethernet. Even though the disk volumes are local to different nodes, the disks are members of the same shadow set. A member that is local to one node can be accessed by the remote node via the MSCP server.

The shadowing software maintains shadow sets in a distributed fashion on each node that mounts the shadow set in the OpenVMS Cluster system. In an OpenVMS Cluster environment, each node creates and maintains shadow sets independently. The shadowing software on each node maps each shadow set, represented by its virtual unit name, to its respective physical units. Shadow sets are not served to other nodes. When a shadow set must be accessed by multiple nodes, each node creates an identical shadow set. The shadowing software maintains clusterwide membership coherence for shadow sets mounted on multiple nodes. For shadow sets that are mounted on an OpenVMS Cluster system, mounting or dismounting a shadow set on one node in the cluster does not affect applications or user functions executing on other nodes in the system. For example, you can dismount the shadow set from one node in an OpenVMS Cluster system and leave the shadow set operational on the remaining nodes on which it is mounted.

1.6. Installation

Volume Shadowing for OpenVMS is a System Integrated Product (SIP) that you install at the same time that you install the operating system. On OpenVMS Integrity server systems, the license for Volume Shadowing is included in the Enterprise Operating Environment and in the Mission Critical Operating Environment. It is not included in the Foundation Operating Environment but can be purchased separately. On OpenVMS Alpha, Volume Shadowing for OpenVMS requires its own license that is separate from the OpenVMS base operating system license. To use the volume shadowing software, you must license it, either as part of an OpenVMS Integrity server operating environment or with a separate license, as described. All nodes booting from shadowed system disks must have shadowing licensed and enabled. See the instructions included in your current OpenVMS upgrade and installation manual.

See Section 3.2, ''Licensing Volume Shadowing for OpenVMS'' for more information about licensing Volume Shadowing for OpenVMS.

Chapter 2. Configuring Your System for High Data Availability

System availability is a critical requirement in most computing environments. A dependable environment enables users to interact with their system when they want and in the way they want.

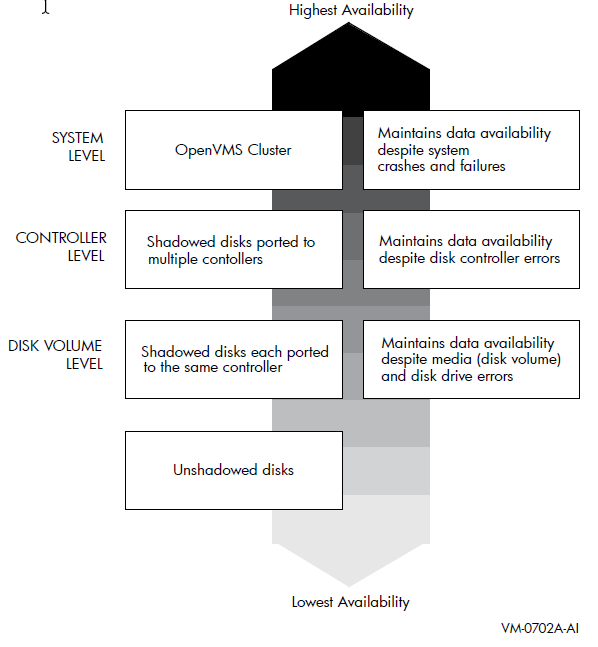

2.1. Levels of High Data Availability Using Volume Shadowing

A key component of overall system availability is availability or accessibility of data. Volume Shadowing for OpenVMS provides high levels of data availability by allowing shadow sets to be configured on a single-node system or on an OpenVMS Cluster system, so that continued access to data is possible despite failures in the disk media, disk drive, or disk controller. For shadow sets whose members are local to different OpenVMS Cluster nodes, if one node serving a shadow set member shuts down, the data is still accessible through an alternate node.

You can create a virtual unit, the system representation of a shadow set, that consists of only one disk volume. However, you must mount two or more disk volumes in order to “shadow,” that is, to maintain multiple copies of the same data. This configuration protects against either failure of a single disk drive or deterioration of a single volume. For example, if one member fails out of a shadow set, the remaining member can be used as a source device whose data can be accessed by applications at the same time the data is being copied to a newly mounted target device. Once the data is copied, both devices contain identical information and the target device becomes a source member of the shadow set. (Disks of different sizes can be combined into a shadow set, as described in Section 7.5, ''Write Bitmaps and Dissimilar Device Shadowing Caution''.)
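The copy sequence described above can be sketched as a small Python state model. It is a single-threaded simplification, assuming that reads are satisfied only from source members and that application writes reach every member, including copy targets; it ignores the synchronization that a real copy operation needs between copy I/O and application I/O. The class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ShadowSet:
    """Toy virtual unit: source members hold valid data; copy targets may not yet."""

    sources: list[dict[int, str]]
    targets: list[dict[int, str]] = field(default_factory=list)

    def read(self, lbn: int) -> str:
        # Reads are satisfied from a source member, never from a copy target.
        return self.sources[0][lbn]

    def write(self, lbn: int, data: str) -> None:
        # Assumption: application writes go to every member, targets included.
        for disk in self.sources + self.targets:
            disk[lbn] = data

    def add_member(self, disk: dict[int, str]) -> None:
        """A newly mounted disk starts as a copy target."""
        self.targets.append(disk)

    def run_copy(self) -> None:
        """Copy every block from a source to each target, then promote the targets."""
        source = self.sources[0]
        for lbn in sorted(source):
            for target in self.targets:
                target[lbn] = source[lbn]
        self.sources.extend(self.targets)
        self.targets.clear()


vu = ShadowSet(sources=[{0: "boot", 1: "data"}])
new_disk: dict[int, str] = {0: "stale"}
vu.add_member(new_disk)
vu.write(1, "data v2")   # applications keep writing during the copy
print(vu.read(0))        # boot: served from the source member
vu.run_copy()
print(len(vu.sources), new_disk == vu.sources[0])  # 2 True
```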

Using two controllers provides a further guarantee of data availability in the event of a single-controller failure. When setting up a system with volume shadowing, you should connect each disk drive to a different controller I/O channel whenever possible. Separate connections help protect against either failure of a single controller or of the communication path used to access it.

Using an OpenVMS Cluster system (as opposed to a single-node environment) and multiple controllers provides the greatest data availability. Disks that are connected to different local controllers and disks that are MSCP-served by other OpenVMS systems can be combined into a single shadow set, provided the disks are compatible and no more than six are combined.

Figure 2.1, ''Levels of Availability'' provides a qualitative, high-level classification of how you can achieve increasing levels of physical data availability in different types of configurations.

Section 2.2, ''Repair and Recovery from Failures'' describes how you can configure your shadowed system to achieve high data availability despite physical failures.

2.2. Repair and Recovery from Failures

Failures that affect shadow sets fall into the following categories:

- Controller errors
- Device errors
- Data errors
- Connectivity failures

The handling of shadow set recovery and repair depends on the type of failure that occurred and the hardware configuration. In general, devices that are inaccessible tend to fail over to other controllers whenever possible. Otherwise, they are removed from the shadow set. Errors that occur as a result of media defects can often be repaired automatically by the volume shadowing software.

| Type | Description |
|---|---|
| Controller error | Results from a failure in the controller. If the failure is recoverable, processing continues and data availability is not affected. If the failure is nonrecoverable, shadow set members connected to the controller are removed from the shadow set, and processing continues with the remaining members. In configurations where disks are dual-pathed between two controllers, and one controller fails, the shadow set members fail over to the remaining controller and processing continues. |
| Device error | Signifies that the mechanics or electronics in the device failed. If the failure is recoverable, processing continues. If the failure is nonrecoverable, the node that detects the error removes the device from the shadow set. |
| Data errors | Results when a device detects corrupt data. Data errors usually result from media defects that do not cause the device to be removed from a shadow set. Depending on the severity of the data error (or the degree of media deterioration), the controller may be able to correct the data itself. When data cannot be corrected by the controller, volume shadowing replaces the lost data by retrieving it from another shadow set member and writing the data to a different logical block number (LBN) of the member with the incorrect data. This repair operation is synchronized within the cluster and with the application I/O stream. |
| Connectivity failures | When a connectivity failure occurs, the first node to detect the failure must decide how to recover from the failure in a manner least likely to affect the availability or consistency of the data. As each node discovers the recoverable device failure, it determines its own course of action. |

2.3. Shadow Set Configurations

To illustrate the various levels of data availability obtainable through Volume Shadowing for OpenVMS, this section provides a representative sample of hardware configurations. Figures 2.2 through 2.4 show possible system configurations for shadow sets. The hardware used to describe the sample systems, while intended to be representative, is hypothetical; the configurations should be used only for general observations about availability and not as a recommendation for any specific configuration or product.

In all the following examples, the shadow set members use the $allocation-class$ddcu: naming convention. The virtual unit uses the DSAn: format, where n represents a number between 0 and 9999.

These naming conventions are described in more detail in Section 4.2, ''Creating a Shadow Set''.
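The naming conventions above can be sketched as a pair of Python checks. This is an illustrative model only, not an OpenVMS interface: the regular expressions, the function names `is_valid_member_name` and `is_valid_virtual_unit`, and the assumption that ddcu is two device-type letters, one controller letter, and a decimal unit number are hypothetical simplifications. The allocation-class limit of 1 to 255 follows from the nonzero ALLOCLASS requirement and the 0–255 range in Table 3.1.

```python
import re

# Hypothetical model of "$allocation-class$ddcu:", for example $1$DGA10:
# dd = device type (2 letters), c = controller letter, u = unit number.
MEMBER_NAME = re.compile(r"^\$(\d{1,3})\$([A-Z]{2})([A-Z])(\d+):$")
# Hypothetical model of the virtual unit name "DSAn:", n from 0 to 9999.
VIRTUAL_UNIT = re.compile(r"^DSA(\d{1,4}):$")


def is_valid_member_name(name: str) -> bool:
    """True if name has the assumed member shape and a nonzero allocation class."""
    match = MEMBER_NAME.match(name.upper())
    if match is None:
        return False
    allocation_class = int(match.group(1))
    return 1 <= allocation_class <= 255


def is_valid_virtual_unit(name: str) -> bool:
    """True if name looks like DSAn: with n between 0 and 9999."""
    return VIRTUAL_UNIT.match(name.upper()) is not None


print(is_valid_member_name("$1$DGA10:"))  # True
print(is_valid_member_name("DGA10:"))     # False: no allocation class
print(is_valid_virtual_unit("DSA42:"))    # True
```

Real device names have more forms than this sketch accepts; it only captures the two rules stated in this guide (a nonzero allocation class for members, and a DSAn: virtual unit with n up to 9999).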

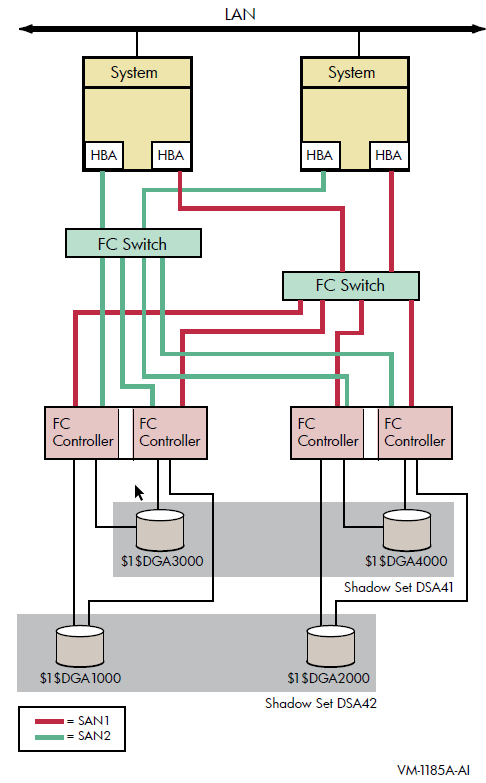

Figure 2.2, ''OpenVMS Cluster System With Two FC Switches, Two Dual Controllers and Two Shadow Sets'' shows an OpenVMS Cluster system consisting of two systems connected to the same two shadow sets. Each system has two host bus adapters (HBAs) connecting it to the same two Fibre Channel (FC) switches. In turn, the FC switches are connected to two dual controllers, which are connected to two shadow sets.

Each shadow set member is connected by two paths, one to each of the dual controllers of one storage system. Each shadow set member can fail over between controllers independently of the other members. Each system can access both shadow sets by direct connections.

This configuration provides coverage against:

Media errors

Failure of one system

Failure of one HBA per system

Failure of one or more controllers

Failure of one disk per shadow set

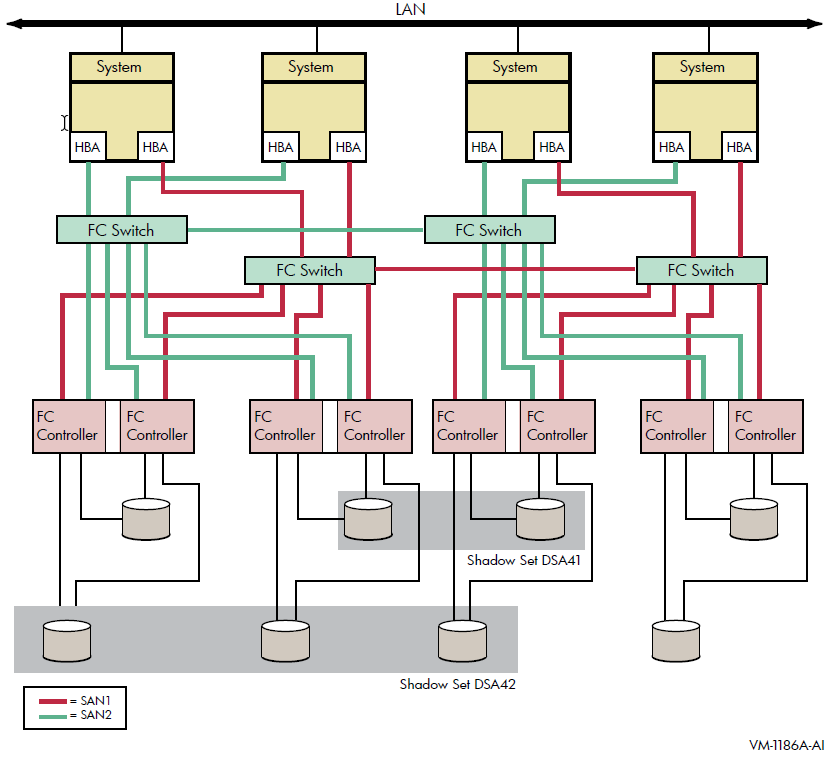

Figure 2.3, ''OpenVMS Cluster System With Four Systems, Four FC Switches, Four Dual Controllers, and Two Shadow Sets'' shows an OpenVMS Cluster system consisting of four systems. Each system in the cluster is identical to each system shown in Figure 2.2, ''OpenVMS Cluster System With Two FC Switches, Two Dual Controllers and Two Shadow Sets''. In addition to the protection offered by the configuration in Figure 2.2, ''OpenVMS Cluster System With Two FC Switches, Two Dual Controllers and Two Shadow Sets'', this OpenVMS Cluster configuration provides greater protection from:

Component failure because there are twice as many components

Failure of one or two devices in shadow set DSA42 because it is a three-member set

This type of configuration provides continued access to data in spite of the failure of any one or more of these systems or switches.

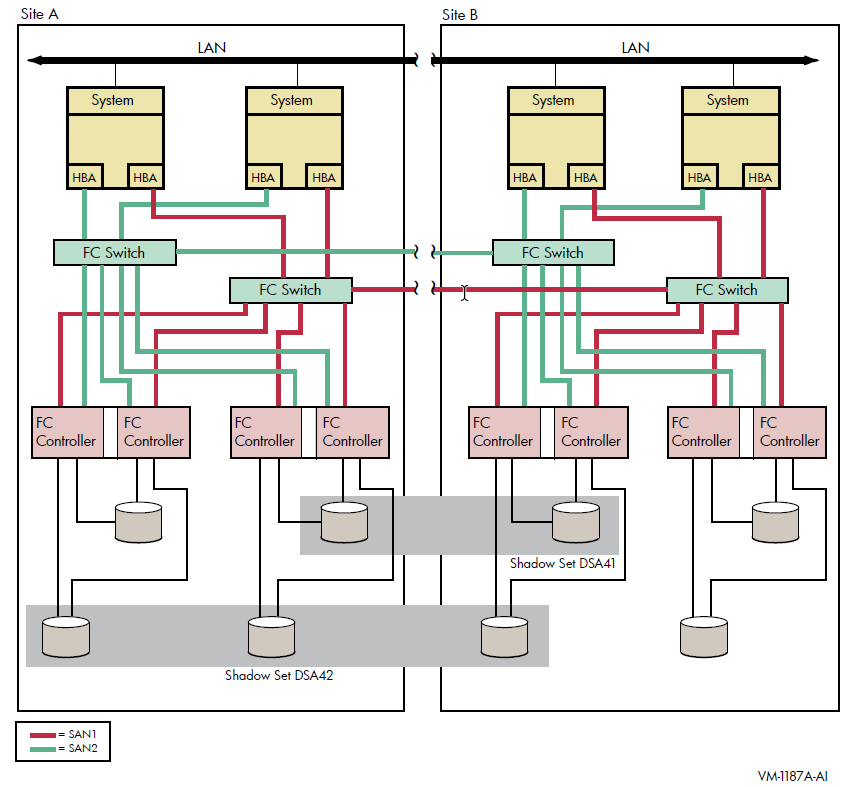

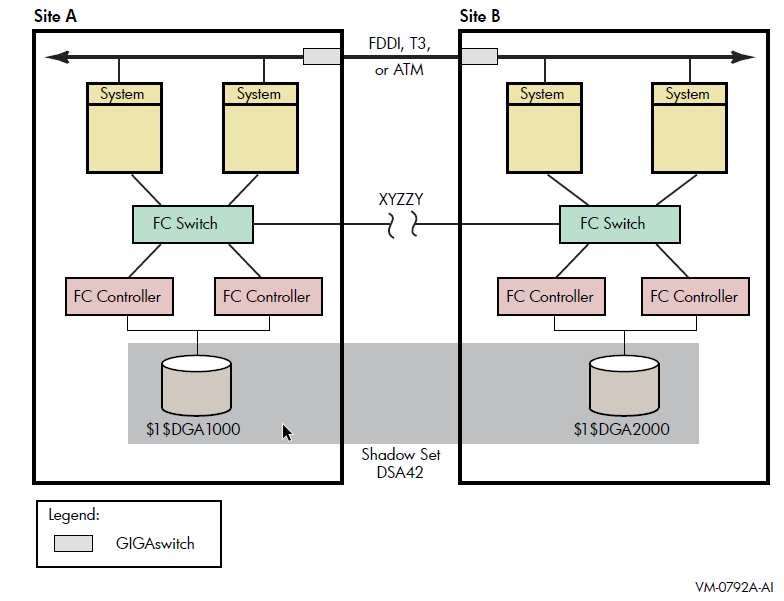

Figure 2.4, ''Multiple-Site OpenVMS Cluster System With Four Systems, Four FC Switches, Four Controllers, and Two Shadow Sets'' shows an OpenVMS Cluster system identical to the one in Figure 2.3, ''OpenVMS Cluster System With Four Systems, Four FC Switches, Four Dual Controllers, and Two Shadow Sets'' except that the four systems are not in a single location. Instead, two systems are at one site and two at a second site. This figure illustrates how you can shadow data disks over long distances. Members of each shadow set are configured between two distinct and widely separated locations, forming a multiple-site OpenVMS Cluster system. The OpenVMS systems and shadowed disks in both locations function together as a single OpenVMS Cluster system and shadow set configuration. If a failure occurs at either site, the critical data is still available at the remaining site.

Chapter 3. Preparing to Use Volume Shadowing

This chapter describes the configuration tasks that are required before you can use volume shadowing on your system, including setting system parameters and installing licenses (unless volume shadowing is licensed with your OpenVMS Integrity server operating environment). This chapter also documents booting from a system disk and booting satellite nodes.

3.1. Configuration Tasks

Select which of your disk drives you want to shadow. Prepare the selected volumes for mounting by physically placing the volumes in the drives (for removable media disks). Ensure the disks are not write locked.

Consider whether or not you want to initialize the volumes you have chosen to shadow. Do not initialize volumes that contain useful data.

If you are creating a new shadow set, you can initialize one volume at a time, or multiple volumes with one command, which can streamline the creation of a shadow set (see Section 4.3, ''Using INITIALIZE/SHADOW/ERASE to Form a Shadow Set''). When you initialize one volume at a time, you can give it a volume label to be used for the shadow set. When you later mount additional volumes into the shadow set, each volume is initialized and is given the same volume label automatically.

Install the Volume Shadowing for OpenVMS licenses unless you are running OpenVMS Integrity servers and purchased either the Enterprise Operating Environment or the Mission Critical Operating Environment. These operating environments include the Volume Shadowing for OpenVMS license. See Section 3.2, ''Licensing Volume Shadowing for OpenVMS'' for more information.

Set the SHADOWING parameter to enable volume shadowing on each node that will use volume shadowing. See Section 3.3, ''Volume Shadowing Parameters'' for more information.

Setting the SHADOWING parameter requires that you reboot the system.

Set the ALLOCLASS parameter to a nonzero value. This parameter enables the use of allocation classes in device names. You must include a nonzero allocation class in the device name of shadowed disks. For more information, see Section 4.2, ''Creating a Shadow Set''.

- Dismount the disk drives you selected for the shadow set and remount them (along with the additional shadow set disk drives) as shadow set members. Note that:

You do not need to change the device volume labels and logical names.

If you use mount command files, ensure that the commands mount the physical devices using the appropriate naming syntax for virtual units (DSAn:).

For more information on the MOUNT command, see Chapter 4, "Creating and Managing Shadow Sets Using DCL Commands".

System disks can be shadowed. All nodes booting from that system disk must have shadowing licensed and enabled.

3.2. Licensing Volume Shadowing for OpenVMS

To use the volume shadowing product on OpenVMS Alpha, you must purchase a license for it, even though the volume shadowing software is part of the OpenVMS operating system. On OpenVMS Integrity server systems, the volume shadowing license is included in enterprise, mission critical, and high availability operating environments.

For OpenVMS Integrity server computers, a volume shadowing license is included in the collection of OpenVMS products known as the High Availability Operating Environment (HAOE). It is not included in the Base Operating Environment for OpenVMS license.

After licensing the OpenVMS operating system by registering an OpenVMS Product Authorization Key (PAK), OpenVMS Alpha system managers and managers of OpenVMS Integrity server systems with the FOE or BOE must also license Volume Shadowing for OpenVMS with a separate volume shadowing PAK. The PAK provides information that defines the Volume Shadowing for OpenVMS license contract you have with VSI. Obtain a PAK from your VSI sales representative.

When you enter information from the PAK into the online LICENSE database, the OpenVMS License Management Facility (LMF) authorizes the use of volume shadowing.

You must register and activate a license for Volume Shadowing for OpenVMS on each node that mounts a shadow set, including satellites in an OpenVMS Cluster system. If you do not register and activate licenses on nodes that use volume shadowing, subsequent shadow set mount operations do not succeed and display error messages similar to the ones shown in Example 3.1, ''Nodes Not Registered to Use Volume Shadowing''.

%LICENSE-E-NOAUTH, DEC VOLSHAD use is not authorized on this node
-LICENSE-F-NOLICENSE, no license is active for this software product
-LICENSE-I-SYSMGR, please see your system manager

After you register the volume shadowing PAK, you must set the shadowing parameters on each node where you want to enable shadowing.

For more information about volume shadowing licensing, see the VSI Volume Shadowing for OpenVMS Software Product Description (DO-VIBHAA-031). For more information about the License Management Facility, see the OpenVMS Operating System Software Product Description (DO-VIBHAA-005 for Integrity V8.4-1H1; and DO-DVASPQ-001 for Alpha V8.4-2L1). You can also consult the VSI OpenVMS License Management Utility Guide.

3.3. Volume Shadowing Parameters

Table 3.1, ''Volume Shadowing Parameters'' lists the system parameters that are required to specify the use of Volume Shadowing for OpenVMS and the system parameters you can use to tailor the shadowing software on your system.

The term dynamic in Table 3.1, ''Volume Shadowing Parameters'' means that the active value can be changed on a running system. For more information about setting system parameters, see the VSI OpenVMS System Manager's Manual, Volume 1: Essentials.

Table 3.4, ''Write Bitmap System Parameters'' describes four bitmap system parameters. These system parameters support the host-based minicopy operation, described in Chapter 7, "Using Minicopy for Backing Up Data", and the host-based minimerge (HBMM) operation, described in Chapter 8, "Host-Based Minimerge (HBMM)".

| Parameter | Function | Range | Default | Dynamic |
|---|---|---|---|---|
| ALLOCLASS | Specifies the device allocation class for the system. When using Volume Shadowing for OpenVMS, a nonzero value is required. | 0–255 | 0 | No |
| SHADOWING | A value of 2 enables volume shadowing. See Table 3.2, ''SHADOWING Parameter Settings'' for a description of parameter values. | 0, 2 | 0 | No |
| SHADOW_MAX_COPY | Limits the number of concurrent merge or copy operations on a given node. | 0–200 | 4 | Yes |
| SHADOW_MBR_TMO | Controls the amount of time the system tries to fail over physical members of a shadow set. | 1–65,535 seconds | 120 | Yes |
| SHADOW_MAX_UNIT | Specifies the maximum number of shadow sets that can exist on a node. Dismounted shadow sets, unused shadow sets, and shadow sets with no write bitmaps allocated to them are included in this total. | 10–10,000 | 500 on Alpha | No |
| SHADOW_SYS_DISK | Allows the system disk to be a shadow set and, optionally, enables a minimerge to occur. If a minimerge is enabled, the system must also be configured to write a dump to a nonshadowed, nonsystem disk of your choice. | 0, 1, 4097 | 0 | Yes |
| SHADOW_SYS_TMO | Controls the amount of time members of a system disk shadow set have to return to the set. | 1–65,535 seconds | 120 | Yes |
| SHADOW_SYS_UNIT | Contains the virtual unit number of the system disk. | 0–9999 | 0 | No |
| SHADOW_SYS_WAIT | Controls the amount of time a booting system waits for all members of a mounted system disk shadow set to become available. This parameter applies only to shadow sets that are currently mounted in the cluster. | 1–65,535 seconds | 480 | Yes |

3.3.1. Guidelines for Using Volume Shadowing Parameters

This section provides guidelines for using volume shadowing parameters.

ALLOCLASS

The ALLOCLASS parameter is used to specify an allocation class that forms part of a device name. The purpose of allocation classes is to provide unique and unchanging device names. When using Volume Shadowing for OpenVMS on a single system or on an OpenVMS Cluster system, a nonzero allocation class value is required for each physical device in the shadow set. For more information about using allocation classes, see the VSI OpenVMS Cluster Systems Manual.

SHADOWING

The SHADOWING parameter enables or disables volume shadowing on your system, as shown in Table 3.2, ''SHADOWING Parameter Settings ''.

| Setting | Effect |
|---|---|
| 0 | Shadowing is not enabled. This is the default value. |
| 2 | Enables host-based shadowing. This setting provides shadowing of all disks that are located on a standalone system or on an OpenVMS Cluster system. Set SHADOWING to 2 on every node that will mount a shadow set, including satellite nodes. |

SHADOW_HBMM_RTC

SHADOW_HBMM_RTC specifies, in seconds, how frequently each shadow set on this system has its modified block count compared with its reset threshold. If the modified block count exceeds the reset threshold, the bitmap for that shadow set is zeroed. This comparison is performed for all shadow sets mounted on the system that have HBMM bitmaps. The reset threshold is specified by the RESET_THRESHOLD keyword in the /POLICY qualifier of the SET SHADOW command. When the comparison is made, the modified block count might exceed the reset threshold by a small increment or by a much larger amount. The difference depends on the write activity to the volume and the setting of this parameter.

The default setting of SHADOW_HBMM_RTC is 150 seconds.

You can view the reset threshold setting and the modified block count since the last reset in the SHOW SHADOW command display. For guidelines on setting the reset threshold values and a sample SHOW SHADOW display, see Section 8.5.2, ''Considerations for Setting a Bitmap RESET_THRESHOLD Value''. For a SHOW SHADOW display that includes a modified block count greater than the reset threshold value, refer to the VSI OpenVMS DCL Dictionary: N–Z.
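The effect of SHADOW_HBMM_RTC on how far the modified block count can overshoot the reset threshold can be illustrated with a small simulation. This is a hypothetical Python model, not shadowing code: it assumes a constant write rate and that every write marks a previously unmodified block, so it overstates the count for workloads that rewrite the same blocks. The function name and parameters are illustrative.

```python
def simulate_hbmm_resets(blocks_per_second, reset_threshold, rtc_seconds, duration_seconds):
    """Return (time, modified_count) for each bitmap reset in the simulated period.

    Assumptions (hypothetical model): writes arrive at a constant rate, every
    write modifies a new block, and the count is compared with the threshold
    only every rtc_seconds, as SHADOW_HBMM_RTC specifies.
    """
    resets = []
    modified = 0
    for second in range(1, duration_seconds + 1):
        modified += blocks_per_second
        if second % rtc_seconds == 0 and modified > reset_threshold:
            resets.append((second, modified))
            modified = 0  # the bitmap is zeroed
    return resets


# Busy volume: at 100 blocks/s the count reaches 15,000 by the first check,
# overshooting a 10,000-block threshold by 5,000.
print(simulate_hbmm_resets(100, 10_000, 150, 300))
# Quiet volume: at 10 blocks/s the first reset happens at 1,050 seconds.
print(simulate_hbmm_resets(10, 10_000, 150, 1_200))
```

In this model, a shorter interval reduces the overshoot on busy volumes at the cost of more frequent comparisons.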

SHADOW_MAX_COPY

The SHADOW_MAX_COPY parameter controls how many parallel copy and merge operations are allowed on a given node. (Copy and merge operations are described in Chapter 6, "Ensuring Shadow Set Consistency".) This parameter provides a way to limit the number of copy and merge operations in progress at any time.

The value of SHADOW_MAX_COPY can range from 0 to 200. The default value is specific to the OpenVMS version. You can determine the default value by looking at the parameter setting. When the value of the SHADOW_MAX_COPY parameter is 4, and you mount five multivolume shadow sets that all need a copy operation, only four copy operations can proceed. The fifth copy operation must wait until one of the first four copies completes.

When you choose a value for SHADOW_MAX_COPY, consider the following factors:

CPU power

Disk controller bandwidth

Interconnect controller bandwidth

Other workloads on the system

For example, the default value of 4 may be too high for a small node. (In particular, satellite nodes should have SHADOW_MAX_COPY set to a value of 0.) Too low a value for SHADOW_MAX_COPY unnecessarily restricts the number of operations your system can effectively handle and extends the amount of time it takes to merge all of the shadow sets.

SHADOW_MAX_COPY is a dynamic parameter. Changes to the parameter affect only future copy and merge operations; current operations (pending or already in progress) are not affected.
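The queuing behavior described for SHADOW_MAX_COPY can be modeled with a min-heap of finish times. This hypothetical Python sketch assumes that all copy or merge requests arrive at the same time, run for known durations, and start in request order as slots free up; the real shadowing software does not know durations in advance. A value of 0 is modeled as the node never starting a copy or merge itself, in line with the satellite recommendation above.

```python
import heapq
from typing import List, Optional


def schedule_copies(durations: List[int], max_copy: int) -> List[Optional[int]]:
    """Start time of each copy/merge on one node, or None if it never starts here.

    Hypothetical model: all requests arrive at time 0 and are started in order;
    at most max_copy run concurrently, mirroring SHADOW_MAX_COPY.
    """
    if max_copy <= 0:
        return [None] * len(durations)  # the node performs no copy or merge
    finish_times: List[int] = []  # min-heap of running operations' finish times
    starts: List[Optional[int]] = []
    for duration in durations:
        if len(finish_times) < max_copy:
            start = 0  # a free slot is available immediately
        else:
            start = heapq.heappop(finish_times)  # wait for the earliest finish
        starts.append(start)
        heapq.heappush(finish_times, start + duration)
    return starts


# Five shadow sets need copies and SHADOW_MAX_COPY=4: the fifth waits for the
# shortest of the first four (10 time units) to finish.
print(schedule_copies([30, 10, 20, 40, 5], 4))  # [0, 0, 0, 0, 10]
```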

SHADOW_MAX_UNIT

The SHADOW_MAX_UNIT parameter specifies the number of shadow sets that can exist on a node and determines the memory reserved for the bitmap for each shadow set. (See Section 1.3.1, ''Memory Requirements''.) Note that any shadow set that has been created, whether or not it is in use, is included in this total. Because this is not a dynamic system parameter, choose its value carefully: if you have to change it, you must reboot the system.

The default value for OpenVMS Alpha systems is 500.

Caution

Any MOUNT command that attempts to create more shadow sets than the maximum specified for the node fails.

Note that this parameter does not affect the naming of shadow sets. For example, with the default value of 500, a device name such as DSA999 is still valid.

SHADOW_MBR_TMO

The SHADOW_MBR_TMO parameter controls the amount of time the system tries to fail over physical members of a shadow set before removing them from the set. SHADOW_MBR_TMO is a dynamic parameter that you can change on a running system.

Note

The value of SHADOW_MBR_TMO should not exceed the value of the parameter MVTIMEOUT.

If you specify zero, a default delay is used. The default delay is specific to the version of OpenVMS running on your system. For shadow sets in an OpenVMS Cluster configuration, the value of SHADOW_MBR_TMO must be set to the same value on each node.

Determining the correct value for SHADOW_MBR_TMO is a trade-off between rapid recovery and high availability. If rapid recovery is required, set SHADOW_MBR_TMO to a low value. This ensures that failing shadow set members are removed from the shadow set quickly and that user access to the shadow set continues. However, removal of shadow set members reduces data availability and, after the failed member is repaired, a full copy operation is required when it is mounted back into the shadow set.

If high availability is paramount, set SHADOW_MBR_TMO to a high value. This allows the shadowing software additional time to regain access to failed members. However, user access to the shadow set is stalled during the recovery process. If recovery is successful, access to the shadow set continues without the need for a full copy operation, and data availability is not degraded. Setting SHADOW_MBR_TMO to a high value may be appropriate when shadow set members are configured across LANs that require lengthy bridge recovery time.

Shadowing uses a timer to adhere to the number of seconds specified by the SHADOW_MBR_TMO parameter. For directly connected SCSI devices that have been powered down or do not respond to polling, it can take several minutes before the device is removed from a shadow set.

| System Parameter | Recommended Setting |
|---|---|
| MSCP_CMD_TMO | 60 as a minimum. The value of 60 is appropriate for most configurations. Some configurations may require a higher setting. |
| SHADOW_MBR_TMO | At least 3 x MSCP_CMD_TMO |
| SHADOW_SYS_TMO | At least 3 x MSCP_CMD_TMO |
| MVTIMEOUT | At least 4 x SHADOW_MBR_TMO |

Note

The recommended setting for MVTIMEOUT, as shown in Table 3.3, ''System Parameter Settings for Multipath Shadow Sets'', is at least four times the value of SHADOW_MBR_TMO.
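The relationships in Table 3.3 can be checked mechanically. The following Python sketch is a hypothetical helper, not an OpenVMS tool: it applies the table's minimums to values you supply (for example, values displayed with the SYSGEN SHOW command) and returns the names of the parameters that fall short.

```python
def check_multipath_timeouts(mscp_cmd_tmo, shadow_mbr_tmo, shadow_sys_tmo, mvtimeout):
    """Return the parameters that do not meet the Table 3.3 recommendations."""
    short = []
    if mscp_cmd_tmo < 60:
        short.append("MSCP_CMD_TMO")  # 60 seconds as a minimum
    if shadow_mbr_tmo < 3 * mscp_cmd_tmo:
        short.append("SHADOW_MBR_TMO")  # at least 3 x MSCP_CMD_TMO
    if shadow_sys_tmo < 3 * mscp_cmd_tmo:
        short.append("SHADOW_SYS_TMO")  # at least 3 x MSCP_CMD_TMO
    if mvtimeout < 4 * shadow_mbr_tmo:
        short.append("MVTIMEOUT")  # at least 4 x SHADOW_MBR_TMO
    return short


print(check_multipath_timeouts(60, 180, 180, 720))  # []
print(check_multipath_timeouts(60, 120, 120, 720))  # ['SHADOW_MBR_TMO', 'SHADOW_SYS_TMO']
```

The second call uses the Table 3.1 defaults of 120 seconds for SHADOW_MBR_TMO and SHADOW_SYS_TMO; with MSCP_CMD_TMO at 60, both fall below the multipath recommendation.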

To modify the member timeout for an existing shadow set member, see the SET SHADOW/MEMBER_TIMEOUT=n command, which is described in the VSI OpenVMS DCL Dictionary: N–Z.

SHADOW_PSM_DLY

SHADOW_PSM_DLY allows the system manager to adjust the delay that Shadowing adds automatically when a copy or merge operation is needed on a shadow set that is mounted on many systems.

The Shadowing facility attempts to perform the operation on a system that has a local connection to all the shadow set members. To achieve this, Shadowing adds a time delay before starting the copy or merge operation; the delay is based on the number of shadow set members that are MSCP-served to the system. No delay is added for local members. Therefore, a system with all shadow set members locally accessible usually performs the copy or merge before a system on which one or more members are served, because the latter is delayed.

When a shadow set is mounted on a system, the value of SHADOW_PSM_DLY is used as the default shadow set member recovery delay for that shadow set. To modify SHADOW_PSM_DLY for an existing shadow set, see the SET SHADOW/RECOVERY_OPTIONS=DELAY_PER_SERVED_MEMBER=n command, which is described in Section 4.8, ''Managing Copy and Merge Operations''.

SHADOW_PSM_DLY is a static parameter; its range is 0 to 65535 seconds. The default value is 30 seconds for each MSCP served shadow set member.
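The delay rule above amounts to a small calculation: each system waits SHADOW_PSM_DLY seconds for every MSCP-served member it sees, so the system with the fewest served members usually starts first. The following Python sketch is a hypothetical model that considers only this delay and ignores every other factor; the node names are illustrative.

```python
def recovery_delays(served_members, psm_dly=30):
    """Delay in seconds per node: SHADOW_PSM_DLY times its MSCP-served members."""
    return {node: psm_dly * count for node, count in served_members.items()}


def likely_first_node(served_members, psm_dly=30):
    """Node with the shortest added delay (the first listed wins a tie)."""
    delays = recovery_delays(served_members, psm_dly)
    return min(delays, key=delays.get)


# Hypothetical three-member shadow set seen from three systems:
# NODEA has all members local; NODEB and NODEC reach some members via MSCP.
served = {"NODEA": 0, "NODEB": 1, "NODEC": 2}
print(recovery_delays(served))    # {'NODEA': 0, 'NODEB': 30, 'NODEC': 60}
print(likely_first_node(served))  # NODEA
```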

SHADOW_REC_DLY

SHADOW_REC_DLY governs the system behavior after a system failure or after a shadow set is aborted. The value of the SHADOW_REC_DLY parameter is added to the value of the RECNXINTERVAL parameter to determine how long a system waits before it attempts to manage a merge or copy operation on any shadow sets that it has mounted.

SHADOW_REC_DLY can be used to influence which systems perform recovery operations in an OpenVMS Cluster. To do this, set lower values of SHADOW_REC_DLY on the systems that you prefer to handle recovery operations and higher values on the remaining systems.

SHADOW_REC_DLY is a dynamic parameter; its range is 0 to 65535 seconds. The default value is 20 seconds.

For more information about controlling which systems perform the merge or copy operations, see Section 4.9.5, ''Controlling Which Systems Manage Merge and Copy Operations''.

SHADOW_SYS_DISK

A SHADOW_SYS_DISK parameter value of 1 enables shadowing of the system disk. A value of 0 disables shadowing of the system disk. A value of 4097 enables a minimerge. The default value is 0.

If you enable a minimerge of the system disk, you must also configure your system to write a dump to a non-shadowed, non-system disk of your choice. This is known as dump off system disk (DOSD). For more information on DOSD, see the VSI OpenVMS System Manager's Manual, Volume 2: Tuning, Monitoring, and Complex Systems.

In addition, you must specify the virtual unit number of the system disk shadow set with the SHADOW_SYS_UNIT system parameter, unless the desired virtual unit number is DSA0.

SHADOW_SYS_TMO

You can use the SHADOW_SYS_TMO parameter in two ways: during the booting process and during normal operations. SHADOW_SYS_TMO is a dynamic parameter that you can change on a running system.

During the booting process, you can use this parameter on the first node in the cluster to boot and to create a specific shadow set. If the proposed shadow set is not currently mounted in the cluster, use this parameter to extend the time a booting system waits for all former members of the system disk shadow set to become available.

The second use of this parameter comes into effect once the system successfully mounts the shadow set and begins normal operations. Just as the SHADOW_MBR_TMO parameter controls how long the operating system waits for failing members of an application disk shadow set to rejoin the shadow set, the SHADOW_SYS_TMO parameter controls how long the operating system waits for failing members of a system disk shadow set. After normal operations begin, all nodes using a particular system disk shadow set must have SHADOW_SYS_TMO set to the same value. Therefore, after booting, this parameter applies only to members of the system disk shadow set.

The default value is OpenVMS version specific. You can set a value of up to 65,535 seconds if you want the system to wait longer than the default for all members to join the shadow set.

SHADOW_SYS_UNIT

The SHADOW_SYS_UNIT parameter, which must be used when the SHADOW_SYS_DISK parameter is set to 1, contains the virtual unit number of the system disk.

SHADOW_SYS_UNIT is an integer value. The default value is 0, and the maximum value allowed is 9999. This parameter is effective only when the SHADOW_SYS_DISK parameter has a value of 1. It must be set to the same value on all nodes that boot from a particular system disk shadow set. SHADOW_SYS_UNIT is not a dynamic parameter.

SHADOW_SYS_WAIT

Use the SHADOW_SYS_WAIT parameter to extend the time a booting system waits for all current members of a mounted system disk shadow set to become available to this node. SHADOW_SYS_WAIT is a dynamic parameter that you can change on a running system (for debugging purposes only). The shadow set must already be mounted by at least one other cluster node for this parameter to take effect. The default value is 480 seconds. Change this parameter to a higher value if you want the system to wait longer than the 480-second default for all members to join the shadow set. This parameter has a range of 1 through 65,535 seconds.

3.4. Bitmap System Parameters

The four system parameters for managing minicopy bitmap messages apply equally to managing HBMM bitmap messages. Three parameters are used to manage update traffic between a master bitmap and its corresponding local bitmaps in an OpenVMS Cluster system. The fourth parameter controls whether bitmap system messages are sent to the operator console and, if they are to be sent, the volume of messages. These system parameters are dynamic; they can be changed on a running system. Table 3.4, ''Write Bitmap System Parameters'' lists the bitmap system parameters.

The bitmap system parameters determine whether messages are buffered and packaged into a single System Communications Services (SCS) message to update the master bitmap, or whether each message is sent immediately. The parameters set the upper and lower thresholds of message traffic and the time interval during which the traffic is measured.

The writes issued by each remote node are, by default, sent one at a time in individual SCS messages to the node with the master bitmap. This is known as single-message mode.

| Parameter | Meaning | Unit | Min | Max | Default |
|---|---|---|---|---|---|
| WBM_MSG_INT | In single-message mode, the time interval (in milliseconds) between assessments of the most suitable bitmap message mode. In buffered-message mode, the maximum time (in milliseconds) that a message waits before it is sent. | msec | 10 1 | -1 100 | 10 7 |
| WBM_MSG_UPPER | The upper threshold for the number of messages sent during the test interval (calculated in 100-millisecond windows) that initiates buffered-message mode. | msgs/interval | 0 | -1 | 80 |
| WBM_MSG_LOWER | The lower threshold for the number of messages sent during the test interval (calculated in 100-millisecond windows) that initiates single-message mode. | msgs/interval | 0 | -1 | 20 |
| WBM_OPCOM_LVL | Controls whether bitmap messages are provided to the operator console: 0 means messages are turned off; 1 means messages are provided when bitmaps are started, deleted, and renamed, and when the SCS message mode (buffered or single) changes; 2 means that all messages for a setting of 1 are provided along with detailed messages for debugging purposes. | n/a | 0 | 2 | 1 |
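Switching between single-message and buffered-message modes behaves like a hysteresis loop between WBM_MSG_UPPER and WBM_MSG_LOWER. The following Python sketch is a simplified, hypothetical model: it treats each input element as the message count for one assessment interval and uses strict comparisons; the exact comparison and timing rules inside the shadowing software are assumptions here. The defaults of 80 and 20 come from Table 3.4.

```python
def bitmap_message_modes(counts_per_interval, upper=80, lower=20):
    """Return the message mode in effect after each assessment interval."""
    mode = "single"  # remote writes are sent one at a time by default
    modes = []
    for count in counts_per_interval:
        if mode == "single" and count > upper:
            mode = "buffered"  # heavy traffic: package updates together
        elif mode == "buffered" and count < lower:
            mode = "single"  # traffic has dropped: send immediately again
        modes.append(mode)
    return modes


print(bitmap_message_modes([10, 90, 50, 15, 85]))
# ['single', 'buffered', 'buffered', 'single', 'buffered']
```

The gap between the two thresholds keeps the mode from flipping back and forth when traffic hovers around one value; in the example, a count of 50 leaves whichever mode is current unchanged.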

3.4.1. Setting System Parameters

n.SYSEXE]MODPARAMS.DAT file or the appropriate AUTOGEN include file. After editing the file, execute SYS$UPDATE:AUTOGEN as described in the VSI OpenVMS System Manager's Manual, Volume 2: Tuning, Monitoring, and Complex Systems. If you have an OpenVMS Cluster system, ensure that the system parameters are updated on each node. Example 3.2, ''MODPARAMS.DAT File'' illustrates a MODPARAMS.DAT file that includes assignment statements to set shadowing parameters.

.
.
.
! Volume Shadowing Parameters:
SHADOWING=2          ! Enables phase II shadowing
SHADOW_SYS_DISK=1    ! Enables system disk shadowing
SHADOW_SYS_UNIT=7    ! Specifies 7 as the virtual unit number
                     ! of the system disk
SHADOW_MAX_COPY=4    ! Specifies that 4 parallel copies can occur at one time
SHADOW_MBR_TMO=120   ! Allows 120 seconds for physical members to fail over
                     ! before removal from the shadow set
.
.
.

See the VSI OpenVMS System Manager's Manual, Volume 2: Tuning, Monitoring, and Complex Systems for complete information about invoking AUTOGEN and specifying the appropriate command qualifiers to perform the desired AUTOGEN operations.
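Before running AUTOGEN, you may want to check shadowing assignments against the ranges in Table 3.1. The following Python sketch is a hypothetical pre-check, not part of AUTOGEN: it understands only simple `NAME=value` lines with optional `!` comments, skips comment and ellipsis lines, and does not interpret the ADD_, MIN_, and MAX_ prefixes that MODPARAMS.DAT also supports.

```python
import re

# Documented ranges from Table 3.1 (coded-value parameters use explicit sets).
ALLOWED = {
    "ALLOCLASS": range(0, 256),
    "SHADOWING": {0, 2},
    "SHADOW_MAX_COPY": range(0, 201),
    "SHADOW_MBR_TMO": range(1, 65536),
    "SHADOW_MAX_UNIT": range(10, 10001),
    "SHADOW_SYS_DISK": {0, 1, 4097},
    "SHADOW_SYS_TMO": range(1, 65536),
    "SHADOW_SYS_UNIT": range(0, 10000),
    "SHADOW_SYS_WAIT": range(1, 65536),
}

# NAME=value, optionally followed by a "!" comment.
ASSIGNMENT = re.compile(r"^([A-Z_][A-Z0-9_]*)\s*=\s*(\d+)\s*(?:!.*)?$", re.IGNORECASE)


def check_modparams(text):
    """Return (values, problems) for the shadowing assignments in text."""
    values, problems = {}, []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("!", ".")):
            continue  # blank, comment, or vertical-ellipsis line
        match = ASSIGNMENT.match(line)
        if match is None:
            problems.append(f"unparsed line: {line}")
            continue
        name, value = match.group(1).upper(), int(match.group(2))
        values[name] = value
        allowed = ALLOWED.get(name)
        if allowed is not None and value not in allowed:
            problems.append(f"{name}={value} is outside the documented range")
    if values.get("ALLOCLASS") == 0:
        problems.append("ALLOCLASS=0: shadowing requires a nonzero allocation class")
    return values, problems


# A comment continued on a line without "!" is reported instead of ignored.
sample = """SHADOW_SYS_UNIT=7   ! Specifies 7 as the virtual unit number
of the system disk
"""
print(check_modparams(sample)[1])  # ['unparsed line: of the system disk']
```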

3.4.2. Displaying System Parameters

It is sometimes useful to use the SYSGEN command SHOW to display the values of system parameters. You can display a specific parameter by specifying its name with the SHOW command, or you can use the SHOW/ALL command to display information about all system parameters. (Enter HELP SHOW at the SYSGEN> prompt for more information about the SHOW command.) The following example illustrates how you can check the current, default, minimum, and maximum values for the SHADOWING parameter.

$ MCR SYSGEN
SYSGEN> SHOW SHADOWING
Parameter Name            Current    Default    Minimum    Maximum Unit  Dynamic
--------------            -------    -------    -------    ------- ----  -------
SHADOWING                       2          0          0          2 Coded-value
SYSGEN>

3.5. Dynamic Volume Expansion

The basis of dynamic volume expansion is the one-time allocation of extra bitmap space to the maximum size ever used on this volume. The current limit is 1 TB. The one-time allocation of extra bitmap space can be performed either at disk initialization time with the INITIALIZE/LIMIT command or on a mounted volume with the SET VOLUME/LIMIT command. By allocating extra bitmap space, you can later expand the logical volume size while the device is mounted by using the SET VOLUME volume-name/SIZE=x command. (The logical volume size is the amount of disk space allocated to the file system.) For example, you might prepare a disk for 1 TB of storage (by allocating 1 TB of bitmap space) but use only 18 GB today. Next year, you might increase it to 36 GB, and so on, until you reach the maximum of 1 TB. By allocating the maximum size for storage on the disk, you can later increase the size of the volume without stopping the application or dismounting the disk. To use the SET VOLUME/LIMIT command to allocate extra bitmap space, the disk must be mounted privately. However, once the space is allocated, the volume can be expanded while the disk is mounted as shareable (MOUNT/SHARE).

You can allocate additional bitmap space regardless of whether the physical volume has room for expansion.

Note

After you expand a volume, you must disable and re-enable HBMM so that the write bitmaps are re-created to encompass the new volume size. Otherwise, merges might take longer than expected because the expansion area is subject to a complete merge.

The following command allocates extra bitmap space on a new volume:

$ INITIALIZE/LIMIT $1$DGAnnn: label ! Allocates bitmap space for 1 TB

The following command allocates extra bitmap space on a mounted volume:

$ SET VOLUME/LIMIT $1$DGAnnn:

The default /LIMIT size for both commands is 1 TB, which is also the maximum size currently supported on OpenVMS. In special circumstances, you may want to specify less.

When you use the /LIMIT qualifier with the INITIALIZE or SET VOLUME command, you increase the BITMAP.SYS file by a few hundred blocks, which gives you much greater flexibility in the future.

When additional physical storage is made available (either by adding a larger device to the shadow set and removing the smaller member, or by increasing the size on the storage subsystem), you can then enter the following command to increase the volume size:

$ SET VOLUME $1$DGAn/SIZE=x

In this command syntax, x represents the number of blocks.

Note

If the volume of a shadow set is expanded to be larger than the physical size of a member, the smaller member can no longer be added back to the shadow set.
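The two limits on SET VOLUME/SIZE described above, the bitmap space reserved with /LIMIT and the physical size of the members, can be checked together. This Python sketch is a hypothetical planning aid; the function name, the member names, and the treatment of 1 TB as 2**40 bytes (2**31 blocks of 512 bytes) are assumptions, not values taken from OpenVMS.

```python
BLOCK_BYTES = 512
# Assumption: 1 TB treated as 2**40 bytes for this illustration.
ONE_TB_IN_BLOCKS = 2**40 // BLOCK_BYTES


def plan_expansion(new_size_blocks, limit_blocks, member_blocks):
    """Return (within_limit, members_too_small) for a proposed volume size.

    within_limit: the new size fits in the bitmap space reserved with /LIMIT.
    members_too_small: members that could not be added back afterwards,
    because the volume would be larger than their physical size.
    """
    within_limit = new_size_blocks <= limit_blocks
    too_small = sorted(name for name, size in member_blocks.items()
                       if size < new_size_blocks)
    return within_limit, too_small


members = {"$1$DGA10:": 140_000_000, "$1$DGA20:": 70_000_000}
print(plan_expansion(100_000_000, ONE_TB_IN_BLOCKS, members))
# (True, ['$1$DGA20:'])
```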

3.5.1. Using the /SIZE Qualifier With the INITIALIZE Command

You can use the INITIALIZE/SIZE command to create a file system that is smaller than the current physical size of the volume. If you have a 36-GB disk and you anticipate adding an 18-GB disk in the future, you can initialize the disk with the following command:

$ INIT/SIZE=36000000 $1$DGAn: label

In this example, 36,000,000 blocks of 512 bytes each is approximately 18 GB, the size of the smaller disk you plan to add.
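The block arithmetic behind the /SIZE value depends on whether a gigabyte is taken as 10**9 or 2**30 bytes; the 36,000,000-block figure above falls between the two. This short Python helper (hypothetical, for illustration only) converts gigabytes to 512-byte blocks both ways.

```python
BLOCK_BYTES = 512  # disk block size used in this section


def gb_to_blocks(gb, binary=False):
    """Number of 512-byte blocks in gb gigabytes (decimal or binary GB)."""
    bytes_per_gb = 2**30 if binary else 10**9
    return gb * bytes_per_gb // BLOCK_BYTES


print(gb_to_blocks(18))               # 35156250
print(gb_to_blocks(18, binary=True))  # 37748736
```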

3.5.2. When to Increase the Expansion Limit on Each Volume

If you are adding a new volume to your system, increase the expansion limit on the volume when you initialize the disk with INITIALIZE/LIMIT. To increase the expansion limit on volumes already in use, plan to increase the expansion limit during the next convenient maintenance period using the SET VOLUME/LIMIT command.

If INITIALIZE/LIMIT is used, the default value of the /CLUSTER_SIZE qualifier is 8. This value controls how much space the bitmap occupies. You can later expand the volume (using the SET VOLUME volume-name/SIZE=x command) while the device is still mounted if your storage requirements grow unexpectedly.

3.6. Booting from a System Disk Shadow Set

When multiple nodes boot from a common system disk shadow set, ensure that all nodes specify a physical disk that is a source member of the system disk shadow set.

If the boot device was not formerly a member of a shadow set, the system creates a new shadow set containing only the boot device. You can manually mount additional disks into the shadow set after the system boot procedure completes.

If the boot device is already a valid member of an existing shadow set (for instance, if it is already an up-to-date member of a shadow set mounted by another node in the cluster), the shadowing software automatically locates all the members of the set.

When booting the first node in a cluster, information stored in the SCB of the physical boot device is used to locate other members of the shadow set and to create the complete system disk shadow set.

The shadowing software detects boot attempts from a physical disk that is inconsistent with currently active shadow set members. In this case, the boot attempt detects the existence of the other shadow set members and determines (using the information in the SCB) that the boot device is not a valid member of the shadow set. When this occurs, the boot attempt fails with a SHADBOOTFAIL bugcheck message on the system console, and a dump file is written to the boot device.

The system bugchecks because it can boot only from a currently valid member of the system disk shadow set. If the boot device fails out of or is otherwise removed from the system disk shadow set, you must either mount the boot device back into the shadow set (and wait for the copy operation to complete) or modify the boot command file to boot from a current shadow set member.

Caution

Booting from an out-of-date member can cause current data to be overwritten. Consider the following sequence of events:

1. A system is operating normally with a multiple-member system disk shadow set.
2. The original boot device is removed from the shadow set but remains as a functioning disk.
3. The system continues with the remaining members.
4. The system is shut down or it fails.
5. The system is rebooted using the original boot device (which is now out of date).
6. The boot process determines that the boot device is not consistent with the other shadow set members and, therefore, does not add them into the shadow set. This behavior preserves the up-to-date data on the other members.
7. A MOUNT command in the startup procedure adds the other shadow set members to the system disk shadow set. A copy operation from the boot device to the other shadow set members is initiated, thereby overwriting them.

The following console message indicates that the system disk shadow set no longer contains the boot device:

virtual-unit: does not contain the member named to VMB. System may not reboot.

After the boot device has been repaired, manually add it back into the system disk shadow set.
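The boot rules in this section can be summarized as a decision procedure. The following Python sketch is a simplified model only: the enum, the function, and its three Boolean inputs are hypothetical stand-ins for information the shadowing software derives from the SCB of the boot device and from the currently active members, and the split between the last two outcomes is an interpretation of the text above. It is not how the bootstrap is implemented.

```python
from enum import Enum


class BootOutcome(Enum):
    NEW_SET = "create a new shadow set containing only the boot device"
    FULL_SET = "locate all members and form the complete system disk shadow set"
    SHADBOOTFAIL = "bugcheck and write a dump file to the boot device"
    BOOT_DEVICE_ONLY = "boot without adding the inconsistent members"


def boot_outcome(formerly_member: bool,
                 consistent_with_members: bool,
                 members_active_elsewhere: bool) -> BootOutcome:
    """Simplified, hypothetical model of booting from a system disk shadow set."""
    if not formerly_member:
        # The boot device was never in a shadow set: start a one-member set.
        return BootOutcome.NEW_SET
    if consistent_with_members:
        # The boot device is a valid, up-to-date member.
        return BootOutcome.FULL_SET
    if members_active_elsewhere:
        # The boot device is inconsistent with currently active members.
        return BootOutcome.SHADBOOTFAIL
    # The Caution scenario: the stale boot device boots alone, and a later
    # MOUNT of the other members would copy the stale data over them.
    return BootOutcome.BOOT_DEVICE_ONLY
```

The last branch is the dangerous one: the boot itself succeeds, so the risk appears only when the startup procedure mounts the other members.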

3.7. Booting Satellite Nodes from a Served, System Disk Shadow Set

The OpenVMS operating system uses the Maintenance Operations Procedure (MOP) protocol to boot satellite nodes. MOP protocol support is provided by either the LANACP process controlled by the LANCP utility or by DECnet software controlled by the NCP or NCL utilities. You must specify the name of the satellite's system disk using LANCP, NCP, or NCL commands (depending on which you are using to boot satellites). If the system disk is shadowed, the commands must specify the name of the virtual unit or the virtual unit logical name rather than the name of any physical unit.

The MOP server accesses the system disk shadow set (using the virtual unit defined) to perform downline load operations to the satellite. These operations include downline loading the physical boot device name to the satellite. When downline loading is complete, the satellite is able to connect to an MSCP server and access the physical boot device directly. The satellite's shadowing parameters are then used in the same way as a non-satellite node.

You can use the SYS$MANAGER:CLUSTER_CONFIG_LAN.COM procedure or the SYS$MANAGER:CLUSTER_CONFIG.COM procedure to set MOP server, MSCP server, and satellite parameters automatically. When configuring satellite nodes with the cluster configuration command procedure, you can specify a shadowed system disk virtual unit as the satellite's system disk. The cluster configuration command procedure then automatically sets the satellite's system parameters SHADOW_SYS_DISK and SHADOW_SYS_UNIT for you. The values of these parameters are transferred automatically to the system parameter file ALPHAVMSSYS.PAR for Alpha satellites. (See the VSI OpenVMS Cluster Systems Manual for more information about using this command procedure.)

The following LANCP example displays the MOP downline load service characteristics of the LAN devices in the permanent database:

$ MCR LANCP
LANCP> LIST DEVICE/MOPDLL

Device Listing, permanent database:
  --- MOP Downline Load Service Characteristics ---
Device    State      Access Mode   Client               Data Size
------    -----      -----------   ------               ---------
ESA0      Disabled   NoExlusive    NoKnownClientsOnly   246 bytes
FCA0      Disabled   NoExlusive    NoKnownClientsOnly   246 bytes

LANCP> EXIT

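For DECnet-Plus commands, see the DECnet-Plus documentation.

The following NCP (DECnet Phase IV) example shows the characteristics of satellite node HIWAY1, whose load assist parameter specifies the virtual unit DSA1: rather than a physical unit:

$ MCR NCP
NCP> SHOW NODE HIWAY1 CHAR

Node Volatile Characteristics as of 12-MAR-2000 14:53:59

Remote node = 19.891 (HIWAY1)

    Hardware address        = 03-03-03-03-03-BC
    Tertiary loader         = SYS$SYSTEM:TERTIARY_VMB.EXE
    Load Assist Agent       = SYS$SHARE:NISCS_LAA.EXE
    Load Assist Parameter   = DSA1:

NCP> EXIT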

You may need to adjust the settings of the SHADOW_MBR_TMO and SHADOW_MAX_COPY parameters on satellite nodes. These parameters are not automatically set by the cluster configuration command procedure. See Section 3.3, "Volume Shadowing Parameters" for more information.

The cluster configuration command procedure automatically enables shadowing on satellite nodes when you want to shadow the system disk. If you do not want to shadow the system disk but need to enable shadowing, you must do so manually after the cluster configuration command procedure completes. Set shadowing parameters in the satellite node's MODPARAMS.DAT file and execute AUTOGEN as described in Section 3.3, "Volume Shadowing Parameters" and in Section 3.4.1, "Setting System Parameters".

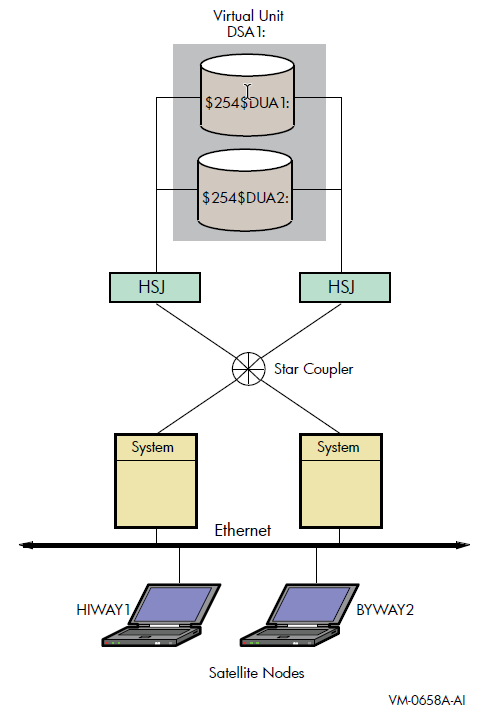

Figure 3.1, "Booting Satellite Nodes" shows two satellite nodes with shadowed system disk volumes located in an OpenVMS Cluster system configuration. In this configuration, the devices $254$DUA1 and $254$DUA2 make up a two-member shadow set. The satellites HIWAY1 and BYWAY2 access shadow set members across the Ethernet via the MSCP servers in the two boot nodes.

When a satellite node in Figure 3.1, "Booting Satellite Nodes" is booted, the boot node (MOP server) downline loads initial bootstrap code from the virtual unit DSA1. The boot node points the satellite to use either $254$DUA1 or $254$DUA2 as a boot device for the remainder of the boot process. Note that the boot node must have the virtual unit mounted. The satellite then forms the system disk shadow set locally according to the shadow set membership information stored in the SCB on the boot device.

The following SHOW DEVICES command displays how the shadow set appears after the satellite node HIWAY1 is booted. In this example, the physical disk devices are accessed through the MSCP server node BTNODE.

$ SHOW DEVICES DSA1

Device                 Device            Error    Volume          Free  Trans Mnt
 Name                  Status            Count     Label         Blocks Count Cnt
DSA1:                  Mounted               0  MYVOLUME         181779   194  37
$254$DUA1:  (BTNODE)   ShadowSetMember       0  (member of DSA1:)
$254$DUA2:  (BTNODE)   ShadowSetMember       0  (member of DSA1:)
$

Chapter 4. Creating and Managing Shadow Sets Using DCL Commands

This chapter describes how to create, mount, dismount, and dissolve shadow sets using interactive DCL commands. It also describes how to use the DCL command SET SHADOW to manage merge and copy operations and to specify management attributes for shadow set members located at different sites in a multiple-site OpenVMS Cluster system. In addition, it describes how to use the DCL command SHOW DEVICE and the lexical function F$GETDVI to access current information about the state of shadow sets.

Volume Shadowing for OpenVMS improves data availability by ensuring that corresponding logical block numbers (LBNs) on multiple disk volumes contain the same information. Upon receiving a command to mount or dismount disks in a shadow set, the volume shadowing software may need to reconcile data differences and ensure that corresponding LBNs contain the same information.

An understanding of the copy and merge operations used for data reconciliation is essential to the discussions in this chapter. Therefore, you may find it helpful to refer to Chapter 6, "Ensuring Shadow Set Consistency" to understand how Volume Shadowing for OpenVMS ensures data availability and consistency during changes in shadow set membership.

4.1. Allocating Devices

Before you mount a device with the MOUNT command, you can optionally allocate it. Use the DCL command ALLOCATE to provide your process with exclusive access to a physical device until you either deallocate the device or terminate your process. Optionally, you can associate a logical name with the device. The format for the ALLOCATE command is as follows:

ALLOCATE device-name[:] [logical-name[:]]

4.2. Creating a Shadow Set

Use the MOUNT command with the /SHADOW qualifier to mount at least one physical disk into a shadow set and assign a virtual unit name to the set, as shown in Example 4.1, "Creating a Shadow Set".

$ MOUNT DSA23:/SHADOW=$4$DUA9: volume-label logical-name

The parts of this command are as follows:

- DSA23: Use the DSAn: format to name the shadow set virtual unit, where n represents a unique number from 0 through 9999. If you do not include a number after the DSA prefix, … Each virtual unit number must be unique across the system, regardless of whether the unit is mounted for public use (mounted with the /SYSTEM qualifier) or for private use.
- /SHADOW: The /SHADOW qualifier names the physical disk to mount as a member of the shadow set.
- $4$DUA9: Use a non-zero allocation class for each physical device in the shadow set. Use the allocation class naming format $allocation-class$ddcu, where allocation-class is the numeric allocation class, dd is the device type, c is the controller designation, and u is the unit number. Note that you cannot use more than 3 letters for the ddc portion of the name. See VSI OpenVMS Cluster Systems Manual for more information about allocation classes.
- volume-label: Specify a 1- to 12-character volume label for the virtual unit.
- logical-name: Optionally, specify a 1- to 255-alphanumeric-character logical name string for the shadow set.
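The naming rules in Example 4.1 can be checked before you enter the MOUNT command, for instance in a script that builds the command line. The following Python sketch is a hypothetical helper, not an OpenVMS utility. It accepts only explicitly numbered DSAn: names and member names with a two-letter device type and a one-letter controller, checks only the lengths of the label and logical name, and does not check any upper bound on allocation class values.

```python
import re

# DSAn: where n is 0 through 9999 (at most four digits); trailing colon optional.
VIRTUAL_UNIT = re.compile(r"DSA(\d{1,4}):?")
# $allocation-class$ddcu: non-zero class, dd = device type, c = controller, u = unit.
MEMBER = re.compile(r"\$([1-9]\d*)\$([A-Z]{2})([A-Z])(\d+):?")


def is_virtual_unit(name: str) -> bool:
    # DCL device names are case-insensitive, so compare in upper case.
    return VIRTUAL_UNIT.fullmatch(name.upper()) is not None


def is_member_name(name: str) -> bool:
    return MEMBER.fullmatch(name.upper()) is not None


def is_volume_label(label: str) -> bool:
    return 1 <= len(label) <= 12   # length check only


def is_logical_name(name: str) -> bool:
    return 1 <= len(name) <= 255   # length check only
```

For example, is_virtual_unit("DSA23:") and is_member_name("$4$DUA9:") both return True, while "DSA10000:" and "$0$DUA9:" are rejected.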

In addition, you can specify /SYSTEM, /GROUP, or /CLUSTER to make the shadow set available to all users of a system, all members of a group, or all nodes in a cluster on which shadowing is enabled.

To create a three-member shadow set, you can add two members to an existing one-member shadow set in a single MOUNT command. This method optimizes the I/O operation because both members are copied at the same time. (See the example in Section 4.4.4, "Creating a Shadow Set With /SYSTEM and With /CLUSTER".)

You can also streamline the process of creating a shadow set by initializing multiple devices in one command, using INITIALIZE/SHADOW/ERASE, as described in Section 4.3, "Using INITIALIZE/SHADOW/ERASE to Form a Shadow Set".

Upon receiving a command to create a shadow set, the volume shadowing software may perform a copy or a merge operation to reconcile data differences. If you are not sure which disks might be targets of copy operations, you can specify the /CONFIRM or /NOCOPY qualifier as a precaution against overwriting important data when you mount a disk. These and other MOUNT command qualifiers are discussed in Section 4.4, "MOUNT Command Qualifiers for Shadowing".

4.3. Using INITIALIZE/SHADOW/ERASE to Form a Shadow Set

You can use the DCL command INITIALIZE with the /SHADOW and /ERASE command qualifiers to initialize multiple members of a future shadow set. Initializing multiple members in this way eliminates the requirement of a full copy when you later create a shadow set.

The INITIALIZE command with the /SHADOW and /ERASE qualifiers performs the following operations:

- Formats up to six devices with one command, so that any three can be subsequently mounted together as members of a new host-based shadow set.
- Writes a label on each volume.
- Deletes all information from the devices except for the system files and leaves each device with identical file structure information.

All former contents of the disks are lost. You can then mount up to three of the devices that you have initialized in this way as members of a new host-based shadow set.

4.3.1. Benefits and Side Effects of Using /ERASE

VSI strongly recommends that you use the /ERASE qualifier. Using the /ERASE qualifier substantially reduces the work of a subsequent merge operation.

If you omit the /ERASE qualifier, then the portions of the volume that do not contain file system data structures contain indeterminate data.
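The effect of /ERASE on a later merge can be illustrated with a toy model. In the following Python sketch, which is a conceptual illustration and not the shadowing software's merge algorithm, each member is a list of 512-byte blocks, and a merge has work to do only at LBNs where the members disagree. The function names, the disk size, and the number of system-file blocks are hypothetical.

```python
from __future__ import annotations

import random

BLOCK_SIZE = 512


def init_member(n_blocks: int, n_system_blocks: int, erase: bool,
                rng: random.Random) -> list[bytes]:
    """Model one member after INITIALIZE/SHADOW, with or without /ERASE."""
    # Identical file structure information is written to every member.
    system = [bytes([i % 256]) * BLOCK_SIZE for i in range(n_system_blocks)]
    rest = []
    for _ in range(n_blocks - n_system_blocks):
        if erase:
            rest.append(bytes(BLOCK_SIZE))  # erased: zeros on every member
        else:
            # Not erased: leftover, indeterminate data that differs per disk.
            rest.append(bytes(rng.getrandbits(8) for _ in range(BLOCK_SIZE)))
    return system + rest


def differing_lbns(members: list[list[bytes]]) -> list[int]:
    """LBNs at which the members do not all contain the same data."""
    return [lbn for lbn, blocks in enumerate(zip(*members)) if len(set(blocks)) > 1]


if __name__ == "__main__":
    rng = random.Random(1)
    erased = [init_member(100, 10, True, rng) for _ in range(3)]
    print(len(differing_lbns(erased)))    # 0
    unerased = [init_member(100, 10, False, rng) for _ in range(3)]
    print(len(differing_lbns(unerased)))  # nonzero: leftover data differs
```

In the model, the erased members agree at every LBN, so there is nothing to reconcile; without /ERASE, only the identical system-file blocks agree.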