Guide to OpenVMS File Applications

Operating System and Version:

- VSI OpenVMS IA-64 Version 8.4-1H1 or higher
- VSI OpenVMS Alpha Version 8.4-2L1 or higher

Preface

This document is intended for application programmers and designers who write programs that use OpenVMS RMS files.

1. About VSI

VMS Software, Inc. (VSI) is an independent software company licensed by Hewlett Packard Enterprise to develop and support the OpenVMS operating system.

2. Intended Audience

This document is intended for application programmers and designers who create or maintain application programs that use RMS files.

You may also read this document to gain a general understanding of the file- and record-processing options available on an OpenVMS system.

3. Document Structure

Chapter 1, "Introduction" provides general information on file, disk, and magnetic tape concepts and brief overviews of available media, RMS, FDL, and resource requirements.

Chapter 2, "Choosing a File Organization" describes the file organizations and record access modes to help you choose the correct file organization for your application.

Chapter 3, "Performance Considerations" discusses general performance considerations and specific decisions you can make in the design of your application.

Chapter 4, "Creating and Populating Files" describes procedures necessary to create files, populate files with records, and protect files.

Chapter 5, "Locating and Naming Files on Disks" describes file specifications and the procedures needed to use them.

Chapter 6, "Advanced Use of File Specifications" describes the rules of file specification parsing and advanced file specification use. Information about rooted directories is also provided.

Chapter 7, "File Sharing and Buffering" describes file sharing and buffering, including record locking and the use of global buffers.

Chapter 8, "Record Processing" describes aspects of record processing, including record access modes; synchronous and asynchronous record operations; and retrieving, inserting, updating, and deleting records.

Chapter 9, "Run-Time Options" describes how to specify run-time options and summarizes the run-time options available when a file is opened and closed and when records are retrieved, inserted, updated, and deleted.

Chapter 10, "Maintaining Files" describes procedures needed to maintain properly tuned files, with the emphasis on efficiently maintaining indexed files.

Appendix A, "Edit/FDL Utility Optimization Algorithms" describes the algorithms used by the Edit/FDL utility.

4. Related Documents

The VSI OpenVMS User's Manual describes the use of the operating system for a general audience.

Programmers should be familiar with the appropriate documentation for the high-level language in which the application will be written.

System managers should be familiar with the VSI OpenVMS System Manager's Manual, a task-oriented guide to managing an OpenVMS system.

5. OpenVMS Documentation

The full VSI OpenVMS documentation set can be found on the VMS Software Documentation webpage at https://docs.vmssoftware.com.

6. VSI Encourages Your Comments

You may send comments or suggestions regarding this manual or any VSI document by electronic mail to the following Internet address: <docinfo@vmssoftware.com>. Users who have VSI OpenVMS support contracts through VSI can contact <support@vmssoftware.com> for help with this product.

7. Conventions

| Convention | Meaning |
|---|---|
| Ctrl/x | A sequence such as Ctrl/x indicates that you must hold down the key labeled Ctrl while you press another key or a pointing device button. |
| PF1 x | A sequence such as PF1 x indicates that you must first press and release the key labeled PF1 and then press and release another key or a pointing device button. |
| Return | In examples, a key name enclosed in a box indicates that you press a key on the keyboard. (In text, a key name is not enclosed in a box.) |
| ... | A horizontal ellipsis in examples indicates one of the following possibilities: |
| . . . | A vertical ellipsis indicates the omission of items from a code example or command format; the items are omitted because they are not important to the topic being discussed. |
| ( ) | In command format descriptions, parentheses indicate that you must enclose the options in parentheses if you choose more than one. |
| [ ] | In command format descriptions, brackets indicate optional choices. You can choose one or more items or no items. Do not type the brackets on the command line. However, you must include the brackets in the syntax for OpenVMS directory specifications and for a substring specification in an assignment statement. |
| [ \| ] | In command format descriptions, vertical bars separate choices within brackets or braces. Within brackets, the choices are options; within braces, at least one choice is required. Do not type the vertical bars on the command line. |
| { } | In command format descriptions, braces indicate required choices; you must choose at least one of the items listed. Do not type the braces on the command line. |
| bold text | This typeface represents the introduction of a new term. It also represents the name of an argument, an attribute, or a reason. |
| italic text | Italic text indicates important information, complete titles of manuals, or variables. Variables include information that varies in system output (Internal error number), in command lines (/PRODUCER=name), and in command parameters in text (where dd represents the predefined code for the device type). |
| UPPERCASE TEXT | Uppercase text indicates a command, the name of a routine, the name of a file, or the abbreviation for a system privilege. |
| Monospace type | Monospace type indicates code examples and interactive screen displays. In the C programming language, monospace type in text identifies the following elements: keywords, the names of independently compiled external functions and files, syntax summaries, and references to variables or identifiers introduced in an example. |
| - | A hyphen at the end of a command format description, command line, or code line indicates that the command or statement continues on the following line. |
| numbers | All numbers in text are assumed to be decimal unless otherwise noted. Nondecimal radixes (binary, octal, or hexadecimal) are explicitly indicated. |

Chapter 1. Introduction

This chapter illustrates how basic data management concepts are applied by the OpenVMS Record Management Services (OpenVMS RMS), referred to hereafter as RMS. RMS is the data management subsystem of the operating system. In combination with OpenVMS operating systems, RMS allows efficient and flexible storage, retrieval, and modification of data on disks, magnetic tapes, and other devices. RMS may be implemented through the File Definition Language (FDL) interface or through high-level language, program-specific processing options. Although RMS supports devices such as line printers, terminals, and card readers, the purpose of this guide is to introduce you to RMS record keeping on magnetic tape and disk.

In contrast to magnetic tape storage, disk storage allows faster data access while providing the same virtually limitless storage capacity. Disks provide faster access because the computer can locate files and records selectively without first searching through intervening data. This faster access time makes disks the most appropriate medium for online file processing applications.

1.1. File Concepts

The following file concepts are discussed in this manual:

- Files
- Records
- Fields
- Bytes and bits
- Access modes
- Record formats
- Maximum RMS file size

A computer file is an organized collection of data stored on a mass storage volume and processed by a central processing unit (CPU). Data files are organized to accommodate the processing of data within the file by an application program. The basic unit of electronic data processing is the record. A record is a collection of related data that the application program processes as a functional entity. For example, all the information about an employee, such as name, street address, city, and state, constitutes a personnel record. Records are made up of fields, which are sets of contiguous bytes. For example, a person's name or address might be a field. A byte is a group of binary digits (bits) that are used to represent a single character. You can also think of a field or an item as a group of bytes in a record that are related in some way.

The records in a file must be formatted uniformly. That is, they must conform to some defined arrangement of the record fields including the field length, field location, and the field data type (character strings or binary integers, for instance). To process file data, an application must know the arrangement of the record fields, especially if the application intends to modify existing records or to add new records to the file.

|

Access Method |

Description |

|---|---|

|

Sequential Access |

Records are stored or retrieved one after another starting at a particular point in the file and continuing in order through the file. |

|

Relative Record Number Access |

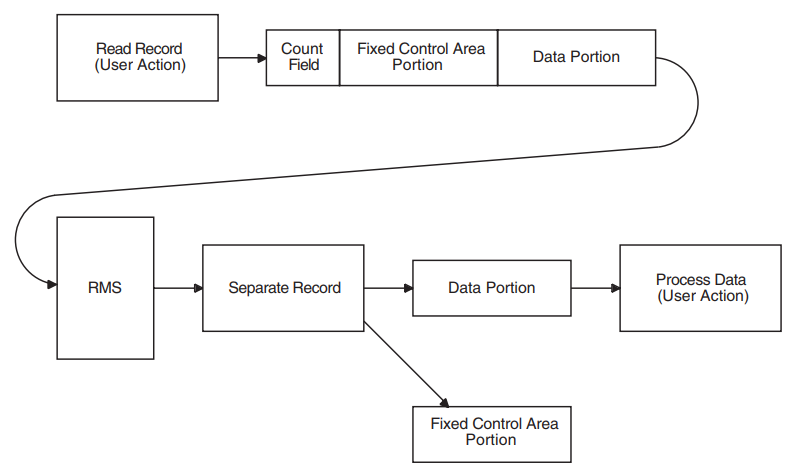

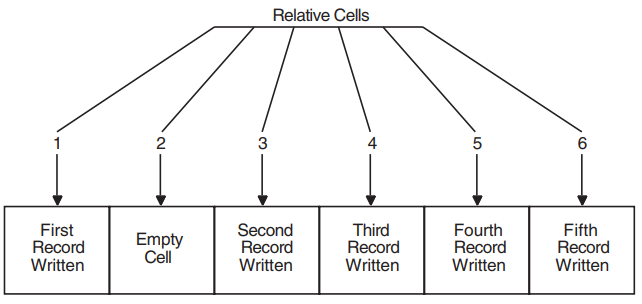

Records are stored and retrieved by relative record number or by file address. Records occupy cells of equal length, and each cell is assigned a relative record number, which represents the cell's position relative to the beginning of the file. |

|

Record File Address Access |

When a record is accessed directly by its file address, the distinction is made by its unique location in the file; that is, its record file address (RFA). |

|

Indexed Access |

Indexed file records are stored and retrieved by a key in the data record. The desired records are usually accessed directly and then retrieved sequentially in sorted order using a key embedded in the record. |

|

Record Format |

Description |

|---|---|

|

Fixed length |

All records are the same length. |

|

Variable length |

Records vary in length. Each record is prefixed with a count byte that contains the number of bytes in the record. The count byte may be either MSB- or LSB-formatted. |

|

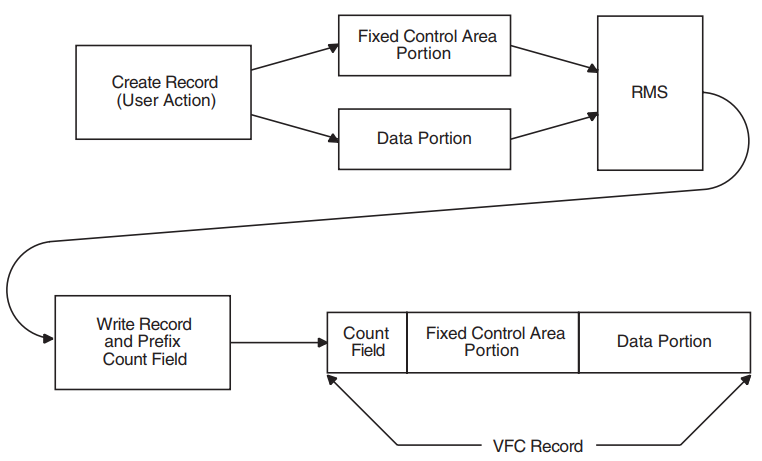

Variable record length with fixed-length control |

Records do not have to be the same length, but each includes a fixed-length control field that precedes the variable-length data portion. |

|

Stream |

Records are delimited by special characters or character sequences called terminators. Records with stream format are interpreted as a continuous sequence, or stream, of bytes. The carriage return and the line feed characters are commonly used as terminators. |
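As a rough illustration of the difference between count-prefixed and terminator-delimited records, the following Python sketch packs and unpacks records in both styles. The 2-byte count width, the function names, and the sample data are assumptions made for this example; the sketch does not reproduce the exact on-disk layout that RMS uses.

```python
import struct

def pack_variable(records, count_fmt="<H"):
    """Prefix each record with a count holding its length in bytes.

    "<H" is a 2-byte LSB-first count and ">H" is MSB-first; the 2-byte
    width is an assumption made for this illustration.
    """
    out = bytearray()
    for rec in records:
        out += struct.pack(count_fmt, len(rec)) + rec
    return bytes(out)

def unpack_variable(data, count_fmt="<H"):
    """Walk the buffer, reading a count and then that many data bytes."""
    size = struct.calcsize(count_fmt)
    records, pos = [], 0
    while pos < len(data):
        (length,) = struct.unpack_from(count_fmt, data, pos)
        pos += size
        records.append(data[pos:pos + length])
        pos += length
    return records

def split_stream(data, terminator=b"\r\n"):
    """Split a byte stream into records at each terminator sequence."""
    parts = data.split(terminator)
    if parts and parts[-1] == b"":
        parts.pop()  # drop the empty piece after the final terminator
    return parts
```

Note that a count-prefixed record may contain any byte values, including the terminator characters, whereas a stream record cannot contain its own terminator.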

When you design a file, you specify the file storage medium and the file and record characteristics directly through your application program or indirectly using an appropriate utility. Chapter 2, "Choosing a File Organization" outlines RMS file organization, record access modes, and record characteristics in detail.

After RMS creates the file, the application program must consider these record characteristics when storing, retrieving, and modifying records. See Chapter 4, "Creating and Populating Files" for information about creating files, populating files with records, and protecting files. See Chapter 8, "Record Processing" for information about record processing, including record access modes; synchronous and asynchronous record operations; and retrieving, inserting, updating, and deleting records.

The maximum size of an RMS file has no built-in limitation other than the 32-bit virtual block number (VBN): every block in a file must have a VBN that can be described in 32 bits. The maximum size of an RMS file is therefore about 4.3 billion (4,294,967,295) blocks. At 512 bytes per block, this is equivalent to approximately 2 terabytes.
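The arithmetic behind this limit can be checked with a short Python sketch. The function name and its parameters are illustrative only; the 512-byte block size is the Files–11 block size described later in this chapter.

```python
def max_rms_file_size(block_size=512, vbn_bits=32):
    """Return (max_blocks, max_bytes) for a file addressed by a VBN of vbn_bits bits."""
    max_blocks = 2**vbn_bits - 1        # largest VBN that fits in 32 bits
    max_bytes = max_blocks * block_size
    return max_blocks, max_bytes

blocks, size_in_bytes = max_rms_file_size()
print(blocks)                  # number of 512-byte blocks
print(size_in_bytes / 2**40)   # size expressed in units of 2**40 bytes
```

Under these assumptions the result falls 512 bytes short of exactly 2 × 2^40 bytes, which is why the limit is quoted as 2 terabytes.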

1.2. Disk Concepts

This section describes disk concepts as an aid to understanding how a disk may be configured to enhance data access for improved performance. Disk structures may be defined as either logical or physical and the two types interact with each other to some degree. That is, you cannot manipulate a logical structure without considering the effect on a corresponding physical structure.

RMS disk files reside on Files–11 On-Disk Structure (ODS) disks. Files–11 is the name of the disk structures supported by the operating system. Files–11 disk structures are further characterized as being either on-disk structures or CD–ROM volume and file structures. The Files–11 structure is a hierarchical organization of files, their data, and the directories needed to gain access to them. The OpenVMS file system implements the Files–11 on-disk structure and provides random access to the files located on the disk or CD-ROM. Users can read from and write to disks. They can read from CD-ROMs and, if they have a CD-Recordable (CD-R or CD-RW) drive, they can write (or burn) their own CD-ROMs.

On-disk structures include levels 1, 2, and 5. Levels 3 and 4 are internal names for ISO and High Sierra CD formats. ODS-1 and ODS-2 structures have been available on OpenVMS systems for some time. Beginning with OpenVMS Version 7.2 on Alpha systems, you can also specify ODS-5 to format disks.

| Structure | Disk or CD | Description |
|---|---|---|
| ODS-1 | Both | VAX only; use for RSX compatibility: RSX–11M, RSX–11D, RSX–11M–PLUS, and IAS operating systems. |
| ODS-2 | Both | Default disk structure of the OpenVMS operating system; use to share data between VAX and Alpha with full compatibility. |
| ODS-5 | Both | Alpha only; superset of ODS-2; use when working with systems like NT that need expanded character sets or directories deeper than ODS-2. |
| ISO 9660 CD | CD | ISO format files: read by systems that do not have ODS-2 capability such as PCs, NT systems, and Macintoshes. |
| Dual format | CD | Single volume with both ISO 9660 CD and Files-11 CD formats. Files are accessible to both formats whose directories might point to the same data. |
| Foreign | Both | A structure that is not related to a Files–11 structure. When you specify a foreign structure, you make the contents of a volume known to the system, but the system makes no assumptions about its file structure. The application is responsible for supplying a structure. |

| Characteristic | ODS-1 (VAX only) | ODS-2 | ODS-5 |
|---|---|---|---|
| File names | 9.3 | 39.39 | 238 bytes, including the dot. For Unicode, that is 119 characters including the dot. |
| Character set | Uppercase alphanumeric | Uppercase alphanumeric plus hyphen (-), dollar sign ($), and underscore (_) | ISO Latin-1, Unicode |
| File versions | 32,767 limit; version limits are not supported | 32,767 limit; version limits are supported | 32,767 limit; version limits are supported |
| Directories | No hierarchies of directories and subdirectories; directory entries are not ordered | Alpha: 255 levels; VAX: 8 levels (with rooted logical, 16) | Alpha: 255 levels; VAX: 8 levels (with rooted logical, 16) |
| System disk | Cannot be an ODS-1 volume | Can be an ODS-2 volume | Can be an ODS-5 volume |
| OpenVMS Cluster access | Local access only; files cannot be shared across a cluster | Files can be shared across a cluster | Files can be shared across a cluster. However, only computers running OpenVMS Version 7.2–EFT1 or later can mount ODS-5 disks. VAX computers running Version 7.2–EFT1 or later can see only files with ODS-2 style names. |
| Disk | Unprotected objects | Protected objects | Protected objects |
| Disk quotas | Not supported | Supported | Supported |
| Multivolume files and volume sets | Not supported | Supported | Supported |
| Placement control | Not supported | Supported | Supported |
| Caches | No caching of file header blocks, file identification slots, or extent entries | Caching of file header blocks, file identification slots, and extent entries | Caching of file header blocks, file identification slots, and extent entries |
| Clustered allocation | Not supported | Supported | Supported |
| Backup home block | Not supported | Supported | Supported |
| Protection code E | E means "extend" for the RSX–11M operating system but is ignored by OpenVMS | E means "execute access" | E means "execute access" |
| Enhanced protection features (for example, access control lists) | Not supported | Enhanced protection features supported | Enhanced protection features supported |
| RMS journaling | Not supported | Supported | Supported |

Note

Future enhancements to OpenVMS software will be based primarily on structure levels 2 and 5; therefore, structure level 1 volumes might be further restricted in the future. However, VSI does not intend for ODS-5 to become the default OpenVMS file structure. The principal use of ODS-5 will be when OpenVMS is a server for other systems (such as Windows NT) that have extended file names.

The default disk structure is Files–11 ODS-2. VAX systems also support Files–11 ODS-1 from earlier operating systems to ensure compatibility among systems.

1.2.1. Files–11 On-Disk Structure Concepts

The term Files–11 On-Disk Structure, or simply ODS, refers to the logical structure given to magnetic disks; namely, a hierarchical organization of files, their data, and the directories needed to gain access to them. The file system implements Files–11 ODS-1 (on VAX systems only) and Files–11 ODS-2 (on VAX and Alpha systems) to define the disk structure and to provide access to the files located on magnetic disks.

This section describes the Files–11 ODS levels and defines related terminology.

See Section 1.2.5, ''CD–ROM Concepts'' for information about concepts and logical structures used with CD–ROMs formatted in accordance with ISO 9660.

The primary difference between Files–11 ODS-1 and Files–11 ODS-2 is that Files–11 ODS-2 incorporates control capabilities that permit added features including volume sets (described later).

The logical ordering of ODS structures is listed below in order of ascending hierarchy:

- Blocks
- Clusters
- Extents
- Files
- Volumes
- Volume Sets

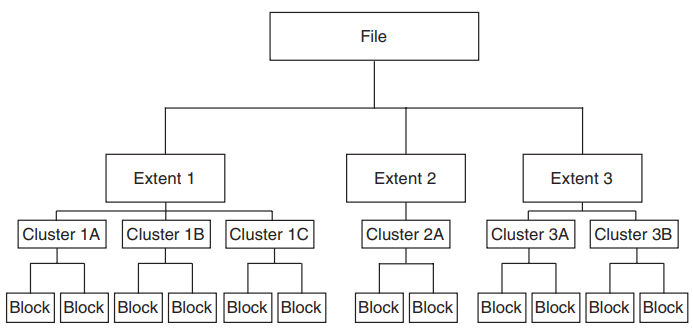

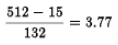

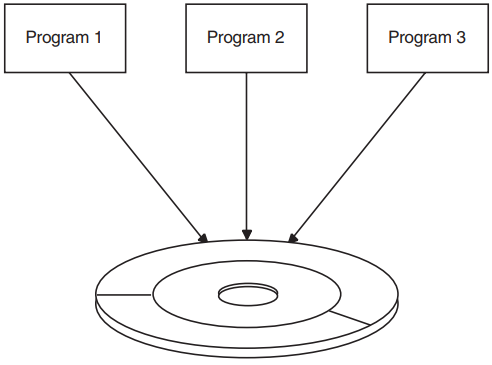

Figure 1.1, ''Files–11 On-Disk Structure Hierarchy'' shows the hierarchy of blocks, clusters, extents, and files in the Files–11 ODS.

The next higher level of Files–11 ODS is the volume (not illustrated), which is the ordered set of blocks that make up a disk. Several volumes can also be combined into a structure called a volume set. Although a volume set consists of two or more related volumes, the system treats it as a single volume.

Note

The terms disk and volume are used interchangeably in this document.

The smallest addressable logical structure on a Files–11 ODS disk is a block, comprising 512 8-bit bytes. During input/output operations, one or more blocks may be transferred as a single unit between a Files–11 ODS disk and memory.

RMS allocates disk space for new files or extended files using multiblock units called clusters. The system manager specifies the number of blocks in a cluster as part of volume initialization.

Clusters may or may not be contiguous (share a common boundary) on a disk. Cluster sizes may range from 1 to 65,535 blocks. Generally, a system manager assigns a small cluster size to a disk with a relatively small number of blocks. Relatively larger disks are assigned a larger cluster size to minimize the overhead for disk space allocation.
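Because space is handed out in whole clusters, the space a file occupies is its requested size rounded up to a multiple of the cluster size. The following Python sketch shows that rounding; the function name is hypothetical, and the sketch models only the arithmetic, not the allocation algorithm itself.

```python
def blocks_allocated(requested_blocks, cluster_size):
    """Round a request up to a whole number of clusters and return the blocks allocated."""
    if not 1 <= cluster_size <= 65535:
        raise ValueError("cluster size must be between 1 and 65,535 blocks")
    if requested_blocks < 0:
        raise ValueError("requested block count cannot be negative")
    clusters = -(-requested_blocks // cluster_size)   # ceiling division
    return clusters * cluster_size

# A 10-block request on a volume with 3-block clusters occupies 4 clusters.
print(blocks_allocated(10, 3))
```

A larger cluster size means fewer allocation units for the file system to track, at the cost of more unused blocks at the end of each file.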

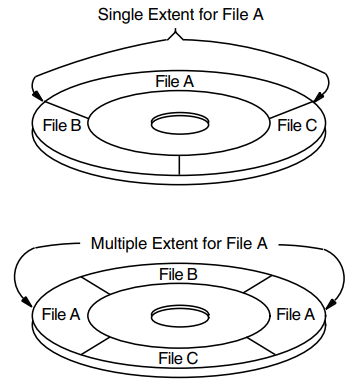

An extent is one or more adjacent clusters allocated to a file or to a portion of a file. If enough contiguous disk space is available, the entire file is allocated as a single extent. Conversely, if there is not enough contiguous disk space, the file is allocated using several extents, which may be scattered physically on the disk. Figure 1.2, ''Single and Multiple File Extents'' shows how a single file (File A) may be stored as a single extent or as multiple extents.
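One way to picture extents is as a list of (starting logical block, block count) pairs that together map a file's virtual blocks onto the disk. The Python sketch below uses that simplified model; the tuple layout, the function name, and the block numbers are assumptions for illustration and do not reflect the actual format of the mapping information in a file header.

```python
def vbn_to_lbn(extents, vbn):
    """Translate a 1-based virtual block number into a logical block number.

    extents is a list of (start_lbn, block_count) pairs in file order.
    """
    if vbn < 1:
        raise ValueError("virtual block numbers start at 1")
    offset = vbn - 1
    for start_lbn, count in extents:
        if offset < count:
            return start_lbn + offset
        offset -= count
    raise ValueError("VBN is beyond the end of the file")

single_extent = [(1000, 12)]                       # File A stored contiguously
multiple_extents = [(1000, 4), (5000, 4), (200, 4)]  # File A scattered on the disk

print(vbn_to_lbn(single_extent, 5))
print(vbn_to_lbn(multiple_extents, 5))
```

In the contiguous case, consecutive virtual blocks are adjacent on the disk; in the scattered case, reading the file in order requires moving to a different region of the disk at each extent boundary.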

With RMS, you can exercise varying degrees of control over file space allocation. At one extreme, you can specify the number of blocks to be allocated and their precise location on the volume. At the other extreme, you can allow RMS to handle all disk space allocation automatically. As a compromise, you might specify the size of the initial space allocation and have RMS determine the amount of space allocated each time the file is extended. You can also specify that unused space at the end of the file is to be deallocated from the file, making that space available to other files on the volume.

When you need a large amount of file storage space, you can combine several Files–11 ODS volumes into a volume set with file extents located on different volumes in the set. You need not specify a particular volume in the set to locate or create a file, but you may improve performance if you explicitly specify a volume for a particular allocation request.

1.2.2. Files–11 Control Files

Ten files control the structure of a Files–11 On–Disk Structure Level 2 volume. Only five of these files are used for a Files–11 On–Disk Structure Level 1 volume. Table 1.5, ''Files–11 Control Files'' identifies all ten files, which are referred to as reserved files, and indicates to which Files–11 On–Disk Structure level they pertain.

| Reserved File | File Name | Structure Level 1 | Structure Level 2 |
|---|---|---|---|
| Index file | INDEXF.SYS;1 | X | X |
| Storage bit map file | BITMAP.SYS;1 | X | X |
| Bad block file | BADBLK.SYS;1 | X | X |
| Master file directory | 000000.DIR;1 | X | X |
| Core image file | CORIMG.SYS;1 | X | X |
| Volume set list file | VOLSET.SYS;1 | | X |
| Continuation file | CONTIN.SYS;1 | | X |
| Backup log file | BACKUP.SYS;1 | | X |
| Pending bad block log file | BADLOG.SYS;1 | | X |
| Security profile | SECURITY.SYS | | X |

All the files listed in Table 1.5, ''Files–11 Control Files'' are listed in the master file directory (MFD), [000000].

1.2.2.1. Index File

Bootstrap block — The volume's bootstrap block is virtual block number 1 of the index file. If the volume is a system volume, this block contains a bootstrap program that loads the operating system into memory. If the volume is not a system volume, this block contains a program that displays the message that the volume is not the system device but a device that contains user files only.

Home block — The home block provides specific information about the volume, including default file values. The following information is included within the home block:

- The volume name
- Information to locate the remainder of the index file
- The maximum number of files that can be present on the volume at any given time
- The user identification code (UIC) of the volume owner
- Volume protection information (specifies which users can read and/or write the entire volume)

The home block identifies the disk as a Files–11 ODS volume. Initially, the home block is the second block on the volume. Files–11 ODS volumes contain several copies of the home block to ensure that accidental destruction of this information does not affect the ability to locate other files on the volume. If the current home block becomes corrupted, the system selects an alternate home block.

Alternate home block — The alternate home block is a copy of the home block. It permits the volume to be used even if the primary home block is destroyed.

Alternate index file header — The alternate index file header permits recovery of data on the volume if the primary index file header becomes damaged.

Index file bit map — The index file bit map controls the allocation of file headers and thus the number of files on the volume. The bit map contains a bit for each file header allowed on the volume. If the value of a bit for a given file header is 0, a file can be created with this file header. If the value is 1, the file header is already in use.
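The bit-per-header scheme can be sketched in a few lines of Python. The class name, the bit ordering within each byte, and the first-fit search are assumptions made for this illustration; only the meaning of the bit values (0 for available, 1 for in use) comes from the description above.

```python
class HeaderBitmap:
    """Toy model of a bit map with one bit per file header (0 = free, 1 = in use)."""

    def __init__(self, max_files):
        self.max_files = max_files
        self.bits = bytearray((max_files + 7) // 8)

    def in_use(self, n):
        return bool(self.bits[n >> 3] & (1 << (n & 7)))

    def allocate(self):
        """Claim the first free header and return its index."""
        for n in range(self.max_files):
            if not self.in_use(n):
                self.bits[n >> 3] |= 1 << (n & 7)
                return n
        raise RuntimeError("no free file headers: volume has reached its file limit")

    def release(self, n):
        """Mark a header as free again, for example when its file is deleted."""
        self.bits[n >> 3] &= ~(1 << (n & 7)) & 0xFF
```

Because the bit map has a fixed number of bits, it also caps the number of files: once every bit is 1, no further file can be created until a header is released.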

File headers — The largest part of the index file is made up of file headers. Each file on the volume has a file header, which describes such properties of the file as file ownership, creation date, and time. Each file header also contains a list of the extents that define the physical location of the file. When a file has many extents, it may be necessary to have multiple file headers for locating them. When this occurs, each header is assigned a file identifier number to associate it with the appropriate file.

When you create a file, you normally specify a name that RMS assigns to the file on a Files–11 ODS volume. RMS places the file name and file identifier associated with the newly created file in a directory that contains an entry defining the location for each file. To subsequently access the file, you specify its name. The system uses the name to define a path through the directory entry to the file identifier. In turn, the file identifier points to the file header that lists the file's extents.
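The lookup chain described above (file name, then file identifier, then file header, then extents) can be modeled with two dictionaries. Everything in this Python sketch is hypothetical: the file name, the single-integer file identifier, the owner string, and the extent values are invented for illustration, and real directory entries and file headers carry more information.

```python
# Directory: maps a file name to a file identifier.
directory = {"PAYROLL.DAT;1": 42}

# Index file: maps a file identifier to a file header that lists the extents
# as (starting block, block count) pairs.
file_headers = {
    42: {"owner": "[ACCT,SMITH]", "extents": [(1000, 4), (5000, 4)]},
}

def locate_extents(name):
    """Follow name -> file identifier -> file header -> extents."""
    file_id = directory[name]        # step 1: directory lookup
    header = file_headers[file_id]   # step 2: find the header in the index file
    return header["extents"]         # step 3: the header lists the file's extents

print(locate_extents("PAYROLL.DAT;1"))
```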

1.2.2.2. Storage Bit Map File

The storage bit map file controls the available space on a volume; this file is listed in the master file directory as BITMAP.SYS;1. It contains a storage control block, which consists of summary information intended to optimize the Files–11 space allocation, and the bit map itself, which lists the availability of individual blocks.

1.2.2.3. Bad Block File

The bad block file, which is listed in the master file directory as BADBLK.SYS;1, contains all the bad blocks on the volume. The system detects bad disk blocks dynamically and prevents their reuse once the files to which they are allocated have been deleted.

1.2.2.4. Master File Directory

Note

Wildcard directory searches in the MFD always start after 000000.DIR to prevent recursive looping. Therefore, you should avoid creating any directories in the MFD that lexically precede "000000".

When the Backup utility (BACKUP) creates sequential disk save sets, it stores the save set file in the MFD.

For an explanation of user file directories and file specifications, see the VSI OpenVMS User's Manual.

1.2.2.5. Core Image File

The core image file is listed in the MFD as CORIMG.SYS;1. It is not supported by the operating system.

1.2.2.6. Volume Set List File

The volume set list file is listed in the MFD as VOLSET.SYS;1. This file is used only on relative volume 1 of a volume set. The file contains a list of the labels of all the volumes in the set and the name of the volume set.

1.2.2.7. Continuation File

The continuation file is listed in the MFD as CONTIN.SYS;1. This file is used as the extension file identifier when a file crosses from one volume to another volume of a loosely coupled volume set. This file is used for all but the first volume of a sequential disk save set.

1.2.2.8. Backup Log File

The backup log file is listed in the MFD as BACKUP.SYS;1. This file is reserved for future use.

1.2.2.9. Pending Bad Block Log File

The pending bad block log file is listed in the MFD as BADLOG.SYS;1. This file contains a list of suspected bad blocks on the volume that are not listed in the bad block file.

1.2.2.10. Security Profiles File (VAX Only)

This file contains the volume security profile and is managed with the SET/SHOW security commands.

1.2.3. Files–11 On–Disk Structure Level 1 Versus Structure Level 2

For reasons of performance and reliability, Files–11 On–Disk structure level 2, a compatible superset of structure level 1, is the preferred disk structure on an OpenVMS system.

At volume initialization time (see the INITIALIZE command in the VSI OpenVMS DCL Dictionary), structure level 2 is the default.

On VAX systems, structure level 1 should be specified only for volumes that must be transportable to RSX–11M, RSX–11D, RSX–11M–PLUS, and IAS systems, as these systems support only that structure level. Additionally, you may be required to handle structure level 1 volumes transported to OpenVMS systems from one of the previously mentioned systems.

Structure level 1 volumes have the following limitations:

- Directories: No hierarchies of directories and subdirectories, and no ordering of directory entries (that is, the file names) in any way. RSX–11M, RSX–11D, RSX–11M–PLUS, and IAS systems do not support subdirectories or alphabetically ordered directory entries.
- Disk quotas: Not supported.
- Multivolume files and volume sets: Not supported.
- Placement control: Not supported.
- Caches: No caching of file header blocks, file identification slots, or extent entries.
- System disk: Cannot be a structure level 1 volume.
- OpenVMS Cluster access: Local access only; files cannot be shared across an OpenVMS Cluster.
- Clustered allocation: Not supported.
- Backup home block: Not supported.
- Protection code E: Means extend for the RSX–11M operating system but is ignored by OpenVMS systems.
- File versions: Limited to 32,767; version limits are not supported.
- Enhanced protection features (for example, access control lists): Not supported.
- Extended File Specifications: Not supported.
- RMS journaling for OpenVMS: Not supported.
- RMS execution statistics monitoring: Not supported.

Note

In this document, discussions that refer to OpenVMS Cluster environments apply to both VAXcluster systems that include only VAX nodes and OpenVMS Cluster systems that include at least one Alpha node unless indicated otherwise.

1.2.4. Physical Structures

For performance reasons, you should be aware of certain physical aspects of a disk.

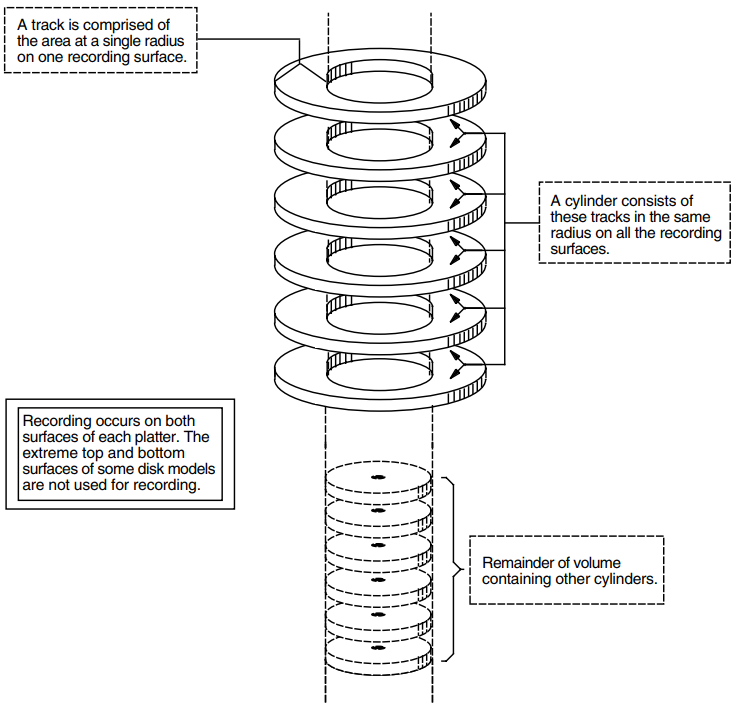

A disk (or volume) consists of one or more platters that spin at very high, constant speeds and usually contain data on both surfaces (upper and lower). A disk pack is made up of two or more platters having a common center.

Data is located at different distances from the center of the platter and is stored or retrieved using read/write heads that move to access data at various radii from the platter's center. The time required to position the read/write heads over the selected radius (referred to as a track) is called seek time. Each track is divided into 512-byte structures called sectors. The time required to bring the selected sector (logical block) under the read/write heads at the selected radius (track) is called the rotational latency. Because seek time usually exceeds the rotational latency by a factor of 2 to 4, related blocks (sectors) should be located at or near the same track to obtain the best performance when transferring data between the disk and RMS-maintained buffers in memory. Typically, related blocks of data might include the contents of a file or several files that are accessed together by a performance-critical application.

Another physical disk structure is called a cylinder. A cylinder consists of all tracks at the same radius on all recording surfaces of a disk.

Figure 1.3, ''Tracks and Cylinders'' illustrates the relationship between tracks and cylinders.

Because all blocks in a cylinder can be accessed without moving the disk's read and write heads, it is generally advantageous to keep related blocks in the same cylinder. For this reason, when choosing a cluster size for a large-capacity disk, a system manager should consider one that divides evenly into the cylinder size.
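A cluster size "divides evenly" into the cylinder size when the number of blocks per cylinder is an exact multiple of it, so that no cluster straddles a cylinder boundary. The following Python sketch lists the candidate cluster sizes for a given cylinder size. The function name is hypothetical, and the geometry in the usage lines (51 sectors per track, 14 tracks per cylinder) is an invented example, not the geometry of any particular drive.

```python
def cluster_sizes_for_cylinder(blocks_per_cylinder, max_cluster=65535):
    """Return every cluster size (in blocks) that divides the cylinder size evenly."""
    limit = min(blocks_per_cylinder, max_cluster)
    return [n for n in range(1, limit + 1) if blocks_per_cylinder % n == 0]

# Hypothetical geometry: 51 sectors per track and 14 tracks per cylinder.
blocks_per_cylinder = 51 * 14
print(cluster_sizes_for_cylinder(blocks_per_cylinder))
```

A system manager would then pick from this list, balancing allocation overhead against the space wasted in partially filled clusters.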

1.2.5. CD–ROM Concepts

This section describes software support for accessing CD–ROM media in compliance with the ISO 9660 standard. Compact Disc Read Only Memory (CD–ROM) discs and CD–ROM readers used with computers are very similar to the CD–ROMs and CD–ROM readers used for audio applications and may incorporate the same hardware. The major difference is that CD–ROM disc readers used with computers have a digital interface that incorporates circuitry which provides error detection and correction logic to improve the accuracy of the disc data.

CD–ROMs provide the following advantages when used to store data:

Direct access of data allowed.

Typically less expensive than other direct-access media.

Large storage capability. Currently, you can store approximately 650 megabytes (1.27 million blocks) of data on a CD–ROM.

Easier to store and handle off line.

1.2.5.1. CD–ROM On-Disc Formats

Files–11 ODS-2—OpenVMS On Disk Structure, Level 2

ISO 9660—A volume and file structure standard for information interchange on CD–ROMs

High Sierra—Working paper of the CD–ROM Advisory Committee

1.2.5.2. Volume Structure

CD–ROM media is divided into logical sectors that are assigned a unique logical sector number. Logical sectors are the smallest addressable units of a CD–ROM. Each logical sector consists of one or more consecutively ascending physical sectors as defined by the relevant recording standard. Logical sectors are numbered in ascending order. The value 0 is assigned to the logical sector with the lowest physical address containing recorded data. Each logical sector includes a data field made up of at least 2048 bytes—but, in all cases, the number must be a power of 2.

ISO 9660-formatted CD–ROM volumes include a system area and a data area. The reserved system area includes logical sectors 0 through 15. The data area includes the remaining logical sectors and is called volume space. Volume space is organized into logical blocks that are numbered in consecutively ascending logical block number order.

Logical blocks are made up of at least 512 bytes—but, in all cases, the number of bytes must be a power of 2. However, no logical block can be larger than a logical sector.
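The size rules for logical sectors and logical blocks can be expressed as a short check. The following is a minimal sketch in Python; the function names are hypothetical and are not part of any OpenVMS or ISO 9660 interface.

```python
def is_power_of_two(n: int) -> bool:
    """Return True when n is a positive power of 2."""
    return n > 0 and (n & (n - 1)) == 0


def sizes_are_valid(logical_sector_size: int, logical_block_size: int) -> bool:
    """Check the size rules stated in the text (hypothetical helper).

    - a logical sector holds at least 2048 bytes and is a power of 2
    - a logical block holds at least 512 bytes and is a power of 2
    - a logical block is never larger than a logical sector
    """
    return (
        is_power_of_two(logical_sector_size)
        and logical_sector_size >= 2048
        and is_power_of_two(logical_block_size)
        and 512 <= logical_block_size <= logical_sector_size
    )
```

For example, a 2048-byte logical sector with 512-byte logical blocks passes the check, while a 4096-byte logical block on a 2048-byte logical sector does not.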

The data area may include one or more Volume Descriptors, File Descriptors, Directory Descriptors, and Path Tables. These entities collectively describe the volume and file structure of an ISO 9660-formatted CD–ROM. The Ancillary Control Process (ACP) that manages I/O access to the CD–ROM views the volume and file structure as an integral part of the base OpenVMS file system.

1.2.5.3. Files–11 C/D – ACPs

The ISO 9660 requirement for handling blocks that exceed 512 bytes

Partial extents

Interleaved data

Undefined record formats

Logical Blocks Greater Than 512 Bytes

OpenVMS device drivers are designed to handle files made up of 512-byte blocks that are uniquely addressable. The ISO 9660 standard supports logical blocks that are larger than 512 bytes. The Files-11 C/D ACP solves this incompatibility by converting ISO 9660 logical-block-size requests into OpenVMS-block-size requests at the file system level.
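The arithmetic behind such a conversion can be illustrated as follows. This is only a sketch of the size relationship, assuming zero-based block numbering and a logical block size that is a multiple of 512 bytes; it does not describe how the ACP is actually implemented, and the function name is hypothetical.

```python
VMS_BLOCK_SIZE = 512  # size of an OpenVMS disk block, per the text


def vms_blocks_for_logical_block(logical_block_number: int,
                                 logical_block_size: int) -> range:
    """Return the 512-byte block numbers covered by one ISO 9660 logical block.

    Assumption: both numbering schemes start at 0 at the same byte offset.
    """
    if logical_block_size < VMS_BLOCK_SIZE or logical_block_size % VMS_BLOCK_SIZE:
        raise ValueError("logical block size must be a multiple of 512 bytes")
    blocks_per_logical_block = logical_block_size // VMS_BLOCK_SIZE
    first = logical_block_number * blocks_per_logical_block
    return range(first, first + blocks_per_logical_block)
```

Under these assumptions, logical block 3 on a volume with 2048-byte logical blocks corresponds to 512-byte blocks 12 through 15.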

Partial Data Blocks

Any logical block in an ISO 9660 file extent may be partially filled with data. RMS assumes that all file blocks are filled with data, with the possible exception of the final block. When RMS finds a data block that is not filled, it attempts to start end-of-file processing. To prevent RMS from misinterpreting a partially-filled block as the final file block, the Files-11 C/D ACP uses I/O operations that combine adjacent ISO 9660 logical blocks into full 512-byte logical blocks.

Interleaving

Interleaving is used to gain efficiency in accessing information by storing sequential information on separate tracks. The OpenVMS file system is not natively compatible with interleaving, but ISO 9660 file extents may be interleaved. That is, ISO 9660 extents may consist of logical block groupings that are separated by interleaving gaps. In order to make the OpenVMS file system compatible with interleaving, the Files–11 C/D ACP treats each of the interleaved logical block groups as an extent.

Undefined Record Format

ISO 9660 CD–ROMs may be mastered without a specified record format because the ISO 9660 media can be mastered from platforms that do not support the semantics of files containing predefined record formats. See the VSI OpenVMS System Manager's Manual for details about mounting media with undefined record formats.

1.2.5.4. Using DIGITAL System Identifiers on CD–ROM

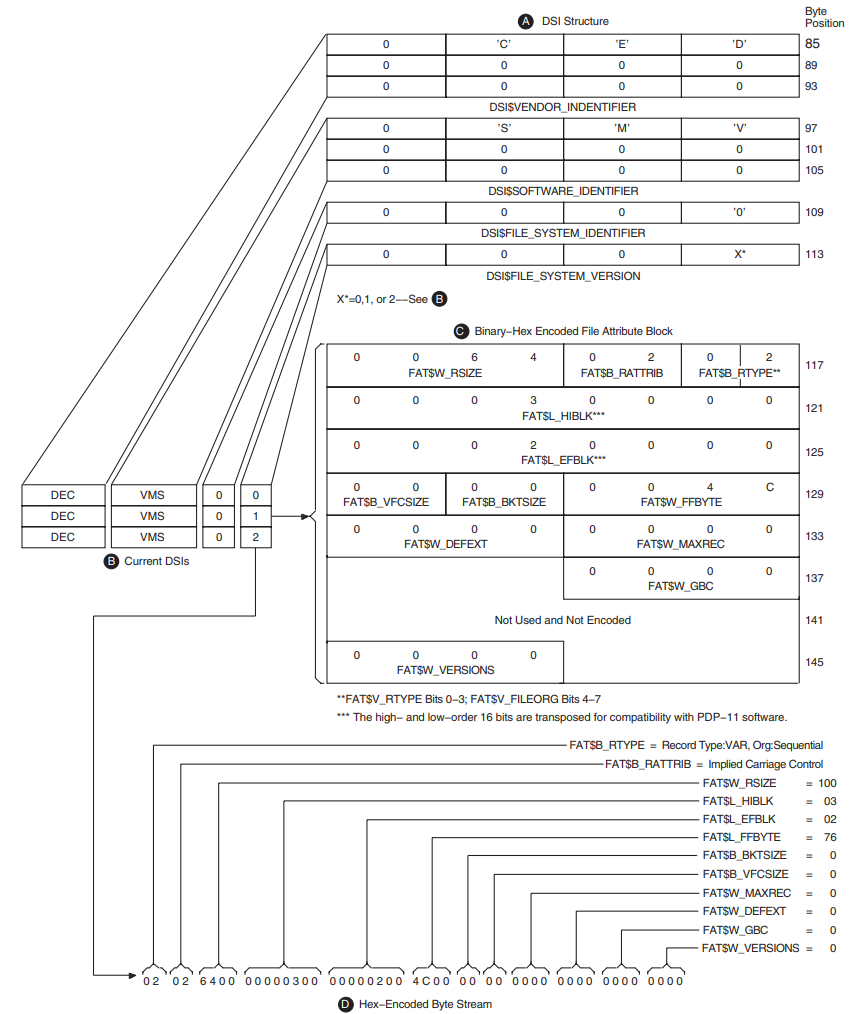

When an ISO 9660-formatted CD–ROM contains information written according to VSI specifications, affected records may include a DIGITAL System Identifier (DSI) in the associated extended attribute records (XAR). This section describes how DIGITAL System Identifiers are recorded on ISO 9660 media and how a DSI is used to encode OpenVMS formatted information on the media. Figure 1.4, ''DSI and FAT Structures in an XAR'' illustrates the DSI and FAT structures in an XAR.

Record format in byte position 79

Record attributes in byte position 80

Record length in byte positions 81 to 84

If the DSI file identifier field (DSI$FILE_SYSTEM_IDENTIFIER) contains a 0 and the DSI file version field (DSI$FILE_SYSTEM_VERSION) contains either a 1 or a 2, use the area immediately following the DSI to obtain OpenVMS file and record information. (See (B) in Figure 1.4, ''DSI and FAT Structures in an XAR''.)

If the DSI file version field contains a 1, the area immediately following the DSI contains a binary, hex-encoded, file attributes block that provides file and record information. (See (C), Figure 1.4, ''DSI and FAT Structures in an XAR''.)

If the DSI file version field contains a 2, the area immediately following the DSI contains an ASCII, hex-encoded, byte stream that provides file and record information. (See (D) in Figure 1.4, ''DSI and FAT Structures in an XAR''.)

When the DSI file version field contains a 0, the area immediately following the DSI will not contain file and record information. Nevertheless, if the media is mounted for DSI protection, the OpenVMS UIC codes and permission codes for system, owner, group, and world (SOGW) users will be enforced.
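The rules for interpreting the area that follows the DSI can be summarized as a small decision function. This is a minimal sketch that assumes the two DSI fields have already been read as integers; the function name and the returned strings are hypothetical labels, not OpenVMS definitions.

```python
def dsi_attribute_encoding(file_system_identifier: int,
                           file_system_version: int) -> str:
    """Classify the area after the DSI from the two DSI fields.

    Arguments correspond to DSI$FILE_SYSTEM_IDENTIFIER and
    DSI$FILE_SYSTEM_VERSION; the return values are illustrative labels.
    """
    if file_system_version == 0:
        # No file and record information follows the DSI.
        return "none"
    if file_system_identifier == 0 and file_system_version == 1:
        return "binary file attributes block"
    if file_system_identifier == 0 and file_system_version == 2:
        return "ASCII hex-encoded byte stream"
    # Combinations that the text does not describe.
    return "not described"
```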

1.3. Magnetic Tape Concepts

This section describes magnetic tape concepts. Data records are organized on magnetic tape in the order in which they are entered; that is, sequentially.

Characters of data on magnetic tape are measured in bits per inch (bpi). This measurement is called density. A 1600-bpi tape can accommodate 1600 characters of data in 1 inch of recording space. A tape has 9 parallel tracks containing 8 bits and 1 parity bit.

A parity bit is used to check for data integrity using a scheme where each character contains an odd number of marked bits, regardless of its data bit configuration. For example, the alphabetic character (A) has an ASCII bit configuration of 100 0001, where two bits, the most significant and the least significant, are marked. With an odd-parity checking scheme, a marked eighth bit is added to the character so that it appears as 1100 0001. When this character is transmitted to a receiving station, the receiver logic checks to make sure that the character still has an odd number of marked bits. If media distortion corrupts the data resulting in an even number of marked bits, the receiving station asks the sending station to retransmit the data.
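The odd-parity scheme can be sketched in a few lines of Python. The function names are hypothetical; the sketch places the parity bit in the eighth (most significant) position, as in the example of the character A.

```python
def add_odd_parity(seven_bit_code: int) -> int:
    """Return the 8-bit value whose total number of marked bits is odd."""
    if not 0 <= seven_bit_code < 128:
        raise ValueError("expected a 7-bit character code")
    marked_bits = bin(seven_bit_code).count("1")
    # Mark the parity bit only when the data bits hold an even count.
    parity_bit = 1 if marked_bits % 2 == 0 else 0
    return (parity_bit << 7) | seven_bit_code


def has_odd_parity(byte: int) -> bool:
    """Receiver-side check: the byte is intact if its marked-bit count is odd."""
    return bin(byte).count("1") % 2 == 1
```

Here `add_odd_parity(ord("A"))` yields the bit pattern 1100 0001, and flipping any single bit of that value makes `has_odd_parity` return `False`.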

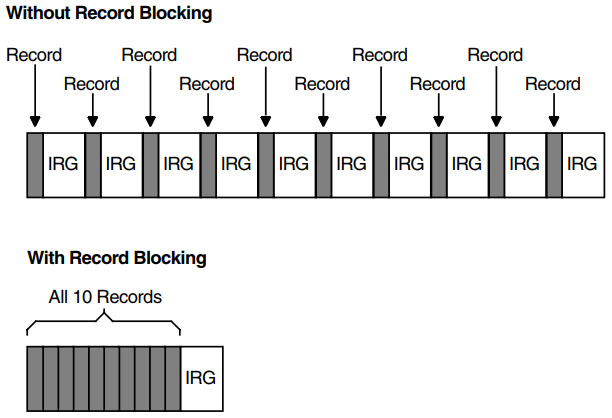

Even though a tape may have a density of 1600 bpi, there are not always 1600 characters on every inch of magnetic tape because of the interrecord gap (IRG). The IRG is an interval of blank space between data records that is created automatically when records are written to the tape. After a record operation, this breakpoint allows the tape unit to decelerate, stop if necessary, and then resume working speed before the next record operation.

Each IRG is approximately 0.6 inch in length. Writing an 80-character record at 1600 bpi requires 0.05 inch of space. The IRG, therefore, requires twelve times as much space as the data, which wastes storage space.

RMS can reduce the size of this wasted space by using a record blocking technique that groups individual records into a block and places the IRG after the block rather than after each record. (A block on disk is different from a block on tape. On disk, a block is fixed at 512 bytes; on tape, you determine the size of a block.) However, record blocking requires more buffer space for your program, resulting in an increased need for memory. The greater the number of records in a block, the greater the buffer size requirements. You must determine the point at which the benefits of record blocking cease, based on the configuration of your computer system.

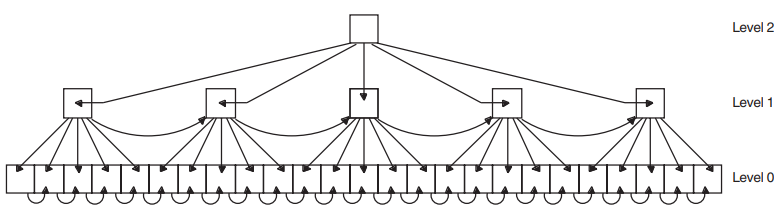

Figure 1.5, ''Interrecord Gaps'' shows how space can be saved by record blocking. Assume that a 1600-bpi tape contains 10 records not grouped into record blocks. Each record is 160 characters long (0.1 inch at 1600 bpi) with a 0.6-inch IRG after each record; this uses 7 inches of tape. Placing the same 10 records into 1 record block uses only 1.6 inches of tape (1 inch for the data records and 0.6 inch for the IRG).
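The tape-length arithmetic in this example can be reproduced with a short calculation. The sketch below is illustrative only: the function name and parameters are hypothetical, and it assumes one 0.6-inch IRG after every block.

```python
import math


def tape_inches(record_count: int, record_chars: int,
                records_per_block: int = 1,
                density_bpi: int = 1600,
                irg_inches: float = 0.6) -> float:
    """Estimate the tape length used by records plus interrecord gaps."""
    data_inches = record_count * record_chars / density_bpi
    block_count = math.ceil(record_count / records_per_block)
    return data_inches + block_count * irg_inches


unblocked = tape_inches(10, 160)                      # one IRG per record
blocked = tape_inches(10, 160, records_per_block=10)  # one IRG for the block
```

With the figures from the text, `unblocked` comes to about 7 inches and `blocked` to about 1.6 inches.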

Record blocking also increases the efficiency of the flow of data into the computer. For example, 10 unblocked records require 10 separate physical transfers, while 10 records placed into a single block require only 1 physical transfer. Moreover, a shorter length of tape is traversed for the same amount of data, thereby reducing operating time.

Like disk data, magnetic tape data is organized into files. When you create a file on tape, RMS writes a set of header labels on the tape immediately preceding the data blocks. These labels contain information such as the user-supplied file name, creation date, and expiration date. Additional labels, called trailer labels, are also written following the file. Trailer labels indicate whether or not a file extends beyond a volume boundary.

To access a file on tape by the file name, the file system searches the tape for the header label set that contains the specified file name.

When the data blocks of a file or related files do not physically fit on one volume (a reel of tape or a tape cartridge), the file is continued on another volume, creating a multivolume tape file that contains a volume set. When a program accesses a volume set, it searches all volumes in the set. For additional information about magnetic tapes, see the VSI OpenVMS System Manager's Manual.

1.3.1. ANSI-Labeled Magnetic Tape

This section describes ANSI magnetic tape labels, data, and record formats supported by OpenVMS operating systems. Note, however, that OpenVMS operating systems also support the ISO standard. For a complete description of these labels, please refer to the ANSI X3.27–1978 or ISO 1001–1979 magnetic tape standard.

1.3.1.1. Logical Format of ANSI Magnetic Tape Volumes

The format of ANSI magnetic tape volumes is based on Level 3 of the ANSI standard for magnetic tape labels and file structure for information interchange. This standard specifies the format, content, and sequence of volume labels, file labels, and file structures. According to this standard, volumes are written and read on 9-track magnetic tape drives only. The contents of labels must conform to prescribed data and record formats. All labels must consist of ASCII "a" characters.

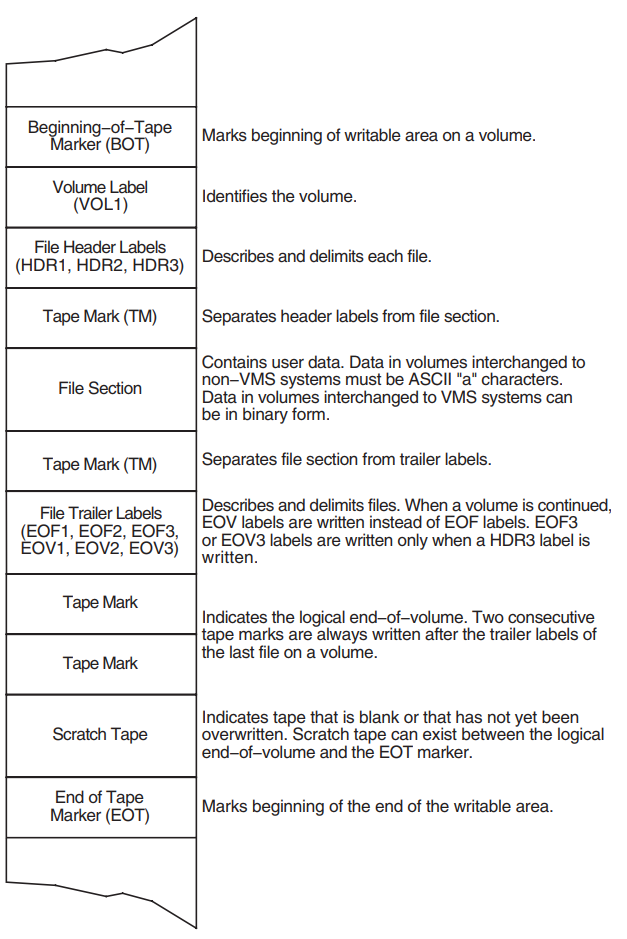

The ANSI magnetic tape format allows you to write binary data in the file sections (see Figure 1.6, ''Basic Layout of an ANSI Magnetic Tape Volume'') of files. However, if you plan to use such files for information interchange across systems, make sure that the recipient system can read the binary data.

1.3.1.2. RMS Magnetic Tape Ancillary Control Process (MTAACP)

The RMS magnetic tape ancillary control process (MTAACP) is the internal operating system software process that interprets the logical format of ANSI magnetic tape volumes. Transparent to your process, the MTAACP process reads, writes, and interprets ANSI labels before passing this information to RMS and $QIO system services. These services, in turn, read, write, and interpret the record format of the data written in the file section.

1.3.1.3. Basic Components of the ANSI Magnetic Tape Format

Beginning-of-tape (BOT) and end-of-tape (EOT) markers

Tape marks

File sections

Volume, header, and trailer labels

Figure 1.6, ''Basic Layout of an ANSI Magnetic Tape Volume'' displays the arrangement and function of these components.

Beginning-of-Tape and End-of-Tape Markers

Every volume has beginning-of-tape (BOT) and end-of-tape (EOT) markers. These markers are pieces of photoreflective tape that delimit the writable area on a volume. ANSI magnetic tape standards require that a minimum of 14 feet to a maximum of 18 feet of magnetic tape precede the BOT marker; a minimum of 25 feet to a maximum of 30 feet of magnetic tape, of which 10 feet must be writable, must follow the EOT marker. The EOT marker indicates the start of the end of the writable area of the tape, rather than the physical end of the tape. Therefore, data and labels can be written after the EOT marker.

Tape Marks

Tape marks separate the file labels from the file sections, separate one file from another, and denote the logical end-of-volume. On the basis of the number and relative placement of tape marks written on a volume, OpenVMS systems determine whether a tape mark delimits a label, a file, or a volume.

Tape marks are written both singly and in pairs. Single tape marks separate either a file section from the header and trailer labels or one file from another. When written after a set of header labels, a single tape mark signals the beginning of a file section. When written before a set of trailer labels, a single tape mark indicates the end of a file section. When written after a trailer label set, a single tape mark separates one file from another.

Double tape marks indicate that either an empty file section exists or the logical end-of-volume has been reached. OpenVMS systems create an empty file when a volume is initialized.

Labels

Labels identify, describe, and control access to volumes and their files. The ANSI magnetic tape format supports volume, header, and trailer labels. The volume labels are the first labels written on a volume. They identify the volume and the volume owner and specify access protection. Header and trailer labels are sets of labels that identify, describe, and delimit files. Header labels precede files; trailer labels follow files.

Table 1.6, ''Labels and Components Supported by OpenVMS Systems'' lists the labels supported by OpenVMS operating systems. All other ANSI magnetic tape labels are ignored on input.

Although each type of label uses a different format to organize its contents, all labels conforming to Version 3 of the ANSI magnetic tape standard must consist of ASCII "a" characters. Some labels contain reserved fields designed for future system use or future ANSI magnetic tape standardization. Reserved fields also must consist of ASCII "a" characters; however, OpenVMS systems ignore these characters on input.

| Symbol | Meaning |
|---|---|
| BOT | Beginning-of-tape marker |
| EOF1 | First end-of-file label |
| EOF2 | Second end-of-file label |
| EOF3 | Third end-of-file label |
| EOF4 | Fourth end-of-file label |
| EOT | End-of-tape marker label |
| EOV1 | First end-of-volume label |
| EOV2 | Second end-of-volume label |
| EOV3 | Third end-of-volume label |
| EOV4 | Fourth end-of-volume label |
| HDR1 | First header label |
| HDR2 | Second header label |
| HDR3 | Third header label |
| HDR4 | Fourth header label |
| VOL1 | First volume label |
| VOL2 | Second volume label |
| TM | Tape mark |
| TM TM | Double tape mark indicates an empty file section or the logical end-of-volume |

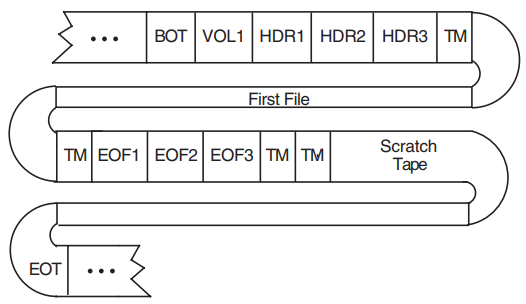

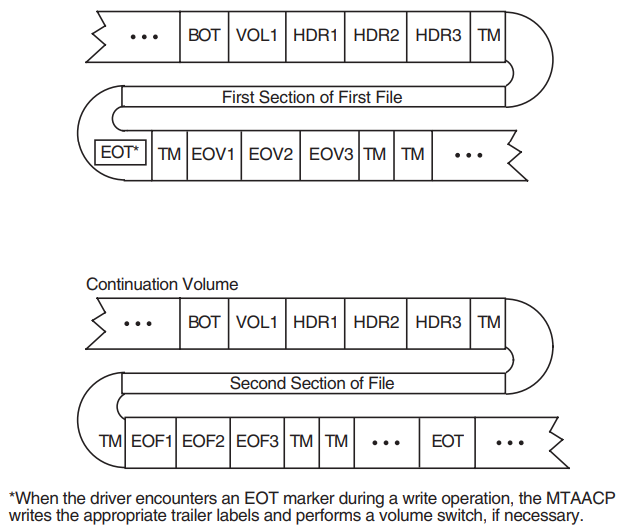

1.3.1.4. Volume and File Configurations

A single file residing on a single volume

A single file requiring multiple volumes

Multiple files residing on a single volume

Multiple files requiring multiple volumes

The file sequence number field allows as many as 9999 file sections for one file. In effect, the file length is unlimited.

Only one file section of a given file is written on a volume.

When multiple sections exist for one file, each file section is written to a separate volume in the volume set. The file section numbers of each section are written consecutively in ascending order (section n+1 is written immediately following section n); file sections of other files are not interspersed.

Each of the file and volume configurations is illustrated in the sections that follow.

Single File Residing on a Single Tape Volume

A single file on a single tape volume configuration consists of one file on one volume. The components of the ANSI magnetic tape format for this configuration are illustrated in Figure 1.7, ''Single File on a Single Volume''.

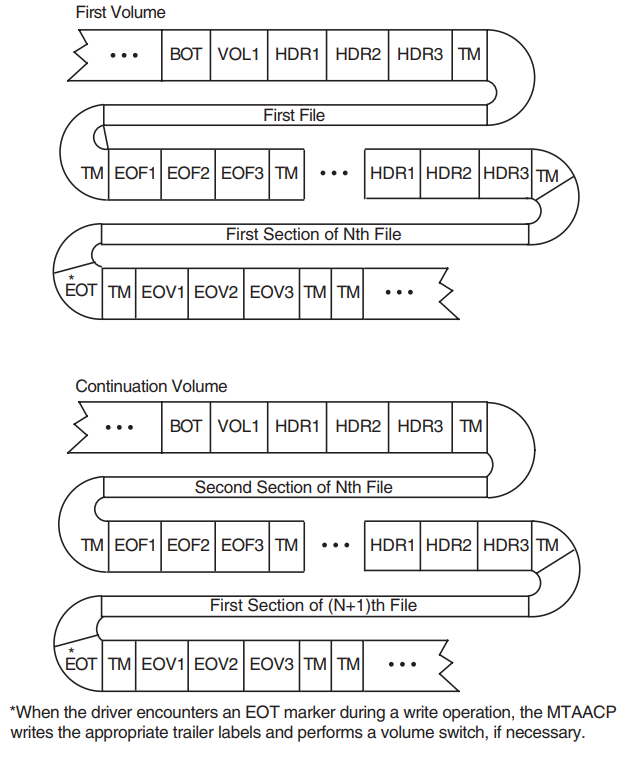

Single File Requiring Multiple Tape Volumes

A single-file/multivolume configuration consists of one file that spans two or more volumes in a volume set. Figure 1.8, ''Single File on Multiple Tape Volumes'' illustrates the components of the ANSI magnetic tape format for this configuration.

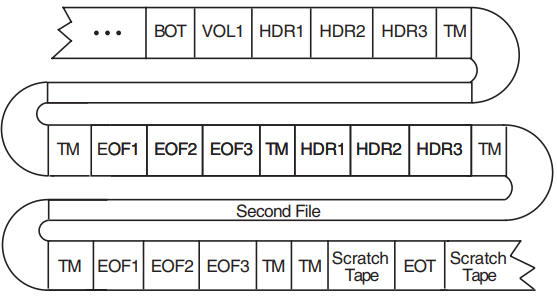

Multiple Files on a Single Tape Volume

A multifile/single-volume configuration consists of two or more files on a single volume. It is the most common file and volume configuration. Figure 1.9, ''Multifile/Single-Volume Configuration'' illustrates the components of the ANSI magnetic tape format for this configuration.

Multifile/Multivolume Configuration

A multifile/multivolume configuration consists of two or more files that span two or more volumes in the same volume set. Figure 1.10, ''Multifile/Multivolume Configuration'' illustrates the components of the ANSI magnetic tape format for this configuration.

1.3.1.5. Volume Labels

The sections that follow describe the first volume (VOL1) and second volume (VOL2) labels.

1.3.1.5.1. VOL1 Label

The 80-character volume label (VOL1) is the first label written on an ANSI magnetic tape volume. It defines the label type, name, and owner of the volume. Although there are many fields in a VOL1 label, this section describes only those fields that you can access or that can inhibit access to a volume and its files on OpenVMS systems.

Volume Identifier Field

The volume identifier field is a 6-character field that contains the name of the volume. You specify the volume identifier in the command string when you initialize or mount a volume (see the VSI OpenVMS System Manager's Manual). The volume identifier consists of six ASCII "a" characters. Lowercase characters are not in the "a" set, but if you specify them, OpenVMS systems change them to uppercase. If you specify fewer than six characters, OpenVMS systems pad the field by right-justifying the field with the ASCII space character.

Accessibility Field

The accessibility field is a one-character field that allows an installation to control access to a volume. See the VSI OpenVMS System Manager's Manual for a description of accessibility support.

Implementation Identifier Field

The implementation identifier field contains the identifier of the implementation that creates the magnetic tape. This field controls how certain implementation-specific fields and volume labels are interpreted. The magnetic tape file system's implementation identifier is DECFILE11 A. This field contains the implementation identifier only if a second volume (VOL2) label is written on the magnetic tape. Otherwise, it is filled with ASCII space characters.

Owner Identifier Field

The owner identifier field is available to the user. This field does not affect the checking of a user's access to a volume, except as noted in the VSI OpenVMS System Manager's Manual.

1.3.1.5.2. VOL2 Label

In addition to the first volume (VOL1) label described above, OpenVMS systems provide a second volume (VOL2) label, the volume-owner field.

The volume-owner field contains the OpenVMS protection information that has been written on the magnetic tape. A second volume label is written only if an OpenVMS protection scheme was specified on either the MOUNT or INITIALIZE command.

The volume-owner field also contains a value that incorporates the user identification code (UIC) with the OpenVMS protection code specified for a volume. By default, OpenVMS systems do not write a UIC to this field, thus allowing all users READ and WRITE access. Note, however, that EXECUTE and DELETE access are not applicable to magnetic tape volumes. Also note that, regardless of the protection code that you specify, both system users and the volume owner always have READ and WRITE access to a volume. The contents of the volume-owner field depend on the OpenVMS protection code that you specify.

1.3.1.6. Header Labels

OpenVMS operating systems support four file-header labels: HDR1, HDR2, HDR3, and HDR4. The HDR3 and HDR4 labels are optional. The following sections describe and illustrate each file-header label.

1.3.1.6.1. HDR1 Label

Every file on a volume has a HDR1 label, which identifies and describes the file by supplying the OpenVMS MTAACP with the following information:

File identifier

File-set identifier

File section number

File sequence number

Generation and generation version numbers

File creation and expiration dates

Accessibility code

Implementation identifier

File Identifier Field

filename.type;version

OpenVMS file specifications are a subset of ANSI magnetic tape file names. However, ANSI magnetic tape file names are valid only for magnetic tape volumes; OpenVMS file specifications are valid for disk and tape volumes. Both types of file specifiers are compatible with compatibility mode.

An OpenVMS file specification consists of a file name, a file type, and an optional version number. Valid file names contain a maximum of 39 characters. Valid file types consist of a period followed by a maximum of 39 characters. The semicolon separates the version number from the file type.

Except for wildcard characters, only the characters A through Z, 0 through 9, and the special characters ampersand (&), hyphen (-), underscore (_), and dollar sign ($) are valid for OpenVMS file names and types. The period and semicolon are the only other valid special characters, and they are always separators.

ANSI magnetic tape file names do not have a file type field. An ANSI magnetic tape file name consists of a 17-character name string, a period, a semicolon, and an optional version number. You can specify a name string consisting of a maximum of 17 ASCII "a" characters, but you must enclose the string in quotation marks (as in, for example, "file name"). When you specify fewer than 17 characters, the string is padded on the right with spaces to the 17-character maximum size. If you specify a file name that has trailing spaces, OpenVMS systems truncate them when the file name is returned. In addition, the space-padded field prevents you from specifying a unique file name that consists of spaces.

Although you can specify longer file names (up to 79 characters), only the first 17 characters of the file name will be used in interchange.

The quotation mark character requires special treatment because it is both the file name delimiter and a valid ASCII "a" character that can itself be embedded in the name string. You must specify two quotation marks for each one that you want the operating system to interpret. The additional quotation mark informs the operating system that one of the quotation marks is part of the name string, rather than a delimiter.

Embedded spaces also are valid characters, but embedded tabs are not. Lowercase characters are not in the ASCII "a" character set, but if you specify them, OpenVMS systems convert them to uppercase characters.

If you do not specify a file type or version number on input, OpenVMS systems supply a period (the default file type) and a semicolon (the default version number). However, the period and semicolon will not be written to this field on the tape.

Although the operating system considers version numbers for ANSI magnetic tape file names and OpenVMS file names to be part of the file name specification, the version number of a file is not written to the file identifier field but is mapped to the generation number and generation version-number fields as described in the section called “Generation Number and Generation Version-Number Fields”.

| Input | Output to User Process | Output to HDR1 Label |
|---|---|---|
| "AB2&D""FgHI*k4""#-M";2 | "AB2&D""FGHI*K4""#-M";2 | AB2&D"FGHI*K4"#-M |
| "##########" | "".; | ################# |
| """"""""""""""""""""""""""""; | """""""""""""""""""""""""""".; | """""""""""""#### |
| "DWDEVOP.DAT" | DWDEVOP.DAT; | DWDEVOP.DAT###### |
| "VMS_LONG_FILENAME.LONG_FILETYPE" | VMS_LONG_FILENAME.LONG_FILETYPE | VMS_LONG_FILENAME |
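The quoting, case-conversion, and padding rules for the name string can be sketched as follows. This is a hypothetical helper, not an OpenVMS routine: it handles only the name string (not the version number) and assumes that names longer than 17 characters are simply cut to the first 17.

```python
HDR1_NAME_LENGTH = 17  # width of the file identifier name string, per the text


def hdr1_file_identifier(name_string: str) -> str:
    """Return the 17-character name field for a file name string."""
    if len(name_string) >= 2 and name_string[0] == '"' and name_string[-1] == '"':
        # Drop the delimiting quotation marks, then turn each doubled
        # quotation mark into the single embedded character it represents.
        name_string = name_string[1:-1].replace('""', '"')
    # Lowercase characters are not in the "a" set, so convert to uppercase.
    name_string = name_string.upper()
    # Keep the first 17 characters and pad on the right with spaces.
    return name_string[:HDR1_NAME_LENGTH].ljust(HDR1_NAME_LENGTH)
```

For instance, `hdr1_file_identifier('"file name"')` returns `FILE NAME` followed by eight spaces.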

File-Set Identifier Field

The 6-character file-set identifier field denotes all files that belong to the same volume set. The file-set identifier for any file within a given volume set should always be the same as the file-set identifier of the first file on the first volume that you mount. The file-set identifier is the same as the volume identifier of the first volume that you mount.

File Section Number and File Sequence Number Fields

The file section number is a 4-character field that specifies the number of the file section.

The file sequence number is a 4-character field that specifies the number of the file in a file set.

Generation Number and Generation Version-Number Fields

The generation number (a decimal number from 0001 to 9999) and generation version-number (a 2-digit decimal number) fields store the file version number specified on input and written by the system on output. The operating system does not increment the version number of a file, even when the version of the specified file already exists on the volume. Therefore, if the file that you specify has the same file name and version number as an existing file, you will have at least two files with the same version number on the same volume set.

On input, OpenVMS systems compute the version number by using this calculation:

version number = [(generation number - 1) * 100] + generation version-number + 1

Version numbers larger than 32,767 are divided by 32,768; the integer remainder becomes the version number.

On output, the generation number is derived from the version number with this calculation:

generation number = [(version number - 1)/100] + 1

If there is a remainder after the version number is divided by 100, the remainder becomes the generation version number. It is not added to 1 to form the generation number.
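The two calculations can be sketched in Python as shown below. The function names are hypothetical. The sketch assumes that the remainder is taken from (version number - 1), which makes the output calculation the exact inverse of the input calculation; the text does not state this explicitly.

```python
def version_from_generation(generation: int, generation_version: int) -> int:
    """Input direction: compute the version number from the label fields."""
    version = (generation - 1) * 100 + generation_version + 1
    if version > 32767:
        # Larger values are divided by 32,768 and the remainder is kept.
        version %= 32768
    return version


def generation_from_version(version: int) -> tuple[int, int]:
    """Output direction: derive (generation, generation version) fields.

    Assumption: the remainder is computed from (version - 1).
    """
    generation = (version - 1) // 100 + 1
    generation_version = (version - 1) % 100
    return generation, generation_version
```

Under this assumption, version 101 maps to generation number 0002 with generation version number 00, and converting those fields back yields version 101 again.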

Creation Date and Expiration Date Fields

The creation date field contains the date the file is created. The expiration date field contains the date the file expires. The system interprets the expiration date of the first file on a volume as the date that both the file and the volume expire. The creation and expiration dates are stored in the Julian format. This 6-character format (#YYDDD) permits the # symbol to consist of either an ASCII space or an ASCII zero, with the YYDDD consisting of a year and day value. If an ASCII space is indicated, it is assumed that 1900 is added to the 2-digit year value; if an ASCII zero is indicated, it is assumed that 2000 is added to the 2-digit year value. For the YYDDD part of the format, only dates are relevant for these fields; time is always returned as 00:00:00:00.
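Decoding the 6-character date field can be sketched as follows. The function name is hypothetical, and the sketch rejects a day value of 000 for simplicity, which a real reader of tape labels may need to treat as a special case.

```python
from datetime import date, timedelta


def parse_label_date(field: str) -> date:
    """Decode a #YYDDD field, where # is an ASCII space or an ASCII zero."""
    if len(field) != 6:
        raise ValueError("date field must be exactly 6 characters")
    century_flag, year_digits, day_digits = field[0], field[1:3], field[3:6]
    if century_flag == " ":
        century = 1900
    elif century_flag == "0":
        century = 2000
    else:
        raise ValueError("first character must be a space or a zero")
    year = century + int(year_digits)
    day_of_year = int(day_digits)
    if day_of_year < 1:
        raise ValueError("day of year must be at least 1 in this sketch")
    result = date(year, 1, 1) + timedelta(days=day_of_year - 1)
    if result.year != year:
        raise ValueError("day of year is too large for this year")
    return result
```

For example, the field consisting of a space followed by `99365` decodes to 31 December 1999, and `000001` decodes to 1 January 2000.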

OpenVMS Version 5.1-1 and later versions support the ASCII zero in addition to the previously existing ASCII space, per the ANSI X3.27–1987 standard, making them year 2000 ready. This ANSI standard is believed to be valid through the year 2100.

OpenVMS versions prior to Version 5.1-1 have known problems initializing and mounting magnetic tapes in the year 2000 and later.

By default, the current date is written to both the creation and expiration date fields when you create a file. Because the expiration date is the same as the creation date, the file expires on creation and you can overwrite it immediately. If the expiration date is a date that is later than the creation date and if the files you want to overwrite have not expired, you must specify the /OVERRIDE=EXPIRATION qualifier with the INITIALIZE or MOUNT command.

To write dates other than the defaults in the date fields in this label, specify the creation date field (CDT) and the expiration date field (EDT) of the RMS date and time extended attribute block (XABDAT).

When you do not specify a creation date, RMS writes the current date to the creation date field by default. To specify a zero creation date, you must specify a year before 1900. If you specify a binary zero in the date field, the system will write the current date to the field.

For details on the XABDAT, see the VSI OpenVMS Record Management Services Reference Manual.

Accessibility Field

The contents of this field are described in Section 1.3.1.5, ''Volume Labels''.

Implementation Identifier Field

The implementation identifier field specifies, using ASCII "a" characters, an identification of the implementation that recorded the Volume Header Label Set.

1.3.1.6.2. HDR2 Label

The HDR2 label describes the record format, maximum record size, and maximum block size of a file.

Record Format Field

The record format field specifies the type of record format the file contains. The operating system supports two record formats: fixed length (F) and variable length (D). When files contain record formats that the system does not support, it cannot interpret the formats and classifies them as undefined.

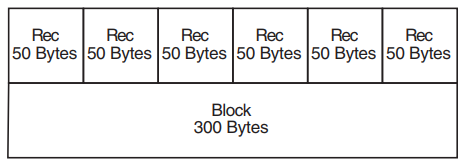

Fixed-length records are all the same length. No indication of the record length is required within the records because either the HDR2 label defines the record length or you specify the record length with the /RECORDSIZE qualifier. A fixed-length record can be a complete block, or several records can be grouped together in a block.

Fixed-length blocked records are padded to a multiple of 4 records. Variable-length records are padded to the block size. If a block is not filled, it will be padded with circumflex characters (^). The standard does not allow records containing only circumflexes; the system will interpret this as padding, not data.

Figure 1.11, ''Blocked Fixed-Length Records'' shows a block of fixed-length records. Each record has a fixed length of 50 bytes. All six records are contained in a 300-byte block. The records are blocked—that is, grouped together as one entity—to increase processing efficiency; when records are blocked, you can access many of them with one I/O request. The block size should be a multiple of the record size.

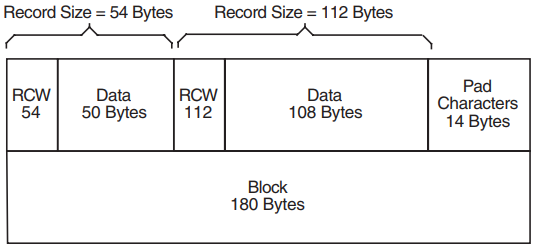

The size of a variable-length record is indicated by a record control word (RCW). The RCW consists of four bytes at the beginning of each record. A variable-length record can be a complete block, or several records can be grouped together in a block.

Two variable-length records are shown in Figure 1.12, ''Variable-Length Records''. The first consists of 54 bytes, including the RCW. The second consists of 112 bytes, including the RCW. The records are contained in a 166-byte block.
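The layout of variable-length records within a block can be sketched as follows. This is an illustration under stated assumptions, not a description of the RMS implementation: it assumes that the RCW holds the record length, including the 4-byte RCW itself, as four ASCII decimal digits, and that unused space at the end of a block is filled with circumflex characters. The function names are hypothetical.

```python
RCW_LENGTH = 4  # the record control word occupies four bytes, per the text


def pack_variable_records(records: list[bytes], block_size: int) -> bytes:
    """Build one block from variable-length records, padding with '^'."""
    block = bytearray()
    for data in records:
        length = len(data) + RCW_LENGTH  # assumed: the length includes the RCW
        if length > 9999:
            raise ValueError("record too long for a 4-digit RCW")
        block += f"{length:04d}".encode("ascii") + data
    if len(block) > block_size:
        raise ValueError("records do not fit in the block")
    return bytes(block.ljust(block_size, b"^"))


def unpack_variable_records(block: bytes) -> list[bytes]:
    """Split a block back into its records, stopping at '^' padding."""
    records = []
    position = 0
    while position + RCW_LENGTH <= len(block) and block[position:position + 1] != b"^":
        length = int(block[position:position + RCW_LENGTH].decode("ascii"))
        if length < RCW_LENGTH:
            raise ValueError("invalid RCW")
        records.append(block[position + RCW_LENGTH:position + length])
        position += length
    return records
```

With records of 50 and 108 data bytes, the packed records occupy 54 and 112 bytes and exactly fill a 166-byte block, matching the sizes in the example above.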

Do not use system-dependent record formats on volumes used for information interchange. OpenVMS system-dependent formats are stream and variable with fixed-length control (VFC).

Block Length Field

The block length field denotes the maximum size of the blocks. According to the ANSI magnetic tape standard, valid block sizes range from 18 to 2048 bytes. However, the operating system allows you to specify a smaller or larger block size by using the /BLOCKSIZE qualifier with the MOUNT command. To specify the block size using RMS, see the BLS field in the file access block (FAB) in the VSI OpenVMS Record Management Services Reference Manual. When you specify a block size outside the ANSI magnetic tape standard range, the volume may not be processed correctly by other systems that support the ANSI magnetic tape standards.

Record Length Field

The record length field denotes either the size of fixed-length records or the maximum size of variable-length records in a file. Valid RMS record sizes vary, depending on the record format. The range for fixed-length records is 1 to 65,534 bytes; the range for variable-length records is 4 to 9,999 bytes, including the 4-byte RCW. Therefore, the maximum length of the data area of a variable-length record is 9,995 bytes. To comply with ANSI magnetic tape standards, the record size should not be larger than the maximum block size of 2,048 bytes, even though OpenVMS systems allow larger record sizes (when the block size is larger).

For volumes containing files that do not have HDR2 labels, you must specify MOUNT/RECORDSIZE=n at mount time. The variable n denotes the record length in bytes. Files without HDR2 labels were created by a system that supports only level 1 or 2 of the ANSI standard for magnetic tape labels and file structure. Records should be fixed length because this is the only record format that either level supports. If you do not specify a record size, each block will be considered a single record.

Implementation-Dependent Field

The implementation-dependent field contains two 1-character subfields that describe how the operating system interprets record format and form control.

The first subfield, character position 16, denotes whether the RMS attributes are in this label or the HDR3 label. If character position 16 contains a space, the RMS attributes are in the HDR3 label; if it contains any character other than a space, character position 16 is the first byte of the RMS attributes in the HDR2 label. The attributes appear in character positions 16 through 36 and 38 through 50.

The second subfield, character position 37, denotes the form control. Form control can have the following values:

| Value | Meaning |
| --- | --- |
| A | First byte of record contains Fortran control characters. |
| M | The record contains all form control information. |
| space | Line-feed/carriage-return combination is to be inserted between records when the records are written to a carriage-control device, such as a line printer or terminal. If form control is not specified when a file is created, this is the default. |
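As a rough illustration of how a program might interpret the implementation-dependent field, the following Python sketch inspects an HDR2 label held as a string. It assumes that the label is 80 characters long and that the form control subfield is character position 37 (the one position excluded from the attribute ranges given above). The function names are hypothetical and this is not the magnetic tape file system's own logic.

```python
FORM_CONTROL = {
    "A": "first byte of record contains Fortran control characters",
    "M": "record contains all form control information",
    " ": "line-feed/carriage-return inserted between records (default)",
}


def locate_rms_attributes(hdr2: str):
    """Return (label_name, attributes) for the RMS attributes of a file."""
    if len(hdr2) != 80:  # assumption: labels are 80 characters long
        raise ValueError("expected an 80-character label")
    if hdr2[15] == " ":  # character position 16 (1-based)
        return "HDR3", None
    # Attributes occupy positions 16-36 and 38-50 of the HDR2 label.
    return "HDR2", hdr2[15:36] + hdr2[37:50]


def form_control(hdr2: str) -> str:
    """Describe the form control value in character position 37 (assumed)."""
    return FORM_CONTROL.get(hdr2[36], "unknown")
```

Python string indexes are zero-based, so character position 16 is `hdr2[15]` and character position 37 is `hdr2[36]`.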

Buffer-Offset Length Field

For implementations that support buffer offsets, the buffer-offset length field indicates the length of information that prefixes each data block. The magnetic tape file system supports the input of buffer offset, which means that the buffer-offset length obtained from the HDR2 label (when reading the file) is used to determine the actual start of the data block. The magnetic tape file system does not support the writing of a buffer offset.

Note that, if you open a file for append or update access and the buffer-offset length is nonzero, the open operation will not succeed.
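The two rules above can be summarized in a short Python sketch. The function names and the access-mode strings are hypothetical; the sketch only models the behavior described in this section and is not the magnetic tape file system's implementation.

```python
def data_area(block: bytes, buffer_offset: int) -> bytes:
    """Skip the buffer-offset prefix to reach the actual start of the data."""
    if not 0 <= buffer_offset <= len(block):
        raise ValueError("buffer offset outside the block")
    return block[buffer_offset:]


def open_allowed(access: str, buffer_offset: int) -> bool:
    """Append and update access fail when the buffer-offset length is nonzero."""
    if access in ("append", "update") and buffer_offset != 0:
        return False
    return True


print(data_area(b"PREFIXdata", 6))
print(open_allowed("read", 6), open_allowed("append", 6))
```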

1.3.1.6.3. HDR3 Label

The HDR3 label describes the RMS file attributes. For RMS operations, data in the HDR3 label supersedes data in the HDR2 label.

Although the HDR3 label usually exists for every file on an ANSI magnetic tape volume, there are two situations when this label will not be written. The first is when an empty dummy file is created during volume initialization; no HDR3 label is written because the empty file does not require RMS attributes. The second is when you specify MOUNT/NOHDR3 at mount time. You should use the /NOHDR3 qualifier when you create files on volumes that will be interchanged to systems that do not process HDR3 labels correctly.

The RMS attributes describe the record format of a file. These attributes are converted from 32 bytes of binary values to 64 bytes of ASCII representations of their hexadecimal equivalents for storage in the HDR3 label.
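The conversion from 32 binary bytes to 64 ASCII characters can be sketched as follows. This Python fragment shows only the general hexadecimal encoding technique; the use of uppercase digits and the byte ordering are assumptions, and the function names are hypothetical.

```python
def encode_hdr3_attributes(attrs: bytes) -> str:
    """Encode 32 bytes of binary attributes as 64 hexadecimal characters."""
    if len(attrs) != 32:
        raise ValueError("expected 32 bytes of attributes")
    return attrs.hex().upper()  # assumption: uppercase hexadecimal digits


def decode_hdr3_attributes(text: str) -> bytes:
    """Recover the 32 binary bytes from their 64-character representation."""
    if len(text) != 64:
        raise ValueError("expected 64 hexadecimal characters")
    return bytes.fromhex(text)


attrs = bytes(range(32))  # placeholder data, not real RMS attributes
encoded = encode_hdr3_attributes(attrs)
print(len(encoded), decode_hdr3_attributes(encoded) == attrs)
```

Each byte becomes two hexadecimal characters, which is why the stored form is twice the size of the binary form.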

1.3.1.6.4. HDR4 Label

The HDR4 label contains the remainder of an OpenVMS file name that would not fit in the HDR1 file identifier field.

1.3.1.7. Trailer Labels

The operating system supports two sets of trailer labels: end-of-file (EOF) and end-of-volume (EOV). A trailer label is written for each header label.

The label identifier field (characters 1-3) contains EOF or EOV.

The block count field (characters 55-60) in the EOF1 and EOV1 labels contains the number of data blocks in the file section.

The particular label that OpenVMS systems write depends on whether a file extends beyond a volume. If a file terminates within the limits of a volume, EOF labels are written to delimit the file (see Figure 1.7, ''Single File on a Single Volume''). If a file extends across volume boundaries before terminating, EOV labels are written, indicating that the file continues on another volume (see Figure 1.8, ''Single File on Multiple Tape Volumes'').
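The trailer label fields described above can be read with simple string slices. The following Python sketch assumes an 80-character label and assumes that character 4 holds the label number (as in EOF1 and EOV1); the function name `parse_trailer_label` is hypothetical.

```python
def parse_trailer_label(label: str) -> dict:
    """Extract the identifier and, for EOF1/EOV1, the block count."""
    if len(label) != 80:  # assumption: labels are 80 characters long
        raise ValueError("expected an 80-character label")
    identifier = label[0:3]  # characters 1-3
    if identifier not in ("EOF", "EOV"):
        raise ValueError(f"not a trailer label: {identifier!r}")
    number = label[3]  # assumption: character 4 is the label number
    block_count = int(label[54:60]) if number == "1" else None  # characters 55-60
    return {
        "identifier": identifier,
        "number": number,
        "block_count": block_count,
        # EOV means the file continues on another volume.
        "continues_on_next_volume": identifier == "EOV",
    }


label = "EOF1" + " " * 50 + "000012" + " " * 20
print(parse_trailer_label(label))
```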

1.4. Using Command Procedures to Perform Routine File and Device Operations

Many of the operations that you perform on disk and magnetic tape media are routine in nature. Therefore, you will find it worthwhile to take the time to identify those tasks that you routinely perform at your particular site. Once you have isolated those tasks, you can design command procedures to assist you in performing them.

For example, if you are a system manager or an operator, you must frequently perform data integrity tasks such as backing up media. You could enter all of the commands, parameters, and qualifiers required to back up your media each time that you perform the backup operation, or you could write a single command procedure containing that set of commands, parameters, and qualifiers that performs the same backup operation whenever it is executed.

In order to familiarize yourself with the syntax used to design and execute command procedures, see the VSI OpenVMS User's Manual.

1.5. Volume Protection

The system protects data on disk and tape volumes to make sure that no one accesses the data accidentally or without authorization. For disk volumes, the system provides protection at the file, directory, and volume levels. For tape volumes, the system provides protection at the volume level only.

In addition to protecting the data on mounted volumes, the system provides device protection coded into the home block of the disks and tapes. See Section 1.2, ''Disk Concepts'' for more information.

In general, you can protect your disk and tape volumes with user identification codes (UICs) and access control lists (ACLs). The standard protection mechanism is UIC-based protection. Access control lists permit you to customize security for a file or a directory.

UIC-based protection is determined by an owner UIC and a protection code, whereas ACL-based protection is determined by a list of entries that grant or deny access to specified files and directories.

Note

You cannot use ACLs with magnetic tape files.

When you try to access a file that has an ACL, the system uses the ACL to determine whether or not you have access to the file. If the ACL does not explicitly allow or refuse you access, or if the file has no ACL, the system uses the UIC-based protection to determine access. (See the VSI OpenVMS Guide to System Security for additional information about system security.)
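The order of evaluation described above (ACL first, then UIC-based protection) can be modeled with a simplified Python sketch. The data structures here are hypothetical: an ACL is represented as a list of (identifier, allowed) pairs, the first matching entry is assumed to decide, and `uic_check` stands in for the UIC-based protection test. The real security model has many more details.

```python
from typing import Callable, List, Optional, Tuple

Acl = List[Tuple[str, bool]]  # hypothetical form: (identifier, access allowed?)


def acl_decision(acl: Acl, user: str) -> Optional[bool]:
    """Return True or False if the ACL explicitly decides, otherwise None."""
    for identifier, allowed in acl:
        if identifier == user:  # assumption: the first matching entry wins
            return allowed
    return None


def has_access(acl: Optional[Acl], user: str,
               uic_check: Callable[[str], bool]) -> bool:
    """Consult the ACL first; fall back to UIC-based protection."""
    decision = acl_decision(acl or [], user)
    if decision is not None:
        return decision
    return uic_check(user)  # no ACL, or the ACL is silent about this user


acl = [("SMITH", True), ("JONES", False)]
print(has_access(acl, "JONES", lambda user: True))  # the ACL explicitly refuses
print(has_access(acl, "DOE", lambda user: True))    # falls back to the UIC check
```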

For detailed information about protecting your files, directories, or volumes, see Section 4.4, ''Defining File Protection''.

1.6. RMS (Record Management Services)

OpenVMS Record Management Services (OpenVMS RMS or simply RMS) is the file and record access subsystem of the OpenVMS operating systems. RMS allows efficient and flexible data storage, retrieval, and modification for disks, magnetic tapes, and other devices.

You can use RMS from low-level and high-level languages. If you use a high-level language, it may not be easy or feasible to use RMS directly because you must allocate control blocks and access fields within them. Instead, you can rely on certain processing options of your language's input/output (I/O) statements or on the File Definition Language (FDL), a specialized language provided as an alternative to using RMS control blocks directly.

If you use a low-level language, you can either use the record management services directly or use FDL.

1.6.1. File Definition Language (FDL)

FDL is a special-purpose language you can use to specify file characteristics. FDL is particularly useful when you are using a high-level language or when you want to ensure that you create properly tuned files. Properly tuned files can be created from an existing file or from a new design for a file. The performance benefits of properly tuned files can greatly affect application and system performance, especially when using large indexed files.

FDL allows you to use all of the creation-time capabilities and many of the run-time capabilities of RMS control blocks, including the file access block (FAB), the record access block (RAB), and the extended attribute blocks (XABs).

For more information about FDL, see Section 4.1.2, ''Using File Definition Language''.

1.6.2. RMS Data Structures

RMS control blocks generally fall into two groups: those pertaining to files and those pertaining to records.

To exchange file-related information with file services, you use a control block called a file access block (FAB). You use the FAB to define file characteristics, file specifications, and various run-time options. The FAB has a number of fields, each identified by a symbolic offset value. For instance, the allocation quantity for a file is specified in a longword-length field having a symbolic offset value of FAB$L_ALQ. FAB$L_ALQ indicates the number of bytes from the beginning of the FAB to the start of the field.

To exchange record-related information with RMS, you use a control block called a record access block (RAB). You use the RAB to define the location, type, and size of the input and output buffers, the record access mode, certain tuning options, and other information. The symbolic offset values for the RAB fields have the prefix RAB$ to differentiate them from the values used to identify FAB fields. The RAB symbolic offset values have the same general format, where the letter following the dollar sign indicates the field length and the letters following the underscore are a mnemonic for the field's function. For example, the multibuffer count field (MBF) specifies the number of local buffers to be used for I/O and has the symbolic offset value RAB$B_MBF. The value of RAB$B_MBF is equal to the number of bytes from the beginning of the RAB to the start of the field.

Where applicable, RMS uses control blocks called extended attribute blocks (XABs), together with FABs and RABs, to support the exchange of information with RMS. For example, a Key Definition XAB specifies the characteristics for each key within an indexed file. The symbolic offset values for XAB fields have the common prefix XAB$.
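The naming pattern of the symbolic offsets can be illustrated with a small Python parser. It is a sketch only: it handles just the B, W, L, and Q length letters, on the assumption that they denote a byte, word, longword, and quadword (1, 2, 4, and 8 bytes), and it does not know the actual offset values, which are defined by RMS. The function name `parse_symbolic_offset` is hypothetical.

```python
FIELD_SIZES = {"B": 1, "W": 2, "L": 4, "Q": 8}  # bytes; subset of length letters
CONTROL_BLOCKS = ("FAB", "RAB", "XAB")


def parse_symbolic_offset(name: str):
    """Split a name such as RAB$B_MBF into (block, field size, mnemonic)."""
    block, dollar, rest = name.partition("$")
    letter, underscore, mnemonic = rest.partition("_")
    if not dollar or not underscore or not mnemonic:
        raise ValueError(f"not a symbolic offset name: {name}")
    if block not in CONTROL_BLOCKS or letter not in FIELD_SIZES:
        raise ValueError(f"unsupported prefix or length letter: {name}")
    return block, FIELD_SIZES[letter], mnemonic


print(parse_symbolic_offset("FAB$L_ALQ"))  # longword allocation quantity field
print(parse_symbolic_offset("RAB$B_MBF"))  # byte-length multibuffer count field
```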

For more information about RMS control blocks, see Chapter 4, "Creating and Populating Files".

1.6.3. Record Management Services

Because RMS performs operations related to files and records, services generally fall into one of two groups:

Services that support file processing. These services create and access new files, access (or open) previously created files, extend the disk space allocated to files, close files, get file characteristics, and perform other functions related to the file.

Services that support record processing. These services get (extract), find (locate), put (insert), update (modify), and delete (remove) records and perform other record operations.

For more information about the various services, see Chapter 7, "File Sharing and Buffering" and Chapter 8, "Record Processing".

1.7. RMS Utilities

The following are RMS file-related utilities:

The Analyze/RMS_File utility

The Convert utility

The Convert/Reclaim utility

The Create/FDL utility

The Edit/FDL utility

These utilities let you design, create, populate, maintain, and analyze data files that can use the full set of RMS create-time and run-time options. They help you create efficient files that use a minimum amount of system resources, while decreasing I/O time.

For more information about the record management utilities, see the VSI OpenVMS Record Management Utilities Reference Manual.

1.7.1. The Analyze/RMS_File Utility

With the Analyze/RMS_File utility (ANALYZE/RMS_FILE), you can perform five functions:

Inspect and analyze the internal structure of an RMS file

Generate a statistical report on the file's structure and use

Interactively explore the file's internal structure

Generate an FDL file from an RMS file

Generate a summary report on the file's structure and use

ANALYZE/RMS_FILE is particularly useful in generating an FDL file from an existing data file that you can then use with the Edit/FDL utility (also called the FDL editor) to optimize your data files. Alternatively, you can provide general tuning for the file without the FDL editor.

To invoke the Analyze/RMS_File utility, use the following DCL command line format:

ANALYZE/RMS_FILE filespec[,...]

The filespec parameter lets you select the data file you want to analyze.

For more information about the Analyze/RMS_File utility, refer to Chapter 10, "Maintaining Files" of this manual and the VSI OpenVMS Record Management Utilities Reference Manual.

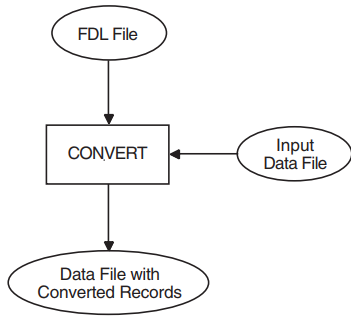

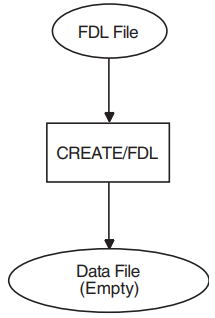

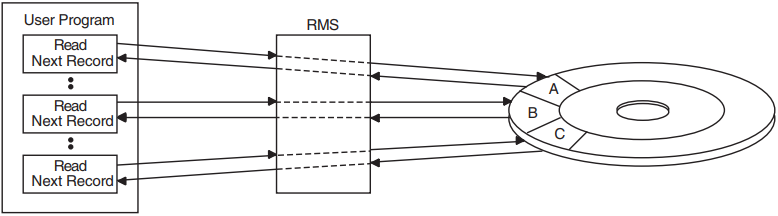

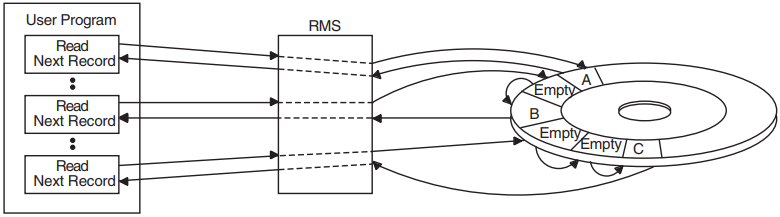

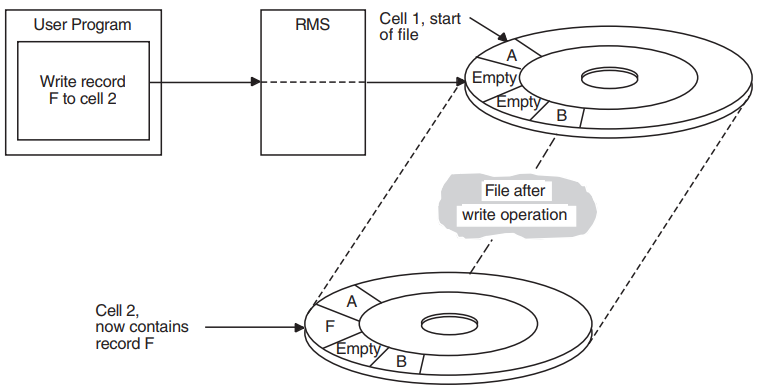

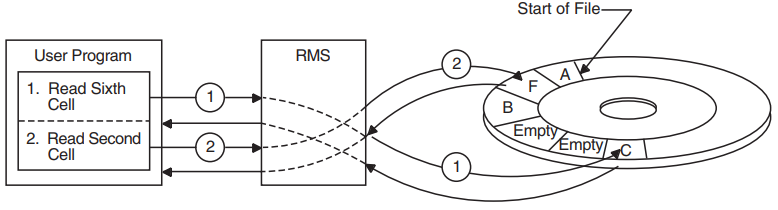

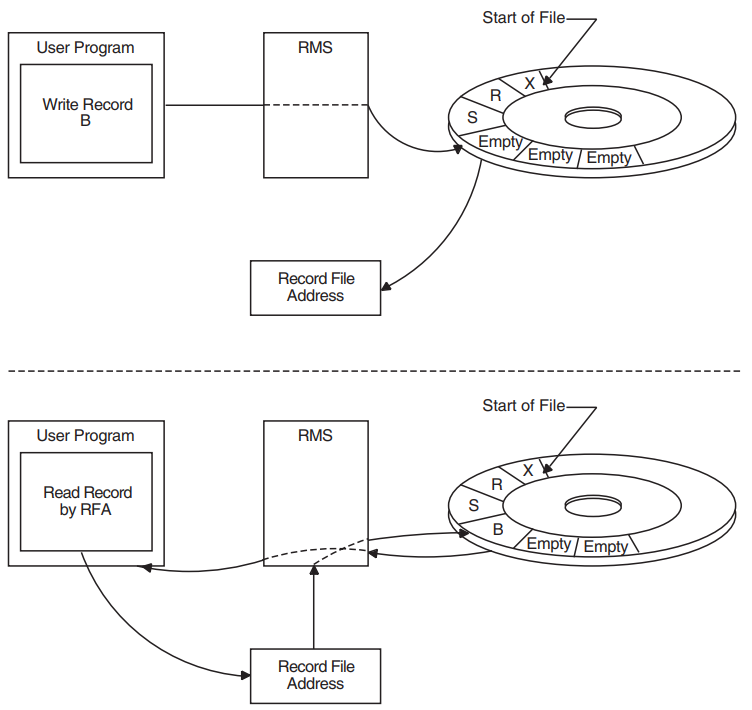

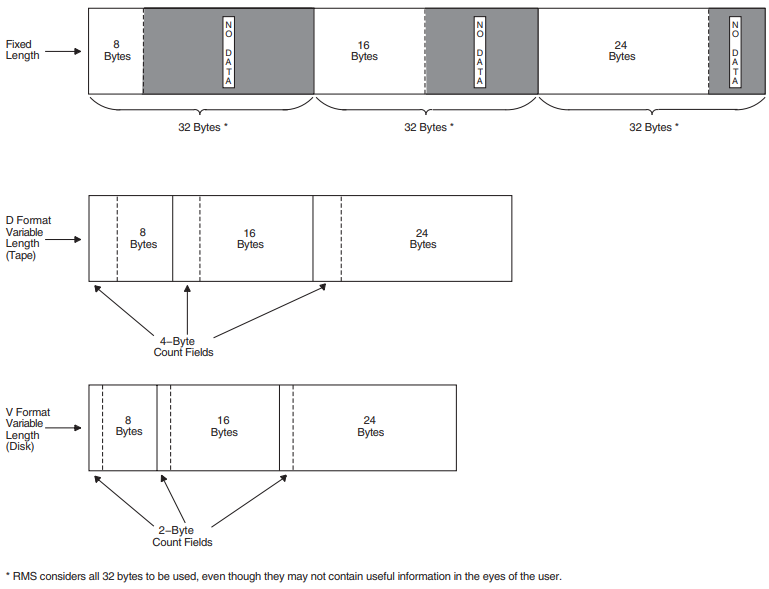

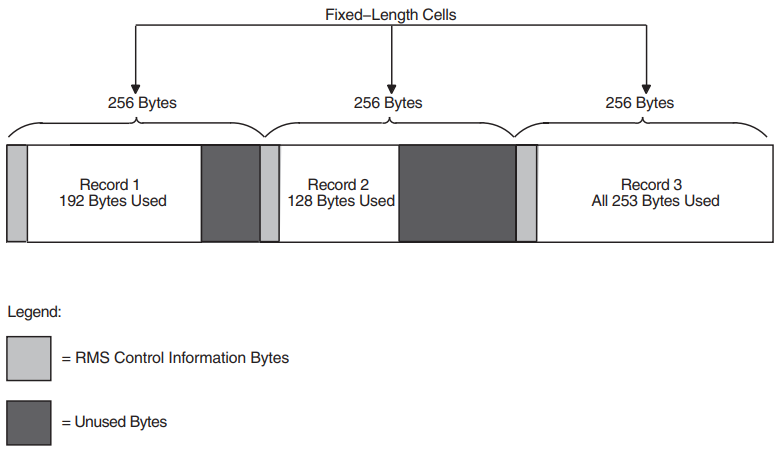

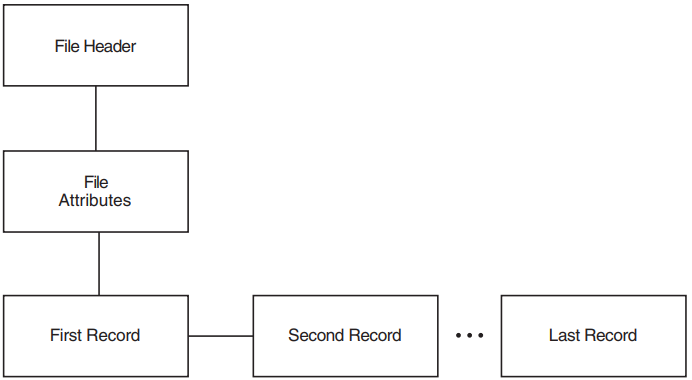

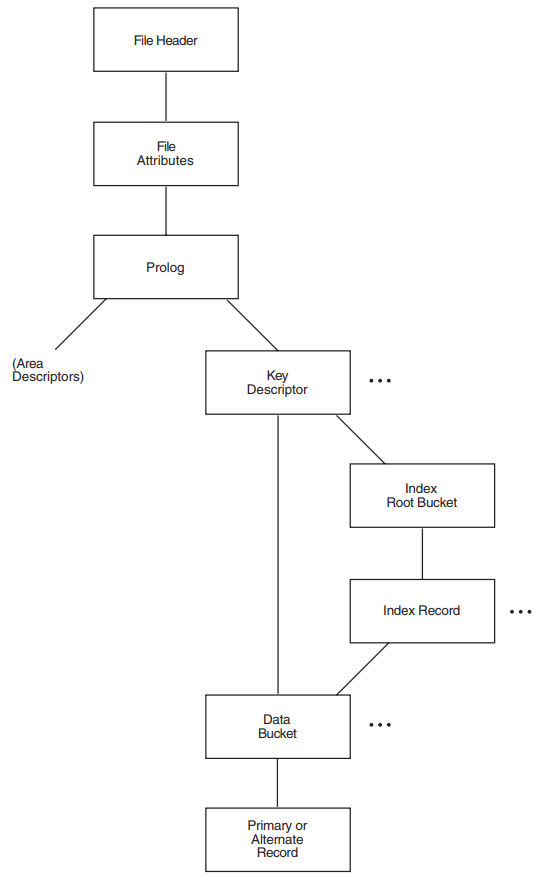

1.7.2. The Convert Utility