VSI DECdfs for OpenVMS Management Guide

- Software Version: VSI DECdfs Version 2.4

- Operating System and Version: VSI OpenVMS IA-64 Version 8.4-1H1 or higher; VSI OpenVMS Alpha Version 8.4-2L1 or higher

Preface

This manual describes VSI DECdfs for OpenVMS management concepts and procedures and the functions of the management commands. It assumes a division of job responsibilities between the person who manages VSI DECdfs on a network node and the person who manages the Digital Distributed Name Service (DECdns) for a network. Such a division of responsibilities may not always exist. If you need information on DECdns, see the manuals entitled VSI DECnet-Plus for OpenVMS DECdns Management Guide and DECnet/OSI DECdns Management.

1. About VSI

VMS Software, Inc. (VSI) is an independent software company licensed by Hewlett Packard Enterprise to develop and support the OpenVMS operating system.

2. Intended Audience

This manual is intended for the VSI DECdfs manager: anyone who sets up, controls, and tunes performance of a VSI DECdfs server, client, or both. Managers must have OpenVMS knowledge and experience to the system manager level and access to the OpenVMS documentation set and to the DECnet documentation set.

Users who need information only about VSI DECdfs commands can find it in the command dictionary in Chapter 4. The manual should be useful to these users, but it is not directed toward them.

3. Related Documents

The VSI DECdfs for OpenVMS documentation set consists of this manual, the VSI DECdfs for OpenVMS Installation Guide, and the VSI DECdfs for OpenVMS Release Notes.

Guide to OpenVMS File Applications

VSI OpenVMS Guide to System Security

VSI OpenVMS User's Manual

VSI OpenVMS System Manager's Manual

VSI OpenVMS I/O User's Reference Manual

VSI OpenVMS System Services Reference Manual

VSI OpenVMS DECnet Network Management Utilities

VSI DECnet-Plus for OpenVMS Network Control Language Reference Guide

DECnet/OSI Network Control Language Reference

VSI OpenVMS DECnet Networking Manual

VSI DECnet-Plus for OpenVMS DECdns Management Guide

DECnet/OSI DECdns Management

4. OpenVMS Documentation

The full VSI OpenVMS documentation set can be found on the VMS Software Documentation webpage at https://docs.vmssoftware.com.

5. VSI Encourages Your Comments

You may send comments or suggestions regarding this manual or any VSI document by sending electronic mail to the following Internet address: <docinfo@vmssoftware.com>. Users who have VSI OpenVMS support contracts through VSI can contact <support@vmssoftware.com> for help with this product.

6. Conventions

| Convention | Meaning |
|---|---|
| Ctrl/x | A sequence such as Ctrl/x indicates that you must hold down the key labeled Ctrl while you press another key or a pointing device button. |
| PF1 x | A sequence such as PF1 x indicates that you must first press and release the key labeled PF1 and then press and release another key or a pointing device button. |
| Return | In examples, a key name enclosed in a box indicates that you press a key on the keyboard. (In text, a key name is not enclosed in a box.) |
| . . . | A horizontal ellipsis in examples indicates one of the following possibilities: |
| ⋮ | A vertical ellipsis indicates the omission of items from a code example or command format; the items are omitted because they are not important to the topic being discussed. |
| ( ) | In command format descriptions, parentheses indicate that you must enclose the options in parentheses if you choose more than one. |
| [ ] | In command format descriptions, brackets indicate optional choices. You can choose one or more items or no items. Do not type the brackets on the command line. However, you must include the brackets in the syntax for OpenVMS directory specifications and for a substring specification in an assignment statement. |
| [ \| ] | In command format descriptions, vertical bars separate choices within brackets or braces. Within brackets, the choices are options; within braces, at least one choice is required. Do not type the vertical bars on the command line. |
| { } | In command format descriptions, braces indicate required choices; you must choose at least one of the items listed. Do not type the braces on the command line. |
| bold text | This typeface represents the introduction of a new term. It also represents the name of an argument, an attribute, or a reason. |
| italic text | Italic text indicates important information, complete titles of manuals, or variables. Variables include information that varies in system output (Internal error number), in command lines (/PRODUCER=name), and in command parameters in text (where dd represents the predefined code for the device type). |
| UPPERCASE TEXT | Uppercase text indicates a command, the name of a routine, the name of a file, or the abbreviation for a system privilege. |
| Monospace text | Monospace type indicates code examples and interactive screen displays. In the C programming language, monospace type in text identifies the following elements: keywords, the names of independently compiled external functions and files, syntax summaries, and references to variables or identifiers introduced in an example. |
| - | A hyphen at the end of a command format description, command line, or code line indicates that the command or statement continues on the following line. |
| numbers | All numbers in text are assumed to be decimal unless otherwise noted. Nondecimal radixes (binary, octal, or hexadecimal) are explicitly indicated. |

Chapter 1. Introduction to DECdfs

Manageability: Centralizing file resources on one system simplifies management. With DECdfs, you do not have to maintain multiple accounts for multiple users on multiple systems across the network. You can move files without disturbing end-user applications or work patterns, and you can back up all of your data with a single backup operation.

Convenience: Even geographically dispersed users can easily access common files just as they access local files.

Timesaving: VSI DECdfs saves users the time spent copying files from remote nodes over the network.

Resource-Use Reduction: VSI DECdfs uses less CPU time and less disk space and requires less labor for maintenance. You can place commonly used files on a single VSI DECdfs server node and eliminate the redundancy of maintaining several copies on multiple nodes across the network.

Security: You can control user access to server files by using proxy access.

The DECdfs file access protocol works in any DECnet environment but performs especially well with high-speed LAN lines. This speed accounts for ease of access: users can access files on a remote server as quickly as on a local device.

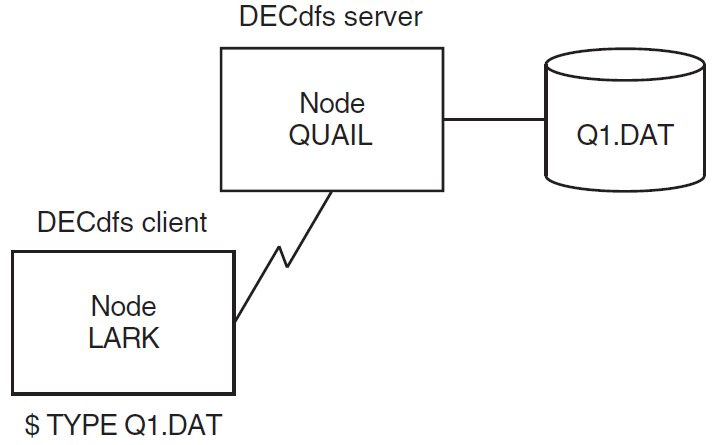

Figure 1.1, ''DECdfs Client-Server Relationship'' illustrates the client-server relationship between two systems running DECdfs. The figure shows the DCL TYPE command at the client system, which displays a file that resides on a disk at the server. Note that the command does not include a node name or access-control information, as would be necessary in an ordinary network operation.

DECdfs can play a special role in an environment where many users have systems with limited disk space. If you off-load files that require significant disk space to a single server, you free resources at each client. If you also move files that require frequent backup operations to the server, you lessen the time and cost of multiple backups.

OpenVMS Cluster environments allow multiple systems to share files. Like clusters, DECdfs provides file sharing, but in contrast to clusters, it allows client systems to be autonomous.

| VSI DECdfs allows users to... | But does not allow them to... |
|---|---|
| Create and manipulate directories | Perform logical and physical I/O |
| Share a file for concurrent reading with other users | Share a file when a user is writing to the file |
| Use all file QIO function codes | Use the shared-write option; DECdfs converts the shared-write option to the exclusive-write option. |
| Use all Record Management Services (RMS) features or the QIO interface | Install files as known images on the client if the files reside at a disk at the server |
| Run applications at the client that use mapped sections on the server | Use system page files or swap files on the server |

In any environment, a group can keep help files, such as the OpenVMS HELP library, on a single DECdfs server disk. In this way, individual systems avoid storing and maintaining their own help files and instead read the files from a shared disk.

In a computer-aided design (CAD) laboratory, designers use schematic capture systems that include extensive parts libraries. Each designer has a workstation running OpenVMS and needs access to all the parts in all the libraries. These libraries use large amounts of disk space and require frequent updates. With DECdfs, you can store the parts libraries on one system in the network. This reduces demands on disk space and facilitates keeping the libraries up to date.

In a development environment, many programmers share a code management system, such as DEC Code Management System (CMS). Storing the CMS libraries on a DECdfs server allows their considerable disk-space requirements to be centralized where available disks reside. Each developer checks out a particular source file and edits it on his or her own DECdfs client system. When compiling and linking at the client, however, the code compiles and links with the other source and object files, which remain on the server.

The remainder of this chapter describes the basic components of a DECdfs environment and the interface for managing DECdfs.

1.1. Components of a VSI DECdfs Environment

As described in the introduction to this chapter, DECdfs creates a client-server relationship among network nodes. A node can be a client, a server, or both. A client-and-server node can share one of its disks with other nodes while accessing files on another node's disks. This manual refers separately to the client and server functions at such nodes. When the manual refers to a client or a server, it refers to functions that can exist on the same node unless the reference is specifically to a remote client or remote server.

The following sections describe the components of a DECdfs environment.

1.1.1. Digital Distributed Name Service

Like DECdfs, the Digital Distributed Name Service (DECdns) implements a client-server relationship between the user of resources and the provider of resources. As a user of DECdns, each DECdfs node is a DECdns client. The DECdns system that provides information about the location of files is a DECdns server.

VAX and Alpha processors running DECnet Phase V

VAX processors running the OpenVMS operating system

DECdns is not available on OpenVMS Alpha systems unless the system is running DECnet Phase V. If a node does not provide DECdns software, DECdfs cannot access the DECdns registry of available resources. In this case, DECdfs requires users on nodes without DECdns to specify the node name where the resource is located. Using DECdfs on nodes without DECdns is described in Section 2.3.2.2, ''Systems Without DECdns''.

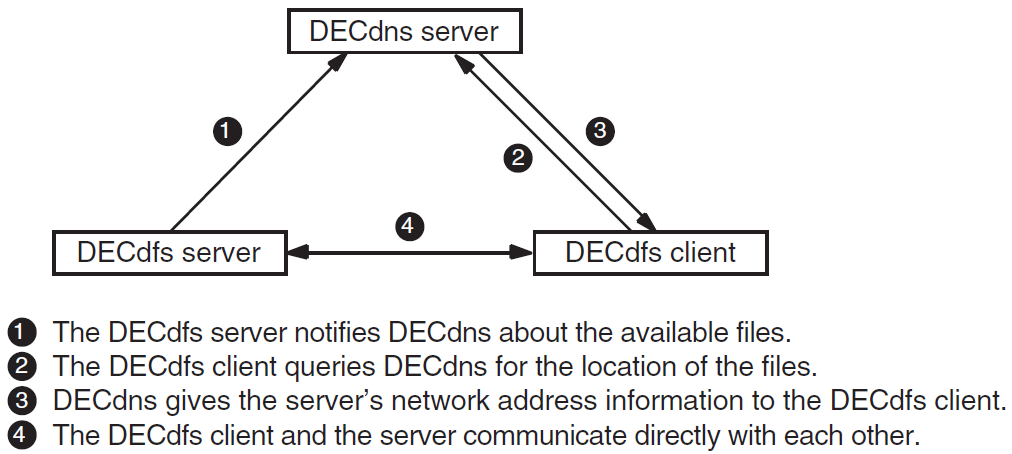

If DECdns is available, whenever a DECdfs server makes available a group of files, it notifies the DECdns server. DECdns records the global (networkwide) name of the files and address information needed to communicate with the DECdfs server. To use the DECdfs server, a DECdfs client queries the DECdns server for the DECdfs server's address information. The client then passes the node address to DECnet for setting up a network connection (link) between the DECdfs client node and the DECdfs server node. After the client receives the server address information from DECdns, it communicates directly with the server. Figure 1.2, ''Interaction Between VSI DECdfs and the DECdns Server'' illustrates how DECdfs interacts with DECdns.

If a DECdfs client wants to access a resource on a VSI DECdfs server but the client cannot access the DECdns server that has registered the resource, the client must specify the node name of the DECdfs server it wants to use. If a VSI DECdfs server cannot access the DECdns server, all VSI DECdfs clients that want to use the VSI DECdfs server's resource must specify that server's node name.
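The lookup and fallback behavior described above can be pictured with a small Python model. This is only an illustrative sketch, not DECdfs or DECdns code: the `NameService` class and the `resolve_server` function are hypothetical names, and the access point name HELP and node name EIDER are borrowed from the Figure 1.3 example later in this chapter.

```python
from typing import Dict, Optional


class NameService:
    """Toy stand-in for the DECdns namespace: access point name -> server node."""

    def __init__(self) -> None:
        self._registry: Dict[str, str] = {}

    def register(self, access_point: str, server_node: str) -> None:
        # A server announces that it serves this access point.
        self._registry[access_point] = server_node

    def lookup(self, access_point: str) -> Optional[str]:
        # A client asks which node serves the access point.
        return self._registry.get(access_point)


def resolve_server(access_point: str,
                   name_service: Optional[NameService],
                   node: Optional[str] = None) -> str:
    """Return the server node a client should connect to.

    An explicitly supplied node name is used as given; otherwise the
    name service (if reachable) is queried.
    """
    if node is not None:
        return node
    if name_service is not None:
        found = name_service.lookup(access_point)
        if found is not None:
            return found
    raise LookupError(
        f"cannot locate {access_point!r}: specify the server node name")


dns = NameService()
dns.register("HELP", "EIDER")                       # server side
print(resolve_server("HELP", dns))                  # client that can reach the name service
print(resolve_server("HELP", None, node="EIDER"))   # client that cannot
```

In this model, a client with no name service and no explicit node name gets a `LookupError`, which mirrors the requirement that such clients specify the server's node name.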

DECdns registers information about network resources in a namespace, which is the registry of network names managed by DECdns. Certain DFS$CONTROL commands listed in Section 1.4, ''The DFS$CONTROL Commands'' allow you to add and remove information from the DECdns namespace. If you need to move a DECdfs disk from one server to another, you can simply remove and reregister the DECdns information. The users at a client never need to know that the location of the files has changed. Users on nodes that are not running DECdns will need to know of a resource relocation because these users explicitly specify the name of the server where the resource resides.

Consult with the DECdns manager (a person responsible for managing DECdns) at your site before setting up DECdfs on your system. The DECdns manager needs to know how you plan to set up DECdfs, and you need information on how the DECdns manager has set up DECdns. For example, some DFS$CONTROL commands require that you specify names that conform to the DECdns naming conventions in your network. A DECdns manager can create a single-directory namespace or a hierarchical namespace. It is important to know which type of namespace your network is using so that you can use the DFS$CONTROL commands correctly. The DECdns manager must inform VSI DECdfs users of any access point changes that make access impossible.

1.1.2. Access Points

An access point represents the file resources that a server makes available to clients. It allows the server to name the available resources and allows the client to find the resources. An access point refers to a specific directory (usually the master file directory) on a specific device.

At a server, the DECdfs manager decides what directory on what device to make available to DECdfs client users. The access point gives access to that directory and all subdirectories. The master file directory is the default choice. The DECdfs manager gives the access point a name. Using the DFS$CONTROL command ADD ACCESS_POINT, the manager then registers the access point name with the local DECdfs server database (see Section 1.1.3.1, ''The Server '') and with DECdns. If DECdns is not available, the access point is recorded only in the server database. See Section 2.3.2.2 for information on adding access points on systems without DECdns.

Causes the client system to create a DECdfs client (DFSC) device.

The client device (called the DFSC device) is a pseudodevice, a forwarding mechanism through which a physical device can be reached. The system treats a pseudodevice as if it were a physical I/O device though it is not (hence the name).

Creates a correlation between the DFSC device and the server device and directory to which the access point refers.

When a user on a client system first mounts an access point, DECdfs queries DECdns to find out which node serves that access point. Systems not running DECdns must use the /NODE qualifier to specify the VSI DECdfs server name. If a DECdfs connection to the server does not already exist, the client sets up one. If a DECnet logical link does not already exist, the client also requests DECnet to provide one.

The DECdfs Communication Entity creates one connection for all communication between a server and a particular client. This single connection provides DECdfs service to any number of users at the client. The users can mount any number of access points on the server and open any number of files.

End users on the client can then use the mounted client device as if it were a local device. If you assign a logical name to the client device, access to the files can be simple. End users enter standard DCL file commands requesting directories of, or access to, files on the client device. DECdfs intervenes and interacts with DECnet software to redirect these requests across the network to the server of the actual device.

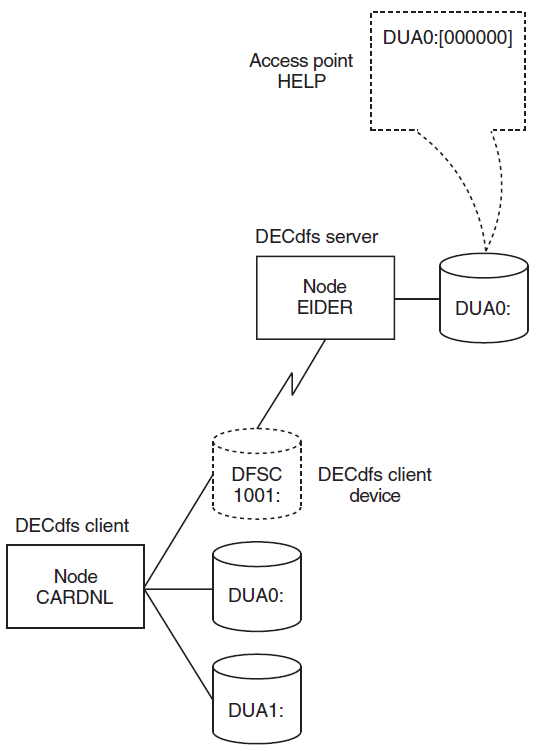

Figure 1.3, ''Correlation Between a Client Device and an Access Point'' illustrates the correlation between a client device and access point named HELP.

In Figure 1.3, ''Correlation Between a Client Device and an Access Point'', a correlation exists between client device DFSC1001: on node CARDNL and the access point that refers to DUA0:[000000] on node EIDER. A user at CARDNL can access files subordinate to DUA0:[000000] by specifying the device DFSC1001: with the file specification in DCL commands. If the DFSC1001: client device has the logical name HELP_LIBRARY, as in the previous example, the user can specify HELP_LIBRARY in the file specification.
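As a rough illustration of this correlation, the following Python sketch models the client-side mapping from a logical name to a client device, and from the client device to the server directory behind the access point. The data structures and the `describe_target` function are hypothetical; only the names DFSC1001:, CARDNL, EIDER, HELP, HELP_LIBRARY, and DUA0:[000000] come from the text.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class AccessPoint:
    name: str
    server_node: str
    device: str
    directory: str


# Values taken from the Figure 1.3 description.
help_ap = AccessPoint("HELP", "EIDER", "DUA0:", "[000000]")

# Hypothetical client-side tables on node CARDNL.
mounted: Dict[str, AccessPoint] = {"DFSC1001:": help_ap}
logical_names: Dict[str, str] = {"HELP_LIBRARY": "DFSC1001:"}


def describe_target(device_or_logical: str) -> str:
    """Show which server location a client device or logical name refers to."""
    name = device_or_logical.rstrip(":")
    # Translate a logical name if one is defined; otherwise treat the
    # argument as a device name.
    device = logical_names.get(name, name + ":")
    ap = mounted[device]
    return f"{ap.server_node}::{ap.device}{ap.directory}"


print(describe_target("HELP_LIBRARY"))
print(describe_target("DFSC1001:"))
```

Both calls resolve to the same server directory, which is the point of the correlation: the user names only the client device or its logical name, never the server node.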

1.1.3. DECdfs Management Components

DECdfs consists of three cooperating management components: the server, the client, and the Communication Entity. Each has a name, attributes describing it, and an interface supporting management operations. Sections 1.1.3.1, 1.1.3.2, and 1.1.3.3 describe the function of each component.

1.1.3.1. The Server

Adds and removes access points

Interacts with the OpenVMS operating system to verify user access rights and manipulate files

The server also contains the server database, which is the local registry of resources. Information in the server database usually matches information in DECdns namespace, if DECdns is available. Occasionally, however, the server database and DECdns information may differ (see Section 2.3.6, ''Maintaining Consistency with DECdns'').

DFS$SERVER_ACP.EXE, the server's ancillary control process (ACP). The server process name is DFS$00010001_1.

DFSSDRIVER.EXE, the server driver

The installation procedure places these files respectively in the SYS$SYSTEM and SYS$LOADABLE_IMAGES directories.

To manage a DECdfs server, you must prepare the system for the expected amount of use. You can display and set various parameters of the server to improve performance and control use. Chapter 2, "Managing a VSI DECdfs Server" describes the management tasks for servers.

1.1.3.2. The Client

Receives I/O sent to the client device and forwards it to the remote server, through the Communication Entity and the network.

Receives I/O from the remote server and forwards it to the end user.

The client is implemented by the SYS$LOADABLE_IMAGES:DFSCDRIVER.EXE file, which is the DECdfs client device driver.

Chapter 3, "Managing a DECdfs Client" describes the management tasks for a client.

1.1.3.3. The Communication Entity

The Communication Entity passes information between the server or client and the network software. The Communication Entity is automatically part of any DECdfs installation, whether the node is a client only or both a client and a server. Without the Communication Entity, the client and server would not be able to communicate across the network.

Creates DECdfs connections

Controls the flow of data

Interacts with the DECnet software to open logical links (transport connections)

Scans for and times out inactive links

Checks data integrity by performing checksums (if desired)

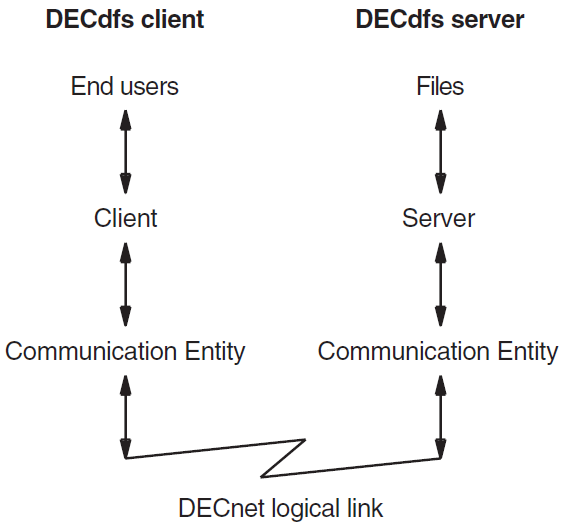

Figure 1.4, ''Server-Client Information Flow'' illustrates the flow of information between client and server as it passes through the Communication Entity and the DECnet software.

A DECdfs connection represents a relationship between a DECdfs server and client through the Communication Entity.

DFS>SHOW COMMUNICATION/CURRENT_CONNECTIONS

A DECdfs connection may or may not have an active DECnet link at a single point in time. When a client first mounts an access point, the Communication Entity requests a logical link from DECnet. All communication between the client and server passes through that link. When the Communication Entity finds that the link was not used during a specified timeout period, it disconnects the link, giving resources back to the server. However, DECdfs stores the server's network address information and maintains the DECdfs connection. The Communication Entity provides links for that connection as needed and times them out as appropriate, until the client device is dismounted.
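The distinction between a long-lived DECdfs connection and its short-lived DECnet links can be sketched in Python as follows. This is a simplified model under stated assumptions: the `Connection` class, its method names, and the 60-second timeout are all hypothetical and chosen only for illustration.

```python
from typing import Optional


class Connection:
    """Toy model: the connection persists while links come and go."""

    def __init__(self, server_address: str, link_timeout: float) -> None:
        self.server_address = server_address  # kept for the life of the connection
        self.link_timeout = link_timeout      # hypothetical value, in seconds
        self.link_active = False
        self.last_used: Optional[float] = None
        self.links_opened = 0

    def send(self, now: float) -> None:
        """Use the link, requesting a new one if none is active."""
        if not self.link_active:
            self.link_active = True
            self.links_opened += 1
        self.last_used = now

    def scan(self, now: float) -> None:
        """Disconnect the link if it was idle for the whole timeout period."""
        idle_too_long = (self.last_used is not None
                         and now - self.last_used >= self.link_timeout)
        if self.link_active and idle_too_long:
            self.link_active = False


conn = Connection("EIDER", link_timeout=60.0)
conn.send(now=0.0)     # first use: a link is requested
conn.scan(now=30.0)    # within the timeout: the link stays up
conn.scan(now=90.0)    # idle too long: the link is dropped
conn.send(now=100.0)   # a new link is provided on demand
print(conn.links_opened, conn.link_active, conn.server_address)
```

The connection object, including the stored server address, survives the link timeout; only the link is torn down and re-created.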

Occasionally, DECdfs creates a connection between a client and server in another way. The DFS$CONTROL command SHOW ACCESS_POINT/FULL displays access point names (from DECdns) and status (from server databases). Entering this command causes DECdfs to create a connection between your node and each node from which you display server database information. Some commands, such as ADD ACCESS_POINT and REMOVE ACCESS_POINT, create DECdfs connections to the local server. You might see such connections when you display the connections on your system.

DFS$COM_ACP.EXE, the communication ACP

DFSRRDRIVER.EXE, the communication driver

The installation procedure places these files respectively in the SYS$SYSTEM and SYS$LOADABLE_IMAGES directories.

1.2. Client-Server Consistency Issues

This section contains information on system times and logical names on the client and server. See Section 2.2.2.3, ''User Names'' for information about consistency of user names on the client and server.

1.2.1. System Times on the Client and Server

Note

If the client and server are in the same time zone, a network time synchronization service can help eliminate problems caused by inconsistent system times. If the client and the server are not in the same time zone, you should set the time on both to Greenwich Mean Time, to avoid time problems caused by geographical differences.

DECdfs treats timestamps differently within files and in file headers. Timestamps recorded in records within files are based on the client system time. Timestamps recorded in file headers (and displayed with the DIRECTORY/DATE or DIRECTORY/FULL command) are usually based on the server system time. Exceptions do exist. For example, if you use the COPY command without specifying an output file name, the command sets the output file's creation date equal to the input file's creation date. The file's timestamp is whatever system time the input file originally had.

1.2.2. Logical Names on the Client and Server

Logical names are valid only on the local system. The client system does not have information about logical names defined on the server. If a user needs to use a logical name to access files on the server, you need to define that logical name on the client system. For example, if you use DECdfs to access a CMS library that users specify with a logical name, define the logical name on the client system to represent the library.

1.3. Comparison of Standard OpenVMS File Access and VSI DECdfs File Access

DECdfs divides standard OpenVMS file access functions between two cooperating nodes.

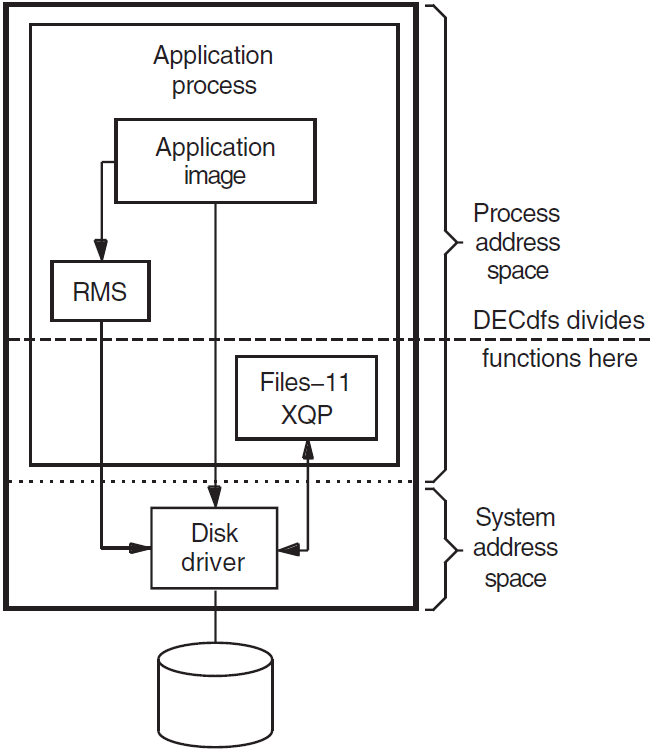

Figure 1.5, ''Standard OpenVMS File Access Functions'' illustrates the standard OpenVMS file access functions and shows where DECdfs divides the file access functions between the client and server. In the figure, an application requests access to a file by entering either a Record Management Services (RMS) call or a QIO directly to the disk driver. The Files-11 extended $QIO system service processor (XQP) opens and accesses the file through the disk driver.

Note

The direction of the arrows in Figure 1.5, ''Standard OpenVMS File Access Functions'' indicates the flow of control (not the flow of information, which exists in both directions).

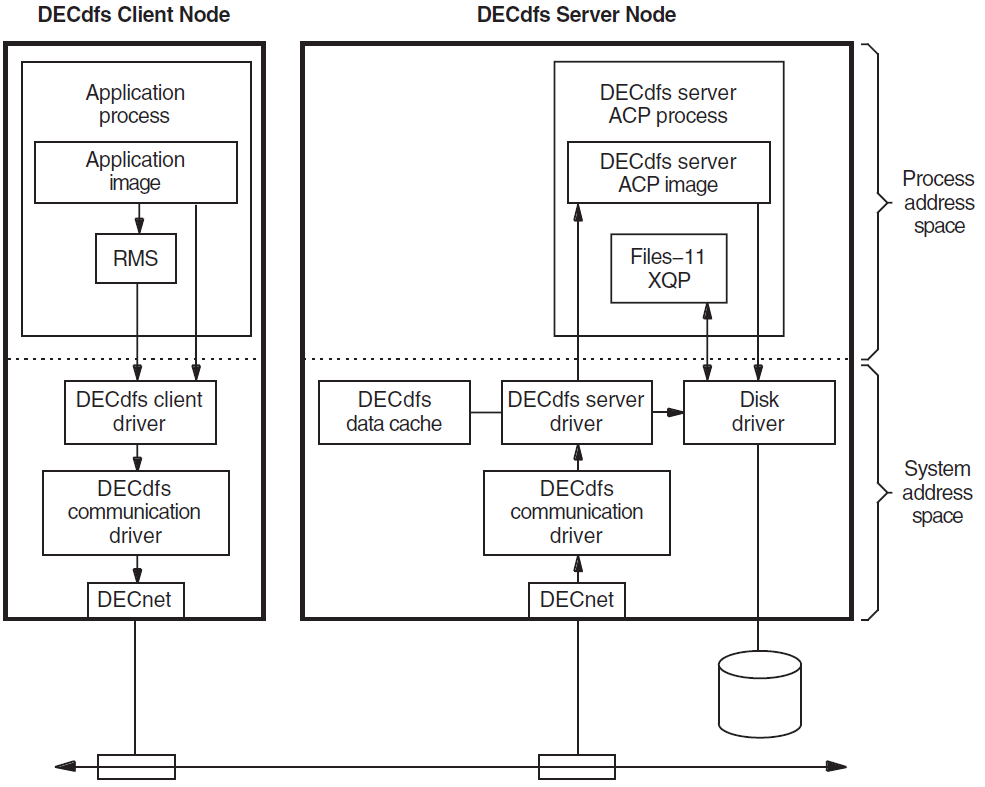

Figure 1.6, ''DECdfs File Access Functions'' shows the same file access functions as those in Figure 1.5, ''Standard OpenVMS File Access Functions'' and illustrates how DECdfs divides the functions between the client and server nodes. The application and RMS remain on the client. To reach the disk driver and the Files-11 XQP, however, the application's request passes through the DECdfs drivers and over the network.

The application sends a request to access a device, either through RMS or by using direct QIO functions. Both access the device driver.

Because the request is sent to a DECdfs client device, the request goes to the DECdfs client driver instead of a standard local disk driver. The DECdfs client driver passes the request to the DECdfs Communication Entity driver.

The Communication Entity forwards the request to DECnet software for transmission over the network.

DECnet software receives the request and passes it to the DECdfs Communication Entity driver.

The communication driver passes the request to the DECdfs server driver.

If the request is a read or write request to an open file, the server driver accesses the disk driver directly.

- If the request is an XQP function, such as a request to open, close, or search a directory for a file, the server driver passes it to the DECdfs server ancillary control process (ACP).

- The server ACP interacts with OpenVMS to validate the user and to access the file.

- The server ACP then passes the request to the disk driver and to the Files-11 XQP for continued file I/O.
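The server-side branching in the last steps can be summarized with a short Python sketch. The function and set names are illustrative labels, not DECdfs identifiers, and the model assumes that only read and write requests on an already open file take the direct path.

```python
from typing import List

# Function names below are illustrative labels, not DECdfs identifiers.
DATA_FUNCTIONS = {"read", "write"}
XQP_FUNCTIONS = {"open", "close", "search"}


def route_request(function: str, file_is_open: bool = False) -> List[str]:
    """Return the components a request visits once it reaches the server driver."""
    if function in DATA_FUNCTIONS:
        if not file_is_open:
            raise ValueError("read/write requires an open file in this model")
        # Data transfer on an open file bypasses the ACP.
        return ["server driver", "disk driver"]
    if function in XQP_FUNCTIONS:
        # File-system functions go through the ACP, which validates the user.
        return ["server driver", "server ACP", "disk driver and Files-11 XQP"]
    raise ValueError(f"unknown function: {function}")


print(route_request("read", file_is_open=True))
print(route_request("open"))
```

The shorter path for reads and writes reflects the text's statement that the server driver accesses the disk driver directly for requests on open files.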

To expedite any repeated use of file blocks and to avoid needless disk access, the DECdfs server uses a file data cache. Section 2.7, ''Managing the Data Cache'' explains this cache in further detail.

1.4. The DFS$CONTROL Commands

DFS$CONTROL is a set of commands that you use to manage DECdfs. The commands allow you to set up, monitor, tune, and customize your DECdfs environment, particularly on a server. However, most parameters to the DECdfs software have default values that should provide a satisfactory balance between economical use of resources and good performance. Generally you need only use DFS$CONTROL to start the DECdfs client, server, and Communication Entity and to add access points on a server or mount them on a client.

| Command | Description |
|---|---|
| Entered at Either a Server or Client | |
| EXIT | Terminates the DFS$CONTROL session. |
| HELP | Displays information on DFS$CONTROL commands. |
| SET COMMUNICATION | Sets parameters for the DECdfs Communication Entity. |
| SHOW ACCESS_POINT | Displays the names of access points stored by DECdns. |
| SHOW COMMUNICATION | Displays information about the DECdfs Communication Entity. |
| SHOW VERSIONS | Displays version information for DECdfs components. |
| SHUTDOWN COMMUNICATION | Stops DECdfs communication after completing file operations in progress. |
| SNAPSHOT COMMUNICATION | Records the current communication counters in DFS$CONTROL memory or in a specified file. |
| START COMMUNICATION | Starts the Communication Entity. |
| STOP COMMUNICATION | Stops DECdfs communication immediately. |
| Entered at a Server Only | |
| ADD ACCESS_POINT | Makes an access point available by registering it in the server database and with DECdns. |
| REMOVE ACCESS_POINT | Removes an access point name from the server database and from DECdns. |
| SET SERVER | Sets parameters for the DECdfs server. |
| SHOW SERVER | Displays information about the DECdfs server. |
| SNAPSHOT SERVER | Records the current server counters in DFS$CONTROL memory or in a specified file. |
| START SERVER | Starts the DECdfs server. |
| STOP SERVER | Stops the DECdfs server. |
| Entered at a Client Only | |
| DISMOUNT | Makes a DECdfs client device (and therefore an access point) unavailable to users. |
| MOUNT | Mounts an access point as a DECdfs client device. |
| SHOW CLIENT | Displays information about a DECdfs client device. |
| SNAPSHOT CLIENT | Records the current client counters in DFS$CONTROL memory or in a specified file. |

See Chapter 4, "DFS$CONTROL Commands" for a dictionary of DFS$CONTROL commands.

1.4.1. Using DFS$CONTROL Commands in DECdfs Command Files

| File | Comment |
|---|---|
| DFS$STARTUP.COM | Do not edit this file, but note that it executes DFS$CONFIG and DFS$SYSTARTUP, both of which you may edit. |
| DFS$CONFIG.COM | This file contains the SET commands that set parameters for the DECdfs server and Communication Entity. DFS$STARTUP executes this file before it starts the DECdfs processes. The commands have default values, so edit this file only if you want to change the parameter values. |
| DFS$SYSTARTUP.COM | This file contains commands that add access points at a server and mount access points at a client. On a server, keep this file up to date to add the access points each time DECdfs starts up. On a client, use this command file to mount access points for systemwide use. |
| OpenVMS VAX Version 5.5-2 | |
| SYSTARTUP_V5.COM | Edit the system SYSTARTUP_V5 file so that it executes the SYS$STARTUP:DFS$STARTUP command file. DECnet startup must complete before DECdfs startup begins. SYSTARTUP_V5.COM is in the SYS$MANAGER directory. |
| OpenVMS VAX Version 6.n, OpenVMS Alpha Version 6.n, OpenVMS Alpha Version 7.n | |
| SYSTARTUP_VMS.COM | Edit the system SYSTARTUP_VMS.COM file so that it executes the SYS$STARTUP:DFS$STARTUP command file. DECnet startup must complete before DECdfs startup begins. SYSTARTUP_VMS.COM is in the SYS$MANAGER directory. |

1.4.2. Using DFS$CONTROL Commands Interactively

- Preface the command with the string DFSCP as shown in the following example:

$DFSCP :== $DFS$CONTROL

$DFSCP SHOW VERSIONS

- Invoke the DFS$CONTROL program, invoke the DFS prompt, and enter commands as shown in the following example:

$RUN SYS$SYSTEM:DFS$CONTROL

DFS>SHOW VERSIONS

You can use other commands either interactively or by executing the DFS$CONFIG or DFS$SYSTARTUP command files. If you choose to interactively enter a command that one of these files usually executes, edit the file to reflect any new values that you have set. This ensures that, for DFS$CONFIG, the next startup uses the most recent value or, for DFS$SYSTARTUP, your system adds or mounts all access points.

1.4.3. Getting Help with DECdfs

The DFS$CONTROL HELP command displays a list of topics on which you can obtain information. Entering HELP and a command name displays information on the specified command.

$HELP DFS

Chapter 2. Managing a VSI DECdfs Server

Managing a VSI DECdfs for OpenVMS server involves first preparing the system for use by DECdfs and then using DFS$CONTROL commands to create one or more access points and make them available. If you choose, you can also use DFS$CONTROL commands to tailor the operation of the server and the Communication Entity to enhance performance.

Setting system parameters

Setting up proxy accounts

Creating and managing access points

Protecting server files

Protecting individual files

Managing the persona cache

Managing the data cache

Using a cluster as a DECdfs server

Stopping and starting DECdfs on your system

Most of these tasks involve the use of DFS$CONTROL commands and qualifiers. For complete information on a command, see Chapter 4, "DFS$CONTROL Commands".

After you read this chapter, set the necessary system and network parameters and edit the DFS$CONFIG.COM and DFS$SYSTARTUP.COM files. You can then start DECdfs on your system by executing the SYS$STARTUP:DFS$STARTUP.COM file.

2.1. Setting System Parameters

Running DECdfs on an OpenVMS system requires that you adjust certain system generation (SYSGEN) parameters. Before installation, change the CHANNELCNT, NPAGEDYN, GBLPAGES, GBLSECTIONS, and INTSTKPAGES (VAX only) parameters as directed in the VSI DECdfs for OpenVMS Installation Guide. On OpenVMS VAX systems, increasing the INTSTKPAGES parameter is especially important. If the number of interrupt stack pages is not large enough, an interrupt stack overflow can cause your system to halt.

Sections 2.1.1, 2.1.2, and 2.1.3 describe DECdfs Communication Entity and server parameters that work with each other and with system and network parameters. These sections describe the parameters that limit the number of open files and the amount of DECdfs activity.

The parameters work together in a layered manner; that is, you can set parameters at the system level, network level, or application DECdfs level. Setting a low value at any one of those levels affects the server's operation, even if you set higher values at the other levels. For example, if you specify that the DECnet network should establish very few logical links to and from your system, the low number of links prevents DECdfs from establishing a high number of connections.
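
The layering can be pictured as taking the lowest cap across the levels. The following Python sketch is illustrative only: the function name and the sample numbers are hypothetical and are not DECdfs or DECnet values, and the real interaction between parameters may be less direct than a simple minimum.

```python
def effective_limit(system_limit: int, network_limit: int, dfs_limit: int) -> int:
    """Return the cap that actually applies when three layers each impose one."""
    # The lowest cap at any layer wins, regardless of higher values elsewhere.
    return min(system_limit, network_limit, dfs_limit)


# Hypothetical example: the network layer allows only 32 logical links, so
# DECdfs cannot establish more than 32 connections even if it is set to 100.
print(effective_limit(system_limit=200, network_limit=32, dfs_limit=100))  # prints 32
```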

For information about limiting logical links at the network level, see Appendix C, "Adjusting DECnet and Client RMS Parameters to Enhance Performance".

2.1.1. Limiting the Number of Open Files

Your system's channel count parameter, CHANNELCNT, specifies the maximum number of files that any process on the system can open concurrently. Each file requires one channel, and the DECdfs server process opens all local files that users at DECdfs clients access. If the server is your system's most active file user, you may need to increase the channel count to accommodate the server.

$RUN SYS$SYSTEM:SYSGEN
SYSGEN>USE CURRENT
SYSGEN>SHOW CHANNELCNT

Parameter Name   Current  Default  Minimum  Maximum  Units     Dynamic
--------------   -------  -------  -------  -------  -----     -------
CHANNELCNT           202      127       31     2047  Channels

MIN_CHANNELCNT = 265

$RUN SYS$SYSTEM:SYSGEN
SYSGEN>HELP PARAMETERS SPECIAL_PARAMS CHANNELCNT

2.1.2. Controlling DECdfs Activity

DFS>SET COMMUNICATION/REQUESTS_OUTSTANDING_MAXIMUM=value

If the number of requests arriving from client systems exceeds the Communication Entity's permitted number of outstanding requests, the Communication Entity stops accepting data from DECnet. The DECnet network layer buffers the requests until the requests reach the value specified by one of these parameters:

- DECnet Phase IV: PIPELINE QUOTA parameter
- DECnet Phase V: MAXIMUM WINDOW parameter

For more information on these parameters, see Appendix C, "Adjusting DECnet and Client RMS Parameters to Enhance Performance".

When the limit is reached, DECnet's flow control mechanism stops the client from sending data and returns an error message.

2.1.3. Limiting Inactive DECdfs DECnet Links

The DECdfs Communication Entity monitors the DECnet links, using the time interval specified by the SET COMMUNICATION/SCAN_TIME command. If the Communication Entity finds that a link is inactive on two successive scans, it disconnects the link. The link is reestablished when a user on that client next requests a file operation. The Communication Entity maintains the DECdfs connection even after it times out a link.
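
The two-scan rule described above can be sketched as follows. This Python model is an illustration under stated assumptions, not the Communication Entity's implementation: the `Link` class, its fields, and the `scan` function are hypothetical names introduced here.

```python
from dataclasses import dataclass


@dataclass
class Link:
    name: str
    active_since_last_scan: bool = False  # set whenever traffic is seen
    idle_scans: int = 0                   # consecutive scans with no traffic
    connected: bool = True


def scan(links):
    """Run one scan pass; disconnect links found idle on two successive scans."""
    for link in links:
        if not link.connected:
            continue
        if link.active_since_last_scan:
            link.idle_scans = 0
        else:
            link.idle_scans += 1
        link.active_since_last_scan = False  # reset for the next interval
        if link.idle_scans >= 2:
            link.connected = False
```

In this model, a link that carried traffic during the last interval survives at least two more scans, which matches the behavior of timing out only after two successive inactive scans.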

2.2. Setting Up Proxy Accounts

Client users must have OpenVMS proxy accounts in order to access the server. You use the Authorize Utility (AUTHORIZE) to create these accounts. The Authorize Utility modifies the network user authorization file, NETPROXY.DAT, so that users at DECdfs clients get the necessary rights and privileges at the server. For information on AUTHORIZE commands, see the VSI OpenVMS System Management Utilities Reference Manual.

Each remote user can be granted DECnet proxy access to multiple accounts. One of the accounts can be the default proxy account for that user. The DECdfs server recognizes only default proxy accounts.

$SET DEFAULT SYS$SYSTEM
$RUN AUTHORIZE
UAF>ADD/PROXY EGRET::CHRIS STAFF /DEFAULT
UAF>EXIT

To give users access to the DECdfs server without giving them explicit proxy accounts, create a default DECdfs account (DFS$DEFAULT).

$SET DEFAULT SYS$SYSTEM
$RUN AUTHORIZE
UAF>ADD DFS$DEFAULT -
/NOACCESS=(PRIMARY, SECONDARY) -
/ASTLM=0 -
/BIOLM=0 -
/BYTLM=0 -
/CLI=no_such_cli -
/CLITABLES=no_such_tbl -
/CPUTIME=::.01 -
/DEFPRIVILEGES=NOALL -
/DEVICE=NLA0: -
/DIOLM=0 -
/DIRECTORY=[no_such_directory] -
/ENQLM=0 -
/FILLM=0 -
/FLAGS=(CAPTIVE, DEFCLI, DISCTLY, DISMAIL, DISNEWMAIL, DISRECONNECT, -
DISWELCOME, LOCKPWD, PWD_EXPIRED, PWD2_EXPIRED, RESTRICTED) -
/GENERATE_PASSWORD=BOTH -
/JTQUOTA=0 -
/LGICMD=no_such_file -
/OWNER="Distributed File Service" -
/PGFLQUOTA=0 -
/PRCLM=0 -
/PRIORITY=0 -
/PRIVILEGES=NOALL -
/PWDEXPIRED -
/PWDLIFETIME=::.01 -
/PWDMINIMUM=31 -
/TQELM=0 -
/UIC=[ggg,mmm] -
/WSDEFAULT=0 -
/WSEXTENT=0 -
/WSQUOTA=0
UAF>EXIT
$

The example illustrates creating a well-protected default DECdfs account that is fully usable by DECdfs. See the VSI OpenVMS Guide to System Security for information on default network accounts. Use care in setting up the account to ensure that DECdfs users have the rights and privileges necessary to access the files they need. If you create a DFS$DEFAULT account, all users without explicit proxy accounts have the rights, privileges, and identity of DFS$DEFAULT.

The DFS$DEFAULT account in Example 2.1, ''Creating a DFS$DEFAULT Account'' can also serve as a model for an individual proxy account that gives DECdfs users access to the server while preventing other types of access. For detailed information about creating proxy accounts, see the VSI OpenVMS Guide to System Security, the VSI OpenVMS DECnet Network Management Utilities manual, and the VSI DECnet-Plus for OpenVMS Network Management Guide.

2.2.1. Setting Up Privileges

The privileges that affect file-access checking (BYPASS, GRPPRV, READALL, and SYSPRV) also control DECdfs access to files.

If the proxy account or DFS$DEFAULT account has any of these privileges as default privileges, the DECdfs server uses them to allow access to files.

Note

Dynamic enabling and disabling of privileges differs from ordinary DECnet file-access checking, which can use only the default privileges of the proxy or default account.

Allowing SETPRV as an authorized privilege for a DECdfs proxy account or the DFS$DEFAULT account is the same as allowing all privileges as authorized privileges.

2.2.2. Setting Up UICs, ACLs, and User Names

In some circumstances, the difference between the server environment and the client environment can become obvious to users. This section explains how user identification codes (UICs), access control lists (ACLs), and user names can cause operational discrepancies between the server and client.

2.2.2.1. User Identification Codes

The OpenVMS system on the server interprets a file's user identification code (UIC) according to its rights database (RIGHTSLIST.DAT). The OpenVMS system stores a file owner's UIC as a binary value, which it translates to ASCII according to the rights database when displaying the UIC to a user. When a user at a DECdfs client requests the UIC of a file, the server system passes the binary value to the client system.

If the file UIC and proxy account UIC are the same, DECdfs converts the file UIC to the client account UIC. Otherwise, when the client system translates the binary UIC according to the client system's rights database, the translation might seem incorrect to users at the client system.

- BACKUP
- DIRECTORY, with the /OWNER, /FULL, or /SECURITY qualifier
- SET FILE, with the /OWNER_UIC qualifier

Note

Client users can avoid problems with the BACKUP command by using the /BY_OWNER=PARENT or /BY_OWNER=ORIGINAL qualifier as described in Section 3.4.2, ''User Identification Codes on Server Files''.

For more information about UICs, see Section 3.4.2.

2.2.2.2. Access Control Lists

The OpenVMS system on the server also interprets a file's access control lists (ACLs) according to its rights database. It propagates default access control entries (ACEs) for DECdfs users' files from the directory in which it creates those files. The OpenVMS system enforces ACEs on files at the server; you can log in to the server and set ACEs that control DECdfs access to files. However, users cannot set or display ACLs from a DECdfs client. For more information on ACLs and ACEs, see Section 2.5, ''Protecting Individual Files''.

2.2.2.3. User Names

With applications that require user names, a discrepancy can occur if a user has different user names on the client and the server. If the user sometimes accesses the application from a DECdfs client and, at other times, locally from the server, certain operations of the application can fail.

For example, DEC Code Management System (CMS) reserves and replaces software components according to user name. When a user reserves and removes a component, CMS stores that person's user name in its library data file. When the user attempts to replace the component, CMS compares the current user name with the stored name. If the names do not match, the user cannot replace the component. Suppose the CMS libraries are on a server, and a user reserves a library component when running CMS at a client. If the user later logs in to the server and tries to replace the component, CMS rejects the replacement operation unless the user names match.

2.2.3. Giving Cluster Clients Access to Server Files

If the client node is a cluster system, enable the cluster alias outgoing on the client node (see Section 3.8, ''Using a Cluster as a DECdfs Client'') and add a proxy on the server from the cluster's user to the local user account. This allows users to access DECdfs files regardless of which cluster member they log in to.

UAF>ADD/PROXY client-cluster-name::remote-user user-name /DEFAULT

UAF>ADD/PROXY NODE_A::B_WILLIAMS B_WILLIAMS /DEFAULT
UAF>ADD/PROXY NODE_B::B_WILLIAMS B_WILLIAMS /DEFAULT
UAF>ADD/PROXY NODE_C::B_WILLIAMS B_WILLIAMS /DEFAULT

2.2.4. Allowing Client Users to Print Server Files

To allow client users to print files from your server, you must create special proxy accounts. The OpenVMS print symbiont runs under the SYSTEM account. The client SYSTEM account therefore needs proxy access to your server in order to print files for users.

Giving another node's SYSTEM account proxy access to your node is an issue to resolve according to the security needs at your site.

UAF>ADD/PROXY client-node-name::SYSTEM user-name /DEFAULT

UAF>ADD/PROXY EAGLE::SYSTEM JULIE

Use the Authorize Utility to create a special proxy account for client printing. You can name this account DFS$PRINT.

Set up the account to resemble the DFS$DEFAULT account shown in Example 2.1, ''Creating a DFS$DEFAULT Account'', but replace the /DEFPRIV=NOALL qualifier with /DEFPRIV=READALL and use a different password for the /PASSWORD qualifier.

After creating the DFS$PRINT account, give the client time-sharing node's SYSTEM account proxy access to it.

However, this method might have a security weakness because it lets the system account at the client read any DECdfs-served file on the server.

Printing files that have the WORLD READ protection setting

Using the PRINT/DELETE command for files that have the WORLD DELETE protection setting

Note

If the client is a time-sharing system or a cluster, see Section 3.6, ''Printing Files from a Client Device'' for information about using the /DEVICE qualifier with the DFS$CONTROL command MOUNT.

UAF>ADD/PROXY client-node-name::SYSTEM SYSTEM /DEFAULT

Warning

In a large network, using a wildcard to give multiple SYSTEM accounts (*::SYSTEM) access to any nondefault account on your system can be a serious breach of your system's security. This is especially true of giving such access to your SYSTEM account.

2.3. Creating and Managing Access Points

This section describes the following tasks:

- Deciding where to place access points
- Adding access points
- Changing access points
- Maintaining consistency between the server and DECdns

2.3.1. Deciding Where to Place Access Points

Each time you add an access point on a DECdfs server, you specify a device and directory to which the access point name refers. The DFS$CONTROL command ADD ACCESS_POINT requires a device name and gives you the option of supplying a directory. The default directory is the master file directory for the device ([000000]), but you can place the access point lower in the directory tree. This placement affects the user's perception of the directory structure.

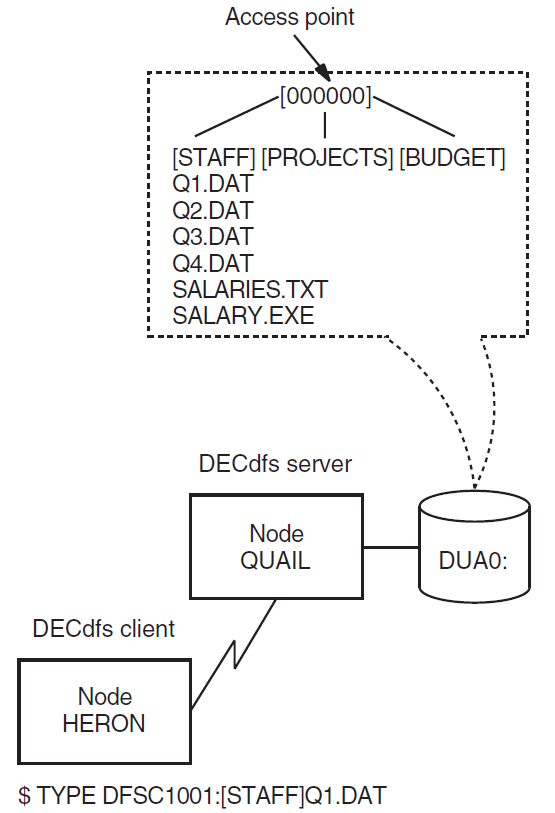

If you place the access point at the device's actual master file directory, end users can access files in the disk's directories as they normally would. Figure 2.1, ''Access Point at the Master File Directory'' illustrates this placement, with the access point at the master file directory. The user enters a command that accesses one of the first subdirectories.

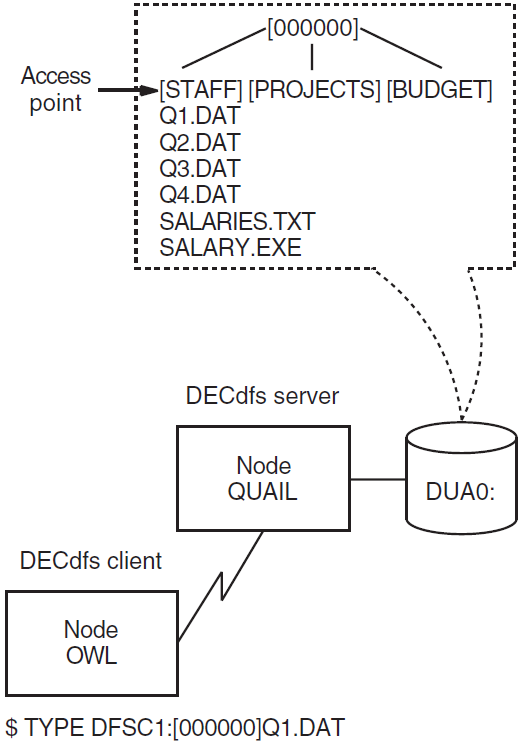

If you place the access point at a subdirectory of the master file directory, that subdirectory appears on the client device as a master file directory. To perform file operations in that directory, end users would have to specify the directory as [000000] in their file specifications. Figure 2.2, ''Access Point at a Subdirectory'' illustrates this access point placement.

The figure shows that [000000] is the actual master file directory for the disk, as viewed from the server. The user command, however, uses [000000] to represent the master file directory for the client device, which is the server directory at which you placed the access point.

The user at a DECdfs client can create subdirectories to the usual OpenVMS depth limit of 8, starting with the master file directory of the client device. If the master file directory on the client device is a subdirectory at the server, the user can create subdirectories that are hidden from OpenVMS at the server. These DECdfs subdirectories can nest as many as eight additional directories at the server. Backing up the server disk includes these DECdfs subdirectories only if you use the /IMAGE or /PHYSICAL qualifier to the BACKUP command. This is similar to what happens when you create rooted-device logical names in OpenVMS (see the Guide to OpenVMS File Applications).

2.3.2. Adding Access Points

To add an access point, you use the DFS$CONTROL command ADD ACCESS_POINT on the VSI DECdfs server that contains the resource you want to make available. To make the access point available, you enter the DFS$CONTROL command MOUNT on a VSI DECdfs client. Refer to Chapter 4, "DFS$CONTROL Commands" for detailed information on all DFS$CONTROL commands.

The ADD ACCESS_POINT command requires that you specify a device and optionally allows you to specify the directory to which the access point refers. When you enter the command, DECdfs adds this information to your node's server database. DECdfs also sends the access point name and your DECnet address information to the Digital Distributed Name Service (DECdns) if this service is available on your system.

Each access point name can contain from 1 to 255 characters. The name can consist of alphanumeric characters and underscores (_); a name in a hierarchical DECdns namespace can also contain period (.) characters. The dollar sign ($) is reserved for use by VSI.
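
As an illustration of the naming rules above, the following Python sketch checks a candidate name before you enter it. It is a minimal example under stated assumptions: the function name is hypothetical, it assumes ASCII alphanumeric characters, it rejects the reserved dollar sign outright, and it does not handle a namespace prefix or check the conventions of your own DECdns namespace.

```python
import re


def is_valid_access_point_name(name: str, hierarchical: bool = False) -> bool:
    """Check a name against the length and character rules stated above."""
    if not 1 <= len(name) <= 255:
        return False
    # Periods are accepted only for names in a hierarchical namespace.
    allowed = r"[A-Za-z0-9_.]+" if hierarchical else r"[A-Za-z0-9_]+"
    return re.fullmatch(allowed, name) is not None
```

For example, `is_valid_access_point_name("HELP")` returns `True`, while a name containing a period is accepted only when `hierarchical=True`.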

It is important to discuss access point names with your DECdns manager before you attempt to create any. Each access point name in a DECdns namespace must be unique, and the names that you create must follow the conventions for your namespace. The organization of the namespace as single-directory or hierarchical also affects the types of names that you create.

A client node typically has one or more remote access points that are mounted automatically during system startup. At the conclusion of VSI DECdfs startup, the startup procedure looks for the file SYS$STARTUP:DFS$SYSTARTUP.COM and runs it. The file typically contains a series of DFS mount commands to mount the usual access points. If you want to mount access points from clients that are not running DECdns (refer to Section 2.3.2.2, ''Systems Without DECdns''), you can edit DFS$SYSTARTUP.COM to include the appropriate /NODE qualifiers.

System managers responsible for a number of clients typically maintain a master DFS$SYSTARTUP.COM file which is distributed to the clients each time it is updated.

If you add an access point interactively, it is important to edit the DFS$SYSTARTUP command file. In this way, the server automatically adds the access point the next time that the DECdfs server starts up.

VSI recommends that you add access points that refer to an actual directory path, and not a directory alias. For example, on the OpenVMS system disk, the directory SYS$SYSDEVICE:[SYS0.SYSCOMMON] is an alias for the directory SYS$SYSDEVICE:[VMS$COMMON]. VSI recommends using SYS$SYSDEVICE:[VMS$COMMON] as the access point directory. DECdfs cannot properly derive a full file specification when translating a file identification (FID) whose directory backlinks point to a directory different from the access point directory. If the access point does refer to a directory alias, incorrect backlink translation affects the SHOW DEVICE/FILES and SHOW QUEUE/FULL commands.

2.3.2.1. Systems with DECdns

- The manager at DECdfs server node EIDER adds access point HELP, as follows:

DFS>ADD ACCESS_POINT HELP DUA0:[000000]

The access point refers to the master file directory ([000000]) of device DUA0:.

- The manager at the client then mounts access point HELP, producing a client device with the logical name HELP_LIBRARY. The response to the MOUNT command displays the client device unit number as DFSC1001:.

DFS>MOUNT HELP HELP_LIBRARY
%MOUNT-I-MOUNTED, .HELP mounted on _DFSC1001:

DCL commands entered at the client, such as SET DEFAULT and DIRECTORY, operate on the DECdfs client device as on any other device.

$SET DEFAULT HELP_LIBRARY:[000000]
$DIR HELP_LIBRARY:M*.HLB
Directory HELP_LIBRARY:[000000]
MAILHELP.HLB;2     217  29-JUL-1998 14:39:57.50  (RWED,RWED,RWED,RE)
MNRHELP.HLB;2       37  29-JUL-1998 14:41:36.41  (RWED,RWED,RWED,RE)
Total of 2 files, 254 blocks.
$

2.3.2.2. Systems Without DECdns

The current version of VSI DECdfs has been modified to operate without using DECdns to accommodate OpenVMS Alpha systems running DECnet. If you have an OpenVMS Alpha system running DECnet Phase V, refer to Section 2.3.2.

DFS>ADD ACCESS_POINT DEC:.LKG.S.MYDISK DKA300:[000000]

DFS>MOUNT DEC:.LKG.S.MYDISK /NODE=SRVR MYDISK
%MOUNT-I-MOUNTED, DEC:.LKG.S.MYDISK mounted on _DFSC1001:

%DFS-E-NAMSPMSNG, Namespace component of access point is missing

If the access point is served by a cluster system, the node name to be specified depends on the cluster configuration and how the access point is added. Refer to Section 2.8, ''Using a Cluster as a DECdfs Server'' for more information. If the access point is a cluster-wide access point, then the cluster alias can be used for the node name. Otherwise, the name of a specific cluster node, which is known to be serving the access point, must be used.

%SYSTEM-F-NOSUCHNODE, remote node is unknown

%IPC-E-UNKNOWNENTRY, name does not exist in name space

When the /NODE qualifier is specified, DECdns does not check or expand the access point name even if DECdns is present on the system. The /NODE qualifier must be used to mount an access point on a server that does not have DECdns even if the client does have DECdns.

$DEFINE /SYS DFS$DEFAULT_NAMESPACE DEC:
$DFSCP MOUNT .LKG.S.DFSDEV.VTFOLK_DKA3 /NODE=VTFOLK

%DFS-E-NAMSPMSNG, Namespace component of access point is missing

2.3.2.3. Using the /LOCAL Qualifier

The ADD ACCESS_POINT and REMOVE ACCESS_POINT commands include a /LOCAL qualifier, which provides functionality similar to the /NODE qualifier described in Section 2.3.2.2, ''Systems Without DECdns''.

As with MOUNT/NODE, the /LOCAL qualifier prevents any use of DECdns even if it is present. This enables you to use VSI DECdfs without setting up a DECdns namespace and name server even on systems where DECdns is available.

$ DEFINE /SYS DFS$DEFAULT_NAMESPACE DEC:

DFS>ADD ACCESS_POINT .LKG.S.MYDISK /LOCAL
DFS>MOUNT .LKG.S.MYDISK /NODE=VTFOLK

%DFS-E-NAMSPMSNG, Namespace component of access point is missing

Refer to Chapter 4, "DFS$CONTROL Commands" for more information on DFSCP commands.

2.3.3. Determining Access Point Information

DFS>SHOW ACCESS /FULL access-point-name

DFS>SHOW ACCESS /FULL .LKG.S.DFSDSK
DEC:.LKG.S.DFSDSK on BIGVAX::DUA30:[000000]

DFS>MOUNT DEC:.LKG.S.DFSDSK /NODE=BIGVAX

A logical name and other qualifiers may also be specified on the mount command line.

2.3.4. Changing Access Points

Caution

Use caution when removing or changing an access point, because doing so can disrupt the user environment on client systems.

To remove an access point name, enter the REMOVE ACCESS_POINT command. This command removes the name from the server database and from DECdns. However, it does not notify client systems that currently have the access point mounted. On these systems, any subsequent attempt to use the access point will fail except for operations on files that are currently open. Client users will receive an error code identifying the failure.

2.3.5. Removing Access Points Added with the /CLUSTER_ALIAS Qualifier

Removing access points from servers in a cluster sometimes requires extra steps. The original ADD ACCESS_POINT command registers the access point name in both the DECdns namespace and the local server database. The REMOVE ACCESS_POINT command attempts to remove the name from both the DECdns namespace and the local server database. However, if you registered the access point according to its server's cluster alias (that is, the ADD ACCESS_POINT command had the /CLUSTER_ALIAS qualifier), you must perform some extra procedures to remove the access point.

The REMOVE ACCESS_POINT command deletes the DECdns access point name entry. This command also removes the access point from the server's local database, but it does so only on the cluster member at which you enter the REMOVE command. An informational message reminds you of this.

To remove an access point that was registered by cluster alias, you must use the fully expanded access point name on all cluster members except the first server on which you entered the REMOVE ACCESS_POINT command.

DFS>SHOW ACCESS_POINT /LOCAL /FULL

Remove the access point on each server by entering the REMOVE ACCESS_POINT command with this fully expanded access point name and the exact punctuation. When you enter this command at the first DECdfs server, you remove the access point name from the DECdns database. Subsequent REMOVE ACCESS_POINT commands at the other DECdfs servers in the cluster generate warnings that the access point is not in the DECdns namespace, but this does not indicate a problem. When you enter the fully expanded name at each server, you remove the access point from the server's local database.

To continue serving the access point on other cluster members, you can reregister the access point by using the ADD ACCESS_POINT/CLUSTER_ALIAS command on one of the other nodes. This replaces the access point name in the DECdns namespace. Disable the incoming alias on the node (or nodes) from which you removed the access point.

For DECnet Phase IV:

NCP> SET OBJECT DFS$COM_ACP ALIAS INCOMING DISABLED

For DECnet Phase V:

NCL>SET SESSION CONTROL APPLICATION DFS$COM_ACP INCOMING ALIAS FALSE

To disable the incoming alias permanently, edit the NET$SESSION_STARTUP.NCL script file.

2.3.6. Maintaining Consistency with DECdns

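The access point information that DECdns holds can become outdated in either of the following situations:

- Your node or its server becomes unavailable.
- You entered an access point interactively without adding it to the DFS$SYSTARTUP file, and then the server stopped and restarted.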

In either case, DECdns continues to supply outdated information (the access point name and the server's DECnet address information). If a new client attempts to mount the access point, the client receives a message stating that the access point is unavailable. If a client that previously mounted the access point attempts to read or write to an open file, the operation fails with an SS$_INCVOLLABEL error code. If such a client attempts to open a new file or to search a directory on the client device, the client attempts mount verification (see Section 3.4.5, ''DECdfs Mount Verification''), which then fails.

While you cannot prevent the server from being unavailable occasionally, you can prevent the loss of access points by always adding new access points to the DFS$SYSTARTUP file. If you stop the server permanently, be sure to enter a REMOVE ACCESS_POINT command for each access point on your system.

2.4. Protecting Server Files

DECdfs handles security and file access according to OpenVMS conventions, but a few differences exist. DECdfs allows any user to enter a MOUNT command, regardless of volume-level protections. However, DECdfs performs access checking at the time of file access.

The server uses proxy access to verify a user's access to an account (see the VSI OpenVMS Guide to System Security). The server does not perform an actual proxy login, however, since DECdfs accesses a node through the DECdfs server process. The server process performs file operations on behalf of the user at the client, and it impersonates the user by performing these operations in the name of the user's proxy account. Files created on behalf of a client user are therefore owned by the user's proxy account, not by the server process's account. Section 2.2, ''Setting Up Proxy Accounts'' describes more fully how the DECdfs server validates user access.

2.5. Protecting Individual Files

DECdfs allows any user at any DECdfs client to mount an access point. On the server, however, standard OpenVMS file access protection applies to each file. The OpenVMS operating system uses a combination of user identification codes (UICs), privileges, protection settings, and access control lists (ACLs) to validate each file access according to the user's proxy account.

DFS$SERVICE

NETWORK

The DFS$SERVICE identifier applies only to users at DECdfs clients. The NETWORK identifier applies to users at DECdfs clients and all other network users.

You can explicitly place ACLs on DECdfs files only by logging in to the server system. The OpenVMS operating system recognizes the ACLs, so you can use them from the server to protect or grant access to the server files. However, DECdfs suppresses ACLs as seen from the client. A user with access to a DECdfs client device cannot create or view the ACLs on files residing at the server. Using the SET ACL/OBJECT_TYPE=FILE or EDIT/ACL command at a client to modify a server file displays an error message. Entering the DIRECTORY/SECURITY and DIRECTORY/FULL commands returns displays that omit the ACLs on any files in the directory listing.

2.6. Managing the Persona Cache

The server uses a persona cache, which contains information about individual client users. The server uses this information to determine whether a client user has permission to access individual files. This section explains how you control the operation of the persona cache.

When incoming user requests arrive at the server, the server process interacts with the OpenVMS operating system to create or access the requested files. To perform this operation on behalf of a particular user, the server builds a profile of that user. The server checks the NETPROXY.DAT file for the user's proxy account, the SYSUAF.DAT file for the user's privileges and UIC, and the RIGHTSLIST.DAT file for any identifiers granting additional rights.

The server places all of this information in a persona block. When creating or accessing a file on behalf of the user, the server process impersonates the user according to the persona block information. Although the server process itself is interacting with the OpenVMS file system, each file appears to be accessed by, and in accordance with the privileges of, the proxy account.

The persona cache helps to accelerate file access. After the server creates an individual persona block, the server reuses it each time that user accesses another file. This saves time because the server need not reread the NETPROXY.DAT, SYSUAF.DAT, and RIGHTSLIST.DAT files at each file access.

DECdfs automatically sets the size of the cache based on the number of users. As the number of users increases, DECdfs borrows from nonpaged pool to meet the demand. When the number of users decreases, DECdfs returns unused blocks to nonpaged pool.

2.6.1. Specifying the Lifetime of Persona Blocks

Persona blocks have a specified lifetime, which you can adjust by using the SET SERVER/PERSONA_CACHE=UPDATE_INTERVAL command. When the persona block for a user expires, the server validates the user's next access by reading the three authorization files and building a new block. This ensures that, at a specified interval, the DECdfs server automatically incorporates any changes that you make to any of the authorization files.

If DECdfs users at client systems complain that the response time for opening files is too long, consider lengthening the update interval.

2.6.2. Flushing the Cache

You can flush the persona cache by using the SET SERVER/INVALIDATE_PERSONA_CACHE command. This forces the server to build a completely new cache, validating each new user access from the authorization files. You can flush the persona cache after making changes to access rights or proxy accounts without waiting for the update interval to expire.

You need to restart the server if you have replaced the RIGHTSLIST.DAT file by copying the file or changing the file's logical name assignment. You do not need to restart the server if you have replaced or copied the NETPROXY.DAT file or SYSUAF.DAT file or if you have changed either of these two files' logical name assignments.

2.6.3. Displaying Cache Counters

DFS>SHOW SERVER/COUNTERS

| Counter | Description |
|---|---|
| Persona Blocks Active | The current number of simultaneously active persona blocks. |
| Maximum Persona Blocks Active | The highest number of simultaneously active persona blocks since the server started. |
| Persona Cache Blocks Allocated | The current number of allocated persona blocks. This includes a count of both currently active and inactive persona blocks. |
| Maximum Persona Cache Blocks Allocated | The highest number of allocated persona blocks since the server started. This tells how large the cache has been since the last startup. |
| Persona Cache Hits | The number of times the server was able to reuse an existing persona block to satisfy an incoming file request. |
| Persona Cache Misses | The number of times the server was forced to build a new persona block to satisfy a new file request. |
| Persona Cache Threshold | The number of preallocated persona blocks that the server maintains. |
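
One way to read the Persona Cache Hits and Persona Cache Misses counters together is as a hit ratio. The following Python sketch shows the arithmetic. The function name and the sample counter values are hypothetical, and the guide does not state a target ratio.

```python
def persona_hit_ratio(hits: int, misses: int) -> float:
    """Fraction of file requests served by an existing persona block."""
    total = hits + misses
    # With no requests recorded yet, report 0.0 rather than dividing by zero.
    return hits / total if total else 0.0


# Hypothetical counter values read from SHOW SERVER/COUNTERS output.
print(persona_hit_ratio(hits=900, misses=100))  # prints 0.9
```

A ratio that stays low while users report slow file opens may suggest that persona blocks are expiring too often for the site's usage pattern.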

2.7. Managing the Data Cache

Managing the data cache involves periodically using the server counters to monitor DECdfs performance, reassess server use, and tune the data cache parameters to maintain good performance.

The DECdfs server data cache improves performance by caching blocks of files to expedite the repeated use of files or parts of files. Many files on a system, such as command procedures or executable files, are used repeatedly. In addition, during access of a file, the same blocks in the file are often read and written many times. DECdfs stores file data in its data cache to eliminate unnecessary disk accesses. The caching takes place on both read and write requests.

To further improve performance, DECdfs prefetches subsequent blocks from files being accessed sequentially; that is, during sequential file access operations, DECdfs anticipates your needs, moving data from the disk to the cache so it is available when you actually request it.

The server's data cache is a write-through cache. It does not affect standard RMS caching, which occurs on the client system.

2.7.1. Specifying the Size of the Cache

DFS>SET SERVER/DATA_CACHE=COUNT_OF_BUFFERS

This command allocates a certain number of buffers from nonpaged pool to use in the data cache. The size of each buffer is fixed. Each buffer takes 8192 bytes of data plus 50 bytes of header information, for a total of 8242 bytes.

If you increase the count of buffers past the default value, increase the amount of nonpaged pool (the NPAGEDYN parameter) by a corresponding number of bytes. To do so, modify the SYS$SYSTEM:MODPARAMS.DAT file and rerun AUTOGEN (see the VSI OpenVMS System Manager's Manual).
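
The corresponding increase in nonpaged pool follows directly from the per-buffer size given above. The Python sketch below works through the arithmetic. The function name and the buffer counts in the example are hypothetical, because this section does not state the default count.

```python
BUFFER_DATA_BYTES = 8192
BUFFER_HEADER_BYTES = 50
BUFFER_TOTAL_BYTES = BUFFER_DATA_BYTES + BUFFER_HEADER_BYTES  # 8242 bytes


def extra_nonpaged_pool(default_count: int, new_count: int) -> int:
    """Bytes of additional nonpaged pool needed for buffers beyond the default."""
    extra_buffers = max(0, new_count - default_count)
    return extra_buffers * BUFFER_TOTAL_BYTES


# Hypothetical example: raising the count from 50 to 150 buffers adds
# 100 buffers, or 100 * 8242 bytes.
print(extra_nonpaged_pool(default_count=50, new_count=150))  # prints 824200
```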

2.7.2. Specifying the Per-File Quota

The quota prevents a large sequentially accessed file from taking all the cache buffers while other files are in use.

The server can ignore the quota when necessary. This lets the server better meet the needs of large, frequently accessed files when few or no other files are in use.

DFS>SET SERVER/DATA_CACHE=FILE_BUFFER_QUOTA

When a user makes an initial request for read access to a file, the server moves data from the disk to the cache. As the user continues to request read and write access to the same file, the server continues to allocate buffers to the file. Once the server reaches the quota, however, it reuses a file's buffers, beginning with the one least recently used. If that buffer is currently in use, the server ignores the quota and uses the least recently used available buffer in the cache. If no buffer is currently available in the cache, the file request waits.
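The buffer selection rules described above can be modeled with a short simulation. This is a simplified sketch and not the server's actual implementation: the class and method names are hypothetical, and the choice of buffer for a file that is still below its quota (the least recently used available buffer) is an assumption.

```python
from collections import OrderedDict


class DataCacheSketch:
    """Toy model of the per-file quota rules (hypothetical names throughout)."""

    def __init__(self, buffer_count, file_quota):
        self.file_quota = file_quota
        # Maps buffer number -> owning file (None if never used).
        # Ordering runs from least recently used to most recently used.
        self.lru = OrderedDict((n, None) for n in range(buffer_count))
        # Buffers that are currently in use and cannot be reassigned.
        self.busy = set()

    def pick_buffer(self, file_id):
        """Return the buffer chosen for file_id, or None if the request waits."""
        # Buffers already held by this file, least recently used first.
        owned = [n for n, owner in self.lru.items() if owner == file_id]
        if owned and len(owned) >= self.file_quota and owned[0] not in self.busy:
            # At quota: reuse the file's own least recently used buffer.
            victim = owned[0]
        else:
            # Below quota (assumption), or the file's own LRU buffer is in use:
            # ignore the quota and take the LRU available buffer in the cache.
            victim = next((n for n in self.lru if n not in self.busy), None)
        if victim is None:
            return None  # No buffer is available; the file request waits.
        self.lru[victim] = file_id
        self.lru.move_to_end(victim)  # Mark as most recently used.
        return victim


if __name__ == "__main__":
    cache = DataCacheSketch(buffer_count=3, file_quota=2)
    for _ in range(3):
        print(cache.pick_buffer("A"))
```

With three buffers and a quota of two, the third request for file A reuses the file's own oldest buffer rather than taking the remaining free one, which is the behavior the quota is meant to enforce.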

If you choose to adjust the file buffer quota, consider what types of files you use with DECdfs. If users repeatedly access one large file, such as an executable file or a shared design template, a high file quota can be useful. Adjustments to this value should reflect the patterns of use at your site. To monitor the use and efficiency of the cache, use the SHOW SERVER/COUNTERS command.

2.7.3. Displaying Cache Counters

DFS>SHOW SERVER/COUNTERS

| Counter | Description |
|---|---|
| Data Cache Full | The number of times that the least recently used buffer was currently in use and a request had to wait for a buffer. |
| Data Cache Hits | The number of times that the server was able to satisfy a read request by finding a requested block in the cache. The server therefore avoided accessing the disk. |
| Data Cache Misses | The number of times that the server was unable to satisfy a read request by finding a requested block in the cache. The server was therefore forced to access the disk. |
| Data Cache Quota Exceeded | The number of times that a particular file used more buffers than its specified quota. |
| Physical Writes | The number of times that the server wrote a block to disk. |
| Physical Reads | The number of times that the server read a requested block from disk. |

Frequently high values for the Data Cache Full counter indicate that your server is very busy. When the cache is full and file requests wait for buffering, performance can degrade. Monitor this counter and consider raising the buffer count value if necessary.

Interpret the hits-to-misses ratio according to the application for which you use DECdfs. Sequential accesses should produce a high hits-to-misses ratio because of the prefetching DECdfs performs. Nonsequential accesses (or a very busy server with frequent reuse of cache blocks) can produce a low hits-to-misses ratio. To correct a consistently low hits-to-misses ratio, consider increasing the buffer count value by using the SET SERVER/DATA_CACHE=COUNT_OF_BUFFERS command.
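The ratio discussed above can be computed from the Data Cache Hits and Data Cache Misses counters. The following is a minimal sketch; the function names and the sample counter values are hypothetical, and you would substitute the values reported by SHOW SERVER/COUNTERS.

```python
def hits_to_misses(hits: int, misses: int) -> float:
    """Return the hits-to-misses ratio for the data cache counters."""
    if misses == 0:
        # No misses recorded: the ratio is unbounded if there were any hits.
        return float("inf") if hits else 0.0
    return hits / misses


def hit_rate(hits: int, misses: int) -> float:
    """Return the fraction of read requests satisfied from the cache."""
    total = hits + misses
    return hits / total if total else 0.0


if __name__ == "__main__":
    # Hypothetical counter values read from the server display.
    hits, misses = 900, 100
    print("hits-to-misses ratio:", hits_to_misses(hits, misses))
    print("hit rate:", hit_rate(hits, misses))
```

What counts as a low ratio depends on the workload, as noted above, so compare values against your own site's history instead of a fixed threshold.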

The Physical Writes and Physical Reads counters indicate the number of times the server performed a disk I/O operation.

2.8. Using a Cluster as a DECdfs Server

You can make a device and directory available as an access point from a cluster system by using a cluster alias. A cluster alias serves a single access point from all cluster members when the incoming alias is enabled.

Sections 2.8.1 and 2.8.2 explain how to serve an access point from a cluster alias and from individual cluster members.

2.8.1. Serving an Access Point from a Cluster Alias

Install and start the DECdfs server on each node in the cluster for which the incoming alias is enabled.

Add the access point by using the /CLUSTER_ALIAS qualifier with the ADD ACCESS_POINT command. This supplies DECdns with the cluster alias instead of the node address as the access point's location.

Repeat the same ADD ACCESS_POINT command on each DECdfs server node in the cluster.

After you have completed these steps, a client system that mounts the access point connects to the cluster rather than to a specific node. DECnet software at the cluster chooses the node that will serve the client. The failure of one node does not prevent a DECdfs client from mounting an access point. If the server node involved in a DECdfs communication session becomes unavailable, another cluster member can respond when the DECdfs client tries to reestablish the connection. This allows the DECdfs session to proceed with minimal interruption to the user.

2.8.2. Serving an Access Point from Individual Cluster Members

If you do not enable the cluster alias, or if you have not installed the DECdfs server software on all members of the cluster, you can still serve the same device and directory from multiple nodes. The access point, however, must have a different name on each node. The access point name simply represents an alternative route to the same device and directory.

2.9. Stopping and Starting DECdfs on Your System

DFS>SHOW SERVER /USERS

You can determine whether DECdfs users are accessing a local client by entering the SHOW COMMUNICATION/CURRENT command and looking for active outbound connections. This procedure does not identify users by name. However, you can use the DCL REPLY command to notify those users before stopping the server.

SET SERVER/INVALIDATE_PERSONA_CACHE

SHOW SERVER/USERS

SHOW SERVER/ACTIVE

Note

For DECnet Phase IV:

NCP>SET EXECUTOR STATE OFF

For DECnet Phase V:

NCL>DISABLE NODE 0 ROUTING

NCL>DISABLE NODE 0 NSP

NCL>DISABLE NODE 0 SESSION CONTROL

Note

Make sure DECnet is running before you restart DECdfs. Restarting DECnet or restarting the Communication Entity does not restart the DECdfs server; you must explicitly execute the DECdfs startup command file.

Chapter 3. Managing a DECdfs Client

Managing a VSI DECdfs for OpenVMS client involves coordinating the values of certain interrelated parameters on your system and then mounting DECdfs access points. This creates the client devices on your system.

Setting system parameters

Mounting access points

Displaying client device information

Using the client device

Performing checksum comparisons on DECdfs connections

Printing files from a client device

Using the Backup Utility with a client device

Using a cluster as a DECdfs client

Stopping and starting DECdfs on your system

Most of these tasks involve the use of DFS$CONTROL commands and qualifiers. For complete information on a specific command, see Chapter 4, "DFS$CONTROL Commands". For an overall perspective on DECdfs, read Chapter 2, "Managing a VSI DECdfs Server", even if you manage a client-only node. Certain topics covered in that chapter affect both the client and server.

Note

A major difference between the server and client is as follows: the server resides in its own process on your system, whereas no explicit client process exists. The client resides in the DFSC device driver. Managing a client involves managing the client devices.

3.1. Setting System Parameters

Running DECdfs on a client system may require that you adjust the SYSGEN parameter NPAGEDYN. Adjust this before installation, as described in the VSI DECdfs for OpenVMS Installation Guide.

DECdfs provides excellent performance when your system uses the default network and RMS parameters. However, you might improve DECdfs client performance by setting these parameters as described in Appendix C, "Adjusting DECnet and Client RMS Parameters to Enhance Performance".

3.2. Mounting Access Points

To mount an access point, use the DFS$CONTROL command MOUNT. You can mount only access points that the server manager has added. How access points are added and mounted is described in Section 2.3.2, "Adding Access Points". For further information on the MOUNT command and its qualifiers, refer to Chapter 4, "DFS$CONTROL Commands".

To display a list of the available access points, use the SHOW ACCESS_POINT command. To simplify operation, place the MOUNT commands in the DFS$SYSTARTUP command file.

The MOUNT command mounts the client device to enable access by all users and jobs on the client system. That is, the DFSC device can be accessed by users other than the one who mounted it. However, access to files on the server is controlled based on the client user making the reference, not the user who mounted the device.

%MOUNT-VOLALRMNT, another volume of same label already mounted

If neither the /SYSTEM nor the /GROUP qualifier is specified, the MOUNT command allocates a new DFSC unit even if another user already has the same access point mounted.

3.2.1. Assigning Device Unit Numbers

Mounting an access point creates a new client device on your system. DECdfs copies this device from the template device DFSC0:. DECdfs creates DFSC0: at startup, when it loads DFSCDRIVER.EXE, the client driver image. DECdfs then copies the I/O database data structures for each subsequent DFSC device from the template. As you mount access points, OpenVMS sequentially assigns a unit number to each new DFSC device, starting with unit number 1001. The first access point you mount creates DFSC1001:, the second access point creates DFSC1002:, and so on.

The MOUNT command has a /DEVICE qualifier that allows you to specify the device unit number. If you manage an OpenVMS Cluster system as a DECdfs client, this feature ensures that the same device number is mounted on all cluster members. Otherwise, DECdfs's default numbering could assign different device unit numbers to the same access point on different cluster members.
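The effect of default numbering on cluster members can be illustrated with a short model. This sketch assumes only what is described above (units are assigned sequentially from 1001 in mount order); the function name and the access point names are hypothetical.

```python
def assign_units(mount_order, first_unit=1001):
    """Model default numbering: each mount takes the next DFSC unit number."""
    return {
        access_point: f"DFSC{first_unit + position}:"
        for position, access_point in enumerate(mount_order)
    }


if __name__ == "__main__":
    # Two cluster members mount the same access points in a different order.
    member_a = assign_units(["HELP", "TOOLS"])
    member_b = assign_units(["TOOLS", "HELP"])
    for access_point in ("HELP", "TOOLS"):
        print(access_point, member_a[access_point], member_b[access_point])
```

In this model the same access point ends up on different devices on the two members, which is the situation that specifying the unit number with /DEVICE, or referring to devices by logical name, avoids.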

3.2.2. Assigning Logical Names

When you mount an access point, you can use the MOUNT command parameter local-logical-name to assign a logical name to the DFSC device. VSI recommends that you use logical names. Because the order in which DFSC devices are created can vary, their unit numbers can also vary. Referring to the devices by consistent logical names simplifies both management and use.

3.2.3. Specifying Volume Names

The MOUNT command's /VOLUME_NAME qualifier allows you to specify a volume name for the client device. This name identifies the device in the display from the DCL command SHOW DEVICE.

Note

Specifying a volume name for the client device does not affect the volume name on the actual device at the server.

3.2.4. Enabling Data Checking

Data checking causes the server to ensure the integrity of data between the disk and the OpenVMS system on the server. When you mount an access point, you can request a data check on read-only operations, write-only operations, or both read and write operations for the client device. To do so, include the /DATA_CHECK qualifier with the MOUNT command.

Data checking takes place at the server. You can request data checking on the client device whether or not the system manager at the server mounted the actual physical device with data checking enabled. If the physical device has data checking enabled, your request does not cause redundant data checking. If the device does not have data checking enabled, your request causes data checking only on your own client's use of the access point.

For a description of data checking on a disk, see the VSI OpenVMS I/O User's Reference Manual.

3.2.5. Mounting Alternative Access Points

An access point can be served by a cluster as well as by an individual node. If the server is a common-environment cluster, the DECdfs manager can register the cluster alias as the access point's location. This allows any node to process incoming requests for the access point. Consequently, the client has to mount only the cluster device. For more information on OpenVMS Cluster systems, see the VSI OpenVMS Cluster Systems Manual. For more information on cluster aliases, see the VSI OpenVMS DECnet Network Management Utilities manual or the VSI DECnet-Plus for OpenVMS Network Management Guide.

If the server manager does not want all nodes with incoming alias enabled to serve the access point, he or she can add the access point from more than one node, giving the access point a different, alternative name on each. The client manager can then choose an access point name and can also select another name later if problems arise with the first choice.

3.3. Displaying Client Device Information

$SHOW DEVICE DFSC1:
Device     Device     Error    Volume     Free     Trans   Mnt
 Name      Status     Count    Label      Blocks   Count   Cnt
DFSC1:     Mounted    0        HELP       *****    2       1

With the /FULL qualifier, the command displays the number 4294967295 in the Free Blocks field. This number is always the same and does not actually represent a count of free blocks.

DFS>SHOW CLIENT SATURN
Client Device SATURN (Translates to _DFSC1001:)
Status = Available
Access Point = DEC:.LKG.S.TANTS.RANGER_SATURN
Node = TOOTER
Free blocks = 71358

|

Counter |

Description |

|---|---|

|

File Operations Performed |

The total number of all file (XQP) QIO functions issued to the device. |

|

Bytes Read |