Performance Management Manual

- Operating System and Version:

- VSI OpenVMS IA-64 Version 8.4-1H1 or higher

VSI OpenVMS Alpha Version 8.4-2L1 or higher

VSI OpenVMS x86-64 Version 9.2-2 or higher

Preface

This manual presents techniques for evaluating, analyzing, and optimizing performance on a system running OpenVMS. Discussions address such wide-ranging concerns as:

Understanding the relationship between work load and system capacity

Learning to use performance-analysis tools

Responding to complaints about performance degradation

Helping the site adopt programming practices that result in the best system performance

Using the system features that distribute the work load for better resource utilization

Knowing when to apply software corrections to system behavior—tuning the system to allocate resources more effectively

Evaluating the effectiveness of a tuning operation; knowing how to recognize success and when to stop

Evaluating the need for hardware upgrades

The manual includes detailed procedures to help you evaluate resource utilization on your system and to diagnose and overcome performance problems resulting from memory limitations, I/O limitations, CPU limitations, human error, or combinations of these. The procedures feature sequential tests that use OpenVMS tools to generate performance data; the accompanying text explains how to evaluate it.

Whenever an investigation uncovers a situation that could benefit from adjusting system values, those adjustments are described in detail, and hints are provided to clarify the interrelationships of certain groups of values. When such adjustments are not the appropriate or available action, other options are defined and discussed.

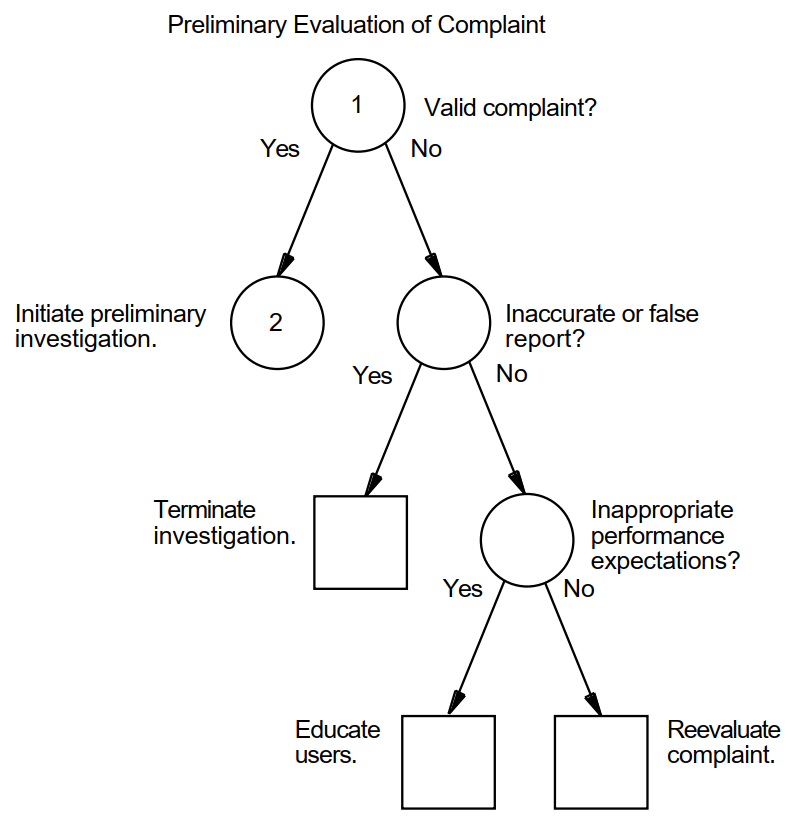

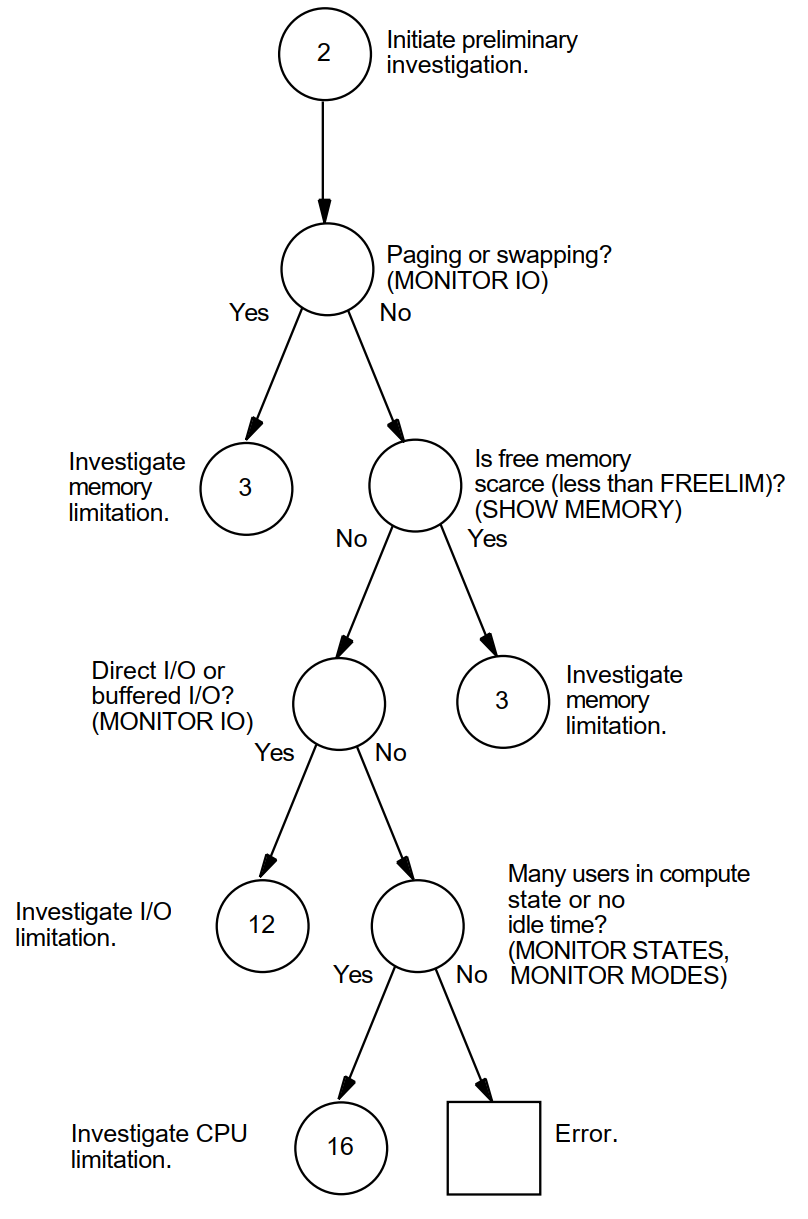

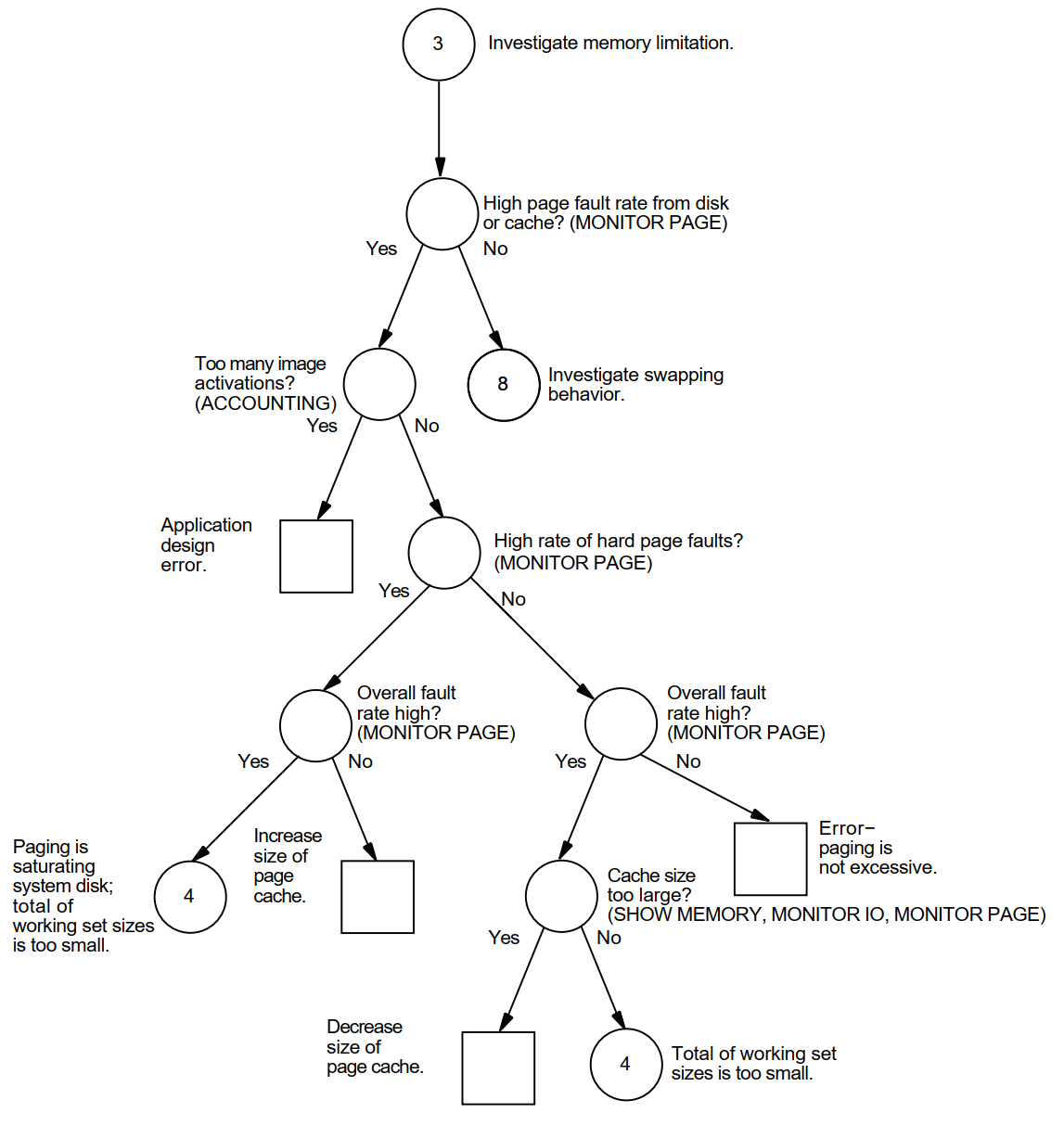

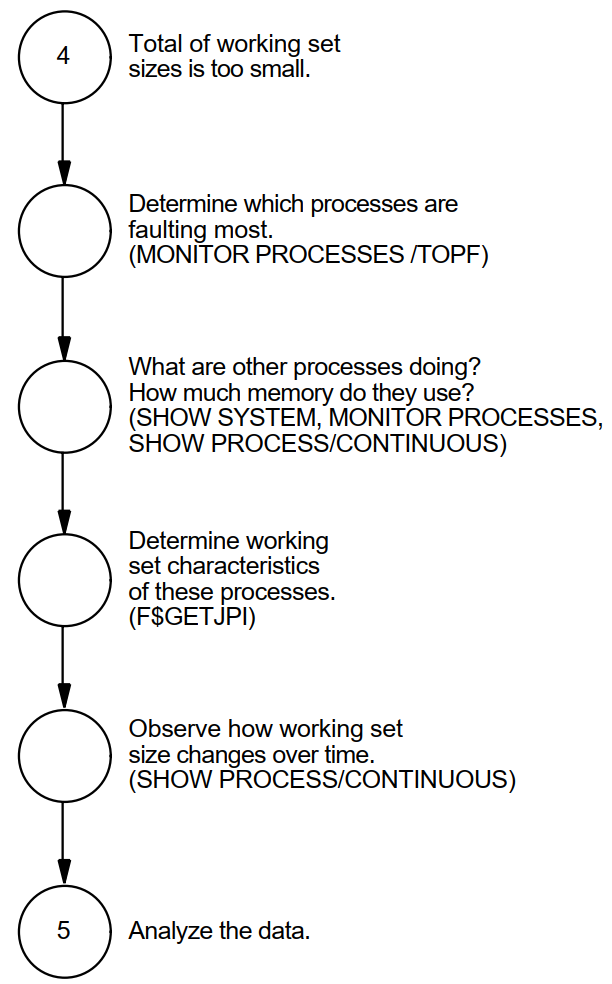

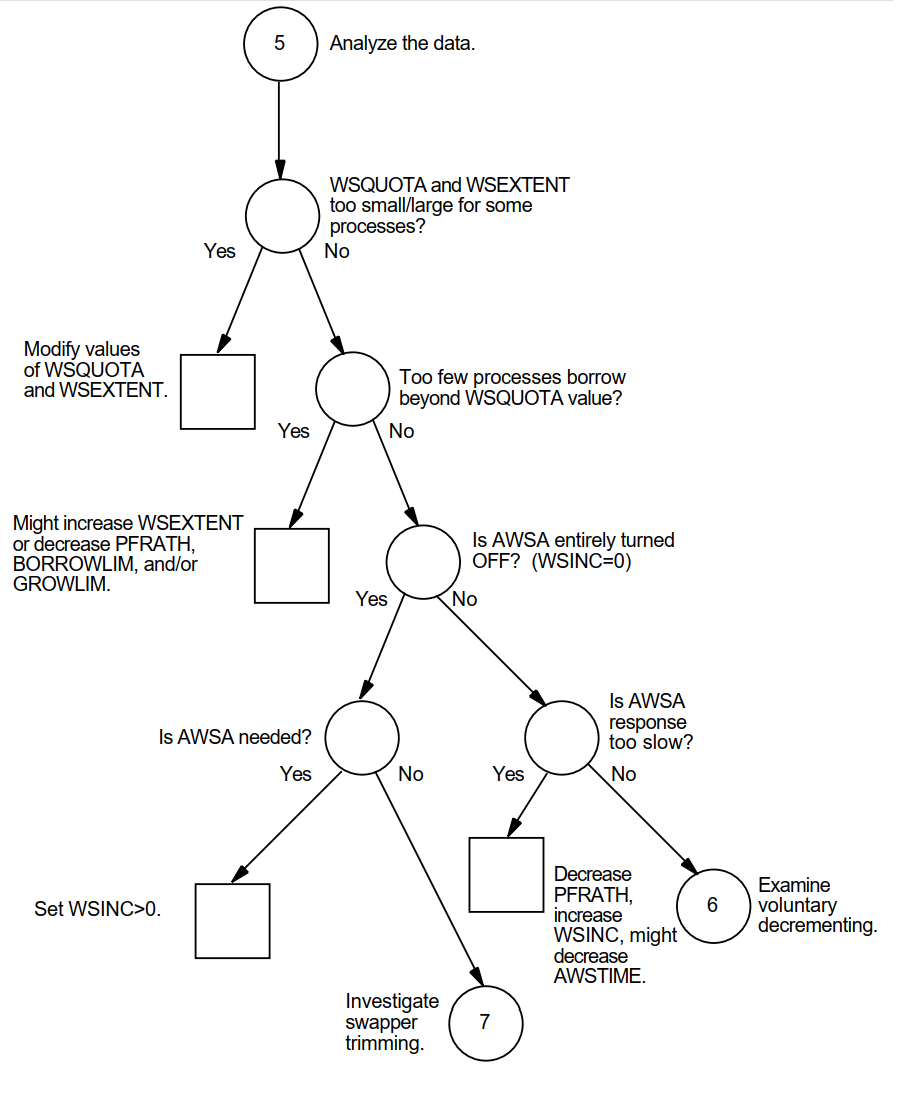

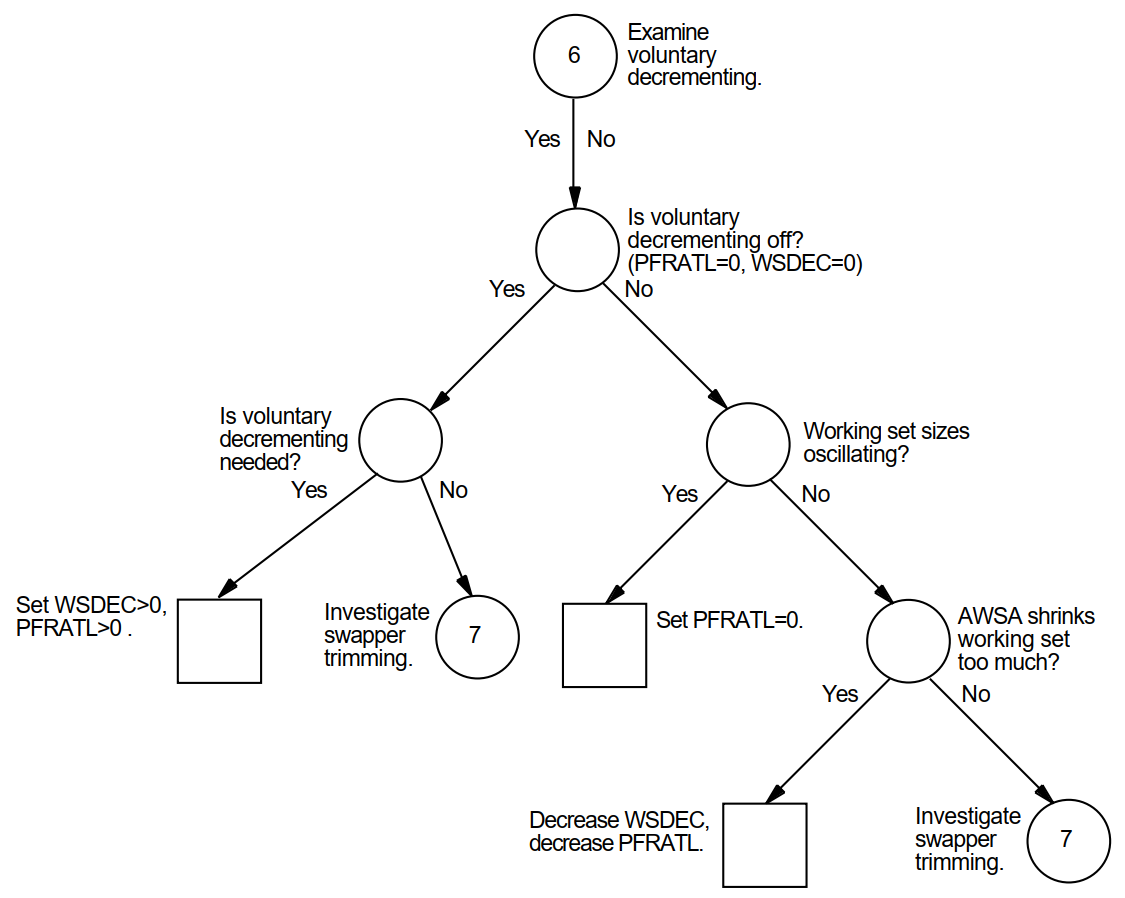

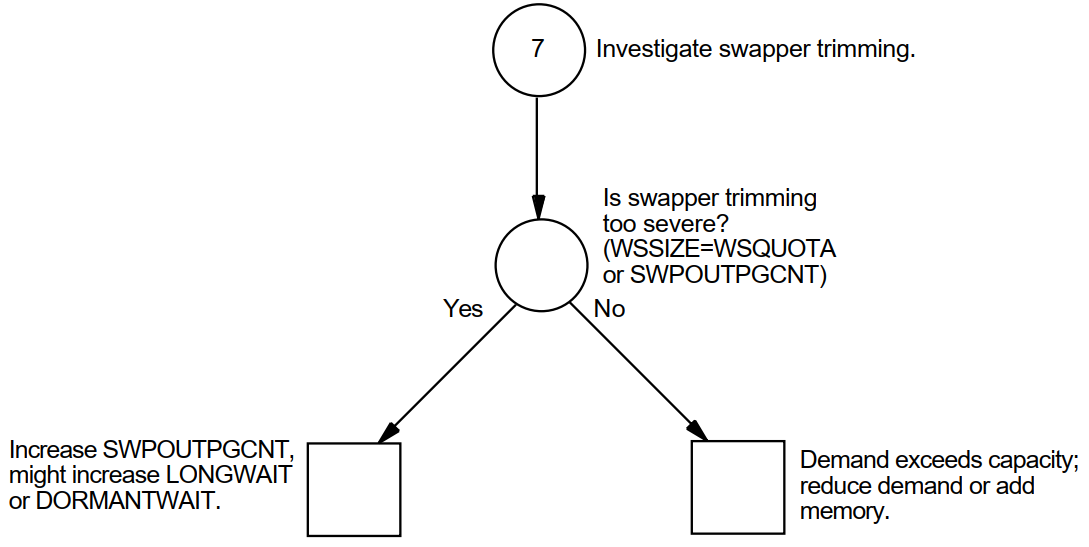

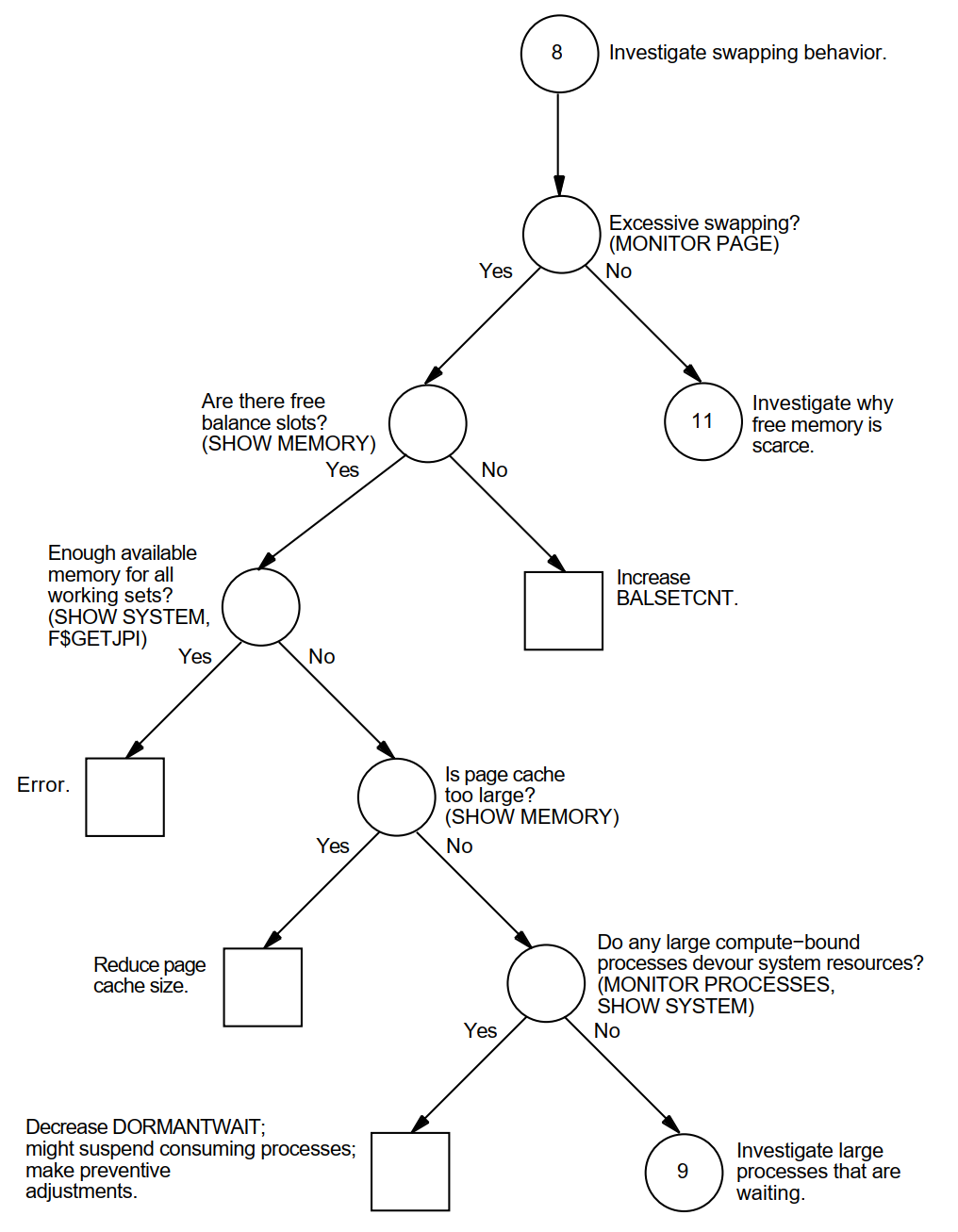

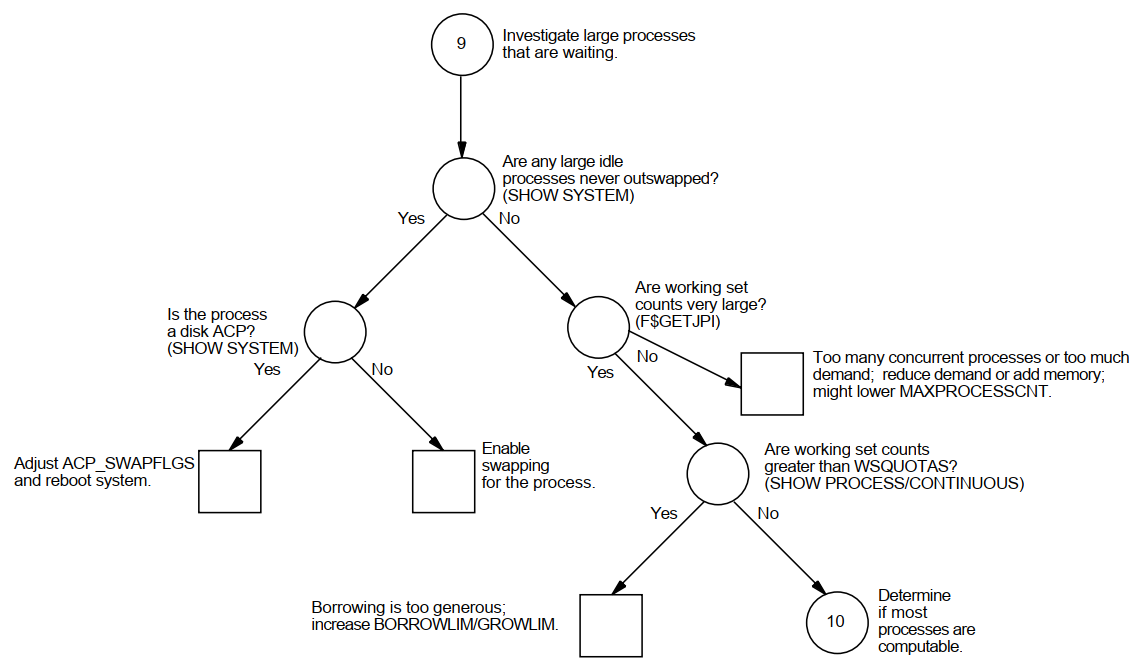

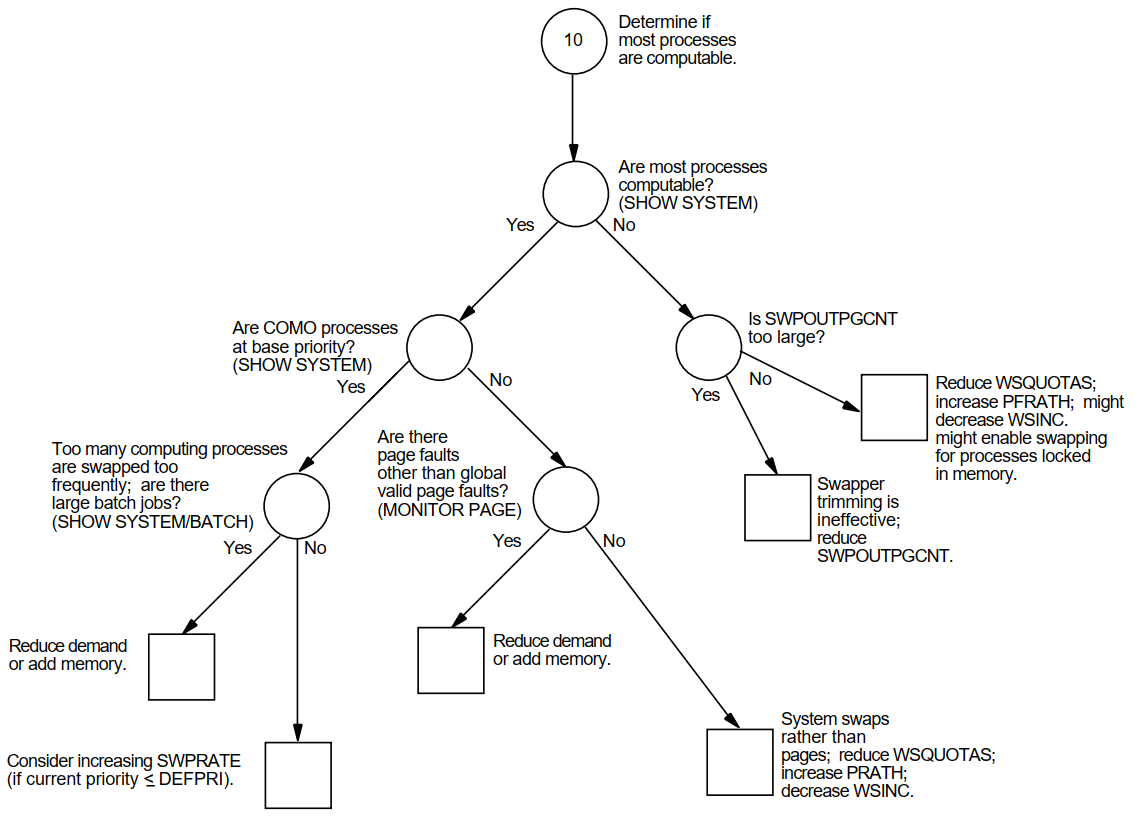

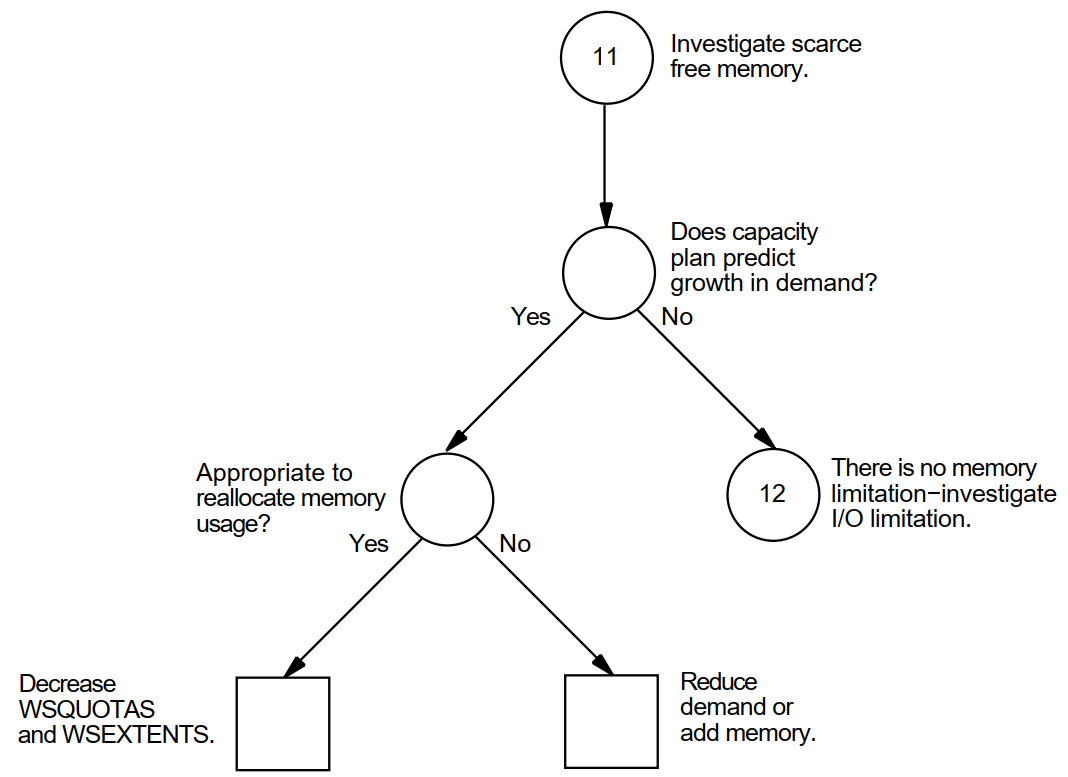

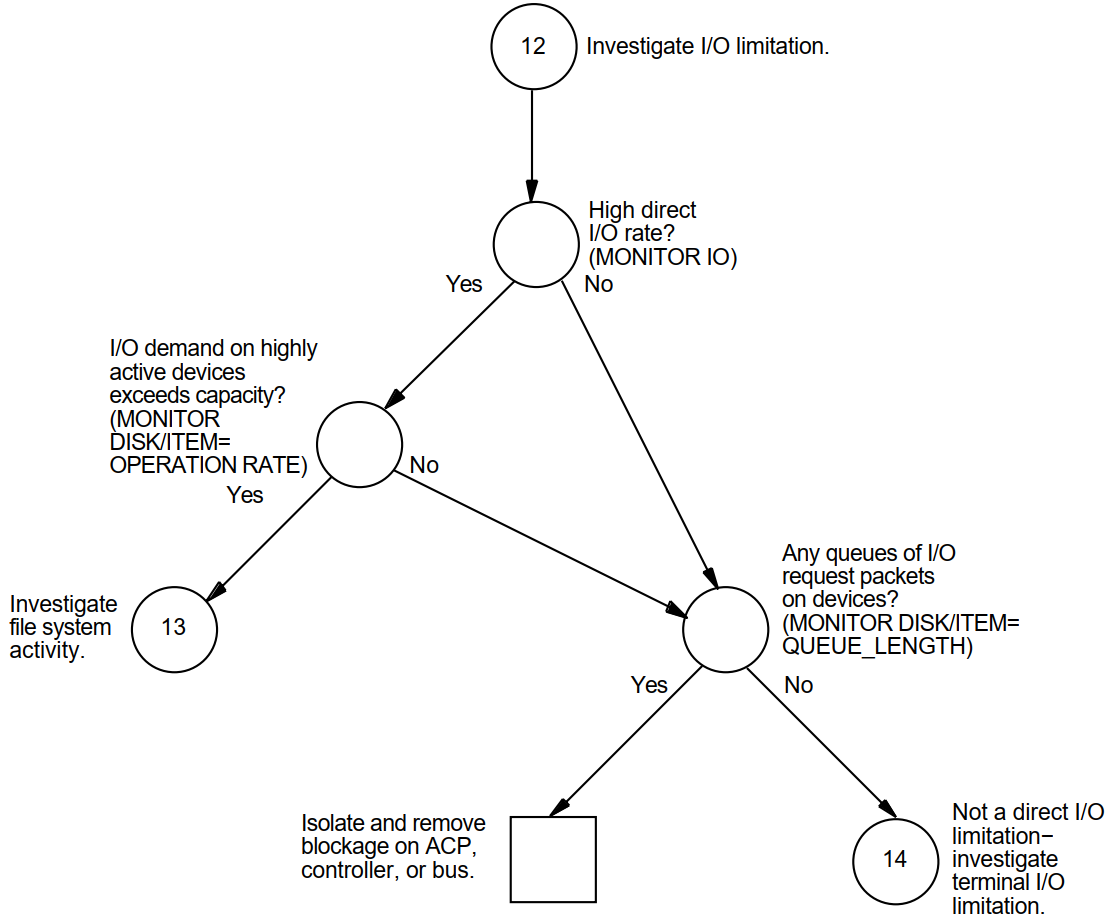

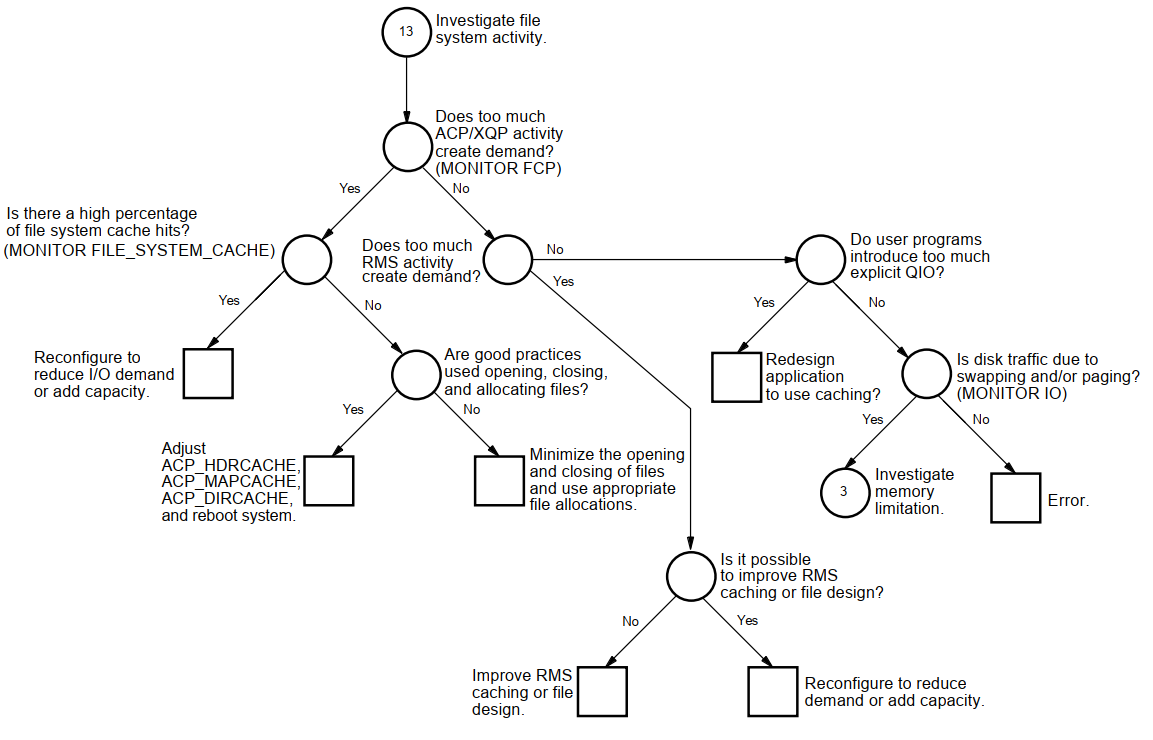

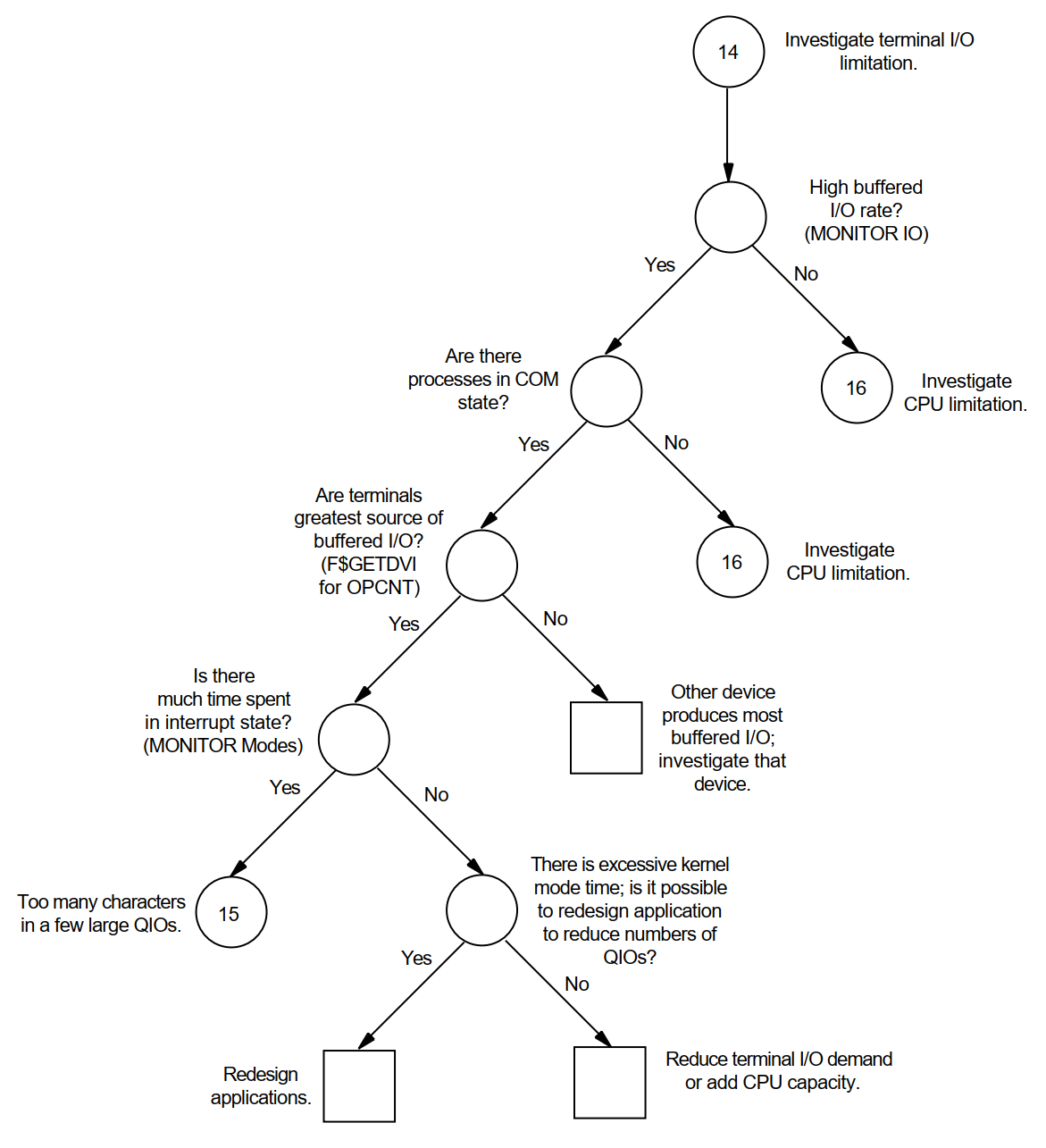

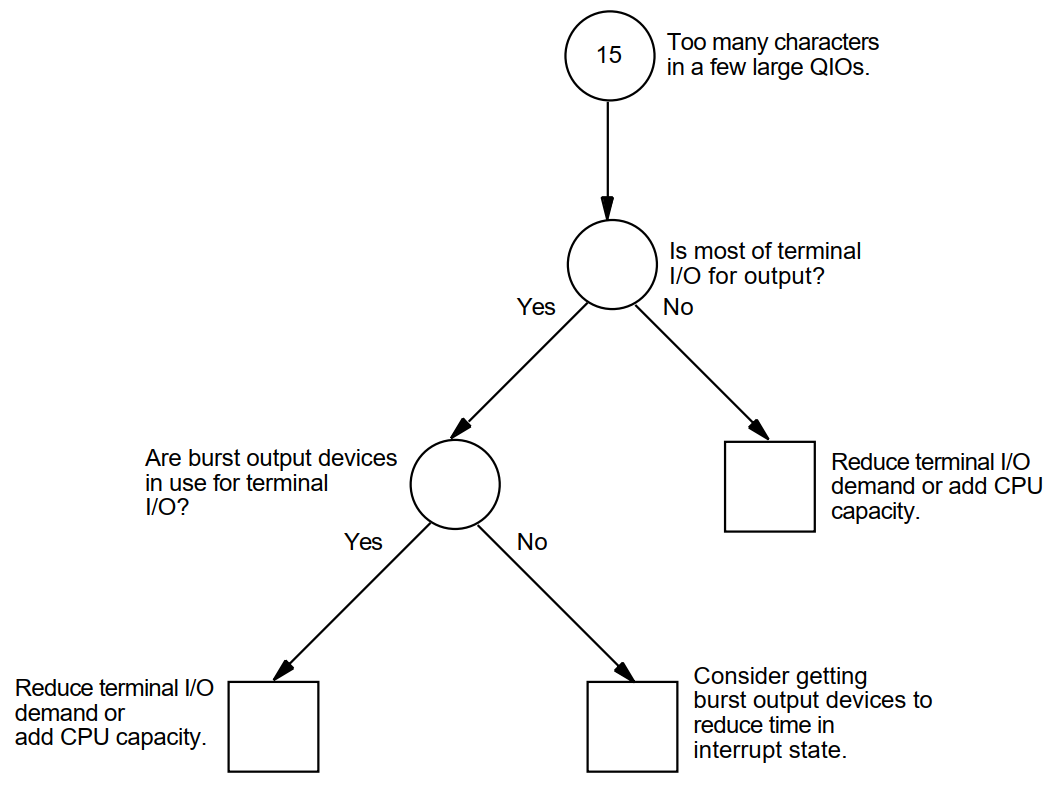

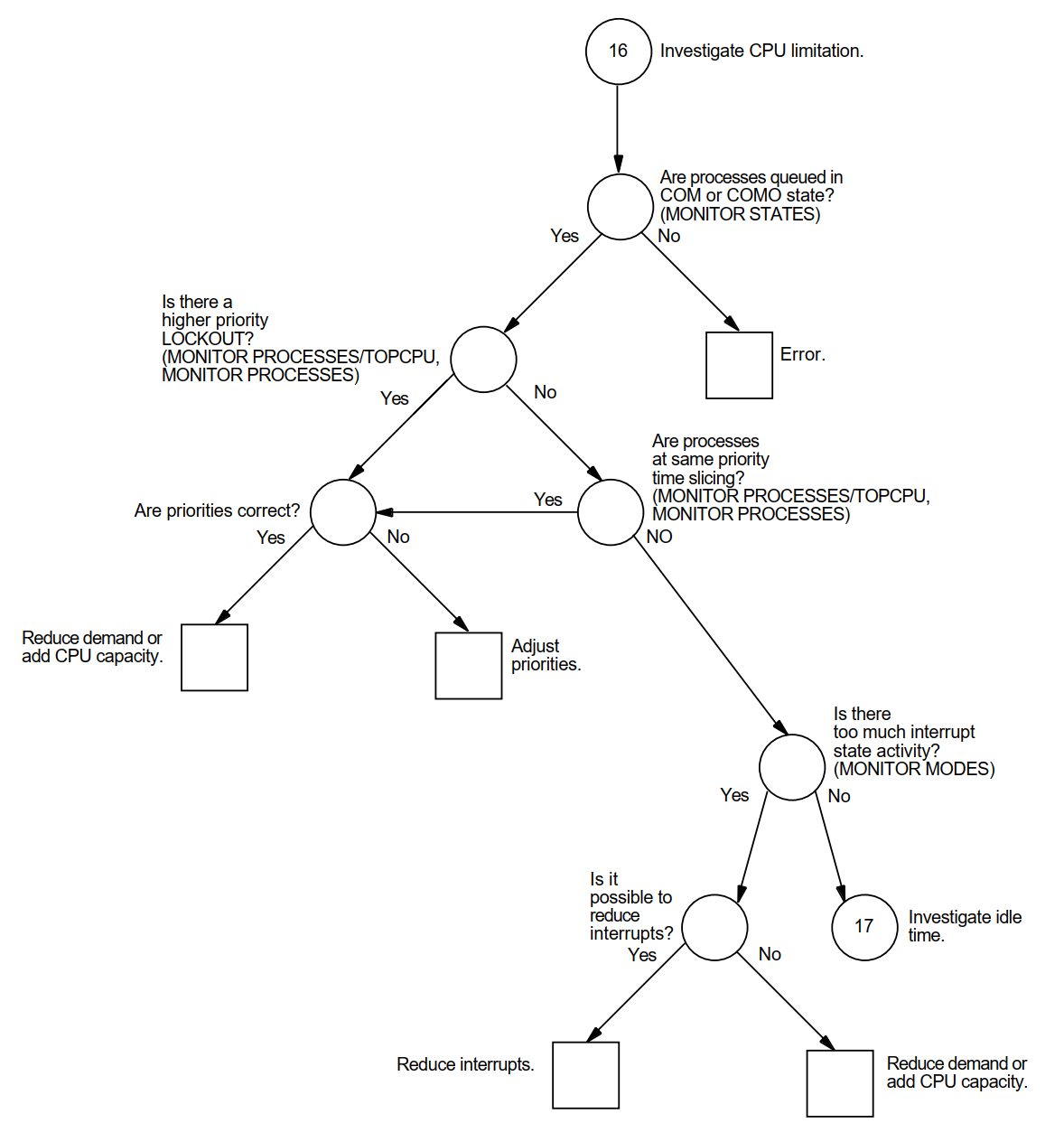

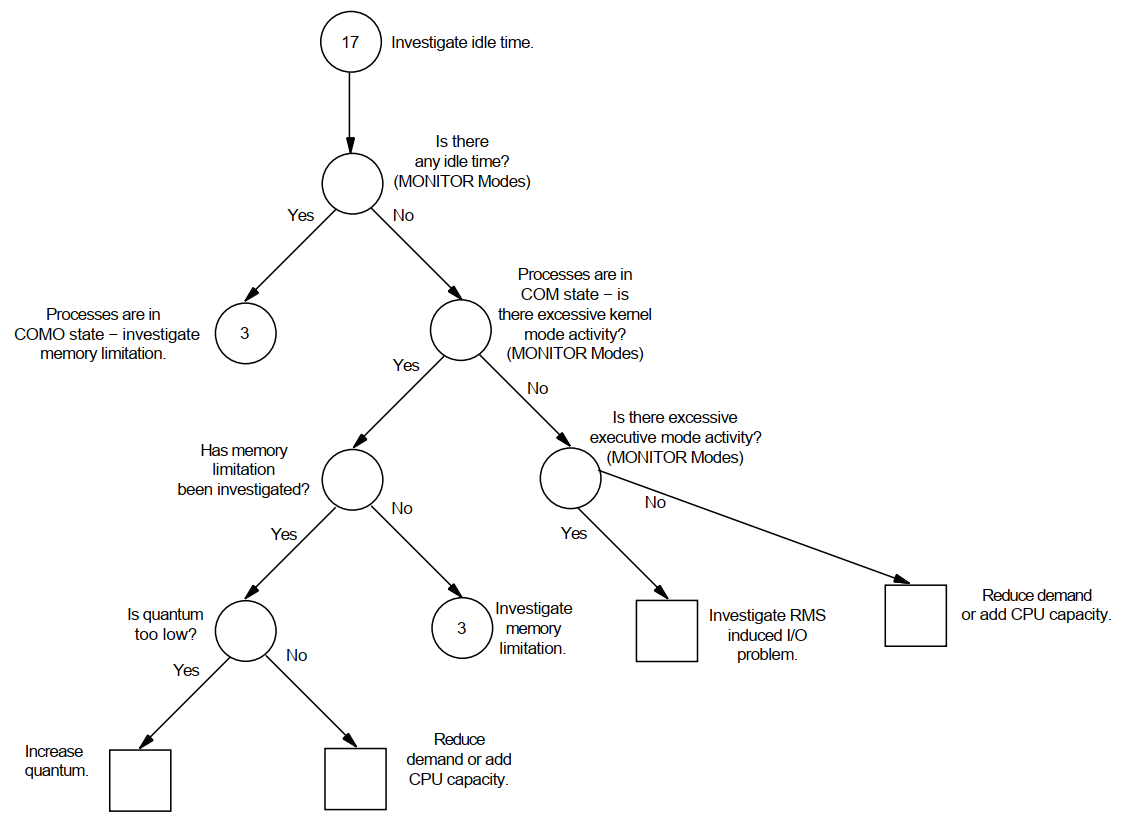

Decision-tree diagrams summarize the step-by-step descriptions in the text. These diagrams should also serve as useful reference tools for subsequent investigations of system performance.

This manual does not describe methods for capacity planning, nor does it attempt to provide details about using OpenVMS RMS features (hereafter referred to as RMS). Refer to the Guide to OpenVMS File Applications for that information. Likewise, the manual does not discuss DECnet for OpenVMS performance issues, because the VSI DECnet-Plus for OpenVMS Network Management Guide manual provides that information.

1. About VSI

VMS Software, Inc. (VSI) is an independent software company licensed by Hewlett Packard Enterprise to develop and support the OpenVMS operating system.

VSI seeks to continue the legendary development prowess and customer-first priorities that are so closely associated with the OpenVMS operating system and its original author, Digital Equipment Corporation.

2. Intended Audience

This manual addresses system managers and other experienced users responsible for maintaining a consistently high level of system performance, for diagnosing problems on a routine basis, and for taking appropriate remedial action.

3. Document Structure

This manual is divided into 13 chapters and 4 appendixes, each covering a related group of performance management topics as follows:

Chapter 1, "Performance Management" provides a review of workload management concepts and describes guidelines for evaluating user complaints about system performance.

Chapter 2, "Performance Options" lists post-installation operations for enhancing performance and discusses performance investigation and tuning strategies.

Chapter 3, "Memory Management Concepts" discusses OpenVMS memory management concepts.

Chapter 4, "Evaluating System Resources" explains how to use utilities and tools to collect and analyze data on your system’s hardware and software resources. Included are suggestions for reallocating certain resources should analysis indicate such a need.

Chapter 5, "Diagnosing Resource Limitations" outlines procedures for investigating performance problems.

Chapter 6, "Managing System Resources" describes how to evaluate system resource responsiveness.

Chapter 7, "Evaluating the Memory Resource" describes how to evaluate the performance of the memory resource and how to isolate specific memory resource limitations.

Chapter 8, "Evaluating the Disk I/O Resource" describes how to evaluate the performance of the disk I/O resource and how to isolate specific disk I/O resource limitations.

Chapter 9, "Evaluating the CPU Resource" describes how to evaluate the performance of the CPU resource and how to isolate specific CPU resource limitations.

Chapter 10, "Compensating for Resource Limitations" provides general recommendations for improving performance with available resources.

Chapter 11, "Compensating for Memory-Limited Behavior" provides specific recommendations for improving the performance of the memory resource.

Chapter 12, "Compensating for I/O-Limited Behavior" provides specific recommendations for improving the performance of the disk I/O resource.

Chapter 13, "Compensating for CPU-Limited Behavior" provides specific recommendations for improving the performance of the CPU resource.

Appendix A, "Decision Trees" lists the decision trees used in the various performance evaluations described in this manual.

Appendix B, "MONITOR Data Items" summarizes the MONITOR data items you will find useful in evaluating your system.

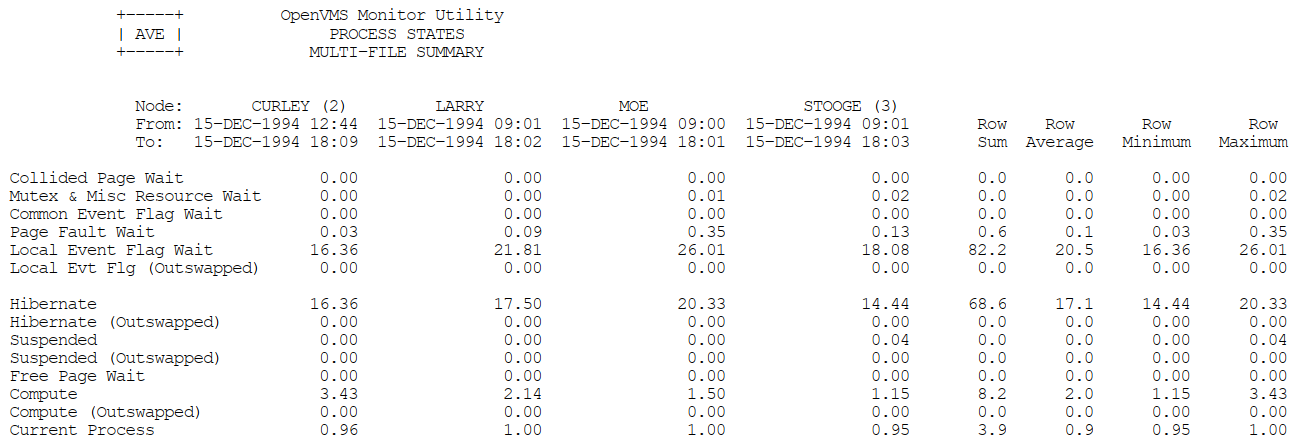

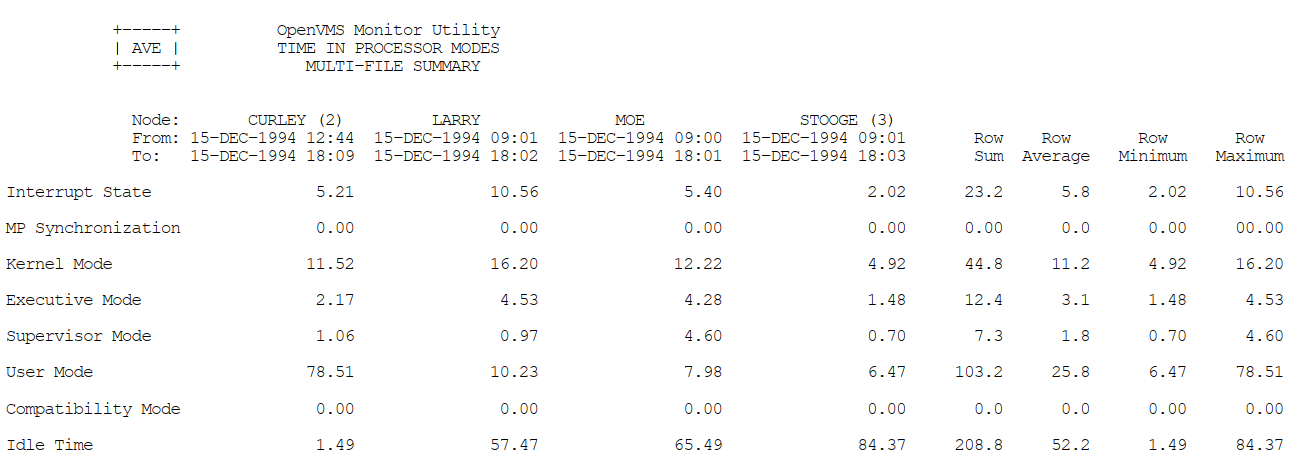

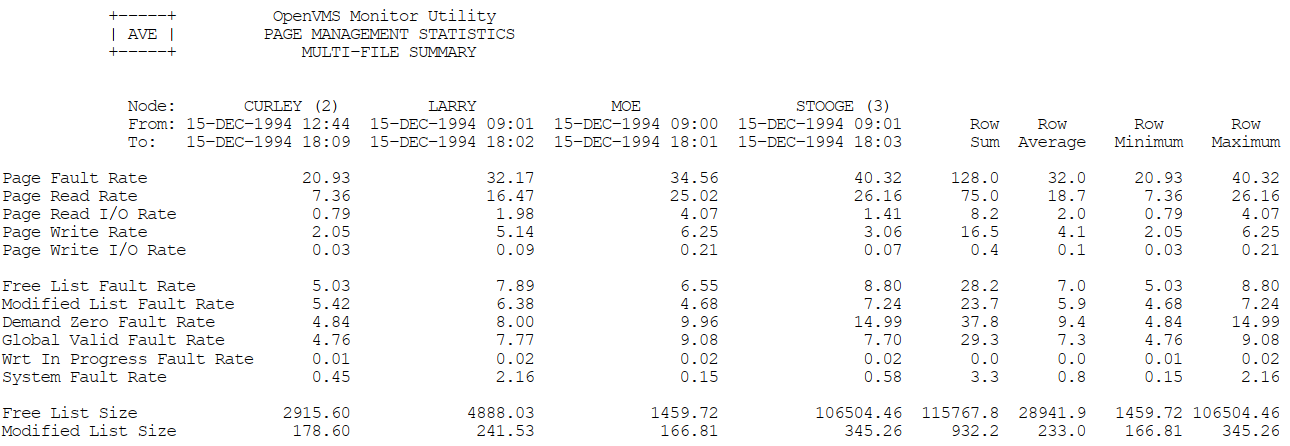

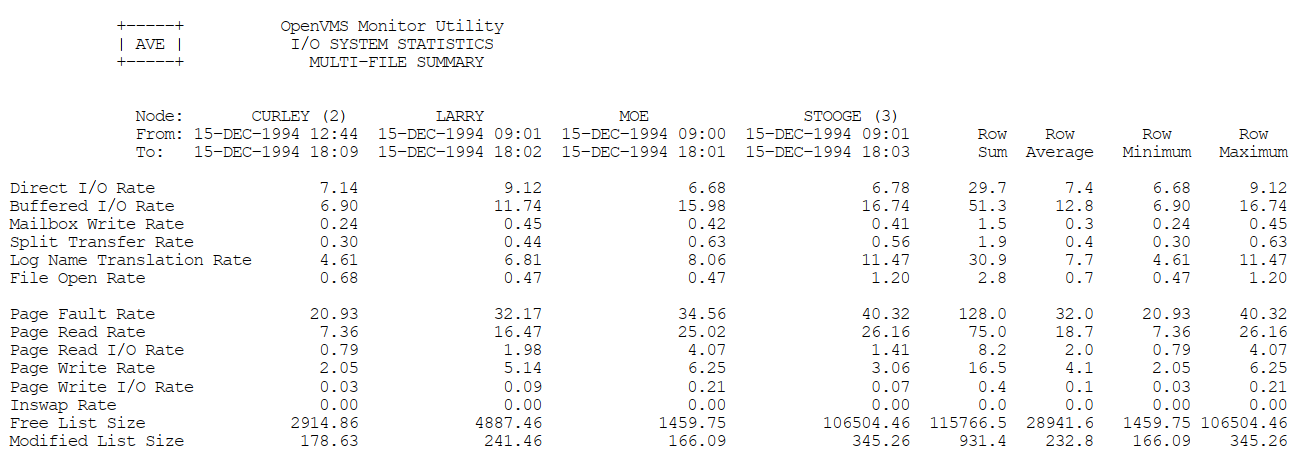

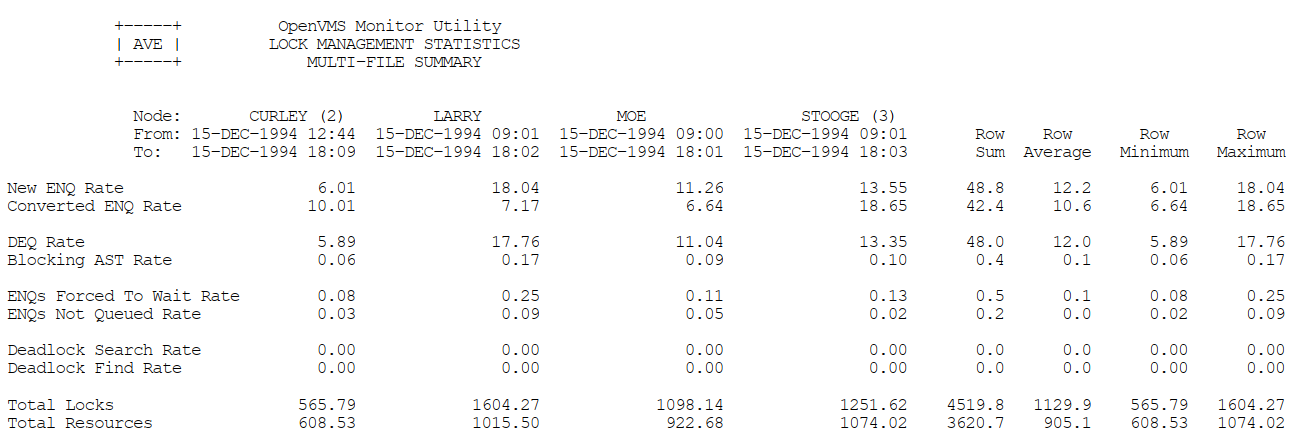

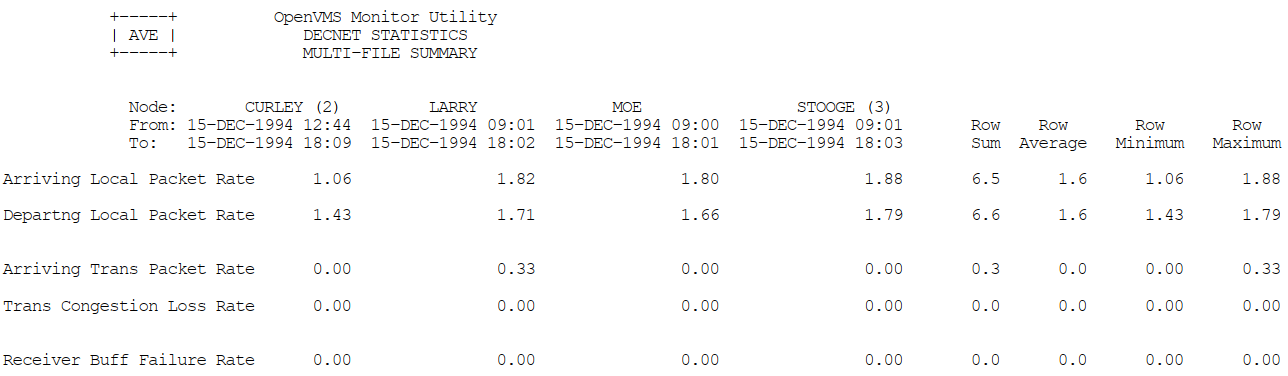

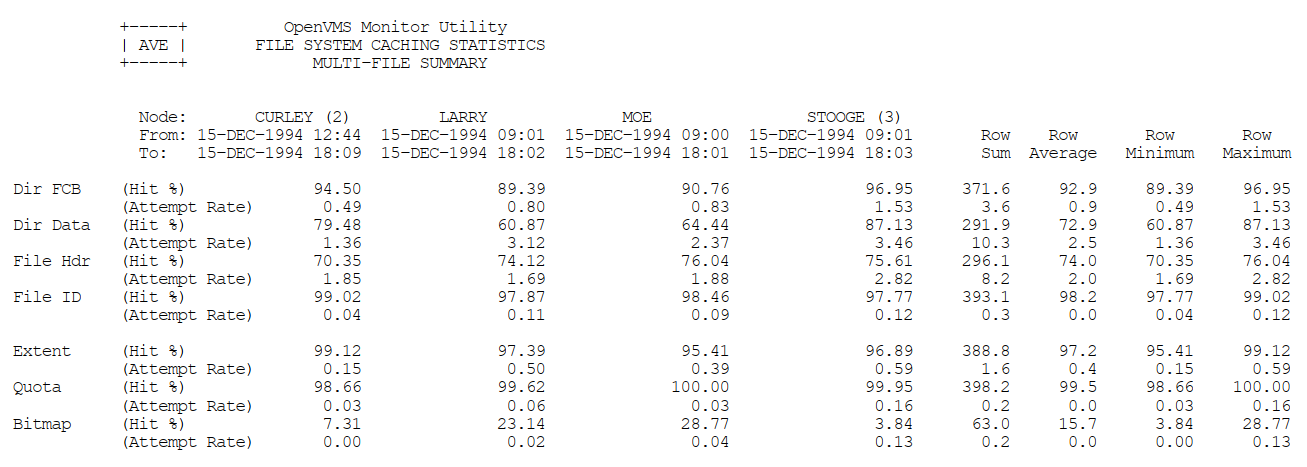

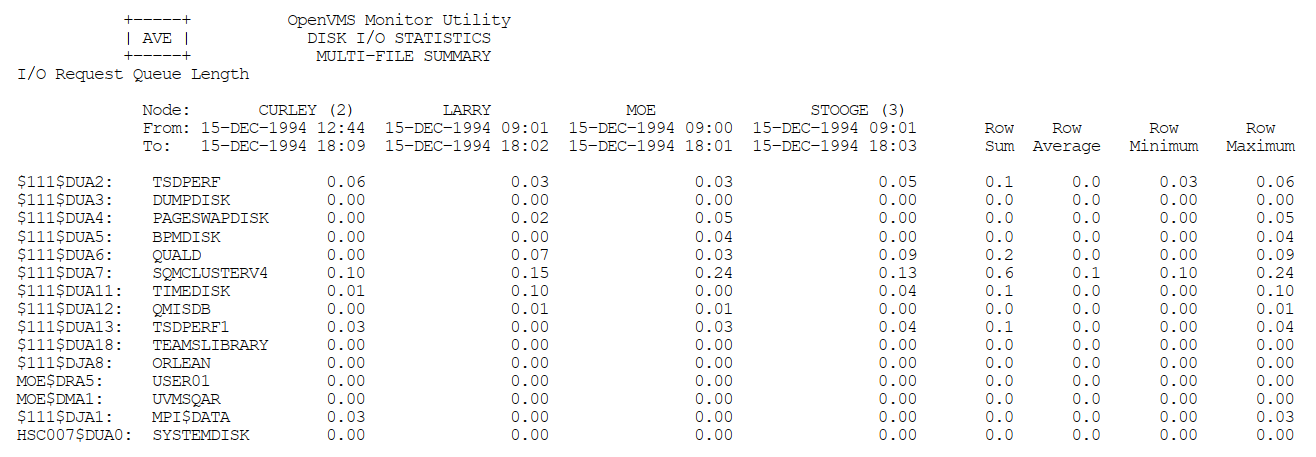

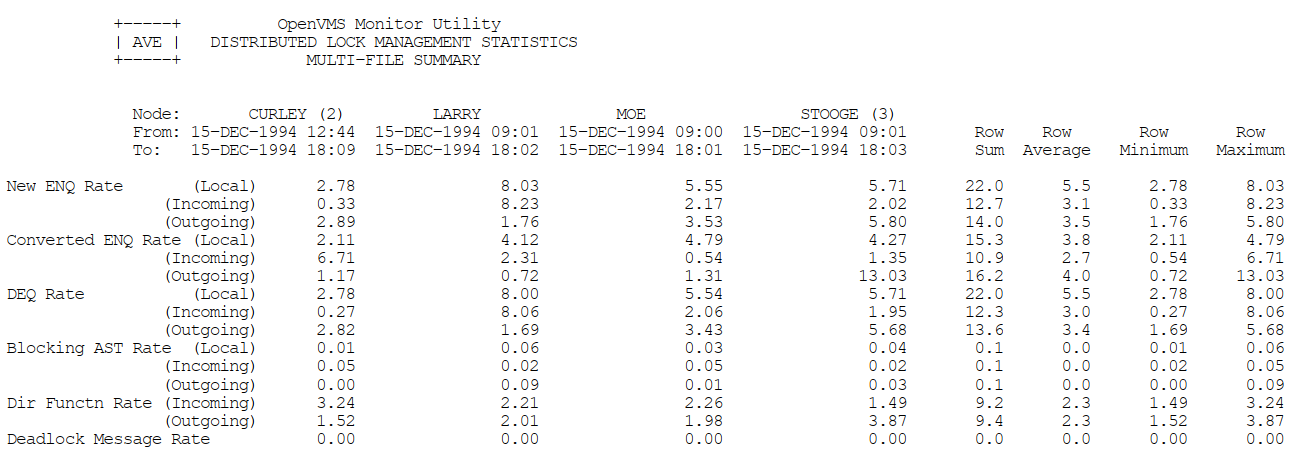

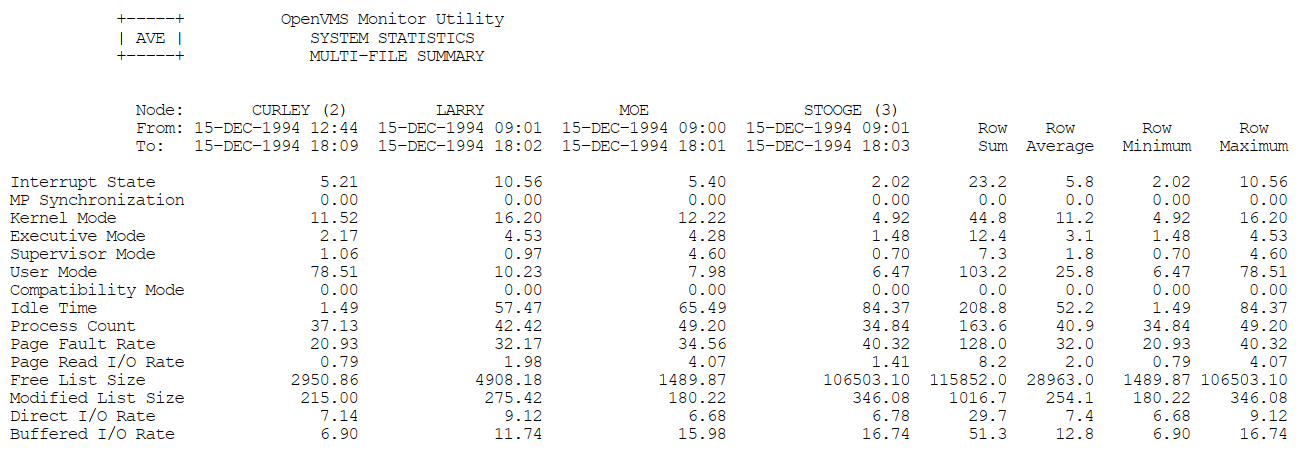

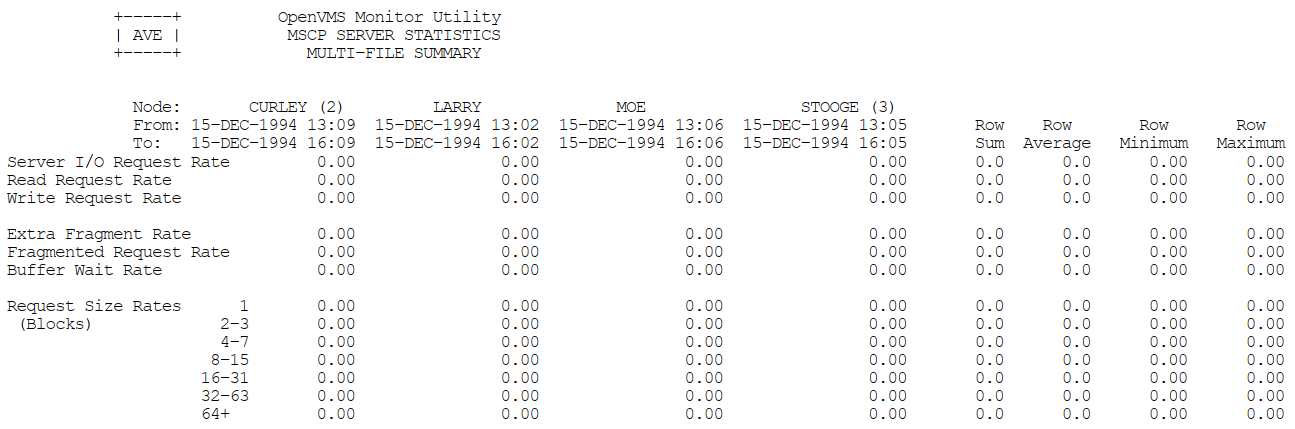

Appendix C, "MONITOR Multifile Summary Report" provides an example of a MONITOR multifile summary report.

Appendix D, "ODS–1 Performance Information" provides ODS-1 performance information.

4. Related Documents

For additional information on the topics covered in this manual, you can refer to the following documents:

VSI OpenVMS System Manager's Manual

Guide to OpenVMS File Applications

VSI OpenVMS System Management Utilities Reference Manual

Guidelines for OpenVMS Cluster Configurations

VSI OpenVMS Cluster Systems Manual

5. VSI Encourages Your Comments

You may send comments or suggestions regarding this manual or any VSI document by sending electronic mail to the following Internet address: <docinfo@vmssoftware.com>. Users who have VSI OpenVMS support contracts through VSI can contact <support@vmssoftware.com> for help with this product.

6. OpenVMS Documentation

The full VSI OpenVMS documentation set can be found on the VMS Software Documentation webpage at https://docs.vmssoftware.com.

7. Conventions

The following conventions are used in this manual:

| Convention | Meaning |

|---|---|

. . . | A vertical ellipsis indicates the omission of items from a code example or command format; the items are omitted because they are not important to the topic being discussed. |

| ( ) | In command format descriptions, parentheses indicate that you must enclose choices in parentheses if you specify more than one. |

| [ ] | In command format descriptions, brackets indicate optional choices. You can choose one or more items or no items. Do not type the brackets on the command line. However, you must include the brackets in the syntax for OpenVMS directory specifications and for a substring specification in an assignment statement. |

| | | In command format descriptions, vertical bars separate choices within brackets or braces. Within brackets, the choices are optional; within braces, at least one choice is required. Do not type the vertical bars on the command line. |

| bold type | This typeface represents the introduction of a new term. It also represents the name of an argument, an attribute, or a reason. |

| italic type | Italic text indicates important information, complete titles of manuals, or variables. Variables include information that varies in system output (Internal error number), in command lines (/PRODUCER=name), and in command parameters in text (where dd represents the predefined code for the device type). |

| UPPERCASE TYPE | Uppercase text indicates a command, the name of a routine, the name of a file, or the abbreviation for a system privilege. |

Monospace

text |

Monospace type indicates code examples and interactive screen displays. In the C programming language, monospace type in text identifies the following elements: keywords, the names of independently compiled external functions and files, syntax summaries, and references to variables or identifiers introduced in an example. |

| - | A hyphen at the end of a command format description, command line, or code line indicates that the command or statement continues on the following line. |

| numbers | All numbers in text are assumed to be decimal unless otherwise noted. Nondecimal radixes—binary, octal, or hexadecimal—are explicitly indicated. |

Chapter 1. Performance Management

Managing system performance involves being able to evaluate and coordinate system resources and workload demands.

CPU

Memory

Disk I/O

Network I/O

LAN I/O

Internet I/O

Cluster communication other than LAN (CI, FDDI, MC)

In addition to this manual, specific cluster information can be found in the Guidelines for OpenVMS Cluster Configurations and the VSI OpenVMS Cluster Systems Manual.

Acquiring a thorough knowledge of your work load and an understanding of how that work load exercises the system's resources

Monitoring system behavior on a routine basis in order to determine when and why a given resource is nearing capacity

Investigating reports of degraded performance from users

Planning for changes in the system work load or hardware configuration and being prepared to make any necessary adustments to system values

Performing certain optional system management operations after installation

A review of workload management concepts

Guidelines for developing a performance management strategy

Because many different networking options are available, network I/O is not formally covered in this manual. General performance concepts discussed here apply to networking, and networking should be considered within the scope of analyzing any system performance problem. You should consult the documentation available for the specific products that you have installed for specific guidelines concerning configuration, monitoring, and diagnosis of a networking product.

Similarly, database products are extremely complex and perform much of their own internal management. The settings of parameters external to OpenVMS may have a profound effect upon how efficiently OpenVMS is used. Thus, reviewing server application specific-material is a must if you are to efficiently understand and resolve a related performance issue.

1.1. System Performance Management Guidelines

Even if you are familiar with basic concepts discussed in this section, there are some details discussed that are specific to this process, so please read the entire section.

1.1.1. The Performance Management Process

Long term measurement and observation of your system is key to understanding how well it is working and is invaluable in identifying potential performance problems before they become so serious that the system grinds to a halt and it negatively affects your business. Thus, performance management should be a routine process of monitoring and measuring your systems to assure good operation through deliberate planning and resource management.

Systematically measuring the system

Gathering and analyzing the data

Evaluating trends

Archiving data to maintain a performance history

You will often observe trends and thus be able to address performance issues before they become serious and adversely affect your business operations. Should an unforeseen problem occur, your historical data will likely prove invaluable for pinpointing the cause and rapidly and efficiently resolving the problem. Without past data from your formerly well-running system, you may have no basis upon which to judge the value of the metrics you can collect on your currently poorly running system. Without historical data you are guessing; resolution will take much longer and cost far more.

Upgrades and Reconfigurations

Some systems are so heavily loaded that the cost of additional functionality of new software can push the system beyond the maximum load that the system was intended to handle and thus deliver unacceptable response times and throughput. If your system is running near its limit now during peak workload periods, you want to ensure that you take the steps necessary to avoid pushing your system beyond its limits when you cannot afford it.

Slow response times

Erratic system behavior

Unexplained system pauses, hangs, or crashes

If this is the case, you need a performance audit to determine your current workload and the resources necessary to adequately support your current and possibly future workloads. Implementing changes not specifically designed to increase such a system's capacity or reduce its workload can degrade performance further. Thus, investing in a performance audit will pay off by delivering you a more reliable, productive, available, and lower maintenance system.

Many factors involved in upgrades and reconfigurations contribute to increased resource consumption. Future workloads your system will be asked to support may be unforeseeable due to changes in the system, workload, and business.

Blind reconfiguration without measurement, analysis, modification, and contingency plans can result in serious problems. Significant increases in CPU, disk, memory, and LAN utilization demand serious consideration, measurement, and planning for additional workload and upgrades.

1.1.2. Conducting a Performance Audit

Evaluate whether your systems are viable candidates for proposed changes.

Identify modifications that must be made.

Insure that planned and implemented changes deliver expected results.

Characterize CPU, disk, memory, and LAN utilization on the systems under consideration before reconfiguration.

Measure system activity after an installation.

Measure, plan, understand, test, and confirm. To understand how system workloads vary, you should perform measurements for one week, if not longer, before installing your network

Take into account that workloads follow business cycles which vary predictably throughout the day, the week, the month, and the year. These variations may be affected by financial and legal deadlines as well as seasonal factors such as holidays and other cyclic activity.

Seek to identify periods of peak heavy loads (relatively long periods of heavy load lasting approximately five or more minutes). Understanding their frequency and the factors affecting them is key to successful system planning and management.

Peak Workloads and the Cyclic Nature of Workloads

You must first identify periods of activity during which you cannot afford to have system performance degrade and then measure overall system activity during these periods.

These periods will vary from system to system minute to minute, hour to hour, day to day, week to week, and month to month. Holidays and other such periods are often significant factors and should be considered. These periods depend upon the business cycles that the system is supporting.

If the periods you have identified as critical cannot be measured at this time, then measurements taken in the immediate future will have to be used as the basis for estimates of the activity during those periods. In such cases you will have to take measurements in the near term and make estimates based on the data you collect in combination with other data such as order rates from the previous calendar month or year, as well as projections or forecasts. But factors other than the CPU may become a bottleneck and slow down the system. For example, a fixed number of assistants can only process so many orders per hour, regardless of the number of callers waiting on the phone for service.

1.2. Strategies and Procedures

- Develop workload strategy (Chapter 1, "Performance Management")

Managing the work load

Distributing the work load

Sharing application code

- Develop tuning strategy (Chapter 2, "Performance Options")

Automatic Working Set Adjustment (AWSA)

AUTOGEN

Active memory management

- Perform general system resource evaluation (Chapter 4, "Evaluating System Resources")

CPU resource

Memory resource

Disk I/O resource

- Conduct a preliminary investigation of specific resource limitations (Chapter 5, "Diagnosing Resource Limitations")

Isolating memory resource limitations

Isolating disk I/O resource limitations

Isolating CPU resource limitations

- Review techniques for improving system resource responsiveness (Chapter 6, "Managing System Resources")

Providing equitable sharing of resources

Reducing resource consumption

Ensuring load balancing

Initiating offloading

- Apply specific remedy to compensate for resource limitations (Chapter 10, "Compensating for Resource Limitations")

Compensating for memory-limited behavior

Compensating for I/O-limited behavior

Compensating for CPU-limited behavior

1.3. System Manager's Role

Assume the responsibility for understanding the system's work load sufficiently to be able to recognize normal and abnormal behavior.

Predict the effects of changes in applications, operations, or usage.

Recognize typical throughput rates.

Evaluate system performance.

Perform tuning as needed.

1.3.1. Prerequisites

Be familiar with system tools and utilities.

Know your work load.

Develop a strategy for evaluating performance.

1.3.2. System Utilities and Tools

Accounting utility (ACCOUNTING)

Audit Analysis utility (ANALYZE/AUDIT)

Authorize utility (AUTHORIZE)

AUTOGEN command procedure

DCL SHOW commands

DECamds (Availability Manager)

DECevent utility (Alpha only)

Error Log utility (ANALYZE/ERROR_LOG)

Monitor utility (MONITOR)

On Alpha platforms, VSI recommends using the DECevent utility instead of the Error Log utility, ANALYZE/ERROR_LOG. (You invoke the DECevent utility with the DCL command, DIAGNOSE.) You can use ANALYZE/ERROR_LOG on Alpha systems, but the DECevent utility provides more comprehensive reports.

1.3.3. Why Use Them?

Collect and analyze key data items.

Observe usage trends.

Predict when your system reaches its capacity.

Adjust system parameters.

Modify users' privileges and quotas.

1.3.4. Knowing Your Work Load

What is the typical number of users on the system at each time of day?

What is the typical response time for various tasks for this number of users, at each hour of operation?

What are the peak hours of operation?

Which jobs typically run at which time of day?

Which commonly run jobs are intensive consumers of the CPU? of memory? of disk?

Which applications have the most image activations?

Which parts of the system software, if any, have been modified or user written, such as device drivers?

Are there any known system bottlenecks? Are there any anticipated ones?

Note

If you are a novice system manager, you should spend a considerable amount of time observing system operation using the DCL commands ACCOUNTING, MONITOR, and SHOW.

1.4. Developing a Strategy

Managing the work load

Distributing the work load

Sharing application code

1.4.1. Managing the Work Load

Is there a time of day when the work load peaks, that is, when it is noticeably heavier than at other times?

Is there any way to balance the work load better? Perhaps measures can be adopted by users.

Could any jobs be run better as batch jobs, preferably during nonpeak hours?

Have primary and secondary hours of operation been employed with users? Are the choices of hours the most appropriate for all users?

Can future applications be designed to work around any known or expected system bottlenecks? Can present applications be redesigned for the same purpose?

- Are you using all of the code-sharing features that the OpenVMS system offers you?

Note

Do not adjust any system values until you are satisfied that all these issues are resolved and that your workload management strategy is appropriate.

1.4.2. Distributing the Work Load

- Run large jobs as batch jobs.

Establish a site policy that encourages the submission of large jobs on a batch basis.

Regulate the number of batch streams so that batch usage is high when interactive usage is low.

Use DCL command qualifiers to run batch jobs at lower priority, adjust the working set sizes, and control the number of concurrent jobs.

For more information, see the VSI OpenVMS System Manager's Manual, Volume 1: Essentials.

- Restrict system use.

Do not permit more users to log in at one time than the system can support with adequate response time.

Restrict the number of interactive users with the DCL command SET LOGINS/INTERACTIVE.

Control the number of concurrent processes with the MAXPROCESSCNT system parameter.

Control the number of remote terminals allowed to access the system at one time with the RJOBLIM system parameter.

- Restrict system use to groups of users to certain days and hours of the day.

Use the Authorize utility to define permitted login hours for each user.

Use the DCL command SET DAY to override the conventional day-of-the-week associations for primary and secondary days.

- Design applications to reduce demand on binding resources.

Find out where system bottlenecks are located.

Plan use that minimizes demands on the bottleneck points.

1.4.3. Sharing Application Code

Application code sharing provides a cost-effective means of optimizing memory utilization. To ensure optimum performance of your system, make sure that frequently used code is shared.

Use the site-specific startup procedure to install as shared known images user-written programs and routines that are designed for sharing and have reached production status or are in general use.

Encourage programmers to write shareable code.

1.5. Analyzing Complaints

Ensure that hardware resources are adequate.

Know the work load reasonably well.

Have experience managing the work load according to the guidelines in Section 1.3.4, ''Knowing Your Work Load''.

1.5.1. Preliminary Steps

|

Step |

Action |

|---|---|

|

1 |

Obtain the following information:

|

|

2 |

Compare these facts with your knowledge of the normal work load and operation of your system. |

|

3 |

Follow the procedure shown in Figure A.1, ''Verifying the Validity of a Performance Complaint'' to verify the validity of the complaint. Did you observe the problem? Can you duplicate the problem? |

The following sections describe several reasons for performance problems.

1.5.2. Hardware Problem

|

Step |

Action |

|---|---|

|

1 |

Check the operator log and error log for indications of problems with specific devices. |

|

2 |

Enter the DCL commands SHOW ERROR and ANALYZE/ERROR_LOG to help determine if hardware is contributing to a performance problem. |

|

3 |

Review the previous day's error log as part of your morning routine. |

|

4 |

Obtain a count of errors logged since yesterday. Use the

following DCL command (which requires SYSPRV privilege):

For more information about error logging, see the VSI OpenVMS System Manager's Manual, Volume 2: Tuning, Monitoring, and Complex Systems; for information about using the Analyze/Error_Log utility, refer to the VSI OpenVMS System Management Utilities Reference Manual, Volume 1: A-L. |

1.5.3. Blocked Process

A process enters the miscellaneous resource wait (MWAIT or RWAST) state usually because some resource, such as a paging file or mailbox, is unavailable (for example, because of a low quota or a program bug).

|

If... |

Then... |

|---|---|

|

A process is in the MWAIT state |

Use the DCL commands MONITOR PROCESSES and SHOW SYSTEM to identify the reason for the MWAIT state. |

|

The system fails to respond while you are investigating an MWAIT condition |

Check the system console for error messages. |

|

The MWAIT condition persists after you increase the capacity of the appropriate resource |

Investigate the possibility of a programming design error. |

1.5.4. Unrealistic Expectations

Users expect response times to remain constant, even as the system work load increases.

An unusual set of circumstances has caused exceptionally high demand on the system all at once.

Note

Whenever you anticipate a temporary workload change that will affect your users, notify them through broadcasts, text, or both, in the system notices.

Chapter 2. Performance Options

Poor operation

Lack of understanding of the work load and its operational ramifications

Lack of resources

Poor application design

Human error

A combination of these factors

Decompressing system libraries

Disabling file system high-water marking

Setting RMS file-extend parameters

Installing frequently used images

Enabling extended file caching

Reducing system disk I/O

Tuning

Note that not all the options discussed in this chapter are appropriate at every site.

2.1. Decompressing System Libraries

Most of the OpenVMS libraries are in compressed format in order to conserve disk space.

The CPU dynamically decompresses the libraries whenever they are accessed. However, the resulting performance slowdown is especially noticeable during link operations and when users are requesting online help.

If you have sufficient disk space, decompressing the libraries will improve CPU and elapsed-time performance.

Note

Decompressed object libraries take up about 25 percent more disk space than when compressed; the decompressed help libraries take up about 50 percent more disk space.

2.2. Disabling File System High-Water Marking

High-water marking is set by default whenever a volume is initialized. This security feature guarantees that users cannot read data they have not written.

How often new files are created

How often existing files are extended

How fragmented the volume is

- Enter a DCL command similar to the following:

$SET VOLUME/NOHIGHWATER_MARKING device-spec[:] Dismount and remount the volume.

2.3. Setting RMS File-Extend Parameters

Specifying larger values for system file-extend parameters

Setting the system parameter RMS_EXTEND_SIZE

Specifying a larger multiblock count

Specifying a larger multibuffer count

2.4. Installing Frequently Used Images

All processes use the same physical copy of the image.

The image will be activated in the most efficient way.

INSTALL ADD/OPEN/SHARED/HEADER_RESIDENT filename INSTALL CREATE/OPEN/SHARED/HEADER_RESIDENT filename

ADD and CREATE are synonyms. The /SHARED and /HEADER_RESIDENT qualifiers imply the image is open. The /OPEN qualifier indicates the file is a permanently known image to the system.

2.5. Enabling File Caching

Enable extended file caching to reduce the number of disk I/O operations. See Section 12.2, ''Use Extended File Caching'' and the VSI OpenVMS System Manager's Manual.

2.6. Reducing System Disk I/O

|

Logical Name |

File |

|---|---|

|

ACCOUNTING |

System Accounting Data File |

|

AUDIT_SERVER |

Audit server master file |

|

QMAN$MASTER |

Job queue database master file ? |

|

Directory specification ? |

Job queue database queue and journal files |

|

NETPROXY |

NETPROXY.DAT |

|

OPC$LOGFILE_NAME |

Operator log files |

|

RIGHTSLIST |

RIGHTSLIST.DAT |

|

SYS$ERRORLOG |

ERRFMT log files |

|

SYS$JOURNAL |

DECdtm transaction log files |

|

SYS$MONITOR |

MONITOR log files |

|

SYSUAF |

SYSUAF.DAT |

|

VMSMAIL_PROFILE |

VMSMAIL_PROFILE.DATA |

In addition, the default DECNET account can reside on another disk. Refer to the DECNET record in the system authorization file.

You might consider moving paging and swapping activity off the system disk by creating large secondary page and swap files on a less heavily used disk.

In an educational or learning environment, there are several other files you might want to move off the system disk. If many users will frequently access the help libraries, you could move the directory pointed to by logical name SYS$HELP to another disk. However, the system installation and upgrade procedures assume SYS$HELP is on the system disk. If you move SYS$HELP to another disk, you will have to update it manually after a system upgrade.

If users frequently use computer-based instruction programs, you can move SYS$INSTRUCTION or DECW$CBI (or both) off the system disk, or make them into search lists.

Make these changes only if you have determined that the files in these directories are frequently used on your system.

2.7. Tuning

Tuning is the process of altering various system values to obtain the optimum overall performance possible from any given configuration and work load.

Note

When you have optimized your current system, the acquisition and installation of additional memory or devices can vastly improve system operation and performance.

Always aim for best overall performance, that is, performance viewed over time. The work load is constantly changing on most systems. Therefore, what guarantees optimal workload performance at one time might not produce optimal performance a short time later as the work load changes.

2.7.1. Prerequisites

Improper operation

Unreasonable performance expectations

Insufficient memory for the applications attempted

Inadequate hardware configuration for the work load, such as too slow a processor, too few buses for the devices, too few disks, and so forth

Improper device choices for the work load, such as using disks with insufficient speed or capacity

Hardware malfunctions

Poorly designed applications

Human error, such as allowing one process to consume all available resources

2.7.2. Tuning Suggestions

The effort required is often more expensive than a capacity upgrade.

The system includes AUTOGEN, which automatically establishes initial values for all system-dependent system parameters.

- The system includes features that in a limited way permit it to adjust itself dynamically during operation. The system can detect the need for adjustment in the following areas:

Nonpaged dynamic pool

Working set size

Number of pages on the free- and modified-page lists

As a result, these areas can grow dynamically, as appropriate, during normal operation. For more information on automatic adjustment features, see Section 3.5, ''Automatic Working Set Adjustment (AWSA)''. The most common cause of poor system performance is insufficient hardware capacity.

If you have adjusted your system for optimal performance with current resources and then acquire new capacity, you must plan to compensate for the new configuration. In this situation, the first and most important action is to execute the AUTOGEN command procedure.

If you anticipate a dramatic change in your workload, you should expect to compensate for the new workload.

2.7.3. Tools and Utilities

Caution

Do not directly modify system parameters using SYSGEN. AUTOGEN overrides system parameters set with SYSGEN, which can cause a setting to be lost months or years after it was made.

Use AUTHORIZE to change user account information, quotas, and privileges.

2.7.4. When to Use AUTOGEN

During a new installation or upgrade

Whenever your work load changes significantly

When you add an optional (layered) software product

When you install images with the /SHARED qualifier

On a regular basis to monitor changes in your system's work load

When you adjust system parameters

AUTOGEN will not fix a resource limitation.

2.7.5. Adjusting System Parameter Values

|

If you want to... |

Then... |

|---|---|

|

Modify system parameters |

Use the AUTOGEN command procedure. |

|

Change entries in the UAF |

Use AUTHORIZE. |

2.7.6. Using AUTOGEN Feedback

AUTOGEN has special features that allow it to make automatic adjustments for you in associated parameters. Periodically running AUTOGEN in feedback mode ensures that the system is optimally tuned.

The operating system keeps track of resource shortages in subsystems where resource expansion occurs. AUTOGEN in feedback mode uses this data to perform tuning.

2.7.7. Evaluating Tuning Success

|

Step |

Action |

|---|---|

|

1 |

Run a few programs that produce fixed and reproducible results at the same time you run your normal work load. |

|

2 |

Measure the running times. |

|

3 |

Adjust system values. |

|

4 |

Run the programs again at the same time you run your normal work load under nearly identical conditions as those in step 1. |

|

5 |

Measure the running times under nearly identical workload conditions. |

|

6 |

Compare the results. |

|

7 |

Continue to observe system behavior closely for a time after you make any changes. |

Note

This test alone does not provide conclusive proof of success. There is always the possibility that your adjustments have favored the performance of the image you are measuring—to the detriment of others.

2.7.7.1. When to Stop Tuning

In every effort to improve system performance, there comes a point of diminishing returns. After you obtain a certain level of improvement, you can spend a great deal of time tuning the system yet achieve only marginal results.

Because a system that has been improperly adjusted usually exhibits blatant symptoms with fairly obvious and simple solutions, you will likely find that tuning—in the form of adjustments to certain critical system values—produces a high return for the time and effort invested and that there is a much lower risk of error. As a guideline, if you make adjustments and see a marked improvement, make more adjustments and see about half as much improvement, and then fail to make more than a small improvement on your next attempt or two, you should stop and evaluate the situation. In most situations, this is the point at which to stop tuning.

2.7.7.2. Still Not Satisfied?

If you are not satisfied with the final performance, consider increasing your capacity through the addition of hardware.

Generally, memory is the single piece of hardware needed to solve the problem. However, some situations warrant obtaining additional disks or more CPU power.

Chapter 3. Memory Management Concepts

The operating system employs several memory management mechanisms and a memory reclamation policy to improve performance on the system. These features are enabled by default. In the majority of situations, they produce highly desirable results in optimizing system performance. However, under rare circumstances, they might contribute to performance degradation by incurring their own overhead. This chapter describes these features and provides insight into how to adjust, or even turn them off, through tuning.

3.1. Memory

Once you have taken the necessary steps to manage your work load, you can evaluate reports of performance problems. Before you proceed, however, it is imperative that you be well versed in the concepts of memory management.

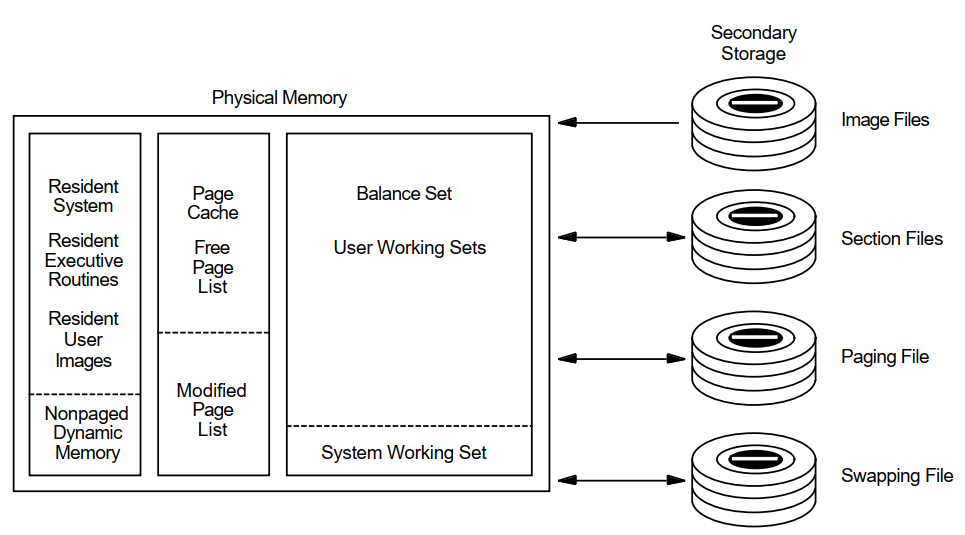

On OpenVMS VAX, system parameter values are allocated in units of 512-byte pages. On OpenVMS Alpha, some system parameter values are allocated in units of pages, while others are allocated in units of pagelets. (A pagelet is equal to a VAX page, or 512 bytes.) Figure 3.1, ''OpenVMS Memory Configuration'' illustrates the configuration of memory for OpenVMS systems.

|

3.1.1. Physical Memory

Primary page cache where processes execute: the balance set resides in the primary page cache.

- Secondary page cache where data is stored for movement to and from the disks. The secondary page cache consists of two sections as follows:

Free-page list—Pages whose contents have not been modified

Modified-page list—Pages whose contents have been modified

Operating system resident executive.

Each disk has only one access path available to transfer data from and to physical memory, that is, to perform disk I/O.

3.1.2. Virtual Memory

Physical memory

Secondary storage (disk)

From the programmer's point of view, the secondary storage locations appear to be locations in physical memory.

3.1.3. Process Execution Characteristics

A process executes in physical memory until it must wait—usually for the completion of an I/O request.

Every time a process has to wait, another process may use the CPU.

The operating system maintains an even balance in the use of memory, CPU time, and the number of processes running at once.

- Each time a process starts to execute, it is assigned a slice of computer processing time called a quantum. The process will continue to execute until one of three possible events occurs:

A higher priority process becomes executable. In this case the lower priority process is preempted and the higher priority process starts to execute.

The process enters a wait state, for example, in order to wait for the completion of an I/O operation.

The assigned quantum expires. If no other process of equal or higher priority is ready to execute, the current process obtains a new quantum and continues execution. If another process of equal priority is already waiting to execute, the current process must now wait and the new process obtains the CPU for the duration of 1 quantum.

This round-robin mode of scheduling does not apply to real-time processes. They cannot be interrupted by other processes of equal priority.

3.2. Working Set Paging

When... | Then... |

|---|---|

Image activation begins | The process brings in the first set of pages from the image file and uses them in its own working set. |

The process's demand for pages exceeds those available in the working set | Some of the process's pages must be moved to the page cache to make room or the process's working set is expanded. |

The page cache fills up | The swapper transfers a cluster of pages from the modified-page cache to a paging file. |

A hard fault requires a read operation from a page or image file on disk.

A soft fault involves mapping to a secondary page cache; this can be a global page or a page in the secondary page cache.

3.3. Process Swapping

When a process whose working set is in memory becomes inactive, the entire working set or part of it may be removed from memory to provide space for another process's working set to be brought in for execution.

Swapping is the partial or total removal of a process's working set from memory.

3.3.1. What Is the Swapper?

The swapper process schedules physical memory. It keeps track of the pages in both physical memory and on the disk paging and swapping files so it can ensure that each process has a steady supply of pages for each job.

3.3.2. Types of Swapping

Swapper trimming—Pages are removed from the target working set but the working set is not swapped out.

Process swapping—All pages are swapped out of memory.

3.4. Initial Working Set Quotas and Limits

The memory management strategy depends initially on the values in effect for the working set quota (WSQUOTA) and working set extent limit (WSEXTENT).

3.4.1. Processes

When a process is created, its quota and related limits must be specified. The LOGINOUT facility obtains the parameter settings for the quota and related limits from the record in the user authorization file (UAF) that characterizes the user. (System managers create a UAF record for each user.) LOGINOUT is run when a user logs in and when a batch process starts.

When an interactive job runs, the values in effect might have been lowered or raised either by the corresponding qualifiers on the last SET WORKING_SET command to affect them, or by the system service $ADJWSL.

The initial size of a process's working set is defined (in pagelets) by the process's working set default quota WSDEFAULT.

When ample memory is available, a process's working set upper growth limit can be expanded to its working set extent, WSEXTENT.

3.4.2. Subprocesses and Detached Processes

$CREPRC system service

DCL command RUN

|

Parameter |

Characteristic |

|---|---|

|

PQL_DWSDEFAULT |

Default WSDEFAULT |

|

PQL_DWSQUOTA |

Default WSQUOTA |

|

PQL_DWSEXTENT |

Default WSEXTENT |

|

PQL_MWSDEFAULT |

Minimum WSDEFAULT |

|

PQL_MWSQUOTA |

Minimum WSQUOTA |

|

PQL_MWSEXTENT |

Minimum WSEXTENT |

AUTOGEN checks all these values and overrides them if the values in the UAF are too large or too small.

PQL parameters are described in the VSI OpenVMS System Management Utilities Reference Manual.

3.4.3. Batch Queues

When a batch queue is created, the DCL command INITIALIZE/QUEUE establishes the default values for jobs with the /WSDEFAULT, /WSQUOTA, and /WSEXTENT qualifiers.

However, you can set these qualifiers to defer to the user's values in the UAF record.

When a batch job runs, the values may have been lowered by the corresponding qualifiers on the DCL commands SUBMIT or SET QUEUE/ENTRY.

3.4.4. Required Memory Resources

Small (Up to 200 pages) — For editing, and for compiling and linking small programs (typical interactive processing)

Large (400 or more pages) — For compiling and linking large programs, and for executing programs that manipulate large amounts of data in memory (typical batch processing)

Note that the definitions of "small" and "large" working sets change with time, and the memory required may increase with the addition of new features to programs. Furthermore, many applications now take advantage of the increased memory capacity of newer Alpha systems and the increased addressing space available to programs. Applications, especially database and other data handling programs, may need much larger working sets than similar applications required in the past.

Small (256 to 800 pages) — For editing, and for compiling and linking small programs (typical interactive processing)

Large (1024 pages or more) — For compiling and linking large programs, and for executing programs that manipulate large amounts of data in memory (typical batch processing)

3.4.5. User Programs

Working set limits for user programs depend on the code-to-data ratio of the program and on the amount of data in the program.

Programs that manipulate mostly code and that either include only small or moderate amounts of data or use RMS to process data on a per-record basis require only a small working set.

Programs that manipulate mostly data such as sort procedures, compilers, linkers, assemblers, and librarians require a large working set.

3.4.6. Guidelines

System parameters—Set WSMAX at the highest number of pages required by any program.

- UAF options

Set WSDEFAULT at the median number of pages required by a program that the user will run interactively.

Set WSQUOTA at the largest number of pages required by a program that the user will run interactively.

Set WSEXTENT at the largest number of pages you anticipate the process will need. Be as realistic as possible in your estimate.

- Batch queues for user-submitted jobs

Set WSDEFAULT at the median number of pages required.

Set WSQUOTA to the number of pages that will allow the jobs to complete within a reasonable amount of time.

Set WSEXTENT (using the DCL command INITIALIZE/QUEUE or START/QUEUE) to the largest number of pages required.

This arrangement effectively forces users to submit large jobs for batch processing because otherwise, the jobs will not run efficiently. To further restrict the user who attempts to run a large job interactively, you can impose CPU time limits in the UAF.

3.5. Automatic Working Set Adjustment (AWSA)

The automatic working set adjustment (AWSA) feature allows processes to acquire additional working set space (physical memory) under control of the operating system.

This activity reduces the amount of page faulting.

By reviewing the need for each process to add some pages to its working set limit (based on the amount of page faulting), the operating system can better balance the working set space allocation among processes.

3.5.1. What Are AWSA Parameters?

The AWSA mechanism depends heavily on the values of the key system parameters: PFRATH, PFRATL, WSINC, WSDEC, QUANTUM, AWSTIME, AWSMIN, GROWLIM, and BORROWLIM.

Note

The possibility that AWSA parameters are out of balance is so slight that you should not attempt to modify any of the key parameter values without a very thorough understanding of the entire mechanism.

3.5.2. Working Set Regions

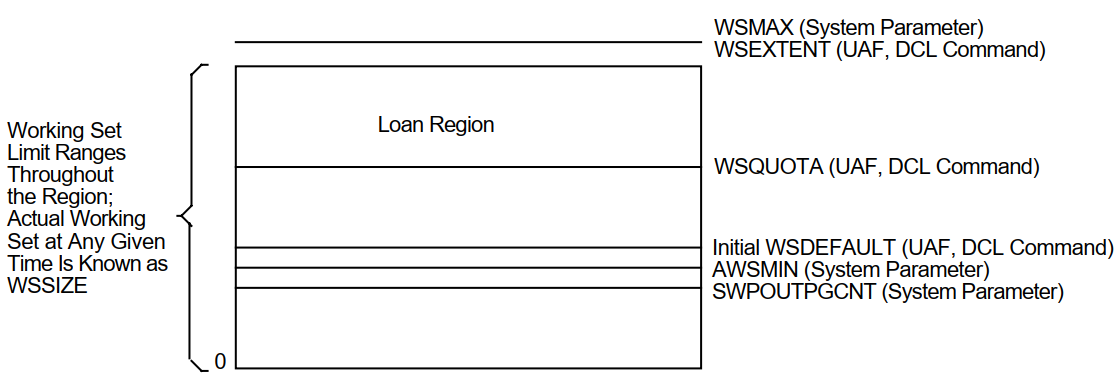

All processes have an initial default limit of pages of physical memory defined by the system parameter WSDEFAULT.

Any process that needs more memory is allowed to expand to the amount of a larger limit known as the working set quota defined by WSQUOTA.

Because page faulting is costly, the operating system has another feature for extending working set space to needy processes, provided the system has free memory available. If the conditions are right, the process can borrow working set space up to a final limit known as its working set extent, or WSEXTENT.

Whenever a process's working set size increases, the growth occurs in increments according to the value of the system parameter, WSINC.

Figure 3.2, ''Working Set Regions for a Process'' illustrates these important regions.

|

3.5.3. Adjustment Period

The system recognizes or reviews the need for growth by sampling the page faulting rate of each process over an adjustment period. The adjustment period is the time from the start of the quantum (defined by the system parameter QUANTUM) right after an adjustment occurs until the next quantum after a time interval specified by the parameter AWSTIME elapses. For example, if the system quantum is 200 milliseconds and AWSTIME is 700 milliseconds, the system reviews the need to add or subtract pages from a process every time the process consumes 800 milliseconds of CPU time, or every fourth quantum.

3.5.4. How Does AWSA Work?

|

Stage |

Description | |

|---|---|---|

|

1 |

The system samples the page faulting rate of each process during the adjustment period. | |

|

2 |

The system parameters PFRATL and PFRATH define the upper and lower limits of acceptable page faulting for all processes. At the end of each process's adjustment period, the system reviews the need for growth and does the following: | |

|

Too high compared with PFRATH |

Approves an increase in the working set size of that process in the amount of system parameter WSINC up to the value of its WSQUOTA. | |

|

Too low compared with PFRATL (when PFRATL is nonzero) |

Approves a decrease in the working set size of that process in the amount of system parameter WSDEC. No process will be reduced below the size defined by AWSMIN. This process is called voluntary decrementing. | |

|

3 |

If the increase in working set size puts the process above the value of WSQUOTA and thus requires a loan, the system compares the availability of free memory with the value of BORROWLIM. The AWSA feature allows a process to grow above its WSQUOTA value only if there are at least as many pages of free memory as specified by BORROWLIM. If too many processes attempt to add pages at once, an additional mechanism is needed to stop the growth while it is occurring. However, the system only stops the growth of processes that have already had the benefit of growing beyond their quota. | |

|

If... |

Then the system... | |

|

A process page faults after its working set count exceeds WSQUOTA |

Examines the value of the parameter GROWLIM before it allows the process to use more of its WSINC loan. Note that this activity is not tied into an adjustment period but is an event-driven occurrence based on page faulting. | |

|

The number of pages on the free-page list is at least equal to or greater than GROWLIM |

Continues to allow the process to add pages to its working set. | |

|

The number of free pages is less than GROWLIM |

Will not allow the process to grow; the process must give back some of its pages before it reads in new pages. | |

|

Active memory reclamation is enabled |

Sets BORROWLIM and GROWLIM to very small values to allow active processes maximum growth potential. | |

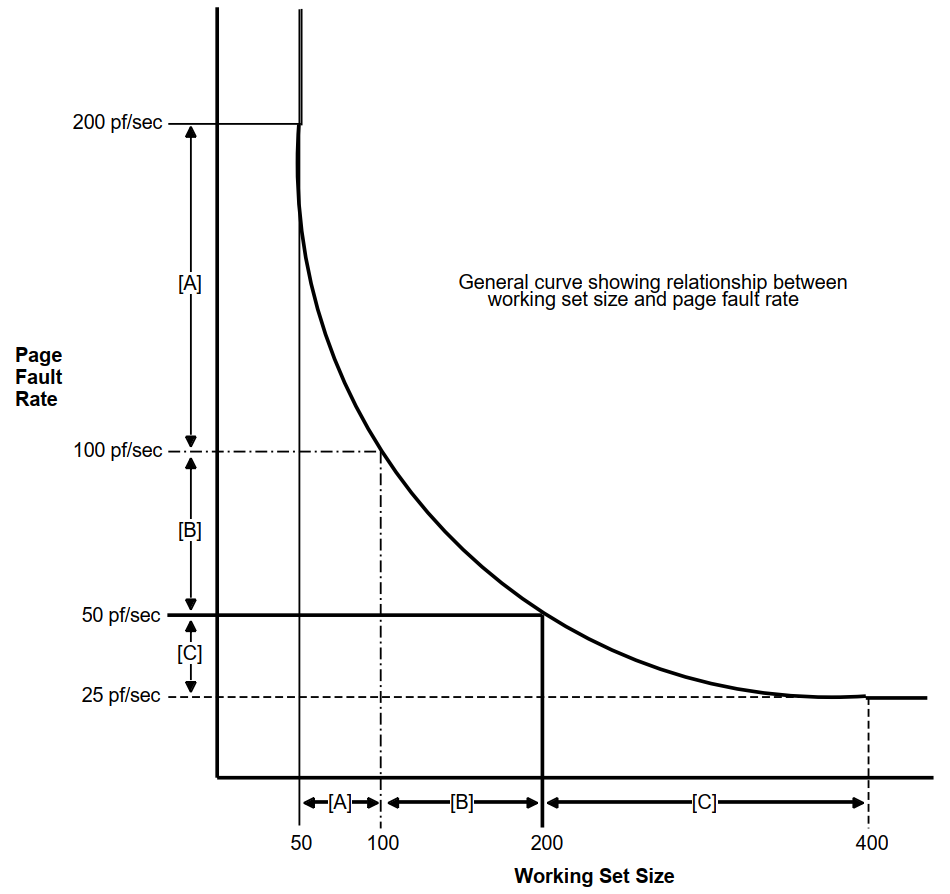

3.5.5. Page Fault Rates

Page fault rate varies as a function of the working set size for a program. For initial working set sizes that are small relative to the demands of the program, a small increase in working set size results in a very large decrease in page fault rate. Conversely, as initial working set size increases, the relative benefit of increased working set size diminishes. Figure 3.3, ''Effect of Working Set Size on Page Fault Rate'' shows that by increasing the working set size in the example from 50 to 100 pages, the page fault rate drops from 200 to 100 pf/s. In the midrange the program still benefits from an increase in its working set, but in this realm twice the increase in the working set (100 to 200 pages versus 50 to 100 pages) yields only half the decrease in page fault rate (100 to 50 pf/s versus 200 to 100 pf/s). A point may be reached at which further substantial increases in working set size yields little or no appreciable benefit.

|

Figure 3.3, ''Effect of Working Set Size on Page Fault Rate'' illustrates a general relationship between working set size and page fault rate. The numbers provided are for comparison only. Not all programs exhibit the same curve depicted. Three different ranges are delineated to show that the effect of increasing working set size can vary greatly depending upon a program's requirements and initial working set size.

Figure 3.4, ''Setting Minimum and Maximum Page Fault Rates'' illustrates setting minimum and maximum page fault rates and determining the required working set size for images running on your system.

|

If... | Then... |

|---|---|

You establish a maximum acceptable page fault rate of PFR1 | For each image there is a minimum required working set size as shown at point A in Figures 3.3 and 3.4. |

You determine that the minimum level of page faulting is defined by PFR2 for all images | For each image there is a point (shown at point B) that is the maximum size the working set needs to reach. |

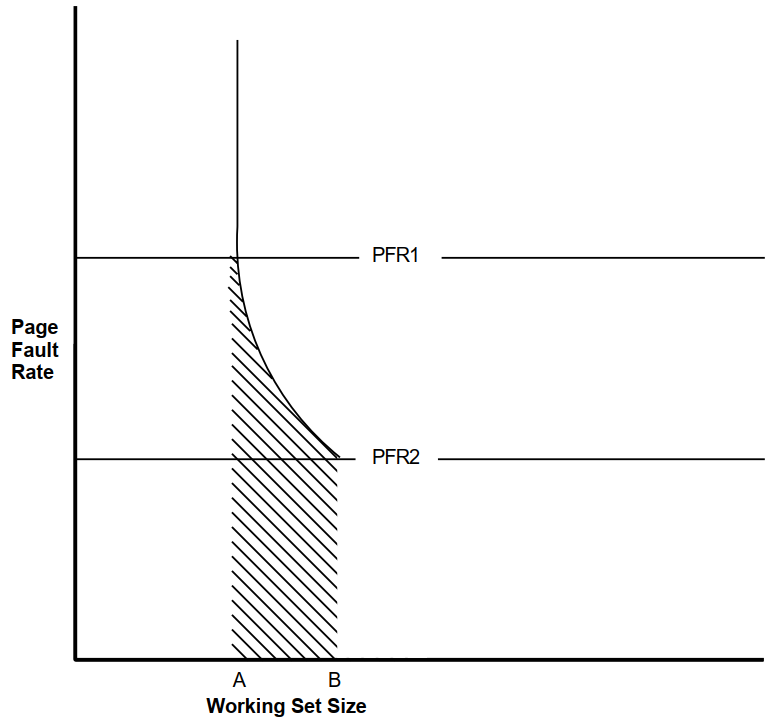

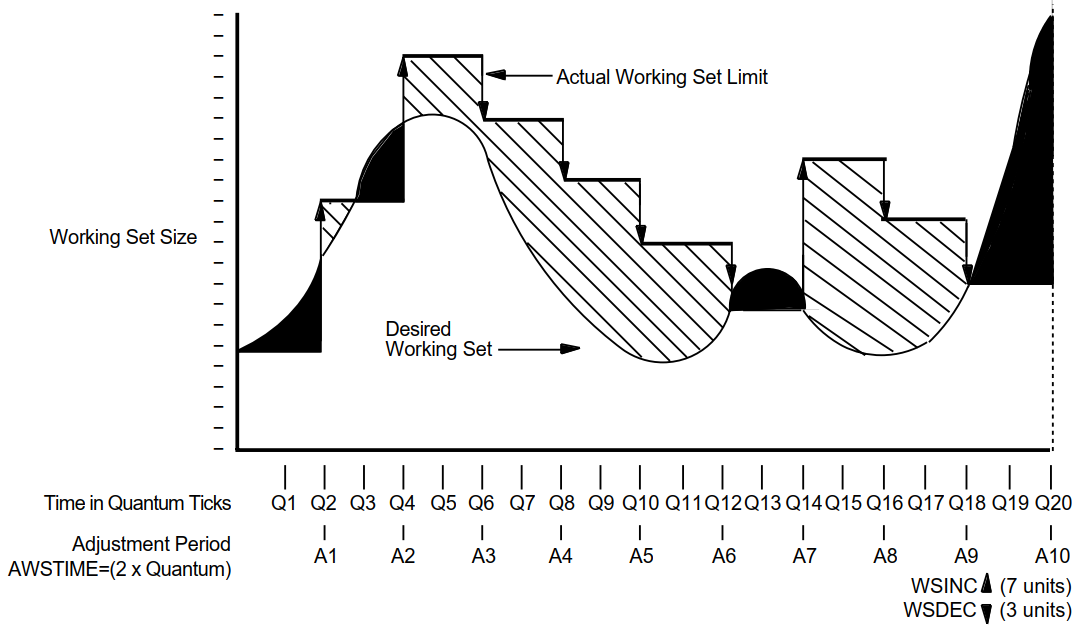

Figure 3.5, ''An Example of Working Set Adjustment at Work'' illustrates how automatic working set adjustment works over time, across the whole system, to minimize the amount of page faulting by adjusting working set sizes in balance with the amount of free memory available. The units used for AWSTIME, WSDEC, and WSINC in Figure 3.5, ''An Example of Working Set Adjustment at Work'' are for illustration; they are not recommendations.

In the figure, the shaded area identifies where paging occurs. The portion between the desired working set size and the actual working set limit (shown with cross-hatching) represents unnecessary memory overhead—an obvious instance where it costs memory to minimize page faulting.

|

3.5.6. Voluntary Decrementing

The parameters PFRATL and WSDEC, which control voluntary decrementing, are very sensitive to the application work load.

For the PFRATH and PFRATL parameters, it is possible to define values that appear to be reasonable page faulting limits but yield poor performance.

The problem results from the page replacement algorithm and the time spent maintaining the operation within the page faulting limits.

For example, for some values of PFRATL, you might observe that a process continuously page faults as its working set size grows and shrinks while the process attempts to keep its page fault rate within the limits imposed by PFRATH and PFRATL.

However, you might observe the same process running in approximately the same size working set, without page faulting once, with PFRATL turned off (set to zero).

Oscillation occurs when a process's working set size never stabilizes. To prevent the site from encountering an undesirable extreme of oscillation, the system turns off voluntary decrementing by initially setting parameter PFRATL equal to zero. You will achieve voluntary decrementing only if you deliberately turn it on.

3.5.7. Adjusting AWSA Parameters

|

Task |

Adjustment |

|---|---|

|

Enable voluntary decrementing |

Set PFRATL greater than zero. |

|

Disable borrowing |

Set WSQUOTA equal to WSEXTENT. |

|

Disable AWSA (per process) |

Enter the DCL command SET WORKING_SET/NOADJUST. |

|

Disable AWSA (systemwide) |

Set WSINC to zero. |

Note

If you plan to change any of these AWSA parameters, review the documentation for all of them before proceeding. You should also be able to explain why you want to change the parameters or the system behavior that will occur. In other words, never make whimsical changes to the AWSA parameters on a production system.

3.5.8. Caution

You can circumvent the AWSA feature by using the DCL command SET WORKING_SET/NOADJUST.

Use caution in disabling the AWSA feature, because conditions could arise that would force the swapper to trim the process back to the value of the SWPOUTPGCNT system parameter.

Once AWSA is disabled for a process, the process cannot increase its working set size after the swapper trims the process to the SWPOUTPGCNT value. If the value of SWPOUTPGNT is too low, the process is restricted to that working set size and will fault badly.

3.5.9. Performance Management Strategies for Tuning AWSA

By developing a strategy for performance management that considers the desired automatic working set adjustment, you will know when the AWSA parameters are out of adjustment and how to direct your tuning efforts.

- Rapid response—Tune to provide a rapid response whenever the load demands greater working set sizes, allowing active memory reclamation to return memory from idle processes. To implement this strategy:

Start processes off with small values for their working set defaults.

Set PFRATH low (possibly even to zero).

Set a low value for AWSTIME.

Set a relatively large value for WSINC.

Set BORROWLIM low and WSEXTENT high (even as high as WSMAX) to provide either large working set quotas or generous loans.

This is the default OpenVMS strategy where both BORROWLIM and GROWLIM are set equal to the value of FREELIM to allow maximum growth by active processes, and active reclamation is enabled to return memory idle processes.

- Less dynamic response—Tune for a less dynamic response that will stabilize and track moderate needs for working set growth. To implement this strategy:

Establish moderate values for AWSTIME, WSINC, and PFRATH. For example, set WSINC equal to approximately 10 percent of the typical value for WSDEFAULT.

Provide more generous working set defaults, so that you do not need to set BORROWLIM so low as to ensure that loans would always be granted.

The first strategy works best in the time-sharing environment where there can be wild fluctuations in demand for memory from moment to moment and where there tends to be some number of idle processes consuming memory at any moment. The second strategy works better in a production environment where the demand tends to be more predictable and far less volatile.

3.6. Swapper Trimming

The swapper process performs two types of memory management activities—swapping and swapper trimming. Swapping is writing a process to a reserved disk file known as a swapping file, so that the remaining processes can benefit from the use of memory without excessive page faulting.

To better balance the availability of memory resources among processes, the operating system normally reclaims memory through a more complicated sequence of actions known as swapper trimming.

|

Stage |

Description | |

|---|---|---|

|

1 |

The system detects too few pages (below the value of FREELIM) in the free-page list. | |

|

2 |

The system checks whether a minimum number of pages exists in the modified-page list as defined by system parameter MPW_THRESH. | |

|

If... |

Then the system... | |

|

The minimum exists in the modified-page list |

Invokes the modified-page writer to write out the modified-page list and free its pages for the free-page list. | |

|

The modified-page list does not contain enough pages to match FREEGOAL |

Does not invoke the modified-page writer, but concludes that some of the processes should be trimmed; that is, forced to relinquish some of their pages or else be swapped out. | |

Trimming takes place at two levels (at process level and systemwide) and occurs before the system resorts to swapping.

3.6.1. First-Level Trimming

The swapper performs first-level trimming by checking for processes with outstanding loans; that is, processes that have borrowed on their working set extent. Such processes can be trimmed, at the swapper's discretion, back to their working set quota.

3.6.2. Second-Level Trimming

If first-level trimming failed to produce a sufficient number of free pages, then the swapper can trim at the second level.

With second-level trimming, the swapper refers to the systemwide trimming value SWPOUTPGCNT. The swapper selects a candidate process and then trims the process back to SWPOUTPGCNT and outswaps it. If the deficit is still not satisfied, the swapper selects another candidate.

As soon as the needed pages are acquired, the swapper stops trimming on the second level.

3.6.3. Choosing Candidates for Second-Level Trimming

Because the swapper does not want to trim pages needed by an active process, it selects the processes that are candidates for second-level trimming based on their states.

Memory is always reclaimed from suspended processes before it is taken from any other processes. The actual algorithm used for the selection in each of these cases is complex, but those processes that are in either local event flag wait or hibernate wait state are the most likely candidates.

|

Stage |

Description |

|---|---|

|

1 |

The swapper compares the length of real time that a process has been waiting since entering the hibernate (HIB) or local event flag wait (LEF) state with the system parameter LONGWAIT. |

|

2 |

From its candidate list, the system selects the better processes for outswapping that have been idle for a time period equal to or greater than LONGWAIT. |

By freeing up pages through outswapping, the system should allow enough processes to satisfy their CPU requirements, so that those processes that were waiting can resume execution sooner.

Dormant process pseudoclass

The process must be a nonreal-time process whose current priority is equal to or less than the system parameter DEFPRI (default 4).

The process must be a computable process that has not had a significant event (page fault, direct or buffered I/O, CPU time allocation) within an elapsed time period defined by the system parameter DORMANTWAIT (default 10 seconds).

3.6.4. Disabling Second-Level Trimming

To disable second-level trimming, increase SWPOUTPGCNT to such a large value that second-level trimming is never permitted.

The swapper will still trim processes that are above their working set quotas back to SWPOUTPGCNT, as appropriate.

If you encounter a situation where any swapper trimming causes excessive paging, it may be preferable to eliminate second-level trimming and initiate swapping sooner. In this case, tune the swapping with the SWPOUTPGCNT parameter.

For a process with the PSWAPM privilege, you can also disable swapping and second-level trimming with the DCL command SET PROCESS/NOSWAPPING.

3.6.5. Swapper Trimming Versus Voluntary Decrementing

Swapper trimming occurs on an as-needed basis.

Voluntary decrementing occurs on a continuous basis and affects only active, computable processes.

Voluntary decrementing can reach a detrimental condition of oscillation.

The AUTOGEN command procedure, which establishes parameter values when the system is first installed, provides for swapper trimming but disables voluntary decrementing.

3.7. Active Memory Reclamation from Idle Processes

The memory management subsystem includes a policy that actively reclaims memory from inactive processes when a deficit is first detected but before the memory resource is depleted.

Long-waiting processes

Periodically waking processes

3.7.1. Reclaiming Memory from Long-Waiting Processes

A candidate process for this policy would be in the LEF or HIB state for longer than number of seconds specified by the system parameter LONGWAIT.

First-Level Trimming

By setting FREEGOAL to a high value, memory reclamation from idle processes is triggered before a memory deficit becomes crucial and thus results in a larger pool of free pages available to active processes. When a process that has been swapped out in this way must be swapped in, it can frequently satisfy its need for pages from the large free-page list.

The system uses standard first-level trimming to reduce the working set size.

Second-Level Trimming

Second-level trimming with active memory reclamation enabled occurs, but with a significant difference.

When shrinking the working set to the value of SWPOUTPGCNT, the active memory reclamation policy removes pages from the working set but leaves the working set size (the limit to which pages can be added to the working set) at its current value, rather than reducing it to the value of SWPOUTPGCNT.

In this way, when the process is outswapped and eventually swapped in, it can readily fault the pages it needs without rejustifying its size through successive adjustments to the working set by AWSA.

Swapping Long-Waiting Processes

Long-waiting processes are swapped out when the size of the free-page list drops below the value of FREEGOAL.

A candidate long-waiting process is selected and outswapped no more than once every 5 seconds.

3.7.2. Reclaiming Memory from Periodically Waking Processes

Wake periodically

Do minimal work

Return to a sleep state

Watchdog Processes

Because it has a periodically waking behavior, a watchdog process is not a candidate for swapping but might be a good candidate for memory reclamation (trimming).

For this type of process, the policy tracks the relative wait-to-execution time.

How Trimming Is Performed

When the active memory reclamation policy is enabled, standard first- and second-level trimming are not used.

When the size of the free-page list drops below twice the value of FREEGOAL, the system initiates memory reclamation (trimming) of processes that wake periodically.

If a periodically waking process is idle 99 percent of the time and has accumulated 30 seconds of idle time, the policy trims 25 percent of the pages in the process's working set as the process reenters a wait state. Therefore, the working set remains unchanged.

3.7.3. Setting the FREEGOAL Parameter

The system parameter FREEGOAL controls how much memory is reclaimed from idle processes.

Setting FREEGOAL to a larger value reclaims more memory; setting FREEGOAL to a smaller value reclaims less.

For information about AUTOGEN and setting system parameters, refer to the VSI OpenVMS System Manager's Manual, Volume 2: Tuning, Monitoring, and Complex Systems.

3.7.4. Sizing Paging and Swapping Files

Because it reclaims memory from idle processes by trimming and swapping, the active memory reclamation policy can increase paging and swapping file use.

Use AUTOGEN in feedback mode to ensure that your paging and swapping files are appropriately sized for the potential increase.

For information about sizing paging and swapping files using AUTOGEN, refer to the VSI OpenVMS System Manager's Manual, Volume 2: Tuning, Monitoring, and Complex Systems.

3.7.5. How Is the Policy Enabled?

Active memory reclamation is enabled by default.

By using the system parameter MMG_CTLFLAGS which is bit encoded, you can enable and disable proactive memory reclamation mechanisms.

|

Bit ? |

Meaning |

|---|---|

|

<0> |

If this bit is set, reclamation is enabled by trimming from periodically executing but otherwise idle processes. This occurs when the size of the free list drops below two times FREEGOAL. Otherwise, if clear, it disables it. |

|

<1> |

If this bit is set, reclamation is enabled by outswapping processes that have been idle for longer than LONGWAIT seconds. This occurs when the size of the free list drops below FREELIM. Otherwise, if clear, it disables it. |

|

<2-7> |

Reserved for future use. |

MMG_CTLFLAGS is a dynamic parameter and is affected by AUTOGEN.

3.8. Memory Sharing

Memory sharing allows multiple processes to map to (and thereby gain access to) the same pages of physical memory.

Memory sharing (either code or data) is accomplished using a systemwide global page table similar in function to the system page table.

3.8.1. Global Pages

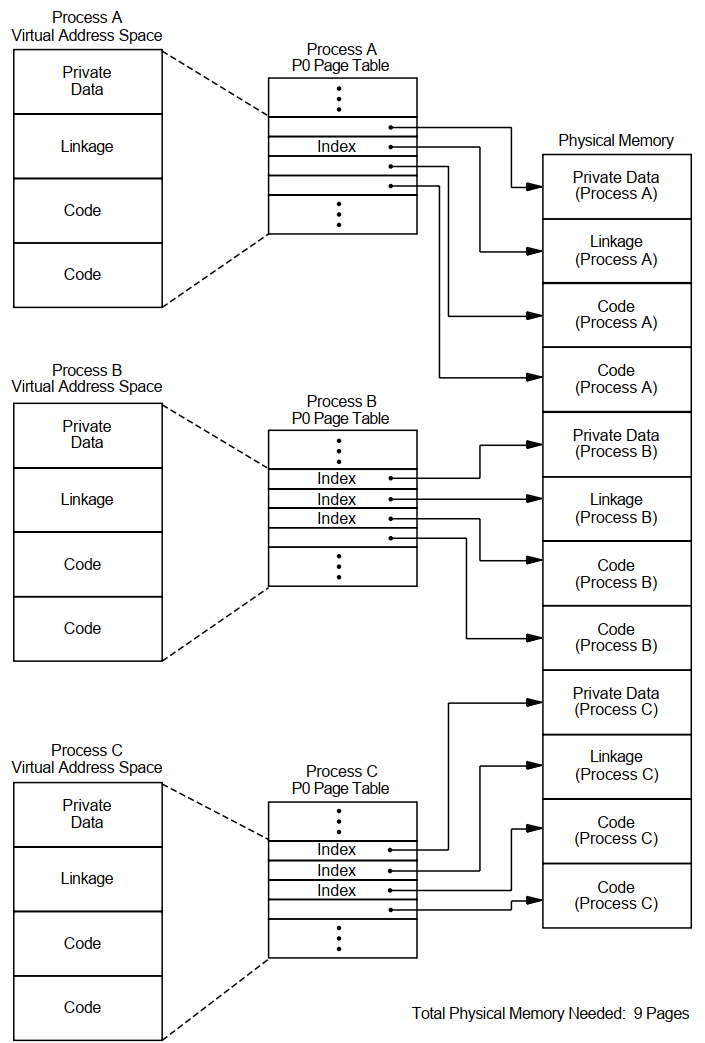

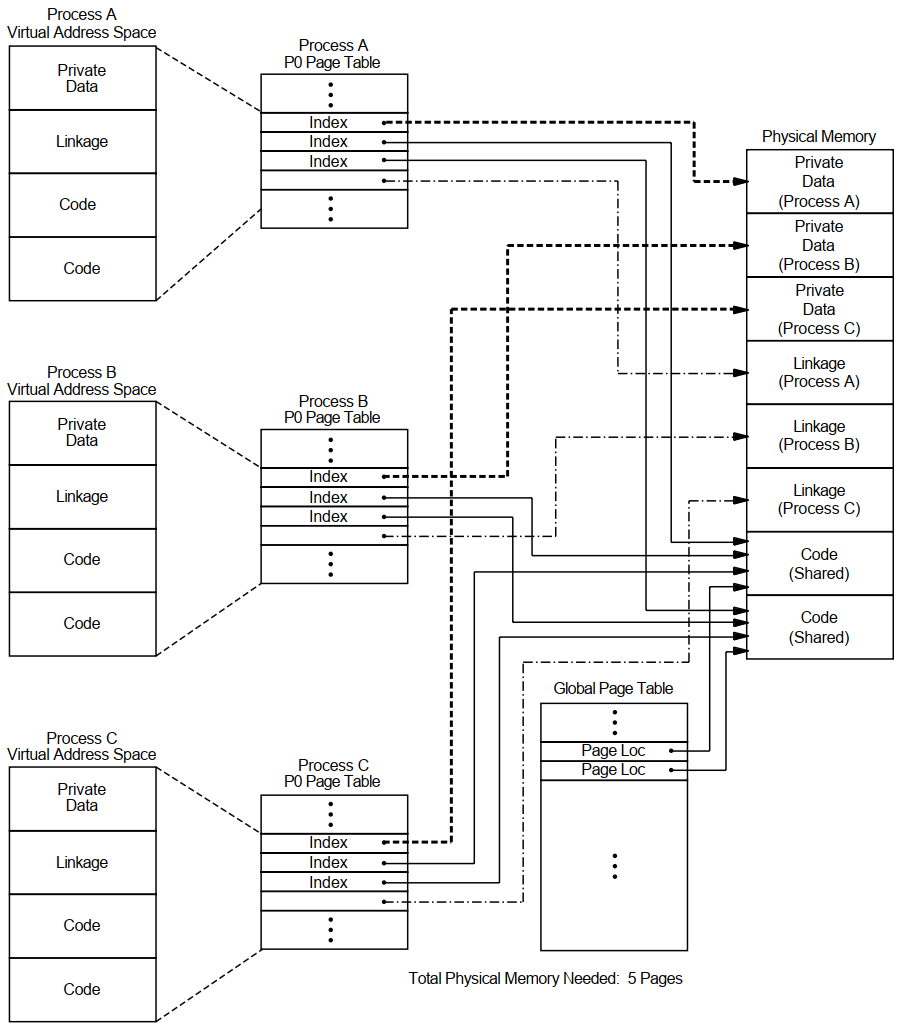

Figures 3.6 and 3.7 illustrate how memory can be conserved through the use of global (shared) pages. The three processes (A, B, and C) run the same program, which consists of two pages of read-only code and one page of writable data.

Figure 3.6, ''Example Without Shared Code'' shows the virtual-to-physical memory mapping required when each process runs a completely private copy of the program. Figure 3.7, ''Example with Shared Code'' illustrates the physical-memory gains possible and the data-structure linkage required when the read-only portion of the program is shared by the three processes. Note that each process must still maintain a private data area to avoid corrupting the data used by the other processes.

|

|

The amount of memory saved by sharing code among several processes is shown in the following formula:

For example, if 30 users share 300 pages of code, the savings are 8700 pages.

3.8.2. System Overhead

Each... | Requires a... | Allocated from the... |

|---|---|---|

Global page | Global page table entry | Global page table |

Global section | Global section table entry Global section descriptor | Global section table Paged dynamic pool |

For more information about global sections, see the VSI OpenVMS Linker Utility Manual.

3.8.3. Controlling the Overhead

GBLPAGES—Defines the size of the global page table. The system working set size as defined by SYSMWCNT must be increased whenever you increase GBLPAGES.

GBLSECTIONS—Defines the size of the global section table.

3.8.4. Installing Shared Images

Once an image has been created, it can be installed as a permanently shared image. (See the VSI OpenVMS Linker Utility Manual and the VSI OpenVMS System Manager's Manual, Volume 2: Tuning, Monitoring, and Complex Systems). This will save memory whenever there is more than one process actually mapped to the image at a time.

Also, use AUTHORIZE to increase the user's working set characteristics (WSDEF, WSQUO, WSEXTENT) wherever appropriate, to correspond to the expected use of shared code. (Note, however, that this increase does not mean that the actual memory usage will increase. Sharing of code by many users actually decreases the memory requirement.)

3.8.5. Verifying Memory Sharing

- Invoke the OpenVMS Install utility (INSTALL) and enter the LIST/FULL command. For example:

$INSTALLINSTALL>LIST/FULL LOGINOUTINSTALL displays information in the following format:DISK$AXPVMSRL4:.EXE LOGINOUT;3 Open Hdr Shar Priv Entry access count = 44 Current / Maximum shared = 3 / 5 Global section count = 2 Privileges = CMKRNL SYSNAM TMPMBX EXQUOTA SYSPRV - Observe the values shown for the Current/Maximum shared access counts:

The Current value is the current count of concurrent accesses of the known image.

The Maximum value is the highest count of concurrent accesses of the image since it became known (installed). This number appears only if the image is installed with the /SHARED qualifier.

Note

In general, your intuition, based on knowledge of the work load, is the best guide. Remember that the overhead required to share memory is counted in bytes of memory, while the savings are counted in pages of physical memory. Thus, if you suspect that there is occasional concurrent use of an image, the investment required to make it shareable is worthwhile.

3.9. OpenVMS Scheduling

The scheduler uses a modified round-robin form of scheduling: processes receive a chance to execute on rotating basis, according to process state and priority.

3.9.1. Time Slicing

A process of higher priority becomes computable

The process is no longer computable because of a resource wait

The process itself voluntarily enters a wait state

The quantum ends

If there is no other computable (COM) process at the same priority ready to execute when the quantum ends, the current process receives another time slice.

3.9.2. Process State

A change in process state causes the scheduler to reexamine which process should be allowed to run.

3.9.3. Process Priority

When required to select the next process for scheduling, the scheduler examines the priorities assigned to all the processes that are computable and selects the process with the highest priority.

Priorities are numbers from 0 to 31.

Processes assigned a priority of 16 or above receive maximum access to the CPU resource (even over system processes) whenever they are computable. These priorities, therefore, are used for real-time processes.

Processes

/PRIORITY qualifier in the UAF record

DEFAULT record in the UAF record

$SETPRI system service.

DCL command SET PROCESS/PRIORITY to reduce the priority of your process. You need ALTPRI privilege to increase the priority of your process.

A user requires GROUP or WORLD privilege to change the priority of other processes.

Subprocesses and Detached Processes

$CREPRC system service

DCL command RUN

If you do not specify a priority, the system uses the priority of the creator.

Batch Jobs

When a batch queue is created, the DCL command INITIALIZE/QUEUE/PRIORITY establishes the default priority for a job.

However, when you submit a job with the DCL command SUBMIT or change characteristics of that job with the DCL command SET QUEUE/ENTRY, you can adjust the priority with the /PRIORITY qualifier.

With either command, increases are permitted only for submitters with the OPER privilege.

3.9.4. Priority Boosting

|

Stage |

Description |

|---|---|

|

1 |

While processes run, the scheduler recognizes events such as I/O completions, the completion of an interval of time, and so forth. |

|

2 |

As soon as one of the recognized events occurs and the associated process becomes computable, the scheduler may increase the priority of that process. The amount of the increase is related to the associated event. ? |

|

3 |

The scheduler examines which computable process has the highest priority and, if necessary, causes a context switch so that the highest priority process runs. |

|

4 |

As soon as a process is scheduled, the scheduler reduces its priority by one to allow processes that have received a priority boost to begin to return to their base priority. ? |

3.9.5. Scheduling Real-Time Processes

They never receive a priority boost.

They do not experience automatic working set adjustments.

They do not experience quantum-based time slicing.

The system permits real-time processes to run until either they voluntarily enter a wait state or a higher priority real-time process becomes computable.

3.9.6. Tuning

Base priorities of processes

Length of time for a quantum

All other aspects of process scheduling are fixed by both the behavior of the scheduler and the characteristics of the work load.

3.9.7. Class Scheduler

The OpenVMS class scheduler allows you to tailor scheduling for particular applications. The class scheduler replaces the OpenVMS scheduler for specific processes. The program SYS$EXAMPLES:CLASS.C allows applications to do class scheduling.

3.9.8. Processor Affinity

You can associate a process or the initial thread of a multithreaded process with a particular processor in an SMP system. Application control is through the system services $PROCESS_AFFINITY and $SET_IMPLICIT_AFFINITY. The command SET PROCESS/AFFINITY allows bits in the affinity mask to be set or cleared individually, in groups, or all at once.

Processor affinity allows you to dedicate a processor to specific activities. You can use it to improve load balancing. You can use it to maximize the chance that a kernel thread will be scheduled on a CPU where its address translations and memory references are more likely to be in cache.

Maximizing the context by binding a running thread to a specific processor often shows throughput improvement that can outweigh the benefits of the symmetric scheduling model. Particularly in larger CPU configurations and higher-performance server applications, the ability to control the distribution of kernel threads throughout the active CPU set has become increasingly important.

Chapter 4. Evaluating System Resources

This chapter describes tools that help you evaluate the performance of the three major hardware resources—CPU, memory, and disk I/O.

Discussions focus on how the major software components use each hardware resource. The chapter also outlines the measurement, analysis, and possible reallocation of the hardware resources.

You can become knowledgeable about your system's operation if you use MONITOR, ACCOUNTING, and AUTOGEN feedback on a regular basis to capture and analyze certain key data items.

4.1. Prerequisites

It is assumed that your system is a general timesharing system. It is further assumed that you have followed the workload management techniques and installation guidelines described in Chapter 1, "Performance Management" and Section 2.4, ''Installing Frequently Used Images'', respectively.

They are designed to help you conduct an evaluation of your system and its resources, rather than to execute an investigation of a specific problem. If you discover problems during an evaluation, refer to the procedures described in Chapters 5 and 10 for further analysis.

For simplicity, they are less exhaustive, relying on certain rules of thumb to evaluate the major hardware resources and to point out possible deficiencies, but stopping short of pinpointing exact causes.

They are centered on the use of MONITOR, particularly the summary reports, both standard and multifile.

Note

Some information in this chapter may not apply to certain specialized types of systems or to applications such as workstations, database management, real-time operations, transaction processing, or any in which a major software subsystem is in control of resources for other processes.

4.2. Guidelines

You should exercise care in selecting the items you want to measure and the frequency with which you capture the data.

If you are overzealous, the consumption of system resources required to collect, store, and analyze the data can distort your picture of the system's work load and capacity.

Complete the entire evaluation. It is important to examine all the resources in order to evaluate the system as a whole. A partial examination can lead you to attempt an improvement in an area where it may have minimal effect because more serious problems exist elsewhere.

Become as familiar as possible with the applications running on your system. Get to know their resource requirements. You can obtain a lot of relevant information from the ACCOUNTING image report shown in Example 4.1, ''Image-Level Accounting Report''. VSI and third-party software user's guides can also be helpful in identifying resource requirements.

- If you believe that a change in software parameters or hardware configuration can improve performance, execute such a change cautiously, being sure to make only one change at a time. Evaluate the effectiveness of the change before deciding to make it permanent.

Note

When specific values or ranges of values for MONITOR data items are recommended, they are intended only as guidelines and will not be appropriate in all cases.

4.3. Collecting and Interpreting Image-Level Accounting Data

Image-level accounting is a feature of ACCOUNTING that provides statistics and information on a per-image basis.

By knowing which images are heavy consumers of resources at your site, you can better direct your efforts of controlling them and the resources they consume.