VSI OpenVMS Record Management Utilities Reference Manual

- Operating System and Version:

- VSI OpenVMS IA-64 Version 8.4-1H1 or higher

VSI OpenVMS Alpha Version 8.4-2L1 or higher

Preface

1. About VSI

VMS Software, Inc. (VSI) is an independent software company licensed by Hewlett Packard Enterprise to develop and support the OpenVMS operating system.

2. Intended Audience

This manual is intended for all programmers who use OpenVMS RMS data files, including high-level language programmers who use only their language's input and output statements.

3. Document Structure

Description – Provides a full description of the Analyze/RMS_File utility.

- Usage Summary – Outlines the following ANALYZE/RMS_FILE information:

- –Invoking the utility

- –Exiting from the utility

- –Directing output

- –Copying and analyzing shared files

Qualifiers – Describes ANALYZE/RMS_FILE qualifiers, including format, parameters, and examples.

Commands – Describes ANALYZE/RMS_FILE commands, including format, parameters, and examples.

Examples – Provides additional ANALYZE/RMS_FILE examples.

Description – Provides a full description of the Convert utility.

- Usage Summary – Outlines the following CONVERT information:

- –Invoking the utility

- –Exiting from the utility

- –Directing output

- –Executing commands over a network

Qualifiers – Describes the CONVERT qualifiers, including format, parameters, and examples.

Examples – Provides additional CONVERT examples.

Description – Provides a full description of the Convert/Reclaim utility.

- Usage Summary – Outlines the following CONVERT/RECLAIM information:

- –Invoking the utility

- –Exiting from the utility

- –Directing output

- –Executing commands over a network

Qualifiers – Describes the CONVERT/RECLAIM qualifier, /STATISTICS.

Chapter 4, "File Definition Language Facility" describes in detail the File Definition Language facility (FDL). This chapter provides an overview and detailed descriptions about the File Definition Language. It describes in detail each file section and associated file attributes.

Description – Provides a description of the utility.

- Usage Summary – Outlines the following information:

- –Invoking the utility

- –Exiting from the utility

Qualifiers – Describes the sole CREATE/FDL qualifier, /STATISTICS.

Examples – Provides additional examples of implementing CREATE/FDL.

Description – Provides a description of the Edit/FDL utility.

- Usage Summary – Outlines the following EDIT/FDL information:

- –Invoking the utility

- –Exiting from the utility

Qualifiers – Describes the EDIT/FDL commands and qualifiers, together with related examples.

Examples – Provides additional examples of implementing EDIT/FDL.

4. Related Documents

VSI OpenVMS Record Management Services Reference Manual

RMS Journaling for OpenVMS Manual

5. VSI Encourages Your Comments

You may send comments or suggestions regarding this manual or any VSI document by sending electronic mail to the following Internet address: <docinfo@vmssoftware.com>. Users who have VSI OpenVMS support contracts through VSI can contact <support@vmssoftware.com> for help with this product.

6. OpenVMS Documentation

The full VSI OpenVMS documentation set can be found on the VMS Software Documentation webpage at https://docs.vmssoftware.com.

7. Conventions

| Convention | Meaning |

|---|---|

|

Ctrl/ x |

A sequence such as Ctrl/x indicates that you must hold down the key labeled Ctrl while you press another key or a pointing device button. |

|

PF1 x |

A sequence such as PF1 x indicates that you must first press and release the key labeled PF1 and then press and release another key or a pointing device button. |

|

Return |

In examples, a key name enclosed in a box indicates that you press a key on the keyboard. (In text, a key name is not enclosed in a box.) |

... |

A horizontal ellipsis in examples indicates one of the

following possibilities:

|

. . . |

A vertical ellipsis indicates the omission of items from a code example or command format; the items are omitted because they are not important to the topic being discussed. |

|

( ) |

In command format descriptions, parentheses indicate that you must enclose the options in parentheses if you choose more than one. |

|

[ ] |

In command format descriptions, brackets indicate optional choices. You can choose one or more items or no items. Do not type the brackets on the command line. However, you must include the brackets in the syntax for OpenVMS directory specifications and for a substring specification in an assignment statement. |

|

[ |] |

In command format descriptions, vertical bars separate choices within brackets or braces. Within brackets, the choices are options; within braces, at least one choice is required. Do not type the vertical bars on the command line. |

|

{ } |

In command format descriptions, braces indicate required choices; you must choose at least one of the items listed. Do not type the braces on the command line. |

|

bold text |

This typeface represents the introduction of a new term. It also represents the name of an argument, an attribute, or a reason. |

|

italic text |

Italic text indicates important information, complete titles of manuals, or variables. Variables include information that varies in system output (Internal error number), in command lines (/PRODUCER= name), and in command parameters in text (where dd represents the predefined code for the device type). |

|

UPPERCASE TEXT |

Uppercase text indicates a command, the name of a routine, the name of a file, or the abbreviation for a system privilege. |

|

|

Monospace type indicates code examples and interactive screen displays. In the C programming language, monospace type in text identifies the following elements: keywords, the names of independently compiled external functions and files, syntax summaries, and references to variables or identifiers introduced in an example. |

|

- |

A hyphen at the end of a command format description, command line, or code line indicates that the command or statement continues on the following line. |

|

numbers |

All numbers in text are assumed to be decimal unless otherwise noted. Nondecimal radixes—binary, octal, or hexadecimal—are explicitly indicated. |

Chapter 1. Analyze/RMS_File Utility

The Analyze/RMS_File utility (ANALYZE/RMS_FILE) allows you to examine, either with or without an interactive terminal dialogue, the internal structure of a Record Management Services (RMS) file. The Analyze/RMS_File utility can check the structure of the file for errors, generate a statistical report on the structure and use of the file, or generate a File Definition Language (FDL) file from a data file.

The Analyze/RMS_File utility also provides information about RMS Journaling for OpenVMS (RMS journaling) files marked for after-image journaling, before-image journaling, and, where applicable, information about the state of recovery units affecting RMS journaling files.

The Analyze/RMS_File utility provides a set of commands that you may use to analyze a file interactively.

FDL files created with the Analyze/RMS_File utility have special sections that contain statistics about the structure of the specified data file. You can use FDL files created with the Analyze/RMS_File utility in conjunction with other Record Management

Support a Files-11 volume structure, On-Disk Structure Level 5 (ODS-5), that provides a volume structure for creating and storing files with expanded file names

Support additional character sets for naming files from file names that use the 8-bit ISO Latin-1 character set to the 16-bit Unicode (UCS-2) character set

Support extended file names with file specifications exceeding the traditional 39.39 character limit up to a maximum of 255 characters

Support preserving the case of file specifications created with the ODS-5 attributes

Support deep, multilevel directory structures up to a maximum of 512 characters

1.1. Analyzing RMS File Structure Interactively

One of the most useful features of the Analyze/RMS_File utility is its interactive mode. You enter the interactive mode by specifying the /INTERACTIVE qualifier to the ANALYZE/RMS_FILE command; you then begin an interactive session, during which you can examine the structure of an RMS file. Enter the HELP command at the ANALYZE> prompt for help about the various commands available to you for traversing the file.

The Analyze/RMS_File utility treats the internal RMS file as a multilevel entity. All RMS files are identical, relative to the first two levels. Level 1 contains the file header and level 2 contains the file attributes.

Files marked for RMS journaling, level 2, include information relative to before-image journaling and after-image journaling, where applicable.

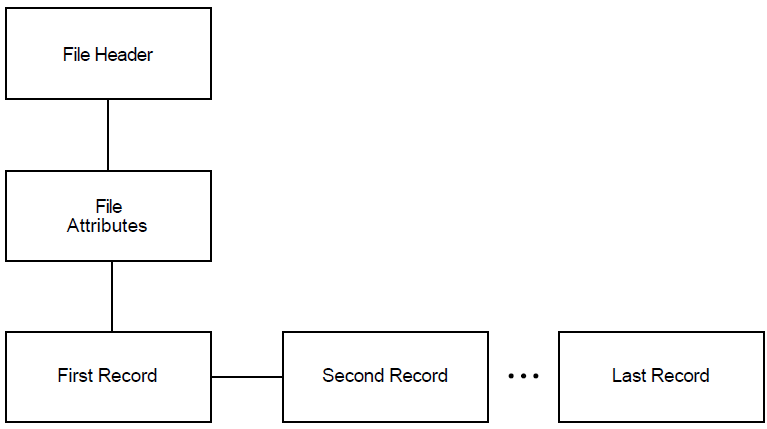

Some differentiation occurs at level 3. For sequential files, level 3 is the lowest level and it contains data records (see Figure 1.1, ''Structure of Sequential Files'') that ANALYZE/RMS_FILE can display individually. For relative files and indexed files, level 3 contains the file prolog.

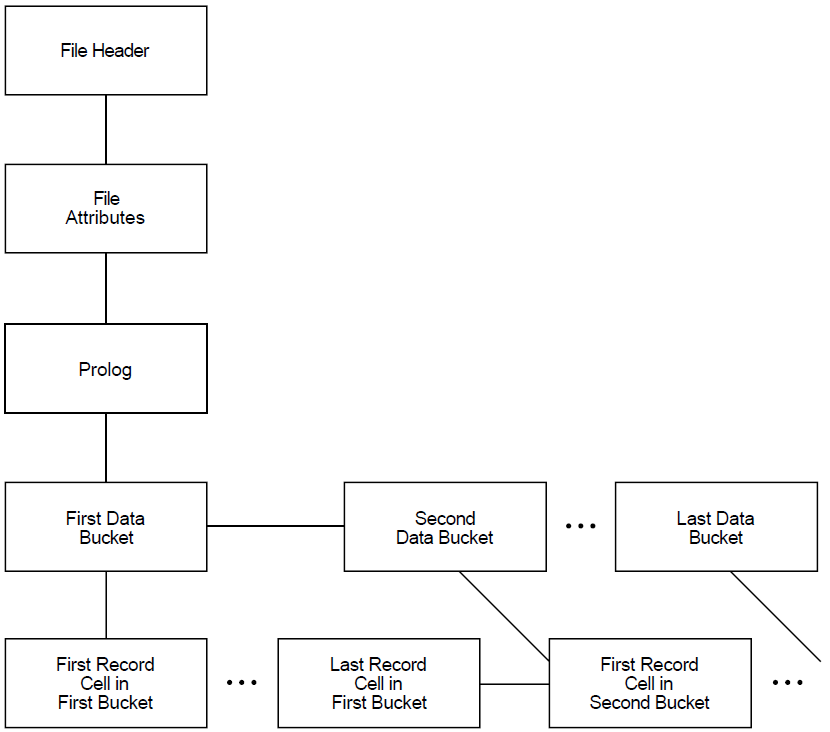

For relative files, level 4 contains data buckets that are accessible individually (see Figure 1.2, ''Structure of Relative Files''). Using the Analyze/RMS_File utility, you can view the contents of each individual data bucket.

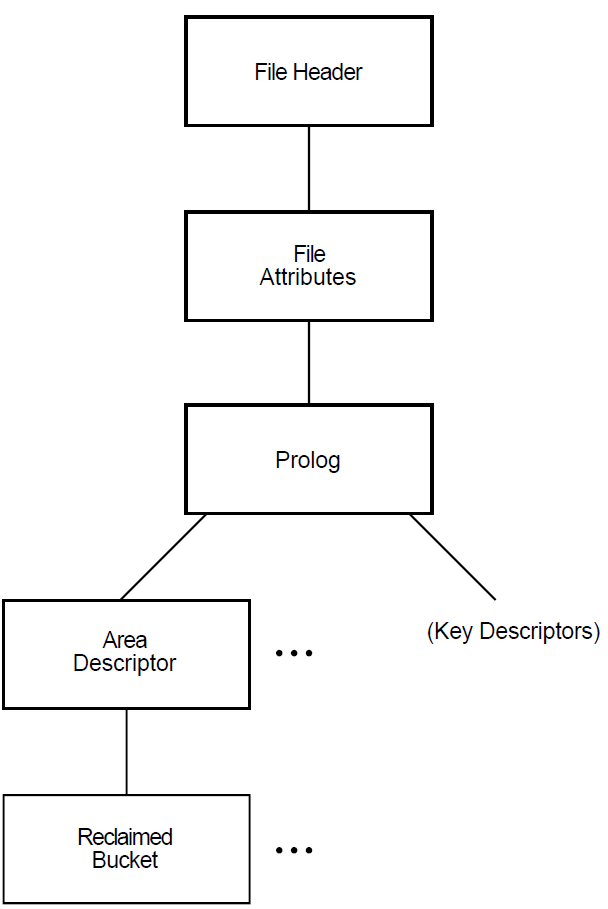

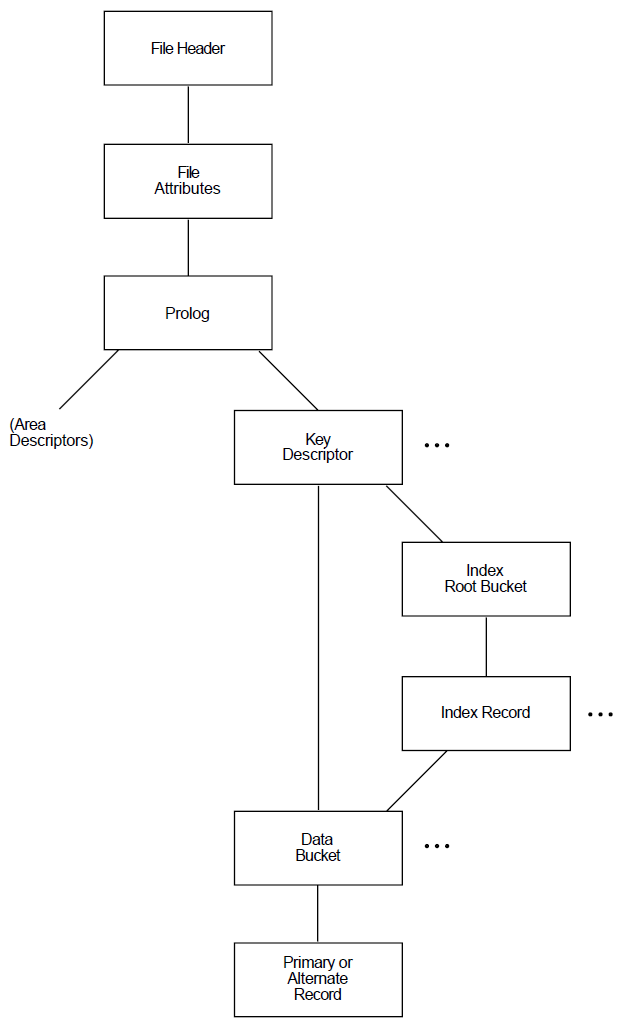

For indexed files, the Analyze/RMS_File utility presents you with two options at level 4: you can proceed down the path that begins with a level of area descriptors (see Figure 1.3, ''AREA DESCRIPTOR Path'') or you can choose the path that begins with a level of key descriptors (see Figure 1.4, ''KEY DESCRIPTOR Path'').

Level 5 is the final level in a relative file. This level contains the record cells that are accessible individually. For indexed files, the contents of level 5 depend on whether you have chosen the area descriptor path or the key descriptor path:

If you select the area descriptor path, level 5 is the lowest level and it contains reclaimed data records—that is, records that are effectively empty and are available for storing new data.

If you select the key descriptor path, the Analyze/RMS_File utility gives you the option of either viewing the index root bucket or the data bucket for the selected key, or traversing the level laterally and viewing another key.

When you choose to view the index root bucket, the next level down contains the index record for the selected key. After you view the index record, the Analyze/RMS_File utility provides you with direct access to the first data bucket for the selected key.

At the data bucket level, you can either view the data record or traverse the data level laterally and select another data bucket for the selected key.

The structure of an indexed file is more complicated than that of sequential and relative files. From the PROLOG level, the structure branches to the AREA DESCRIPTORs and the KEY DESCRIPTORs. Each AREA DESCRIPTOR describes the attributes and the virtual block numbers for the different file areas. The KEY DESCRIPTOR path contains the primary index structures (and data records) as well as the alternate index structures.

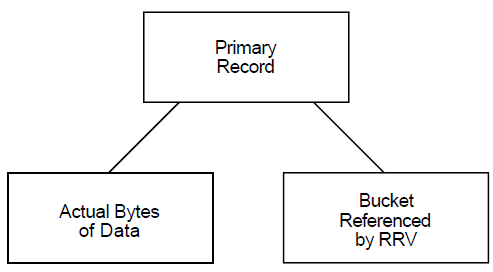

There are two types of record structures: primary records and alternate records. If you follow the primary index structure (key = 0), you find the primary record structures, which contain the actual data records (see Figure 1.5, ''Structure of Primary Records''). You can examine the actual bytes of data in the record. If the record has been moved to another bucket as a result of a bucket split, you can examine the bucket to which the record reference vector (RRV) points. An RRV is a forwarding pointer that a record leaves behind in its former bucket location when it moves to a new bucket.

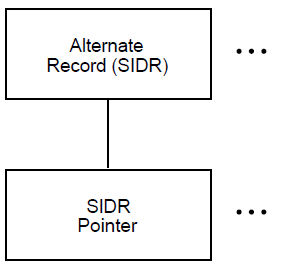

If you follow any of the alternate index structures, you find the alternate record structures, which contain the secondary index data records (SIDRs). A SIDR consists of an alternate key value and one or more pointers to the actual data records in the primary index structure (see Figure 1.6, ''Data Buckets in the Alternate Index Structures'').

1.2. Using the Analyze/RMS_File Utility with DECnet for OpenVMS

The ANALYZE/RMS_FILE command is supported only for the examination of files accessible to OpenVMS RMS or RMS–11.

You use the ANALYZE/RMS_FILE command over a network to analyze the internal structure of a remote file in exactly the same way that you use it to analyze the internal structure of a local file. For example, you can specify the /FDL qualifier to generate an FDL file from the data file. Using other qualifiers, you can check the file structure for errors, generate a statistical report on the file's structure and use, or enter interactive mode to explore the structure of the file. However, you can specify only one of these qualifiers in each command.

Note that you need the NETMBX privilege to execute the ANALYZE/RMS_FILE command over a network.

1.3. Handling Error Conditions

You handle the Analyze/RMS_File utility errors for two general error categories: nonjournaling errors and journaling errors. Even if you do not have RMS Journaling software, you may find that you have imported files marked for RMS Journaling from another system or from other nodes within an OpenVMS Cluster.

1.3.1. Nonjournaling Errors

If you receive any of the Analyze/RMS_File utility error messages while analyzing a file interactively, the file has been corrupted by a serious error. Note that the Analyze/RMS_File utility errors are not listed in the OpenVMS system messages documentation because in all cases the user response to errors signaled by the Analyze/RMS_File utility is identical, as described in the following paragraphs.

If the application program encounters errors during noninteractive analysis, the Analyze/RMS_File utility returns to the program, as exit status, the first occurrence of the most severe error it encounters. For example, if a warning (A) and two errors (B and C) are signaled, then the first error (B) is placed in the DCL symbol $STATUS at image exit.

If you have had a hardware problem (for example, a power or disk head failure), then the hardware most likely caused the corruption.

If you have no hardware problems, then a software error may have been introduced through either the user software or the system software. First, verify the user software and computer operations. Possibly, data files may have been corrupted by a process being stopped abnormally; for example, if a STOP/ID or DELETE/ENTRY occurs in the midst of data processing.

One test of whether the problem is in the system software is to note the situations where errors occur. For example, if a particular application uses an unusual I/O sequence that seems to result in file corruption, it may be that the problem is in the system software. In a situation like this, you should attempt to reproduce the problem and note precisely the I/O sequence. This information, together with appropriate supporting information, should be submitted with a Software Performance Report (SPR).

In either case, try to fix the problem with the Convert utility, using the same file specification for both the input file and the output file. If this procedure does not yield the result you want, use the Backup utility to restore a backup copy of the file.

1.3.2. Journaling Errors

If RMS Journaling software is not installed on your system and you attempt to write to a file marked for journaling, the system issues the following error message:

%RMS-F-JNS, operation not supported by RMS Journaling

If RMS Journaling software is installed and you receive this message, you attempted an operation that is not supported by RMS Journaling. For more information on handling RMS Journaling errors, see the RMS Journaling for OpenVMS Manual.

To turn off journaling in either case, use the following DCL command:

$ SET FILE/NOAI_JOURNAL/NOBI_JOURNAL/NORU_JOURNAL

Note that the SET FILE commands for turning off journaling are available to users who do not have RMS Journaling software as well as to users who do. Another error condition may occur if you import a file marked for recovery-unit journaling that has active recovery units. This can happen when a file is not recovered properly before the volume is moved to your system.

If you try to back up the file, RMS issues the following error message:

%BACKUP-E-OPENIN, error opening DISK$DATA:[USER]FILE.DAT;1 as input -SYSTEM-F-RUCONFLICT, another facility has active recovery units on file

To turn off the active recovery units, use the following DCL command:

$SET FILE/RU_FACILITY=RMS/NORU_ACTIVE

Note that this may leave the file in an inconsistent state with respect to recovery units because active recovery units are not rolled back (aborted).

1.4. Using the ANALYZE/RMS_FILE Utility

ANALYZE/RMS_FILE Usage Summary

ANALYZE/RMS_FILE Usage Summary — The Analyze/RMS_File utility (ANALYZE/RMS_FILE) allows you to examine the internal structure of an RMS file by performing the following functions: checking the structure of a file for errors; generating a statistical report on the file's structure and use; entering an interactive mode through which you can explore a file's structure. This analysis can determine whether the file is properly designed for its application and can point out improvements to make in the file's File Definition Language (FDL) specification; generating an FDL file from a data file; generating a summary report on the file's structure and use; generating information related to the file's journaling status.

Format

ANALYZE/RMS_FILE filespec[,...]

- filespec[,...]

Specifies the data file to be analyzed. The default file type is .DAT. You can use multiple file specifications and wildcard characters with the /CHECK qualifier, the /RU_JOURNAL qualifier, the /STATISTICS qualifier, and the /SUMMARY qualifier, but not with the /FDL qualifier or the /INTERACTIVE qualifier.

Usage Summary

Invoke the utility by entering the ANALYZE/RMS_FILE command at the DCL command level. This command can perform only one of the utility functions at a time; in other words, you must enter a new ANALYZE/RMS_FILE command each time you choose a different function.

If you specify the /INTERACTIVE qualifier, exit the Analyze/RMS_File utility by entering the EXIT command. Otherwise, let the utility run to successful completion.

If the Analyze/RMS_File utility terminates with an error message, you should try converting the file and then running the utility again. If the error condition persists, verify the integrity of the hardware and software. If the hardware and software appear to be functioning properly, submit a Software Performance Report (SPR) about the condition.

You can control the Analyze/RMS_File utility output by using the /OUTPUT qualifier. For a more detailed explanation of the /OUTPUT qualifier, refer to the ANALYZE/RMS_FILE Qualifiers section.

During the time that you are using the Analyze/RMS_File utility to examine the system authorization file (SYSUAF.DAT), you prevent other users from logging in to the system. Similarly, while you are analyzing your mail file, you cannot receive mail. So if you need to analyze these or other shared files, you may want to make a copy of the file and analyze the copy to avoid this problem.

Note

If you want to analyze files over a network, you need the NETMBX privilege. If you want to analyze journal files using the /RU_JOURNAL qualifier, you must have CMEXEC privilege and you must have access to the [SYSJNL] directory.

ANALYZE/RMS_FILE Qualifiers

ANALYZE/RMS_FILE Qualifiers — This section describes the ANALYZE/RMS_FILE qualifiers and how you use them to select the utility functions. Unless otherwise noted, these qualifiers do not take a qualifier value.

/CHECK

/CHECK — Checks the integrity of the file and generates a report of any errors in its structure.

Format

/CHECK

Description

The report produced by the /CHECK qualifier includes a list of any errors and a summary of the file's structure. If you do not specify an output file, the report is written to the current SYS$OUTPUT device, which is generally your terminal. You can use wildcards and multiple file specifications. If you specify /NOOUTPUT, no report is generated; instead, you only get a message indicating whether the file has errors.

The check function is active by default when you use the ANALYZE/RMS_FILE command without any qualifiers. The /CHECK qualifier is not compatible with the /FDL qualifier, the /INTERACTIVE qualifier, the /STATISTICS qualifier, or the /SUMMARY qualifier. If /CHECK is used with any of the other qualifiers, /FDL takes precedence, next /INTERACTIVE, then /STATISTICS, and lastly /SUMMARY.

Example

$ANALYZE/RMS_FILE/CHECK CUSTFILE

This command checks the file CUSTFILE.DAT for errors and displays the report on the terminal.

/FDL

/FDL — Generates an FDL file describing the RMS data file being analyzed.

Format

/FDL

Description

By default, the /FDL qualifier creates a file with the file type .FDL and the same file name as the input data file. To assign a different type or name to the FDL file, use the /OUTPUT qualifier. If the data file is corrupted, the FDL file contains the Analyze/RMS_File utility error messages.

For indexed files, the FDL file contains special analysis sections you can use with the EDIT/FDL Optimize script to make better design decisions when you reorganize the file. For more information on these special analysis sections, see Chapter 4, "File Definition Language Facility".

You cannot use wildcards or multiple file specifications with the /FDL qualifier. The /FDL qualifier is not compatible with the /CHECK qualifier, the /INTERACTIVE qualifier, the /STATISTICS qualifier, the /SUMMARY qualifier, or the /UPDATE_HEADER qualifier. The /FDL qualifier takes precedence over all other qualifiers.

Example

$ANALYZE/RMS_FILE/FDL ADDRFILE

This command generates an FDL file named ADDRFILE.FDL from the data file ADDRFILE.DAT.

/INTERACTIVE

/INTERACTIVE — Begins an interactive examination of the file's structure. You cannot use wildcards or multiple file specifications. For help with the interactive commands, enter the HELP command at the ANALYZE> prompt. Do not use this qualifier with the /CHECK, /FDL, /STATISTICS, /SUMMARY, or /UPDATE_HEADER qualifiers. If used with the /FDL qualifier, the /FDL takes precedence; all other qualifiers are ignored when used with /INTERACTIVE. For a list of interactive commands, see the ANALYZE/RMS_FILE Commands section.

Format

/INTERACTIVE

Example

$ ANALYZE/RMS_FILE/INTERACTIVE SUPPLIERS.DAT

This command begins an interactive session during which you can examine the structure of the data file SUPPLIERS.DAT.

/OUTPUT

/OUTPUT — Identifies the destination file for the results of the analysis. The /NOOUTPUT qualifier specifies that no output file is to be created. In all cases, the Analyze/RMS_File utility displays a message indicating whether the data file has errors.

Format

/OUTPUT [=output-filespec]

/NOOUTPUT

Qualifier Value

output-filespec

Identifies the output file for the results of the analysis. The use of the output file depends on which of the other qualifiers you specify.

|

/CHECK |

Places the integrity report in the output file. The default file type is .ANL, and the default file name is ANALYZE. If you omit the output-filespec parameter, output is written to the current SYS$OUTPUT device, which is generally your terminal. |

|

/FDL |

Places the resulting FDL specification in the output file. The default file type is .FDL, and the default file name is that of the input file. |

|

/INTERACTIVE |

Places a transcript of the interactive session in the output file. The default file type is .ANL, and the default file name is ANALYZE. If you omit the output-filespec parameter, no transcript of your interactive session is produced. |

|

/RU_JOURNAL |

Places the recovery-unit journal information in the output file. The default file type is .ANL, and the default file name is ANALYZE. If you omit the output-filespec parameter, output is written to the current SYS$OUTPUT device, which is generally your terminal. |

|

/STATISTICS |

Places the statistics report in the output file. The default file type is .ANL, and the default file name is ANALYZE. If you omit the output-filespec parameter, output is written to the current SYS$OUTPUT device, which is generally your terminal. |

|

/SUMMARY |

Places the summary report in the output file. The default file type is .ANL, and the default file name is ANALYZE. If you omit the output-filespec parameter, output is written to the current SYS$OUTPUT device, which is generally your terminal. |

Examples

$ANALYZE/RMS_FILE/STATISTICS/OUTPUT=.TXT SEQ.ADDThis command generates a statistics report named ANALYZE.TXT from the data file SEQ.ADD.

$ANALYZE/RMS_FILE/NOOUTPUT/CHECK PARTS_INVENTORY.DATThis command checks the structure of the data file PARTS_INVENTORY.DAT. No output is produced except the message telling whether the data file contains errors.

/RU_JOURNAL

/RU_JOURNAL — Provides information about recovery-unit journaling where applicable.

Format

/RU_JOURNAL

Description

You can use the /RU_JOURNAL qualifier on any file, but it is inoperative on files not marked for recovery-unit journaling.

This qualifier provides the only way of accessing a file that would otherwise be inaccessible because of unresolved recovery units. This situation might be the result of an unavailable recovery-unit journal file or of unavailable data files that were included in the recovery unit.

To use the /RU_JOURNAL qualifier, your process must have both CMEXEC privilege and access to the [SYSJNL] directory (either SYSPRV privilege or access for UIC [1,4]).

This qualifier is compatible with all of the ANALYZE/RMS_FILE qualifiers, and you can use it with wildcards and multiple file specifications.

The journal file specification and the journal creation date

The recovery-unit identification, recovery-unit start time, cluster system identification number (CSID), and process identification (PID)

Information about the files involved in the recovery unit, including the file specification, the name of the volume where the file resides, the file identification, the date and time the file was created, and the current status of the file

The state of the recovery unit — active, none, started, committed, or not available (for more information, see the RMS Journaling for OpenVMS Manual)

An error statement

Example

$ANALYZE/RMS_FILE/RU_JOURNAL SAVINGS.DAT

This command generates information regarding the journaling status of the data file SAVINGS.DAT.

/STATISTICS

/STATISTICS — Specifies that a report is to be produced containing statistics about the file.

Format

/STATISTICS

Description

The /STATISTICS qualifier is used mainly on indexed files.

By default, if you do not specify an output file with the /OUTPUT qualifier, the statistics report is written to the current SYS$OUTPUT device, which is generally your terminal.

The /STATISTICS qualifier is not compatible with the /CHECK qualifier, the /FDL qualifier, the /INTERACTIVE qualifier, or the /SUMMARY qualifier. If /STATISTICS is used with any other qualifiers, /FDL takes precedence, and then /INTERACTIVE; all other qualifiers are ignored. The /STATISTICS qualifier does an implicit check.

Example

$ ANALYZE/RMS_FILE/STATISTICS SEQ.DAT

This command generates a statistics report from the data file SEQ.DAT and displays the report on the current SYS$OUTPUT device, which is generally your terminal.

/SUMMARY

/SUMMARY — Specifies that a summary report is to be produced containing information about the file's structure and use.

Format

/SUMMARY

Description

The /SUMMARY qualifier generates a summary report containing information about the file's structure and use.

If the file has no errors, the output generated from the /SUMMARY qualifier is identical to that produced by the /CHECK qualifier. Unlike the /CHECK qualifier, however, the /SUMMARY qualifier does not check the structure of your file, so output is generated more quickly.

Do not use this qualifier with the /CHECK qualifier, the /FDL qualifier, the /INTERACTIVE qualifier, the /STATISTICS qualifier, or the /UPDATE_HEADER qualifier. If /SUMMARY is used with any other qualifiers, /FDL takes precedence, next /INTERACTIVE, and then /STATISTICS.

Example

$ANALYZE/RMS_FILE/SUMMARY INVENTORY.DAT

This command generates a summary report from the data file INVENTORY.DAT and displays the report on the current SYS$OUTPUT device, which is generally your terminal.

/UPDATE_HEADER

/UPDATE_HEADER — Attempts to update the following attributes in the header of the file: longest record length (LRL) and/or file length hint attribute. You must use this qualifier in combination with either /STATISTICS or /CHECK (the default). This qualifier only applies to sequential file organizations and is ignored for any other file organization.

Format

/UPDATE_HEADER ALL is default if qualifier is specified with no

values

/UPDATE_HEADER=ALL Both LRL and HINT

/UPDATE=([LRL],[HINT])

Description

The /UPDATE_HEADER qualifier attempts to update the LRL and/or file hint attribute in the file header if the calculated value(s) differ from the current value(s) in the file header. The /UPDATE_HEADER qualifier applies to:

An LRL request - if the file is sequential and has a record format other than undefined (UDF).

A HINT request - if the file is sequential, the record format is either variable (VAR) or variable with fixed control (VFC), and the file is located on an ODS-5 disk device.

It is not supported for remote accesses; requests are ignored.

The /UPDATE_HEADER qualifier requires either the STATISTICS or CHECK (default) functions since calculating new values for the LRL and/or file length hint presumes that all the records in the sequential file are processed. It is not compatible with the /FDL qualifier, the /INTERACTIVE qualifier, or the /SUMMARY qualifier.

Any errors returned by the file system when an attempt to update the file header fails are ignored. If the update succeeds, the updated values are displayed at the end of the report.

Example

$ ANALYZE/RMS_FILE/UPDATE_HEADER=HINT A.A

FILE HEADER

File Spec: DISK$REGRES:[REGRES]A.A;3

...

RMS FILE ATTRIBUTES

File Organization: sequential

Record Format: variable

Record Attributes: carriage-return

Maximum Record Size: 0

Longest Record: 52

Blocks Allocated: 4, Default Extend Size: 0

End-of-File VBN: 1, Offset: %X'008E'

File Monitoring: disabled

File Length Hint (Record Count): 6 (invalid)

File Length Hint (Data Byte Count): 42 (invalid)

Global Buffer Count: 0

The analysis uncovered NO errors.

UPDATED File Length Hint (Record Count) to: 10

UPDATED File Length Hint (Data Byte Count) to: 118

ANALYZE/RMS_FILE/UPDATE_HEADER=HINT A.AANALYZE/RMS_FILE Commands

ANALYZE/RMS_FILE Commands — This section describes the Analyze/RMS_File utility commands that you use in the interactive mode. Unless otherwise noted, these commands do not take parameters or qualifiers. In the interactive mode, you use various commands to move through the file structure, examining its various components. Interactive sessions always begin at the FILE HEADER level.

AGAIN

AGAIN — Redisplays the structure you are currently viewing.

Format

AGAIN

Example

FIXED PROLOGNumber of Areas: 8, VBN of First Descriptor: 3Prolog Version : 3ANALYZE>AGAINFIXED PROLOGNumber of Areas: 8, VBN of First Descriptor: 3Prolog Version : 3

This command redisplays the FIXED PROLOG structure.

BACK

BACK — Displays a previous structure at the current level, if one exists.

Format

BACK [n]

Parameter

n

Specifies the number of times that the structure pointer moves back.

Description

You can use the optional parameter n instead of entering multiple

BACK commands. For example, the command BACK 6 has the same effect as six BACK

commands.

Examples

ANALYZE>BACKThis command displays the previous structure at the current level. For example, if you are currently viewing the second key descriptor of the primary key, this command displays the primary key descriptor.

ANALYZE>BACK 3This command displays the third structure back at the current level.

DOWN

DOWN — Moves the structure pointer down to the next level. From the FILE HEADER level, the first command you enter is the DOWN command, which moves the structure pointer to the FILE ATTRIBUTE level.

Format

DOWN [branch]

Parameter

branch

Specifies the branch you want to follow when the current level has several branches. If there are several branches from the current level and you do not specify a value for the branch parameter, the Analyze/RMS_File utility prompts you by displaying a list of possible branches.

You can also use a question mark after the DOWN command to obtain a list of the possible branches.

Example

ANALYZE> DOWN ? %ANLRMS-I-DOWNHELP, The following is a list of paths down from this structure: %ANLRMS-I-DOWNPATH, AREAS Area descriptors %ANLRMS-I-DOWNPATH, KEYS Key descriptors

This command displays the branches available to you from the current location in the file structure. In this case, you can specify the AREAS branch or the KEYS branch.

ANALYZE> DOWN AREAS AREA DESCRIPTOR #0 (VBN 3, offset %X'0000') Bucket Size: 1 Reclaimed Bucket VBN: 0 Current Extent Start: 1, Blocks: 9, Used: 4, Next: 5 Default Extend Quantity: 2 Total Allocation: 9This command displays information about the descriptor structure for the first area in the file.

DUMP

DUMP — Displays a hexadecimal dump of the specified virtual block.

Format

DUMP n

Parameter

n

Specifies the virtual block number from which you want a dump. The number can be decimal or hexadecimal. The format for a hexadecimal number is %X n.

Example

ANALYZE> DUMP 10

DUMP OF VIRTUAL BLOCK 10:

7 6 5 4 3 2 1 0 01234567

------------------------ --------

73 20 73 27 65 6C 69 66| 0000 |file's s|

65 72 75 74 63 75 72 74| 0008 |tructure|

20 75 6F 59 00 43 00 2E| 0010 |..C.You |

20 65 73 75 20 6E 61 63| 0018 |can use |

66 20 4C 44 46 20 6E 61| 0020 |an FDL f|

64 6F 72 70 20 65 6C 69| 0028 |ile prod|

20 79 62 20 64 65 63 75| 0030 |uced by |

2F 45 5A 59 4C 41 4E 41| 0038 |ANALYZE/|

45 4C 49 46 5F 53 4D 52| 0040 |RMS_FILE|

74 6F 20 68 74 69 77 20| 0048 | with ot|

20 53 4D 52 20 72 65 68| 0050 |her RMS |

65 69 74 69 6C 69 74 75| 0058 |utilitie|

20 20 20 20 20 20 00 73| 0060 |s. |This command shows the first part of a dump of virtual block number (VBN) 10. The left column shows the bytes of the block in hexadecimal, read from right to left. The middle column shows the byte offset in hexadecimal from the beginning of the blocks. In the right column, the character equivalents of each byte are displayed. Nonprintable characters are represented by a period (.).

EXIT

EXIT — Ends an interactive session.

Format

EXIT

Example

ANALYZE>EXIT$

This command terminates the interactive session and returns you to the DCL level.

FIRST

FIRST — Displays the first structure on the current level.

Format

FORMAT

Example

ANALYZE>FIRST

If you are examining the primary and alternate key descriptors, this command displays the first key descriptor.

HELP

HELP — Displays help information about the interactive commands.

Format

HELP [keyword...]

Parameter

keyword

Specifies the interactive command you want help with.

Examples

ANALYZE> HELP Information available: AGAIN BACK DOWN DUMP EXIT File_Structure FIRST HELP New_features NEXT POSITION Radix REST TOP UP

This command shows the available help topics.

Topic? AGAIN AGAIN This command displays the current structure one more time. Topic?

This command displays information about the AGAIN command.

NEXT

NEXT — Displays the next structure at the current level, if one exists. Because NEXT is the default command, pressing the Return key is equivalent to executing a NEXT command.

Format

NEXT [n]

Parameter

n

Specifies the number of times the structure pointer moves forward.

Description

You can use the optional parameter n instead of entering multiple

NEXT commands. For example, the command NEXT 6 has the same effect as six NEXT commands

(or pressing the Return key six times).

Examples

ANALYZE>NEXTThis command displays the next structure at the current level. For example, if you are viewing key descriptors, this command displays the next key descriptor.

ANALYZE>NEXT 3This command moves the location pointer forward three times. For example, if you are viewing the first structure at the current level, this command displays the fourth structure.

POSITION/BUCKET

POSITION/BUCKET — Directly positions the structure pointer to a specific bucket of an indexed file or a relative file.

Format

POSITION/BUCKET bucket_vbn [/INDEX=n]

Parameter

bucket_vbn

The virtual block number (VBN) of the selected bucket. If the bucket includes more than one block, specify the VBN of the first block.

Qualifier

- /INDEX=n

Specifies the relative key for the bucket of an indexed file. The /INDEX qualifier is necessary only when the index number information is unavailable in the bucket header. For example, you use this qualifier to analyze a Prolog 1 or Prolog 2 file (no bucket header) or a Prolog 3 file with a corrupted bucket header. You can also use this qualifier to override the index number in a Prolog 3 file bucket header.

The number you use specifies the key. For example, /INDEX=0 specifies that the bucket is a primary index or primary data bucket, and /INDEX=1 specifies that the bucket is found in the first alternate index structure.

Description

The POSITION/BUCKET command lets you position the structure pointer to a specific bucket of your file. You can use this command to bypass step-by-step positioning. You can also use it to position the structure pointer at a bucket that is inaccessible because of structural errors in the file.

When the structure pointer is positioned at the beginning of the bucket, you can step forward or down through the index structure using the NEXT or DOWN command. If you enter an UP command when the structure pointer is positioned at the beginning of the bucket, the Analyze/RMS_File utility positions the pointer to the bucket's key descriptor. If you enter a BACK command when the structure pointer is positioned at the beginning of the bucket, the Analyze/RMS_File utility displays an appropriate error message and the pointer remains stationary.

Using the POSITION/BUCKET command allows you to specify a particular bucket header from which key descriptor information and valid path information are derived. The Analyze/RMS_File utility does not verify that the specified VBN is at the beginning block of a bucket. If the Analyze/RMS_File utility displays a series of error messages when you enter the POSITION/BUCKET command, it may be that the structure pointer is not positioned at the beginning of the bucket, or it may be that you specified an incorrect index number with the /INDEX qualifier.

Example

ANALYZE> POSITION/BUCKET 4

BUCKET HEADER (VBN 4)

Check Character: %X'93'

Key of Reference: 0

VBN Sample: 4

Free Space Offset: %X'0055'

Free Record ID: 24

Next Bucket VBN: 36

Level: 0

Bucket Header Flags:

(0) BKT$V_LASTBKT 0This command displays the information for the bucket that begins at VBN4. Because this is a Prolog 3 file, you do not have to specify the key using the /INDEX= n qualifier. In a Prolog 3 file, the key information is available in the bucket header (Key of Reference: 0).

POSITION/RECORD

POSITION/RECORD — Positions the pointer at a specific record in an indexed or relative file.

Format

POSITION/RECORD record-offset

Parameter

record-offset

The offset (in bytes) from the beginning of the bucket to the desired record. By default, the offset is a decimal number. If you want to use hexadecimal notation to specify the offset, use the format %Xn.

Description

Use this command to display a specific record in the bucket. When the structure pointer is positioned at the desired record, you can move it down and forward to display the various records in the bucket; you cannot display previous records.

The POSITION/RECORD command is valid only when you are positioned at a bucket header. The command positions the structure pointer at the specified byte offset. If the pointer is not positioned at the beginning of a valid record, a series of error messages is generated.

Example

ANALYZE> POSITION/RECORD %XE

PRIMARY DATA RECORD (VBN 4, offset %X'000E')

Record Control Flags:

(2) IRC$V_DELETED 0

(3) IRC$V_RRV 0

(4) IRC$V_NOPTRSZ 0

(5) IRC$V_RU_DELETE 0

(6) IRC$V_RU_UPDATE 0

Record ID: 11

RRV ID: 11, 4-Byte Bucket Pointer: 4

Key:

7 6 5 4 3 2 1 0 01234567

------------------------ --------

00 00 00 00 00 00 00 02| 0000 |........|This command positions the pointer at byte offset %XE, which is the location of the beginning of a record. This command is valid because the pointer was positioned at a bucket header before the POSITION/RECORD %XE command was entered.

REST

REST — Sequentially displays structures at the current level.

Format

REST

Example

ANALYZE>REST

This command displays each structure at the current level. For example, if you are viewing the primary and alternate key descriptors, the REST command displays each key descriptor sequentially.

TOP

TOP — Displays the FILE HEADER level.

Format

TOP

Example

ANALYZE>TOPFILE HEADERFile Spec: DISK$:[JONES.PROGRAM]INVENTORY.DAT;6File ID: (6367,16,1)Owner UIC: [DOC,DOE]Protection: System: RWE, Owner: RWED, Group: R, World:Creation Date: 13-NOV-1993 09:10:29.83Revision Date: 16-DEC-1993 14:10:37:16, Number: 4Expiration Date: none specifiedBackup Date: none postedContiguity Options: nonePerformance Options: noneReliability Options: noneJournaling Enabled: none

This command displays the file header information for the file INVENTORY.DAT.

UP

UP — Displays the data structures at the next higher level.

Format

UP

Example

ANALYZE>UP

This command positions the pointer at the next higher level of the file's structure. For example, if you are currently examining the RMS FILE ATTRIBUTES level, entering the UP command positions you at the FILE HEADER level and displays that level.

ANALYZE/RMS_FILE Examples

ANALYZE/RMS_FILE Examples — See the examples below to get a better understanding of how to use the ANALYZE/RMS_FILE utility.

Examples

$ANALYZE/RMS_FILE/INTERACTIVE/OUTPUT=INVENTORY INVENTORY.DATThis command begins an interactive session during which you can examine the structure of the data file INVENTORY.DAT. A transcript of the session is placed in the output file INVENTORY.ANL.

$ANALYZE/RMS_FILE/NOOUTPUT *.*;*This command verifies the structural integrity of all files in the current default directory.

$ANALYZE/RMS_FILE/FDL PARTS.DATThis command produces the FDL file PARTS.FDL from the data file PARTS.DAT. Assuming that PARTS.DAT is an indexed file, the new FDL file contains two special sections that FDL files created with the Edit/FDL utility do not have: ANALYSIS_OF_AREA and ANALYSIS_OF_KEY. You can use these sections with the EDIT/FDL Optimize script to tune your original data file, PARTS.DAT. To complete the tuning cycle, enter the following DCL commands:

$EDIT/FDL/ANALYSIS=PARTS/SCRIPT=OPTIMIZE PARTS$CONVERT/FDL=PARTS PARTS.DAT *$ANALYZE/RMS_FILE DENVER::DB1:[PROD]RUN.DATThis command analyzes the structure of the file RUN.DAT residing at remote node DENVER.

$ANALYZE/RMS_FILE/FDL/OUTPUT=TEST.FDL$_File(s):DENVER::DB1:[PROD]RUN.DATThis command analyzes the structure of the file RUN.DAT at remote node DENVER and generates the FDL file TEST.FDL at the local node.

Chapter 2. Convert Utility

The Convert utility (CONVERT) can be used to reorganize files by copying records from one or more source files to an output file, while converting the records to be compatible with the output file's organization and record format.

You can also use the Convert utility to improve the efficiency of indexed files that have had many record deletions and insertions by reformatting the file. In this case, the input file and the output file use the same file name and have the same organization, but the output file's version number is one greater than the highest previous version of the input file. When it reorganizes an indexed file, the utility establishes new record file addresses (RFAs) and may reorder duplicate records along secondary keys.

You can use callable routines to perform the functions of the Convert utility from within a program. For more information, refer to the Convert utility routines in the VSI OpenVMS Utility Routines Manual.

Support a Files-11 volume structure, On-Disk Structure Level 5 (ODS-5), that provides a volume structure for creating and storing files with expanded file names

Support additional character sets for naming files from file names that use the 8-bit ISO Latin-1 character set to the 16-bit Unicode (UCS-2) character set

Support extended file names with file specifications exceeding the traditional 39.39 character limit up to a maximum of 255 characters

Support preserving the case of file specifications created with the ODS-5 attributes

Support deep, multilevel directory structures up to a maximum of 512 characters

For more information, see the VSI OpenVMS Guide to Extended File Specifications.

This chapter tells you how to use the Convert utility. Section 2.1, ''Output Files'' explains how to produce converted output files with the utility. Section 2.2, ''Converting Carriage Control Formats'' describes converting between carriage control formats. Section 2.3, ''Using the Convert Utility with DECnet for OpenVMS Operations'' discusses using the Convert utility with DECnet for OpenVMS operations. Section 2.4, ''Exception Conditions'' explains how to handle the Convert utility exception conditions.

2.1. Output Files

You can reorganize the input file to be organized like an existing output file.

You can reorganize the input file in a new file. If you want the new file to be organized differently from the input file, use a File Definition Language (FDL) file to specify the characteristics for the new file. If you want the new file to be organized the same as the input file, do not use an FDL file to create the new file.

Note

If you specify an input FDL file that uses a collating sequence from the local system's National character set (NCS) library, be sure that the collating sequence does not have the same name as the collating sequence from the input index file. If the two collating sequences have the same name, the output file might be sorted improperly.

Typically, this might happen when the input indexed file is created on one system and is later transported to another system that already has a collating sequence with the same name. Both NCS and RMS use collating sequences that are identified by a character string that is processed as part of the named collating sequence. You can avoid this problem by renaming collating sequences that have conflicting names.

For more information about NCS, see the OpenVMS National Character Set Utility Manual.

You can use an existing output file that has records or an output file that has no records. If the output file is sequential, specifying the /APPEND qualifier causes the converted records from the input file to be added sequentially to the end of the output file. Note that the /APPEND qualifier is ignored if the output file is formatted for direct access; that is, either a relative file or an indexed file. If the output file is indexed and contains records, you can use the /MERGE qualifier to insert the new records in their proper order.

Sorting the records from an input file can be costly in terms of processing time and disk space. If the records in the output file are to be ordered in the same manner as the input file records, use the /NOSORT qualifier to save processing time and space. For more information about sorting indexed files, see both the /FAST_LOAD and the /SORT qualifiers in the CONVERT Qualifiers section.

RMS appends records with duplicate key values to the end of a list of duplicate keys so that the records are retrieved in chronological order. However, the Convert utility does not preserve chronological order for secondary keys. Instead, records having duplicate secondary keys are reordered and retrieved by the collating value of the primary key. For example, assume that you have a file of names that uses the primary key (Key0) FIRST_NAME and a secondary key (Key1) LAST_NAME. Assume, too, that the application program inserts a group of records in the following order:

Key0 Key1 . . John Jones Martin Smith David Jones Joseph Brown Gary Adams Adam Jones . .

When an application program accesses the records sequentially by the secondary key (Key1), the records are retrieved in the following order:

Key0 Key1 . . Gary Adams Joseph Brown John Jones David Jones Adam Jones Martin Smith . .

When an application program accesses the records sequentially by the secondary key (Key1) after the file is converted, the records are retrieved in the following order:

Key0 Key1 . . Gary Adams Joseph Brown Adam Jones David Jones John Jones Martin Smith . .

Note the revised order of retrieval for the people named Jones.

2.2. Converting Carriage Control Formats

A file can have one of four carriage control formats:

CARRIAGE_RETURN

FORTRAN

PRINT

NONE

These formats are all represented differently, so when you are converting a file from one carriage control format to another, the carriage control information has to be translated.

Translation is especially important when you are converting to or from a file with the FORTRAN format. Records with the FORTRAN format contain one byte of carriage control information at the beginning of each record.

For most conversions, the FORTRAN carriage control information is preserved as the first data byte of the record, and the printing characteristics are lost. However, certain conversions can preserve the printing characteristics of the FORTRAN carriage control information. When FORTRAN carriage control is converted to the equivalent PRINT carriage control, the information preceding each FORTRAN record is changed but not lost.

When PRINT carriage control is converted to FORTRAN carriage control, certain characters that supply carriage control information to the printer cannot be translated exactly. These untranslatable characters are represented as a single-spaced FORTRAN record.

When FORTRAN carriage control is converted to STREAM, control characters affecting carriage

returns (<CR>), line feeds (LF), and form feeds (

FF) are prefixed and appended to each FORTRAN record. These characters may

affect the STREAM output because they are considered record delimiters for stream files. As a

result, you may have a different number of records in the STREAM output file, and some of the

records may be null.

|

STREAM Format Equivalent | ||

|---|---|---|

|

FORTRAN Format |

Characters Prefixed |

Characters Appended |

|

1 |

FF |

|

|

0 |

LF LF |

|

|

space |

LF |

|

|

$ |

LF |

Nothing appended |

|

+ |

Nothing prefixed |

|

|

null |

Nothing prefixed |

Nothing appended |

All other conversions from FORTRAN preserve the carriage control information as data. All other conversions to FORTRAN prefix the converted records with the ASCII space character to obtain single spacing.

For more information about carriage control, see the description of the File Definition Language (FDL) in Chapter 4, "File Definition Language Facility".

2.3. Using the Convert Utility with DECnet for OpenVMS Operations

You can use the CONVERT command to transfer files to and from a remote node, either with or without modifying file attributes. If the output file exists, the Convert utility changes the organization and format of the input data file to that of the output file. If the output file does not exist, the utility creates it from the file attributes specified in an FDL file.

You can also use the Convert utility to copy files to or from a remote node without modifying file attributes. The Convert utility transfers the file record by record, just as it does on a single node. However, you must have NETMBX privilege to execute CONVERT commands over a network.

2.4. Exception Conditions

Certain conversions cause exception conditions. An exception condition occurs when a record from the input file cannot be placed in the output file because of some format incompatibility. The Convert utility sends a warning error message to SYS$ERROR upon encountering a record that causes an exception condition.

For example, an exception condition occurs when the length of the input records exceeds the length you specified for fixed-length output records. You can avoid this exception condition by specifying the /TRUNCATE qualifier. Converting short fixed-length records into longer fixed-length records also causes an exception. To avoid this exception condition, use the /PAD qualifier to fill in the output records. The /PAD qualifier allows you to specify your choice of pad character.

To keep a copy of the exception records, create an exceptions file with the /EXCEPTIONS_FILE qualifier. The exceptions file is a sequential file with variable-length records; it receives a copy of any record that cannot be placed in the output data file. Exceptions files have the file type .EXC, by default.

2.5. Using the CONVERT Utility

CONVERT Usage Summary

CONVERT Usage Summary — The Convert utility (CONVERT) copies records from one or more files to an output file, changing the record format and file organization to those of the output file.

Format

CONVERT input-filespec[,...] output-filespec

input-filespec [,...]

Specifies the file or files to be converted. You may specify multiple input files but wildcard characters are not allowed. Multiple input files are concatenated to form a single output file.

If your process open-file limit is reached, or if RMS runs out of dynamic memory, then the file conversion prematurely terminates with an appropriate message.

output-filespec

Specifies the output file for the converted records. If you omit the file type, the Convert utility assigns the output file the file type of the first input file. No wildcard characters are allowed.

Usage Summary

Invoke the Convert utility by entering the CONVERT command at the DCL level.

Exit the Convert utility by letting the utility run to successful completion.

Output from the Convert utility is directed to the file you indicate with the output-filespec parameter. For more information, see Section 2.1, ''Output Files''.

If you want to execute CONVERT commands over a network, you need NETMBX privilege.

2.6. CONVERT Qualifiers

This section describes the CONVERT command qualifiers used to select the organization and format of the output file.

/APPEND

/APPEND — Controls whether converted records from an input file are appended to an existing sequential file.

Format

/APPEND

/NOAPPEND (DEFAULT)

Description

The /APPEND qualifier is useful when you want to convert an existing file to the format of an existing output file and append the converted records to the existing output file.

If you specify the /APPEND qualifier and the /CREATE qualifier, /APPEND overrides the /CREATE.

You should use this option when you are loading records into a sequential file that already contains records, or when you are creating a new sequential file. When the output file is a direct access file (relative or indexed), the /APPEND qualifier is ignored.

Example

$CONVERT/APPEND N_Z_FILE.DAT A_M_FILE.DAT

This command causes the sequential input file N_Z_FILE.DAT to be attached to the end of the sequential file A_M_FILE.DAT.

/CREATE

/CREATE — Determines whether the Convert utility creates a file or uses an existing file for output.

Format

/CREATE (DEFAULT)

/NOCREATE

Description

The /CREATE qualifier causes the Convert utility to create an output file instead of using an existing file for output.

If the output file is to have different characteristics from the input file, you must also specify the /FDL qualifier. To create an output file with the same characteristics as the input file, omit the /FDL qualifier.

If you specify the /NOCREATE qualifier, the Convert utility uses an existing file for output. You would use this option, for instance, to load records into a data file that you created previously with the Create/FDL utility.

Examples

$CONVERT/CREATE OLDFILE.DAT NEWFILE.DATThis command creates the new output file NEWFILE.DAT and loads it with the records from OLDFILE.DAT.

$CONVERT/CREATE/FDL=UPDATE.FDL OLDFILE.DAT NEWFILE.DATThis command creates the new output file NEWFILE.DAT and loads it with the OLDFILE.DAT records that have been reformatted according to the characteristics in the FDL file UPDATE.

/EXCEPTIONS_FILE

/EXCEPTIONS_FILE — Specifies whether an exceptions file (file type .EXC) is to be generated during the conversion.

Format

/EXCEPTIONS_FILE [=filespec]

/NOEXCEPTIONS_FILE (DEFAULT)

Qualifier Value

filespec

Specifies the file in which the exception records are returned. If you specify /EXCEPTIONS_FILE but omit the filespec parameter, the exception records are displayed on the SYS$OUTPUT device.

Example

$CONVERT/EXCEPTIONS_FILE=EXFILE.EXC/FDL=NEWFILE.FDL OLDFILE.DAT NEWFILE.DAT

This command loads the records from OLDFILE.DAT into NEWFILE.DAT and writes any records that cause exceptions into the file EXFILE.EXC.

/EXIT

/EXIT — Controls whether the Convert utility exits when it encounters an exception record. By default, the Convert utility continues processing records when it encounters an exception record.

Format

/EXIT

/NOEXIT (DEFAULT)

Example

$ CONVERT/FDL=NEWFILE.FDL/EXIT OLDFILE.DAT NEWFILE

This command loads the records from OLDFILE.DAT into NEWFILE.DAT and causes the Convert utility to exit if an exception record is processed. Because no output file type is specified, the Convert utility assigns the output file the same file type as the input file.

/FAST_LOAD

/FAST_LOAD — Specifies whether the Convert utility uses a fast-loading algorithm for indexed files.

Format

/FAST_LOAD (DEFAULT)

/NOFAST_LOAD

Description

Note

By default, the Convert utility uses the fast-loading algorithm, but if CONVERT/FAST_LOAD is executed across a network, the Convert utility automatically changes from /FAST_LOAD to /NOFAST_LOAD.

The /FAST_LOAD qualifier and the /NOFAST_LOAD qualifier both sort primary keys, and both qualifiers require multiple scratch disk files.

Essentially, the difference between the /NOFAST_LOAD option and the /FAST_LOAD option is the way records are inserted into an indexed file. The /NOFAST_LOAD qualifier uses the normal RMS Put service to load each record; RMS updates the indexes of both the primary and secondary (alternate) keys as each record is inserted.

The main disadvantage of using the /NOFAST_LOAD option is the slower system performance that results from bucket splits and updates to the index. As each primary key is inserted, any secondary keys for that record are inserted in the order of the primary key. In other words, the secondary keys are not inserted in order of their own keys. These unsorted secondary keys may eventually cause bucket splits; as a result, the index structure for the secondary keys may be less efficient.

The advantage of the /NOFAST_LOAD option is that the Convert utility does not attempt to sort secondary keys. Conversely, if you specify the /FAST_LOAD option, the Convert utility sorts the primary and the secondary keys.

- The primary keys are sorted. If the input file is on magnetic tape or if you specify multiple input files, the sort work file contains the sorted records. If the input file is on a disk, however, the sort work file contains only pointers to the sorted records.

Note

If your input records are already ordered by the primary key or if the primary key of the input and output files is the same, you should specify /NOSORT. This qualifier ensures that the primary keys are not sorted again. For more information about sorting, see the description of the /SORT qualifier.

The Convert utility builds the primary data record level from the sorted output file. The utility completely fills a bucket with data before it creates the lowest primary index level (the level 1 index). When an index bucket is filled, the Convert utility creates an index record in the next highest index level. If there is at least one secondary key, the Convert utility sends the first secondary key's value along with a pointer to the new output record to the Sort utility using the record interface. If there are multiple secondary keys, Convert sends as many of those keys up to the value specified by the /SECONDARY qualifier to a temporary file along with a pointer to the new output record for later sorting. This is performed in parallel while the primary key's data structures are being loaded.

When the Convert utility is finished with the primary key, it updates the associated KEY DESCRIPTOR in the file's prolog, closes any input files, deletes any temporary files and closes any input files. At this point, the utility has created a valid output file with records ordered by the primary key. If you specified no alternate keys, the Convert utility terminates.

The Sort utility is then called to sort the first alternate key (if one has been specified). These records were passed to sort during the load of the primary key.

The Convert utility loads these sorted pointers into the secondary index data record (SIDR) level and adjusts them to point to the records in the primary data level. Again, the utility completely fills a bucket with data before it creates the lowest secondary index level. When an index bucket is filled, the Convert utility creates an index record in the next highest secondary index level.

When the Convert utility is finished with this secondary key, it updates the associated KEY DESCRIPTOR in the file's prolog. At this point, the Convert utility has created a valid output file, containing sorted primary keys and secondary keys. If you specified no alternate keys, the Convert utility terminates.

If there are additional secondary keys, the Convert utility calls the Sort utility to sort the temporary file on the next secondary key value. These records are then loaded to the output file as in steps 5 and 6 until all secondary keys have been processed.

The primary advantage of using the /FAST_LOAD option is that it is considerably faster than the RMS method used by the /NOFAST_LOAD option. In most cases, you can increase processing speed by a factor of 10. Even greater speed results when you load large files with many keys.

In addition, the index structure can be very efficient because each key is sorted before it is loaded. The only disadvantage is the large amount of disk space needed for the work files. However, you can control the amount of disk space by using the /WORK_FILES qualifier and by reassigning the work files to different devices. See the /WORK_FILES qualifier for more information.

Examples

$CONVERT/FAST_LOAD UPDATE.DAT MASTER.DATThis command loads the records from the file UPDATE.DAT into the output file MASTER.DAT using the /FAST_LOAD option. The Convert utility attains the added speed by building the indexes directly and then using RMS for block I/O only.

$CONVERT/NOFAST_LOAD UPDATE.DAT MASTER.DATThis command loads the records from the file UPDATE.DAT into the output file MASTER.DAT. In this case, the operation takes longer because the Convert utility uses RMS Put services to output each individual record.

Exit the convert utility by letting the utility run to successful completion.

Output from the Convert utility is directed to the file you indicate with the

output-filespec parameter. For more information, see Section 2.1, ''Output Files''.

If you want to execute Convert commands over a network, you need NETMBX privilege.

/FDL

/FDL — Indicates that an FDL file is to be used in creating the output file.

Format

/FDL=fdl-filespec

Qualifier Value

fdl-filespec

Specifies the FDL file to be used in creating the output file.

Description

The default file type for the FDL file is .FDL.

Example

$CONVERT/FDL=INDEXFILE CUSTSEQ.DAT CUSTIND.DAT

This command creates the new file CUSTIND.DAT according to the specifications in the FDL file INDEXFILE.FDL. Records are then loaded from CUSTSEQ.DAT into CUSTIND.DAT.

/FILL_BUCKETS

/FILL_BUCKETS — Controls whether to override the bucket fill percentage parameter associated with the output file.

Format

/FILL_BUCKETS

/NOFILL_BUCKETS (DEFAULT)

Description

If you specify /FILL_BUCKETS, the Convert utility fills the output file buckets with as many records as possible. This behavior is advantageous if you do not plan to do random file processing, because using fewer buckets saves disk space and processing time.

With /NOFILL_BUCKETS, however, the Convert utility does not fill the buckets completely. Therefore, you can add records at a later date without splitting buckets or extending the file.

This option is valid only for indexed output files.

Example

$CONVERT/FILL_BUCKETS SALES_DATA.DAT CUST_DATA.DAT

This command loads the records from the indexed file SALES_DATA.DAT into the indexed file CUST_DATA.DAT, filling the buckets of the output file with as many records as possible.

/FIXED_CONTROL

/FIXED_CONTROL — Controls file conversions between files having variable-length with fixed-length control field (VFC) records and files having other record formats.

Format

/FIXED_CONTROL

/NOFIXED_CONTROL (DEFAULT)

Description

This qualifier applies only to conversions where either the input or the output file, but not both, uses VFC records. This option is applicable only to sequential files.

When you use this qualifier, you must account for the size of the fixed-control area when you calculate the maximum size of the output record.

If you specify /FIXED_CONTROL and the input file uses VFC records but the output file does not, the fixed-length control field from the input record is inserted into the output record as data.

If you specify /FIXED_CONTROL and the output file has VFC records but the input file does not, the leading part of the input record is used to fill the fixed-length control part of the output record.

If you specify /NOFIXED_CONTROL and the input file uses VFC records but the output file does not, the fixed-length control field from the input record is not included as data in the output record.

If you specify /NOFIXED_CONTROL and the output file has VFC records but the input file does not, the control field attached to the output record is set to null.

Example

$CONVERT/FIXED_CONTROL VFC_FILE.DAT OUTFILE.DAT

This command loads the VFC records in the input file VFC_FILE.DAT into the output file OUTFILE.DAT.

/KEY

/KEY — Directs the Convert utility to read records from an indexed file using a specified key of reference, such as the primary key, the first alternate key, or the second alternate key.

Format

/KEY=n

Qualifier Value

n

A numeric value that specifies the key of reference that the Convert utility uses for reading records from the input indexed file. For example, you can specify the primary key as the key of reference by using the value 0 (/KEY=0), which is the default, or you can specify the first alternate key as the key of reference by using the value 1 (/KEY=1).

Description

The /KEY qualifier is valid for indexed input files only. If you use the /KEY qualifier, you must specify a key value (/KEY=0, /KEY=1, and so on). If you do not specify the /KEY qualifier, the default is the primary key (/KEY=0).

Example

$CONVERT/NOCREATE/KEY=1 CUST_INX.DAT CUST_SEQ.DAT

This command loads the records from the indexed input file CUST_INX.DAT into the sequential output file CUST_SEQ.DAT. The records in the output file are ordered by the first alternate key in the input file.

/MERGE

/MERGE — Specifies that records are to be inserted into their proper position in an existing indexed file.

Format

/MERGE

/NOMERGE (DEFAULT)

Description

The /MERGE qualifier is useful when your input records are not sorted and you do not want them to be sorted as they are loaded into an output file.

If you specify both /MERGE and /CREATE, /MERGE overrides the /CREATE qualifier.

Example

$CONVERT/MERGE ACCOUNTS.DAT MASTER_INX.DAT

This command loads the records from the input file ACCOUNTS.DAT into the existing indexed output file MASTER_INX.DAT according to primary key values.

/PAD

/PAD — Determines whether short records are to be padded.

Format

/PAD [=[%b]x]

/NOPAD (DEFAULT)

Qualifier Value

x

Specifies that the short records are to be padded with either ASCII characters (A through Z, a through z, or 0 through 9) or numeric values.

To specify x as a numeric value, you must specify the numeric base

using the percent symbol (%) followed by one of the following characters:

|

D |

Indicates that |

|

O |

Indicates that |

|

X |

Indicates that |

The numeric value can be any number from 0 to 255.

Description

The /PAD option is valid only for fixed-output record formats and is used to pad short records with ASCII characters or numeric values. A record is too short when it contains fewer bytes than the number of bytes specified for fixed-length records.

If you specify /PAD without a qualifier value, the default pad character is the ASCII null character (binary value 0).

Example

$CONVERT/NOCREATE/PAD=%X20 INFILE.DAT OUTFILEThis command specifies that any short records in the input file INFILE.DAT are to be padded with an ASCII space character before being loaded into the fixed-length output file OUTFILE.DAT.

$CONVERT/FDL=FIXED/PAD=X INFILE.VAR OUTFILE.FIXThis command creates the fixed format file OUTFILE.FIX and then loads it with records from the variable input file INFILE.VAR. Any short records from the input file are padded with ASCII X characters before they are loaded into the output file.

/PROLOG

/PROLOG — Specifies the prolog version number of the output indexed file.

Format

/PROLOG=n

Qualifier Value

Specifies the prolog number 1, 2, or 3.

If you specify 2 for n, the output file will be either a Prolog 1 or a

Prolog 2 file.

If you specify 3, the Convert utility creates a Prolog 3 file for output. Prolog 3 files accept multiple keys (or alternate keys), all data types, and segmented keys. The only restriction to using a Prolog 3 file applies to files containing overlapping key segments for the primary key. In this case, you would have to use a Prolog 2 file.

Description

If you do not specify the /PROLOG qualifier, the Convert utility uses the prolog version of the first input file. If the input file is not indexed, the utility uses the RMS default. To see what this default is on your system, enter the DCL command SHOW RMS_DEFAULT.

The /PROLOG qualifier overrides the value given with the FDL attribute KEY PROLOG.

Example

$CONVERT/PROLOG=3 INFILE_2 OUTFILE_3

This command loads the records from the Prolog 2 input file INFILE_2 into the Prolog 3 output file OUTFILE_3. Both the input and output file are indexed files.

/READ_CHECK

/READ_CHECK — Specifies whether each input record is to be read from the file a second time and compared to the record originally read.

Format

/READ_CHECK

/NOREAD_CHECK (DEFAULT)

Example

$CONVERT/READ_CHECK Q3_SALES.DAT YTD_SALES.DAT

This command specifies that the records from the input file Q3_SALES.DAT are to be read and checked by the file processor, and then loaded into the output file YTD_SALES.DAT.

/SHARE

/SHARE — Specifies whether the input file is to be opened for sharing with other processes during the conversion.

Format

/SHARE

/NOSHARE (DEFAULT)

Description

You can use the /SHARE option to generate a rough backup of a file that is always opened for sharing by some applications. However, another process can alter the records during the Convert utility operations. As a result, the consistency of the output file cannot be guaranteed.

Example

$CONVERT/SHARE SYSUAF.DAT BACKUP.DAT

This command indicates that the input file SYSUAF.DAT is open for sharing with other processes at the same time its records are being loaded into the output file BACKUP.DAT.

/SECONDARY

/SECONDARY — Increases the Convert utility's performance by reducing the number of required passes through the input data. This is accomplished by placing alternate key information into the CONVWORK file.

Format

/SECONDARY=n

Qualifier Value

Specifies the number of alternate keys that will be loaded to the CONVWORK file with each pass through the input data.

The default number of alternate keys written to the CONVWORK file is 1.

Description

This qualifier is valid when you are fast-loading a file with more than one alternate key. While the primary key is being loaded, the first alternate key of the file is passed to the Sort utility using the record interface. Additionally, a number of /SECONDARY number of alternate keys are extracted and placed into the CONVWORK file for subsequent Sort and Load operations.

Example

$CONVERT/SECONDARY=2 Q3_SALES.DAT YTD_SALES.DAT

This command causes the Convert utility to load the records from the input file Q3_SALES.DAT into the output file YTD_SALES.DAT. The 2nd and 3rd alternate keys are placed into the CONVWORK file while loading the primary key, reducing the need to read through the input data to process them.

/SORT

/SORT — Specifies whether the input file is to be sorted before being loaded into an indexed file. The sort is done according to the primary key of the output file.

Format

/SORT (DEFAULT)

/NOSORT

Description

Two procedures can improve the sort performance:

Increasing the size of the working set for the duration of the sort. The general rule is to use as large a working set as allowed by your working set quota. To set this value, use the DCL command SET WORKING_SET. To see what your authorized quota is, enter the SHOW WORKING_SET command.

Placing the input file, the output file, and the temporary work files on separate disk devices. The default operation is to place the work files on your default device, which could cause the Convert utility to run out of disk space. To specify the location of the work files, enter a command in the following form:

ASSIGN device-name: SORTWORKn

The

nrepresents the number of the work file, from 0 to 9. The colon is required after the device name. For example, the following two ASSIGN commands would place the work files on disks named TMPD and DEVD:$ ASSIGN TMPD: SORTWORK0 $ ASSIGN DEVD: SORTWORK1

Using more than two work files is not particularly advantageous unless you have to use many smaller ones in order to fit on crowded disks. You can control the number of work files with the /WORK_FILES qualifier.

For more information about using the Sort utility with the Convert utility, see the /FAST_LOAD qualifier.

Examples

$CONVERT/SORT IN_INX.DAT OUT_INX.DATThis command causes the records in the input indexed file IN_INX.DAT to be sorted according to the primary key values before being loaded into the output indexed file OUT_INX.DAT.

$CONVERT/NOSORT/FDL=REORG INX.DAT INX.DATThis command reorganizes the file INX.DAT according to the attributes specified in the FDL file REORG.FDL. The primary keys are not sorted because INX.DAT is already ordered by the primary key, and the primary key definition did not change.

/STATISTICS

/STATISTICS — Determines whether statistics about the file conversion are to be displayed.

Format

/STATISTICS [=keyword]

/NOSTATISTICS (DEFAULT)

Keywords

BRIEF

Displays a summary of the file conversion at the completion of the operation.

FULL