VSI OpenVMS x86-64 Boot Manager User Guide

- Operating System and Version:

- VSI OpenVMS x86-64 V9.2-2 or higher

Preface

1. About VSI

VMS Software, Inc. (VSI) is an independent software company licensed by Hewlett Packard Enterprise to develop and support the OpenVMS operating system.

2. About the Guide

Booting VSI OpenVMS x86-64 (using the

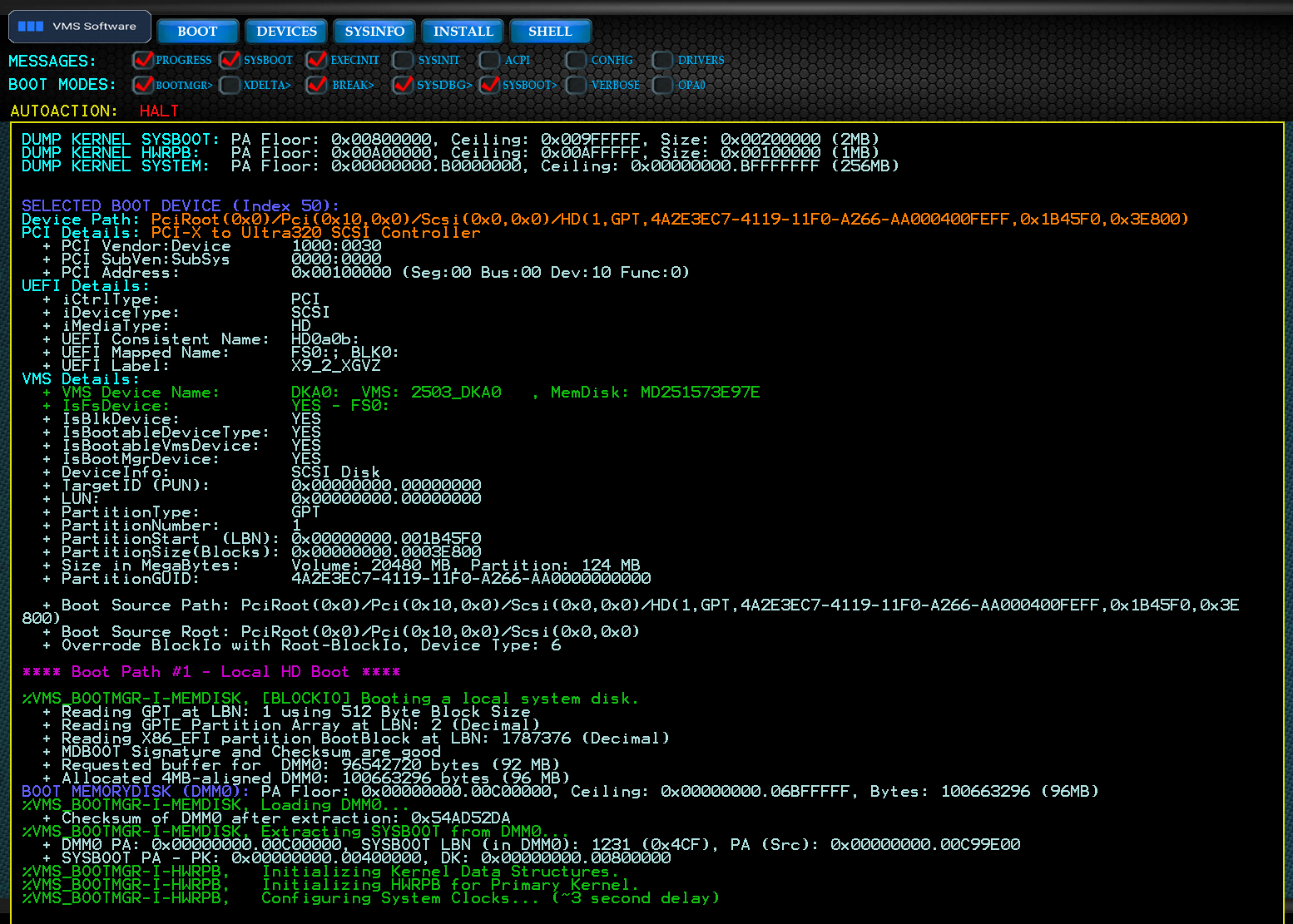

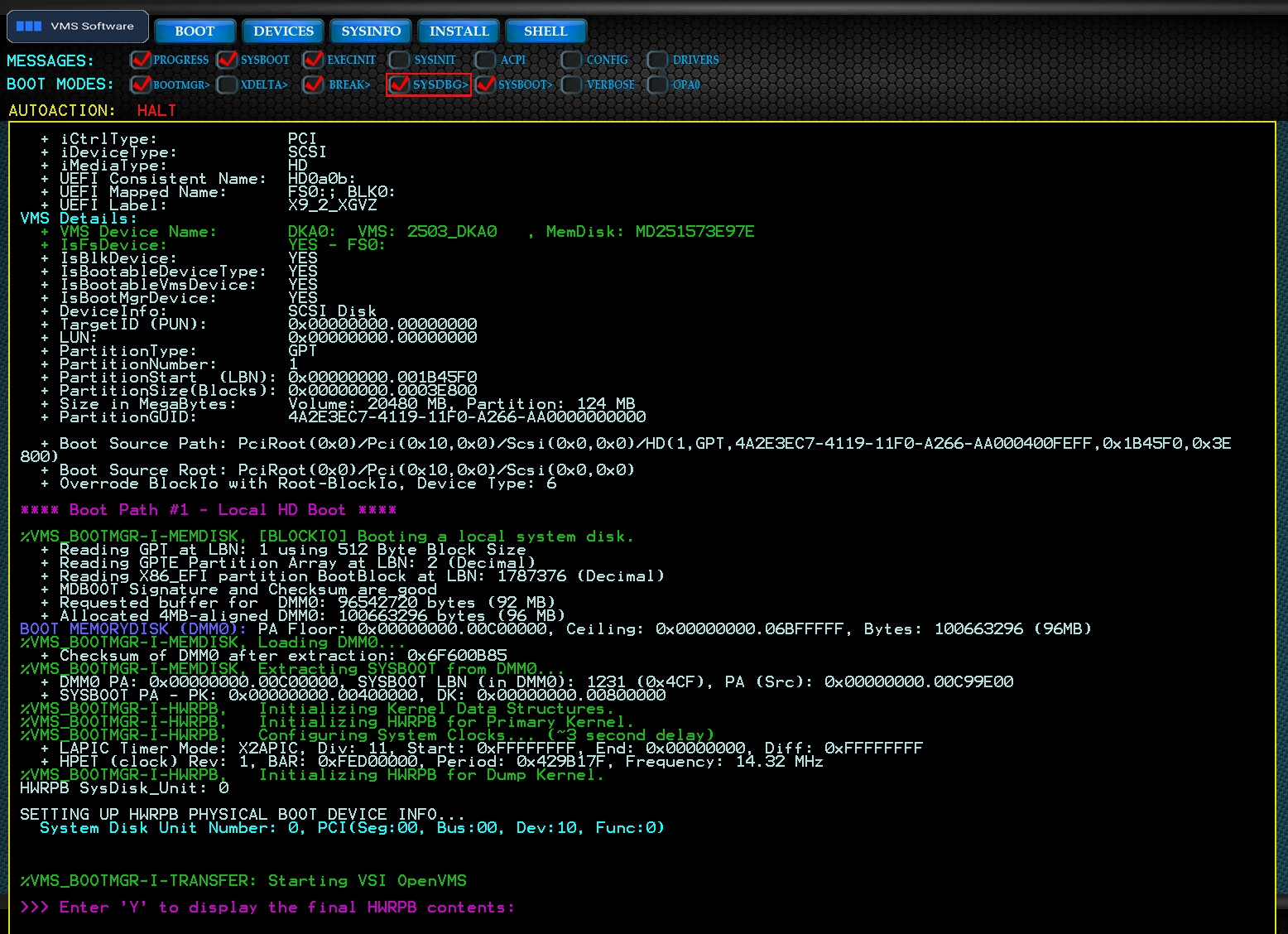

BOOTcommand)The boot process from pre-boot environment through transfer to SYSBOOT

Boot Manager operation and commands

Troubleshooting tips

3. Intended Audience

This guide is intended for system managers of the VSI OpenVMS x86-64 operating system.

4. Document Structure

Chapter 1, "Overview": Provides an overview of the Boot Manager software.

Chapter 2, "The Boot Process": Describes the boot process in detail.

Chapter 3, "The Boot Manager": Gives an overview of the Boot Manager and details the boot modes, messages, and provides a dictionary of commands.

Chapter 4, "Pre-Installation, Installation, and Post-Installation": Details the system preparation before installation and the post-installation procedures.

Chapter 5, "Device Enumeration": Describes specific features of the device enumeration process.

Chapter 6, "Dump Kernel": Describes how to handle system crashes using the dump kernel.

Chapter 7, "Troubleshooting": Provides information about troubleshooting tools to help you with failures or errors that might occur while using the Boot Manager.

5. Related Documents

The Installation Guide for your version of VSI OpenVMS x86-64 (available at docs.vmssoftware.com) describes how to install the VSI OpenVMS operating system.

The VSI OpenVMS Delta/XDelta Debugger Manual describes the use of the Delta/XDelta debugger utility.

The VSI OpenVMS System Manager's Manual, Volume 1: Essentials provides comprehensive information on the conversational boot feature.

6. OpenVMS Documentation

The full VSI OpenVMS documentation set can be found on the VMS Software Documentation webpage at https://docs.vmssoftware.com.

7. VSI Encourages Your Comments

You may send comments or suggestions regarding this manual or any VSI document by sending electronic mail to the following Internet address: <docinfo@vmssoftware.com>. Users who have VSI OpenVMS support contracts through VSI can contact <support@vmssoftware.com> for help with this product.

8. Conventions

| Convention | Meaning |
|---|---|
| Ctrl/x | A sequence such as Ctrl/x indicates that you must hold down the key labeled Ctrl while you press another key or a pointing device button. |
| PF1 x | A sequence such as PF1 x indicates that you must first press and release the key labeled PF1 and then press and release another key or a pointing device button. |
| ... | A horizontal ellipsis in examples indicates one of the following possibilities: additional optional arguments in a statement have been omitted; the preceding item or items can be repeated one or more times; additional parameters, values, or other information can be entered. |
| .<br>.<br>. | A vertical ellipsis indicates the omission of items from a code example or command format; the items are omitted because they are not important to the topic being discussed. |
| ( ) | In command format descriptions, parentheses indicate that you must enclose the options in parentheses if you choose more than one. |
| [ ] | In command format descriptions, brackets indicate optional choices. You can choose one or more items or no items. Do not type the brackets on the command line. However, you must include the brackets in the syntax for VSI OpenVMS directory specifications and for a substring specification in an assignment statement. |
| \| | In command format descriptions, vertical bars separate choices within brackets or braces. Within brackets, the choices are options; within braces, at least one choice is required. Do not type the vertical bars on the command line. |
| Bold type | Bold type represents the name of an argument, an attribute, or a reason. It also represents the introduction of a new term. |
| Italic type | Italic type indicates important information, complete titles of manuals, or variables. Variables include information that varies in system output (Internal error number) and in command lines. |
| UPPERCASE TYPE | Uppercase type indicates the name of a routine, the name of a file, or the abbreviation for a system privilege. |
| Monospace type | Monospace type indicates code examples and interactive screen displays. In the C programming language, monospace type in text identifies the following elements: keywords, the names of independently compiled external functions and files, syntax summaries, and references to variables or identifiers introduced in an example. |
| Bold monospace type | Bold monospace type indicates a command or command qualifier. |
| - | A hyphen at the end of a command format description, command line, or code line indicates that the command or statement continues on the following line. |
| Numbers | All numbers in text are assumed to be decimal unless otherwise noted. Nondecimal radixes (binary, octal, or hexadecimal) are explicitly indicated. |

Chapter 1. Overview

Important

Virtual machine (VM) configuration details and specific hypervisors are not covered in this guide. It is assumed that readers are familiar with the operation of their chosen host environment. Refer to the documentation for your platform/hypervisor for more information on how to set up and configure VMs.

1.1. The Boot Manager Environment

Two forms of a boot manager will exist on every VSI OpenVMS x86-64 operating system. The platform firmware provides a form of a boot manager that is used to set up various parameters and boot options. This typically consists of a series of menus providing access to system-specific chipset, configuration, and boot option parameters. It is aware of the platform features, but not of the operating system that is being booted. In this document, this is referred to as either setup or the boot manager (of your platform firmware).

The second form of a boot manager is the VSI OpenVMS Boot Manager, which is a stand-alone, native UEFI (Unified Extensible Firmware Interface) application. It manages OpenVMS boot options, but knows very little about the underlying platform setup. In this document, this is referred to as the VSI OpenVMS Boot Manager or simply the Boot Manager.

The VSI OpenVMS Boot Manager identifies and enumerates devices, maps memory, builds critical data structures required to boot VSI OpenVMS, creates the stand-by dump kernel, loads the MemoryDisk, and ultimately transfers control to the VSI OpenVMS SYSBOOT executable.

UEFI (as opposed to BIOS) has existed since 2005, so the vast majority of today's systems support UEFI. While most systems provide UEFI services, some do not expose the UEFI shell application (hence, they lack command input capability).

If you are not sure whether this issue affects your system, look at your platform firmware's setup screens and see if you can find any options for enabling UEFI. On some systems, you will need to first disable the Secure Boot feature before UEFI options will be presented. Most hypervisors provide an option for creating UEFI-enabled guests, although some may require installing optional packages to enable UEFI. Refer to your hardware/hypervisor documentation for additional recommendations.

If you determine that your platform does not provide a UEFI shell, the OpenVMS Boot Manager can still be launched from a platform firmware boot option, but it may operate with reduced functionality. Alternatively, you may obtain a suitable UEFI shell application from your hypervisor vendor.

1.2. Secure Boot and TPM

Secure Boot is a security feature used by several operating systems. It essentially requires that each UEFI executable be signed. Secure Boot must be disabled to run VSI OpenVMS. Any system that does not allow Secure Boot to be disabled cannot run VSI OpenVMS.

Trusted Platform Module (TPM) is another security feature. TPM is intended to detect certain types of platform intrusions. It may not be necessary to disable TPM to run VSI OpenVMS, but there is a possibility that this feature, when enabled, could result in interrupt misbehavior while running VSI OpenVMS.

1.3. Supported Installation Methods

Refer to the Installation Guide for your version of VSI OpenVMS x86-64 (available at docs.vmssoftware.com) for specific installation instructions.

VSI supports three methods for installing VSI OpenVMS x86-64. Each option starts with downloading the OpenVMS installation kit, which is a binary ISO image file, from the online VSI Service Platform.

Once you have obtained a copy of the installation kit, you can:

- Attach the kit file as a virtual DVD or an optical disk if installing OpenVMS on a VM.

- Create a physical DVD using DVD burner software.

- Upload the file to your web server and network boot the installation kit.

Your choice of method depends on your own requirements and how many installations you intend to perform.

- Virtual DVD

Using a virtual DVD is a simple method if you have a small number of installations, as you can internally share the virtual DVD file location. As the OpenVMS installation kit is provided as a hybrid image that features a boot section in the ISO 9660 format, it can be used as is.

VM users can directly attach the installation kit file to their VM as an optical drive.

- Physical DVD

If you prefer to use a physical DVD, the installation kit can be burned to a DVD. If you choose to burn a DVD, keep in mind that the file is already an ISO disc image, so it must be burned as-is to the DVD in order to retain the correct boot block and partition table structures. Do not attempt further conversions of the file.

Note

Even though VSI OpenVMS has no USB support, the Boot Manager is fully capable of accessing a USB-based DVD reader. On most VMs, you can define the USB DVD reader as a SATA optical disk. When loading an installation kit, the Boot Manager reads the entire DVD image into memory using UEFI block I/O drivers, thus eliminating the need for VSI OpenVMS runtime drivers during installation.

- Web Server

The installation kit can be uploaded to your internal web server, allowing it to be accessed and installed by your VM guests.

To boot from a web server, users need to first download a small UEFI (console) utility named VMS_KITBOOT.EFI. The kitboot utility is available from an existing VSI OpenVMS installation and is also included with the OpenVMS Boot Manager in the \EFI\BOOT or \EFI\VMS folder. The utility can be copied to a USB stick or to the EFI partition of a local disk. When executed, the utility will prompt for the IP address of the web server and download the specified kit to the guest. Web server installation is only possible on a network managed by a DHCP server.

These methods are described in greater detail in Chapter 4, "Pre-Installation, Installation, and Post-Installation"; the virtual DVD method is also covered in the Installation Guide for your version of VSI OpenVMS x86-64 (available at docs.vmssoftware.com).
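Because the kit must be attached or burned as-is, it can be useful to confirm that a downloaded file still looks like an ISO 9660 image before using it. The following is a minimal sketch, not a VSI-provided tool: the function names are hypothetical, and the check relies only on the standard ISO 9660 layout (volume descriptors start at sector 16 of 2048-byte sectors and carry the identifier `CD001`). It does not validate the OpenVMS boot structures or the integrity of the kit.

```python
ISO_SECTOR_SIZE = 2048   # logical sector size used by ISO 9660
FIRST_DESCRIPTOR = 16    # volume descriptors start at sector 16
ISO_IDENTIFIER = b"CD001"  # standard identifier in every volume descriptor


def looks_like_iso9660(image: bytes) -> bool:
    """Return True if the bytes carry an ISO 9660 volume descriptor."""
    offset = FIRST_DESCRIPTOR * ISO_SECTOR_SIZE
    descriptor = image[offset:offset + 7]
    # Byte 0 is the descriptor type; bytes 1-5 hold the "CD001" identifier.
    return len(descriptor) == 7 and descriptor[1:6] == ISO_IDENTIFIER


def check_kit_file(path: str) -> bool:
    """Read just enough of a kit file to test for the ISO 9660 identifier."""
    with open(path, "rb") as kit:
        head = kit.read((FIRST_DESCRIPTOR + 1) * ISO_SECTOR_SIZE)
    return looks_like_iso9660(head)
```

A file that fails this check has probably been truncated or converted to another format and should be downloaded again rather than burned or attached.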

Chapter 2. The Boot Process

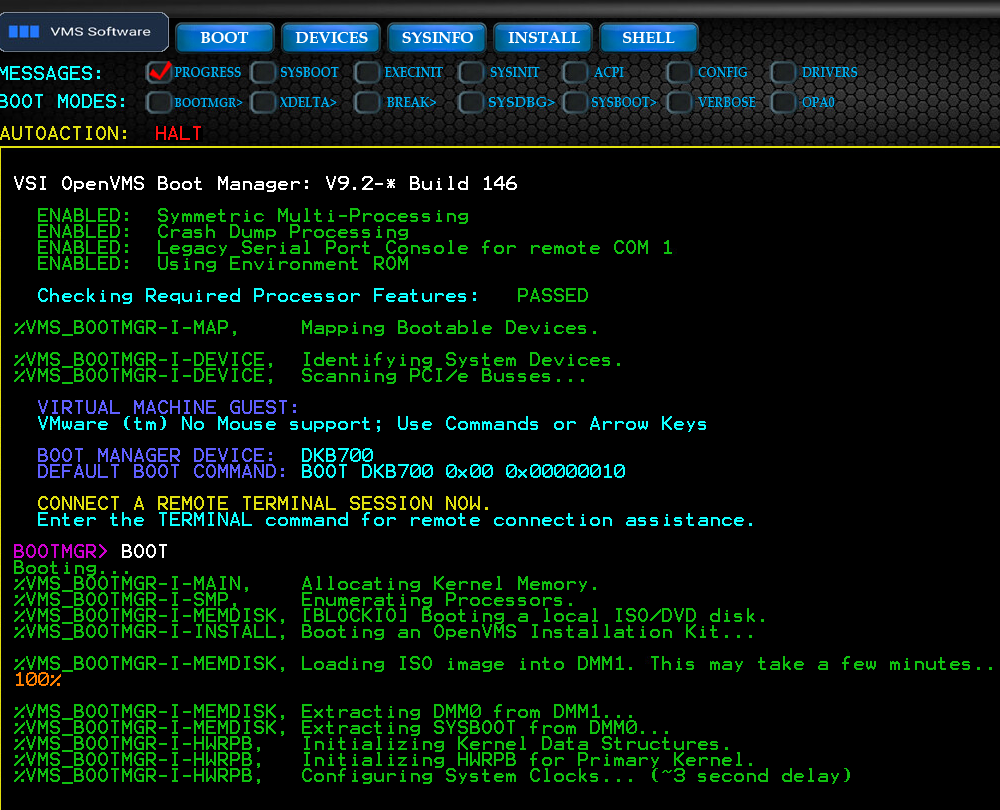

2.1. Basic Boot

Start your VM guest.

VSI OpenVMS is booted from the UEFI Shell> prompt or from a platform firmware boot option. If you have configured your system's boot options and boot order correctly, the UEFI shell application should start automatically.

Note

Some hypervisors/platforms may require additional configuration steps to obtain a UEFI shell application. For details, refer to the Installation Guide for your version of VSI OpenVMS x86-64 (available at docs.vmssoftware.com), as well as your hypervisor documentation.

Follow the steps below to boot from UEFI:

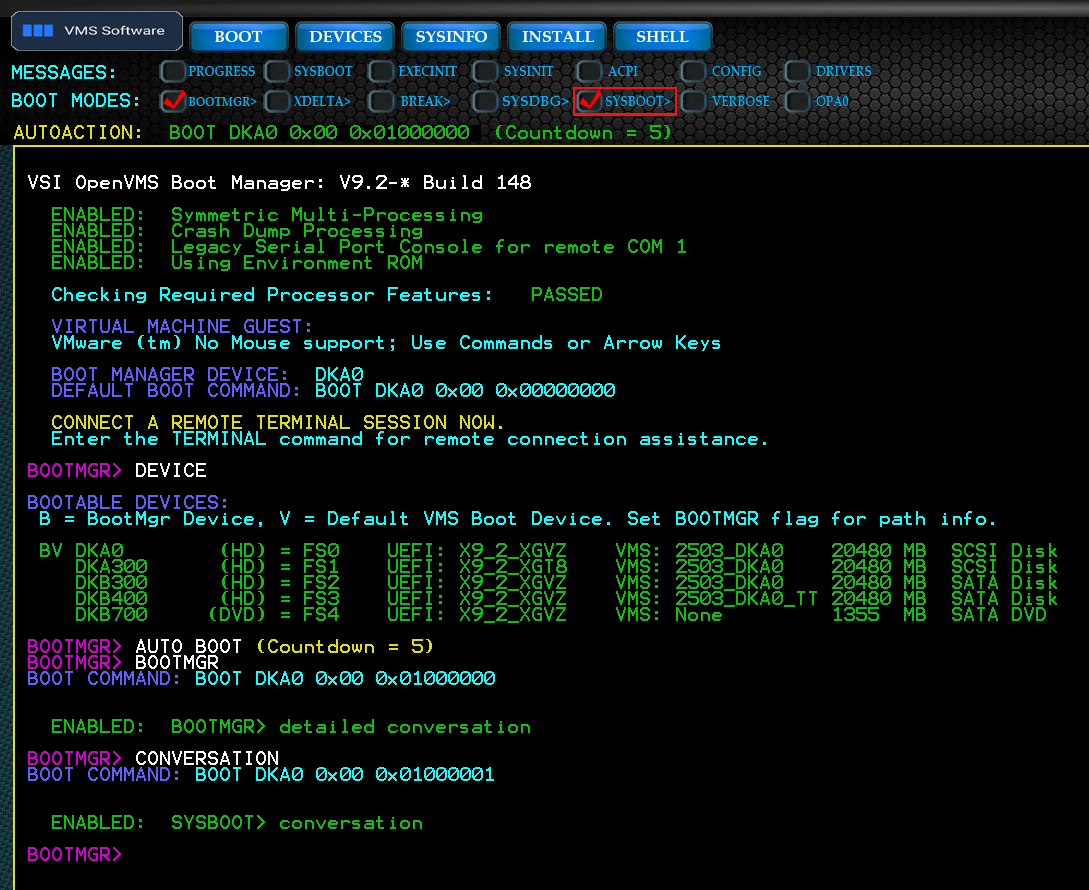

| At the UEFI prompt... | ...type this command |
|---|---|
| Shell> | VMS_BOOTMGR.EFI |
| BOOTMGR> | DEVICE (returns the list of VSI OpenVMS-bootable devices) |
| BOOTMGR> | BOOT device (causes the specified disk to boot) |

As shown above, from the UEFI Shell> prompt, launch the VSI OpenVMS Boot Manager, select your boot source device, and issue the BOOT command specifying your desired boot device name. If you have previously booted, then your prior BOOT command will be used by default and you can omit the device name from your BOOT command. Pay attention to your Default Boot Command as shown, to be sure you are booting from the desired device.

Note

On x86-64 systems, the platform firmware boot options simply point to a disk where the Boot Manager resides. Since the Boot Manager can boot any bootable disk, the actual system disk to be booted is selected by the Boot Manager. If the Boot Manager previously booted a different system disk, you need to manually enter the desired BOOT command (one time) to update the default boot device. Subsequent automatic boots will then work as expected.

Boot flags control various aspects of booting. Boot flags and system roots can be managed by the Boot Manager FLAGS and ROOT commands, specified as parameters of the BOOT command, or specified on the initial command line or platform firmware boot option when you launch the Boot Manager. The existing boot flags are described in Section 3.3.3, ''Boot Flags''.

For example, to boot from system root 0 and hex flag value 807, you could take one of the following three approaches:

- Set individual parameters using the Boot Manager FLAG and ROOT commands before issuing the BOOT command:

  FLAG 807

  ROOT 0

  BOOT device

- Issue the following fully-qualified BOOT command:

  BOOT device 0 807

- Issue the following shell command:

  Shell> VMS_BOOTMGR.EFI device -fl 0,807
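The equivalence of the three approaches can be illustrated with a short sketch. This is only an illustration of the command forms shown in this section, not a VSI utility: the function name and dictionary keys are hypothetical, and formatting the root in hexadecimal (as the flags are) is an assumption.

```python
from typing import Dict, List, Union


def boot_command_forms(device: str, root: int, flags: int) -> Dict[str, Union[str, List[str]]]:
    """Build the three equivalent ways to request the same boot."""
    flags_text = format(flags, "X")  # flags are written in hexadecimal, e.g. 807
    root_text = format(root, "X")    # assumption: roots are written the same way
    return {
        # 1. Individual Boot Manager commands, issued one after another.
        "separate_commands": [f"FLAG {flags_text}", f"ROOT {root_text}", f"BOOT {device}"],
        # 2. A single fully-qualified BOOT command.
        "qualified_boot": f"BOOT {device} {root_text} {flags_text}",
        # 3. Parameters passed on the UEFI shell command line.
        "shell_launch": f"VMS_BOOTMGR.EFI {device} -fl {root_text},{flags_text}",
    }
```

For example, `boot_command_forms("DKA0", 0, 0x807)` yields the strings `BOOT DKA0 0 807` and `VMS_BOOTMGR.EFI DKA0 -fl 0,807`, which mirror the second and third approaches above.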

UEFI commands can also be inserted (one per line) into the file STARTUP.NSH if you prefer to always boot the same device.

The Boot Manager AUTO BOOT command provides a brief countdown prior to taking an automatic action. This countdown can be adjusted by the command AUTO seconds, where seconds is a value between 1 and 30. Entering AUTO 0 is equivalent to entering AUTO HALT without changing the countdown.
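The countdown rule can be expressed as a small helper, for example when generating Boot Manager commands from a script. This is a sketch under the rules stated above only; the function name is hypothetical and the Boot Manager itself may accept or reject input differently.

```python
def auto_command(seconds: int) -> str:
    """Return the AUTO command text for a requested countdown value."""
    if seconds == 0:
        # AUTO 0 behaves like AUTO HALT and leaves the countdown unchanged.
        return "AUTO HALT"
    if 1 <= seconds <= 30:
        return f"AUTO {seconds}"
    raise ValueError("countdown must be 0 or a value between 1 and 30 seconds")
```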

Note

The boot flags for VSI OpenVMS on x86-64 are significantly different from those on prior architectures. Refer to Section 3.3, ''Boot Modes, Boot Messages, and Boot Flags'' for details, or simply issue the Boot Manager FLAGS command to see the flag definitions.

The VSI OpenVMS Boot Manager is independent from the VSI OpenVMS x86-64 operating system version. Any version of Boot Manager should be able to load any instance of VSI OpenVMS x86-64 V9.2-2 (or higher). The features offered by specific Boot Manager versions will continue to develop, but all versions will support essential boot operation.

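To launch a specific copy of the Boot Manager, first select its UEFI file system device and directory. For example:

Shell> fs2:

fs2:> cd EFI\VMS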

If you launch the Boot Manager without first selecting the UEFI file system device and directory, your platform firmware will scan a search path of fsx: file system devices and launch the first copy of VMS_BOOTMGR.EFI it locates. Often, platform firmware setup mode allows you to define or constrain this search path so that your desired boot operation is automatic. We will discuss several options for setting up automatic actions in later sections.
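The "first copy found wins" behavior can be modeled with a short sketch. The data structures and function name are hypothetical, and the real search order and searched directories are determined by your platform firmware, so treat this only as an illustration of why the order of fsx: devices matters.

```python
from typing import Mapping, Optional, Sequence, Set

BOOTMGR_NAME = "VMS_BOOTMGR.EFI"


def first_bootmgr(search_path: Sequence[str],
                  contents: Mapping[str, Set[str]]) -> Optional[str]:
    """Return the first Boot Manager found while walking the search path.

    search_path lists file system devices in scan order (for example "fs0:");
    contents maps each device to the file paths it holds.
    """
    for device in search_path:
        matches = sorted(
            path for path in contents.get(device, set())
            # Compare only the file name; FAT names are case-insensitive.
            if path.upper().rsplit("\\", 1)[-1] == BOOTMGR_NAME
        )
        if matches:
            return device + matches[0]
    return None
```

With two bootable disks present, swapping the order of the devices in `search_path` changes which copy is launched, which is why constraining the search path in firmware setup makes automatic booting predictable.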

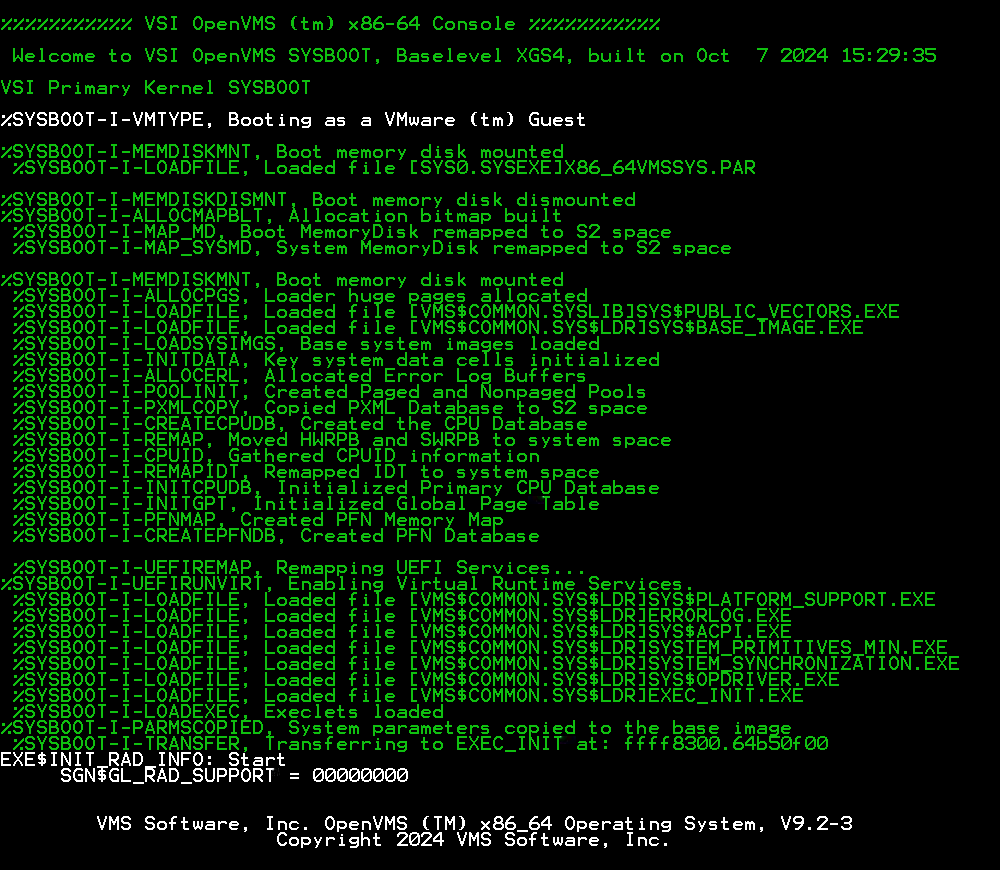

2.2. Boot Process Overview

VSI OpenVMS x86-64 V9.2 introduced a new boot process, which is also used in VSI OpenVMS x86-64 V9.2-2 or higher. This section contains a brief overview of the components involved in the new process. The next section describes the process in greater detail.

- Secure Boot

If this feature is present in your platform’s firmware, it must be disabled. See Section 1.2, ''Secure Boot and TPM'' for more details.

- Platform Firmware

VSI OpenVMS requires UEFI-based console firmware, as opposed to older legacy BIOS-based consoles.

BIOS (Basic Input/Output System) is an older form of platform firmware based in system flash memory, or ROM. BIOS provides the most essential methods for initializing, loading, and booting an operating system, but it is very limited and its specifications impose many constraints on modern hardware.

In 1999, Intel introduced EFI (Extensible Firmware Interface) which is a set of procedures designed to provide a consistent and feature-rich boot environment. In 2005, EFI was moved to an open-source project (TianoCore) and renamed to UEFI (Unified Extensible Firmware Interface). Practically all systems today provide UEFI. UEFI is also typically installed in flash memory and sometimes as a layer of firmware on top of traditional BIOS. The term "Legacy Boot" refers to booting with traditional BIOS or using a compatibility mode of UEFI which interfaces with BIOS. The VSI OpenVMS Boot Manager makes extensive use of UEFI’s more advanced features.

Note

VSI OpenVMS does not support legacy BIOS mode and cannot be installed on x86-64 systems that do not provide support for UEFI.

- Firmware Shell Utility

Upon initialization, x86-64 systems should be set up to run the UEFI shell application. The UEFI shell can be set up to automatically launch the VSI OpenVMS Boot Manager utility; alternatively, the Boot Manager can be invoked manually from the shell command line. Quite often, the shell is hidden from users and requires changing some setup parameters. For example, disabling Secure Boot will often enable additional setup menu options for accessing the UEFI shell. If necessary, a shell application can be installed where one is not provided by default.

- VSI OpenVMS Boot Manager

The VSI OpenVMS Boot Manager is bundled into the installation media and will be run from it during the initial installation. After installation, the Boot Manager can be run from any UEFI file system device, as it is not coupled to a specific VSI OpenVMS instance or version. When performing an installation from a web server, the Boot Manager is downloaded separately, prior to downloading the full ISO image. After installation, the Boot Manager is typically, but not necessarily, run from the installed system disk.

The VSI OpenVMS Boot Manager should not be confused with a platform firmware boot manager (typically blue setup screens and menus). The VSI OpenVMS Boot Manager executes after a system or VM has booted and is ready to load VSI OpenVMS. The VSI OpenVMS Boot Manager provides commands for managing boot modes, messages, and devices. Potential boot devices are identified and enumerated using VSI OpenVMS device naming conventions. A BOOT device command causes the Boot Manager to locate and load the operating system from the specified boot device.

- MemoryDisk

Unlike prior releases of VSI OpenVMS for VAX, Alpha, or IA-64 systems, all of the files that comprise the core VSI OpenVMS kernel are pre-packaged into a logical disk container file, referred to as the MemoryDisk. Whether booting from a local drive or over a network, the MemoryDisk is loaded into memory by the VSI OpenVMS Boot Manager using UEFI physical block I/O drivers. This greatly simplifies and speeds up the boot process while eliminating the need for VSI OpenVMS to provide boot drivers for every supported type of boot device.

During installation, the MemoryDisk will contain the full ISO image, regardless of whether it is downloaded from a web server or loaded from a virtual or physical DVD. After installation, on subsequent boots, the MemoryDisk contains only the minimal kernel files that are required to achieve the ability to switch to runtime drivers.

- Dump Kernel

During boot, after the Boot Manager loads the MemoryDisk into memory and initiates boot of the primary kernel, the MemoryDisk remains memory-resident. In the event of a primary kernel shutdown or crash, the same MemoryDisk is booted a second time into separate memory space, forming what is known as the dump kernel. The dump kernel is a limited-functionality VSI OpenVMS instance that processes a crash dump using runtime drivers and, upon completion, initiates a system reset. Because the MemoryDisk is already resident in memory, the dump kernel boots nearly instantly and processes the crash data much faster than prior implementations that required primitive device drivers.

- Boot Blocks

Since the embedded MemoryDisk is an entity bootable separately from the system disk, VSI OpenVMS V9.2-2 (or higher) supports additional inner boot blocks around the MemoryDisk itself. The MemoryDisk container file resides within the system disk image, which has its own set of boot blocks. We refer to these as the outer boot blocks. When booting a system disk, the outer boot blocks are used to locate the inner boot blocks. For the most part, this added complexity is transparent to the user. Related utilities such as Backup, PCSI, SYSGEN, etc. have been modified to maintain the integrity and security of the embedded MemoryDisk. The use of symlinks allows access to MemoryDisk-resident files from system components.

2.3. Boot Process in Greater Detail

The VSI OpenVMS x86-64 boot process is significantly different from the VAX, Alpha, or IA-64 implementations. The VSI OpenVMS Boot Manager leverages many of the advanced capabilities of UEFI to provide a rich pre-boot environment.

The port of VSI OpenVMS to the x86-64 architecture has also provided an opportunity to modernize and improve the efficiency of the boot process.

The many individual files (~180) involved in OS startup are now consolidated into a single MemoryDisk image. Consolidation of these files into a single logical disk container file offers new options for network booting and greatly enhances boot performance and integrity.

Some of the major features of the Boot Manager and new boot process include:

- A graphical user interface (GUI) that supports touchpad, touchscreen, and USB keyboard/mouse (on systems that report mouse events).

- A rich command set for customers, support staff, and developers.

- Functional decoupling of the Boot Manager from the operating system, which means it can boot the system from a different device.

- Enumeration of bootable devices, which uses VSI OpenVMS device naming conventions.

- A single MemoryDisk image file to contain the bootable VSI OpenVMS kernel.

- An ability to download the boot source from a web server.

- A second kernel that can be initialized to handle shutdown and crash dump processing.

- Embedded security features to assure boot process integrity.

2.3.1. MemoryDisk

The most significant change in the boot process is the use of a new "MemoryDisk" boot method.

This small disk image is contained in the file SYS$COMMON:[SYS$LDR]SYS$MD.DSK. During boot, this device is represented as DMM0:; when connected as a logical disk at runtime, it is also represented as LDM1:.

The minimum bootable VSI OpenVMS kernel comprises approximately 180 files.

On prior architectures, during a network installation, the OS loader (an EFI application) constructed a memory-resident, pseudo-system disk containing a set of the essential directories found on a system disk. It then parsed a list of files, downloading and copying each file into its proper directory. The boot process ran from files in this memory-resident disk, eventually reaching a point where it could mount the real system disk and use runtime drivers for ongoing file access. The initial memory-resident disk was then dissolved.

This relatively slow and complex process resulted in an OS loader that needed to understand VSI OpenVMS disk and file structures and was tied to the specific device and version of VSI OpenVMS being booted. It also required special primitive boot drivers for every supported boot device.

The new MemoryDisk boot method eliminates most of this complexity and decouples the OS loader (Boot Manager) from a specific device or instance of x86-64 VSI OpenVMS. Instead of downloading a list of files and constructing a pseudo-system disk image in memory at boot time, a single pre-packaged MemoryDisk container file (SYS$MD.DSK) is provided on distribution media (the installation kit) and on every bootable VSI OpenVMS system device.

The MemoryDisk contains all of the files that are required to boot the minimum VSI OpenVMS kernel. The Boot Manager loads the MemoryDisk into memory and transfers control to the secondary bootstrap program (SYSBOOT.EXE). It can do this using the physical block I/O and file I/O drivers provided by UEFI, eliminating the need for VSI OpenVMS boot drivers and knowledge of VSI OpenVMS file systems. All of the files required by the dump kernel also reside in the MemoryDisk, allowing the dump kernel to directly boot from the pre-loaded MemoryDisk.

As the Boot Manager loads the MemoryDisk, it performs several security and integrity checks before transferring control to SYSBOOT.EXE. Errors in these checks are deemed fatal and result in clearing memory and performing a system reset.

The MemoryDisk is a container file that is structured as a bootable system disk, having its own (inner) boot blocks and GUID Partition Table (GPT), but containing only those files which are required by the early boot process; hence, a mini-kernel. When the system is running, the OS uses symlinks to direct file references to their actual locations for any files that reside inside the MemoryDisk.

The contents of the MemoryDisk must be kept up to date. Any changes to these MemoryDisk-resident files through parameter file modifications, PCSI kit or patch installation, and so on, require the operating system to execute a procedure to update the MemoryDisk container. This ensures that the next boot will use the new images. This is handled by system utilities and is largely transparent to end users. The command procedure SYS$UPDATE:SYS$MD.COM handles updating the MemoryDisk container.

Note

Do not invoke SYS$MD.COM directly unless you are advised to do so by VSI Support, or when required while following documented procedures. For example, if you load a user-written execlet by running SYS$UPDATE:VMS$SYSTEM_IMAGES.COM, you must then invoke SYS$UPDATE:SYS$MD.COM.

Important

VSI does not recommend changing, moving, or manipulating SYS$MD.DSK or SYS$EFI.SYS (or the underlying EFI partition) in any way. These files are needed to boot the system and are maintained by OpenVMS.

As the MemoryDisk is an actual bootable disk image, it implements its own (inner) boot blocks consisting of a Protective Master Boot Record (PMBR), a GPT, and multiple disk partition table entries (GPTEs).

The MemoryDisk image contains two ODS-5 structured partitions (signatures: X86VMS_SYSDISK_LOW and X86VMS_SYSDISK_HIGH) and one FAT-structured UEFI partition (signature: X86_EFI).
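To make the inner boot block layout more concrete, the following sketch reads the GPT header and partition entries from a raw disk image held in memory. It follows the generic GPT layout from the UEFI specification (header at LBA 1 beginning with `EFI PART`, 128-byte entries with a UTF-16 name field) and assumes 512-byte blocks. The function and type names are hypothetical, and this guide does not state which GPT field holds the signatures listed above, so the sketch simply reports each entry's name field.

```python
import struct
from typing import List, NamedTuple

SECTOR = 512  # assumed logical block size in bytes


class Partition(NamedTuple):
    name: str
    first_lba: int
    last_lba: int


def read_gpt_partitions(image: bytes) -> List[Partition]:
    """List the in-use GPT partition entries of a raw disk image."""
    header = image[SECTOR:SECTOR + 92]          # GPT header lives at LBA 1
    if header[:8] != b"EFI PART":
        raise ValueError("no GPT header found at LBA 1")
    # Offset 72: starting LBA of the entry array, entry count, entry size.
    entries_lba, count, entry_size = struct.unpack_from("<QII", header, 72)
    partitions = []
    for index in range(count):
        start = entries_lba * SECTOR + index * entry_size
        entry = image[start:start + entry_size]
        if len(entry) < 128 or entry[:16] == bytes(16):
            continue                             # all-zero type GUID: unused slot
        first_lba, last_lba = struct.unpack_from("<QQ", entry, 32)
        name = entry[56:128].decode("utf-16-le").split("\x00", 1)[0]
        partitions.append(Partition(name, first_lba, last_lba))
    return partitions
```

Run against a copy of a disk image, a function like this would be expected to report three in-use entries, matching the two ODS-5 partitions and one UEFI partition described above. Work only on copies; the MemoryDisk container itself should not be manipulated.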

An installed system disk also has its own (outer) boot blocks consisting of GPT structures and three partitions, similar to the MemoryDisk. The outer boot blocks contain a pointer to the MemoryDisk. Therefore, we can either boot a system disk (extracting the MemoryDisk from it) or directly boot a MemoryDisk. Direct boot of a MemoryDisk is primarily a development feature with some future uses.

During a normal boot from an installed system disk, the Boot Manager uses the outer boot blocks to locate and extract the MemoryDisk (SYS$MD.DSK) from the system disk. The MemoryDisk displayed to the user as DMM0: is loaded into memory and, once the logical disk is connected (as LDA1:), it becomes the system device until the boot process reaches a point where it can mount the full, physical system disk. At that stage, DMM0: is set offline but is retained in case the dump kernel needs to boot.

Note

It should never be necessary to run the BACKUP command on the (memory-resident) MemoryDisk as its own device (DMM0: or LDA1:). The MemoryDisk container file is backed up during a normal system backup operation.

Important

To avoid invalidating the MemoryDisk inner boot blocks, never dismount, disconnect, or remove DMM0: or LDM1:.

During a network installation, the entire installation kit image is downloaded into memory as DMM1: and the embedded MemoryDisk DMM0: is extracted from the kit (using the outer boot blocks). In this one special case and only for the duration of the installation procedure, we have two memory-resident disk images: the installation kit (DMM1:) and the MemoryDisk contained within the installation kit (DMM0:). Both of these memory-resident disks are serviced by the new MemoryDisk driver: SYS$DMDRIVER.EXE.

2.3.2. UEFI Partition

VSI OpenVMS is a three-partition system. Of the three disk partitions, the UEFI FAT-structured partition is the only partition that is recognized as a bootable partition by UEFI. Thus, during pre-boot execution, UEFI only sees this one partition and the files contained therein. This is where the VSI OpenVMS Boot Manager and any other UEFI executables reside. The other two ODS-5 structured partitions (and the files they contain) remain invisible to UEFI.

You can view the UEFI disk device paths using the following shell command:

Shell> map -b

The resulting display shows device paths for both block I/O devices (blkx:) and file system devices (fsx:). File system devices are those which contain a UEFI partition and UEFI executable files. Each fsx: device path maps to its underlying blkx: device, but the blkx: devices are not directly accessible from the UEFI shell.

A bootable VSI OpenVMS disk will have four device mappings associated with it; one file system (fsx:) device, where the Boot Manager resides, and three block I/O (blkx:) devices, representing the three partitions (one FAT and two ODS-5 partitions). Looking at these device paths, you will note that they contain, among other things, a PCI device path, a storage device type (Sata, Fibre, etc.), and a hard disk identifier (HD1, HD2, CDROM, etc.). The bootable partition will always be shown as HD1. HD2 and HD3 are the ODS-5 partitions where the majority of VSI OpenVMS files reside.

If you want to launch a specific instance of the Boot Manager, then you will need to identify the UEFI fsx: device that is assigned to your desired boot disk (as you always had to do in IA-64 systems). You can then switch to that fsx: device and to the \EFI\VMS folder where you will find and launch VMS_BOOTMGR.EFI.

For x86-64, the Boot Manager can be run from any fsx: disk and boot any other (bootable) fsx: disk. Thus, you can simply launch the Boot Manager directly from the shell as follows:

Shell> fs0:\EFI\VMS\VMS_BOOTMGR.EFI

BOOTMGR> BOOT DKA0

BOOTMGR> BOOT FS2

When a BOOT command has been issued, the Boot Manager locates an extent map contained in the boot blocks of the target disk. The extent map contains the information required to locate and load the MemoryDisk and the secondary bootstrap image SYSBOOT.EXE which resides in one of the ODS partitions.

The Boot Manager loads the MemoryDisk and SYSBOOT.EXE using the UEFI block I/O protocol, the LBA (Logical Block Address, or LBN), and the size specified in the extent map. Since UEFI contains block I/O protocols for every supported type of boot device, we no longer need VMS-specific boot drivers. During early boot, while running memory-resident, SYSBOOT.EXE loads and uses the new VSI OpenVMS MemoryDisk driver (SYS$DMDRIVER). Later, during SYSINIT, device-specific runtime drivers are loaded for access to the full physical system disk.
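Loading by LBA and size is what lets the Boot Manager avoid any knowledge of the file system: an extent is just a contiguous run of blocks. The sketch below shows the arithmetic on an in-memory disk image. The `Extent` type, the 512-byte block size, and the assumption that the size is expressed in blocks are illustrative only; the actual extent map format is not described in this guide.

```python
from typing import NamedTuple

BLOCK_SIZE = 512  # assumed block size in bytes


class Extent(NamedTuple):
    lba: int     # logical block address where the data starts
    blocks: int  # length of the data in blocks (assumed unit)


def read_extent(disk: bytes, extent: Extent, block_size: int = BLOCK_SIZE) -> bytes:
    """Return the bytes covered by one extent of a raw disk image."""
    start = extent.lba * block_size
    end = start + extent.blocks * block_size
    if end > len(disk):
        raise ValueError("extent runs past the end of the disk image")
    return disk[start:end]
```

No directory lookup or file header parsing is involved, which is why a generic block I/O service is sufficient for this stage of the boot.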

The UEFI partition is implemented as a logical disk container file named [SYSEXE]SYS$EFI.SYS. This is a full implementation of the UEFI partition; the folders and files are visible from the UEFI shell and typically include \EFI\BOOT, \EFI\VMS, \EFI\UPDATE, and \EFI\TOOLS.

In order to allow the MemoryDisk itself to be a bootable entity, the MemoryDisk container file [SYSEXE]SYS$MD.DSK contains a reduced-functionality UEFI partition implemented as another logical disk container file named: [SYSEXE]MEM$EFI.SYS. Although there are no UEFI utilities in this boot-only UEFI partition, its inner boot blocks contain just enough information to locate and load SYSBOOT.EXE when the MemoryDisk is directly booted.

To be technically complete, the installation files and kit must adhere to ISO 9660 structure and boot method. Per ISO specification, yet another copy of the UEFI partition exists as the ISO boot file. Per UEFI specification, the ISO boot file must be a UEFI partition instead of an executable image. Therefore, when an ISO DVD is detected by UEFI firmware, UEFI treats the ISO boot file as a file system partition. Subsequently, if the partition contains a UEFI application named \EFI\BOOT\BOOTX64.EFI, the UEFI firmware will automatically launch the application. In the case of OpenVMS, this is a copy of the VSI OpenVMS Boot Manager. This causes the Boot Manager to execute automatically when the installation kit file is assigned to an optical device or an installation kit DVD is inserted into the DVD drive.

Due to the automatic launch feature of optical discs that contain fsx:\EFI\BOOT\BOOTX64.EFI, VMS Software strongly suggests that you disconnect these devices after installation to prevent unwanted launching from the write-locked DVD device. After installation completes, the installation procedure will launch the Boot Manager from fsx:\EFI\VMS\VMS_BOOTMGR.EFI on the installed disk.

2.3.3. UEFI Folders

There are several folders in the UEFI partition:

- \EFI\BOOT (Default Boot File)

This folder is used for automatic booting from a disk. The Boot Manager and related files are in this folder. There is also a file named BOOTX64.EFI, which is just a copy of VMS_BOOTMGR.EFI given a special name. When a UEFI boot option that points to a specific disk is defined, UEFI will look for a default boot file, \EFI\BOOT\BOOTX64.EFI, and if found, will execute it.

- \EFI\VMS

This folder contains the normal OS-specific boot files. In our case, it has all the same Boot Manager files in it, except it does not contain BOOTX64.EFI.

- \EFI\TOOLS

This folder may contain optional UEFI applications deemed useful for maintenance.

- \EFI\UPDATE

This folder may contain firmware updates for the system or adapters.

Note

Users should avoid including additional files in the UEFI folders, except where necessary to perform firmware and adapter updates. Copies of any such files should be kept elsewhere to avoid potential loss should the partition be updated.

2.3.4. UEFI Files

The following files may be found in the \EFI\VMS and \EFI\BOOT folders:

- VMS_BOOTMGR.EFI

This is the VSI OpenVMS Boot Manager executable.

- VMS_KITBOOT.EFI

This is the utility for downloading VSI OpenVMS from a web server. It is an optional file that is used only for downloading installation kits from a local web server. If this file is renamed or removed, the Boot Manager's INSTALL command and corresponding push button will be disabled.

- VMS_IMG.BMP

This is a background bitmap used by the Boot Manager when in graphical mode. This image can be customized per instructions in Section 3.2.3, ''Custom Background Image''. If this file is renamed or removed, the Boot Manager will fall back to a less graphical mode of operation.

- VMS_ENV.DAT

This is created by the Boot Manager and contains various application parameters in binary form. The contents can be viewed by the Boot Manager command ENV. It is updated and saved whenever one of the parameters is changed and the user issues one of the following commands: BOOT, EXIT, SHELL, or SAVE. If the file is deleted, a new copy will be created when the Boot Manager is next launched.

- VMS_OPT.TXT

This is an optional file created by the user. It contains a simple list of fully-qualified BOOT commands. If the file exists, the Boot Manager allows selection of BOOT commands by line number. For example, BOOT #3 would execute the BOOT command from the third line. This is primarily intended for VMS Software internal use.

Additional files may appear as development continues.

2.3.5. Locating the MemoryDisk

When the Boot Manager is launched, it scans the system hardware to locate all bootable devices. A bootable device is one that contains a GPT and, minimally, a UEFI partition. If the GPT contains structures indicating the presence of VSI OpenVMS partitions, the volume details (labels, size, etc.) will be displayed as a bootable VSI OpenVMS device by the DEVICE command.

When a BOOT command is issued, the Boot Manager attempts to locate the MemoryDisk inside the boot source device. It does this by scanning the boot block structures for a specific signature. If found, additional extent records in the boot block indicate the LBA (Logical Block Address) and size of the MemoryDisk. Up to 24 extent records can describe the full MemoryDisk image. Since device access at this stage is performed exclusively based on physical block references, the Boot Manager can use the built-in block I/O drivers provided by UEFI to load the MemoryDisk into system memory. This same method is used regardless of whether we are booting an installed system disk or an installation kit from an optical drive or over a network.
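The extent-based load described above can be sketched in Python. This is an illustration only: the record layout (a starting LBA plus a block count), the 512-byte block size, and the `read_blocks` callback standing in for a block I/O service are all assumptions, since the actual boot block format is not described in this guide.

```python
from typing import Callable, List, Tuple

BLOCK_SIZE = 512   # assumed block size for this illustration
MAX_EXTENTS = 24   # limit stated in the text

# Hypothetical extent record: (starting LBA, number of blocks)
Extent = Tuple[int, int]


def load_memorydisk(extents: List[Extent],
                    read_blocks: Callable[[int, int], bytes]) -> bytes:
    """Concatenate the blocks named by each extent record, in order."""
    if not extents:
        raise ValueError("no extent records found")
    if len(extents) > MAX_EXTENTS:
        raise ValueError("too many extent records")
    image = bytearray()
    for lba, count in extents:
        data = read_blocks(lba, count)
        if len(data) != count * BLOCK_SIZE:
            raise IOError(f"short read at LBA {lba}")
        image += data
    return bytes(image)


def make_fake_disk(total_blocks: int) -> Callable[[int, int], bytes]:
    """Stand-in for a block device: every block is filled with (LBA mod 256)."""
    def read_blocks(lba: int, count: int) -> bytes:
        if lba < 0 or lba + count > total_blocks:
            return b""  # out-of-range request yields a short read
        return b"".join(bytes([n % 256]) * BLOCK_SIZE
                        for n in range(lba, lba + count))
    return read_blocks
```

Because the loader depends only on block numbers and counts, the same routine works for any source that can satisfy `read_blocks`, which mirrors why a single method serves disks, optical media, and memory-resident kit images.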

When booting from a virtual or physical device, the boot source device's (outer) boot block contains the location (Logical Block Address) and size of the MemoryDisk and the relative location and size of SYSBOOT.EXE within the MemoryDisk. SYSBOOT.EXE is the initial VSI OpenVMS bootstrap program to be loaded and executed.

When booting the installation kit over a network, the VMS_KITBOOT.EFI utility downloads the entire installation kit image. It then launches the Boot Manager. The Boot Manager, using the outer boot blocks, locates, extracts, validates, and loads the MemoryDisk from within the memory-resident installation kit image and then, using the inner boot blocks, locates, extracts, validates, loads, and executes SYSBOOT.EXE.

Before transferring control to SYSBOOT.EXE, the Boot Manager performs a variety of other tasks required to prepare the system for execution, including allocation and initialization of boot-related data structures, such as the Hardware Restart Parameter Block (HWRPB), enumeration of devices, and establishing the memory maps. It does this for both the primary kernel and the dump kernel.

If a bugcheck occurs at any point after VSI OpenVMS is booted, the dump kernel is already resident in system memory (this includes the MemoryDisk and a separate copy of SYSBOOT.EXE). The crashing primary kernel passes control to the dump kernel, which will rapidly boot, perform its tasks, and reset the system. This independent dump kernel provides for faster crash dump and shutdown processing using runtime device drivers.

The operating system procedures that build and maintain the MemoryDisk must also maintain the inner boot blocks of the MemoryDisk and the outer boot blocks of the system disk's UEFI partition. If system files that reside within the MemoryDisk are updated, by installation of a patch kit for example, the involved utilities will invoke a command procedure (SYS$MD.COM) to create a new MemoryDisk image so that any changes will be preserved for the next system boot.

Chapter 3. The Boot Manager

3.1. VSI OpenVMS Boot Manager

The VSI OpenVMS x86-64 Boot Manager loads the VSI OpenVMS operating system as a guest operating system on a hypervisor.

The Boot Manager is a UEFI application located on the boot device, installation media, or network distribution site. The actual on-disk file is fsx:\EFI\VMS\VMS_BOOTMGR.EFI.

While VSI OpenVMS IA-64 systems used a similar console application named VMS_LOADER.EFI, they are crucially different. The Boot Manager application is also an OS loader, but it specifically serves OpenVMS x86-64 and provides a considerably different and extended functionality. The IA-64 loader image offered very few features. Additional EFI utilities were required to help users identify devices and manage the boot environment. The VSI OpenVMS x86-64 Boot Manager has many built-in features, eliminating the need for additional utilities.

It is important to note that platform firmware often provides its own form of a built-in boot manager, usually presented as a series of simple menus that manage platform setup features and let users define various boot options. Do not confuse these platform-specific setup programs with the VSI OpenVMS Boot Manager. If you are familiar with the Linux boot process, you can consider the VSI OpenVMS Boot Manager to be analogous to the GRUB boot loader utility, but specifically tailored to the needs of VSI OpenVMS.

To boot VSI OpenVMS, the x86-64 system should be set up to launch the UEFI shell application. From the Shell> prompt, launch (manually or automatically) the VSI OpenVMS Boot Manager. Although the VSI OpenVMS Boot Manager can run without the UEFI shell, the shell provides local UEFI file services. Without these services, certain functions of the VSI OpenVMS Boot Manager will not be available. Most notably, the enumeration of VSI OpenVMS device names (DKA100:, etc.) is not possible or precise, and the user may need to boot using UEFI file system device names (fs1:, etc.).

3.2. Boot Manager Basics

This section describes the operation of the VSI OpenVMS Boot Manager. Most customers will be unlikely to need anything more than the basic features of the Boot Manager. Normally functioning systems can be set up to automatically boot with no interaction with the Boot Manager at all. The more advanced features of the Boot Manager provide functions required to initially establish the desired boot environment, set it up for automatic booting, and diagnose boot problems.

3.2.1. Automatic Launching

There are several ways to set up a system to automatically launch the VSI OpenVMS Boot Manager. As a stand-alone UEFI application, it can be launched from one disk and boot another. This can lead to confusion when several bootable disks are present, so the user needs to be careful about how this is set up.

Most UEFI implementations maintain a path-like list of all file system devices. UEFI can use this path to search for specific files among multiple disks. Such searches begin with fs0: and continue incrementally for the remaining fsx: devices.

The subsequent sections describe the two most common methods for setting up automatic launch.

Using a Startup File

If a file STARTUP.NSH is found on any disk that is contained in UEFI's path, it will be executed by the UEFI shell, after a brief countdown. The STARTUP.NSH file is a simple, optional text file containing UEFI shell commands. You can create this file using the shell edit command or another method of placing the file in an fsx:\EFI folder.

The file should contain one shell command per line. In its simplest form, a single line containing VMS_BOOTMGR will launch the VSI OpenVMS Boot Manager, but because no specific fsx: device was specified, UEFI will search its path for the first instance of VMS_BOOTMGR.EFI available to it.

To launch the Boot Manager from a specific device, select the device and folder first:

fs2:

CD EFI\VMS

VMS_BOOTMGR

By specifying the fsx: device first, you have identified the root device that UEFI will use. This is important because it helps to locate other files (such as environment variable files).

Any combination of shell commands can be placed in the STARTUP.NSH script. It is often used to configure low-level network and adapter details.

Typically, STARTUP.NSH is found in the fsx:\EFI folder. If multiple disks contain STARTUP.NSH files, UEFI will execute the first one it finds. You can place this file on fs0: (making it the first one found), or you can adjust the boot options and boot order in your platform firmware to point to the desired disk. Some systems allow STARTUP.NSH to be located on a network.

Setting Up Boot Options and Boot Order in the Platform Firmware

Set up the boot options and boot order in the platform firmware to point to the disk that you wish to boot automatically. Entering the platform boot manager screens typically involves pressing a function key during system power-on or exiting from the Shell> prompt. Your boot options are a list of bootable devices that can be edited. The boot order specifies the order in which the boot options are processed.

Typical platform firmware implementations may provide some pre-defined boot options, one of which might be the "Internal UEFI Shell". Often the shell option says it is unsupported, meaning that it is not actually a boot device. You can still use this option if you want the UEFI shell to be launched by default.

You can add new boot options and adjust the boot order as needed to place your desired default device at the top of the list. When adding a new boot option, if you specify only a boot device, UEFI will expect to find the default boot file at fsx:\EFI\BOOT\BOOTX64.EFI. It is better to specify the full folder and file (fsx:\EFI\VMS\VMS_BOOTMGR.EFI) to avoid ambiguity. Failing to be precise means that if the default boot file is not found, the next boot option will be tried, which is likely to be a different disk.
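The fall-through behavior described above can be modeled with a short Python sketch. The data shapes are assumptions made for illustration (a boot option is a device name plus an optional file path, and each device is represented by a set of file paths); real firmware stores boot options quite differently.

```python
from typing import Dict, List, Optional, Set, Tuple

DEFAULT_BOOT_FILE = r"\EFI\BOOT\BOOTX64.EFI"

# Hypothetical boot option: (device, path); path is None when only a
# device was specified.
BootOption = Tuple[str, Optional[str]]


def resolve_boot_option(options: List[BootOption],
                        files: Dict[str, Set[str]]
                        ) -> Optional[Tuple[str, str]]:
    """Return the first (device, file) in boot order that actually exists."""
    for device, path in options:
        # A device-only option implies the default boot file.
        target = path if path is not None else DEFAULT_BOOT_FILE
        present = {name.upper() for name in files.get(device, set())}
        if target.upper() in present:
            return device, target
        # Not found: fall through to the next boot option.
    return None
```

With a disk on fs0 that holds only \EFI\VMS\VMS_BOOTMGR.EFI and another disk on fs1 that holds the default boot file, a device-only option for fs0 silently falls through to fs1, while an option that names the full path on fs0 launches from fs0 as intended.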

Precise boot options can be entered using the Boot from a file function or careful construction of a new boot option entry. Unfortunately, the syntax and functions vary among platforms.

To enable auto-boot from within the Boot Manager, issue the following command:

BOOTMGR> AUTO BOOT

The next time the VSI OpenVMS Boot Manager is launched, it will perform a brief countdown, then automatically boot.

To set the length of the countdown, issue the following command:

BOOTMGR> AUTO n

where n is a number of seconds from 1 to 30.

Entering AUTO 0 is equivalent to entering AUTO HALT without changing the countdown. Note that if you find yourself in a situation where you cannot prevent auto-boot, issue the command:

BOOTMGR> EXIT

After that, proceed immediately to repeatedly press the key that invokes the setup screen of the platform firmware.

3.2.2. Display and Input

Depending on the capabilities of the current system, the Boot Manager will operate in one of the four distinct modes. The Boot Manager evaluates and selects the most capable mode in the following order:

GRAPHICAL_IO Mode

The system provides full graphical display features; it supports mouse, touchpad, or touchscreen input. In this mode, the Boot Manager supports push buttons, check buttons, and a graphical background image.

GRAPHICAL Mode

The system provides full graphical display features but does not support mouse, touchpad, or touchscreen input. In this mode, the Boot Manager supports push buttons, check buttons, and a graphical background image, but the keyboard arrow keys must be used to interact with these graphical controls.

RENDERED_TEXT Mode

The system supports simple graphical display features, such as full-screen colored text, but without graphical controls or a background image. Input is limited to keyboard only. On systems that normally support the graphical modes, rendered text mode can be intentionally selected by deleting or renaming the background image file (fsx:\EFI\VMS\VMS_IMG.BMP).

SERIAL_TEXT Mode

The system does not support graphical display features. Input and output will be routed to a physical or virtual serial communication port.
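The selection order above can be summarized as a small decision function. This Python sketch is an approximation: the four boolean inputs are hypothetical names for the capabilities the text describes, and the Boot Manager's actual probing logic is not documented here.

```python
def select_display_mode(full_graphics: bool,
                        pointer_input: bool,
                        simple_graphics: bool,
                        background_image: bool) -> str:
    """Pick the most capable mode, evaluated in the order listed above."""
    # Graphical modes need full graphics plus a usable background image;
    # removing VMS_IMG.BMP therefore forces rendered text mode.
    if full_graphics and background_image:
        return "GRAPHICAL_IO" if pointer_input else "GRAPHICAL"
    # Colored full-screen text without graphical controls.
    if full_graphics or simple_graphics:
        return "RENDERED_TEXT"
    # No graphical display at all: use the serial port.
    return "SERIAL_TEXT"
```

For example, a system with full graphics and a mouse but no background image file would land in RENDERED_TEXT mode under this model.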

When using the VSI OpenVMS Boot Manager, you can usually connect a remote terminal session to use in parallel with the graphical display.

The remote serial connection always uses the architectural COM1 port (port address 0x3F8). Currently, only COM1 is supported. Use any terminal emulator that supports serial port communication, such as PuTTY.

You can also set up a console connection via network (TCP port 2023, etc.) or named pipes (i.e., \\.\pipe\pipe-name, typically limited to a common host system unless a proxy server is used).

Unfortunately, the number and variety of utilities and remote access methods, along with specific differences between the various hypervisors, make console port setup exceedingly difficult to describe. Individual users will likely have to experiment and refer to relevant documentation to find what works for them.

Some platform firmware and hypervisors do not support remote connections to the UEFI shell. Others may support output-only (no keyboard input) until VSI OpenVMS is booted.

Unless you have enabled the Guest Console feature, the VSI OpenVMS Boot Manager will suspend keyboard input after a BOOT command has been issued. This is because the UEFI keyboard drivers terminate once the boot has begun, and keyboard input will not resume until the VSI OpenVMS runtime drivers take over (just in time to support a conversational boot dialog).

When booted, VSI OpenVMS always assumes the use of COM1 for OPA0: interaction, except in specific instances where hardware does not support legacy COM ports.

Note

For security reasons, the graphical subsystem's frame buffer memory is cleared prior to transferring control to the operating system.

3.2.3. Custom Background Image

On systems that provide a graphical display, the Boot Manager will attempt to load an optional background image file that may be customized by the user. A default background image has been provided by VMS Software.

The background image file must adhere to the following guidelines pertaining to location, name, format, color depth, and dimensions:

The file location and name must be fsx:\EFI\VMS\VMS_IMG.BMP.

The background bitmap image must be non-compressed, BMP format, with 24-bit color depth.

The image dimensions must be a minimum of 1024 × 768 pixels and, ideally, 1920 × 1080 pixels to support most Full HD resolution monitors. If the image is smaller than the current display, it will be tiled as needed to fill the display. If the image is larger than the current display, it will be cropped. Image scaling and rotation are not supported.

A region in the upper-left corner of the image, measuring 900 × 144 pixels, is reserved for overlaying the various buttons and the VMS Software logo. The user cannot alter the placement or color of the graphical controls, so any custom image should provide adequate contrast in this region to ensure that the controls remain visible. Selected controls are highlighted in red, so this too should be considered when selecting a custom image. A VMS Software copyright statement will be overlaid along the lower edge of the image. The text output area will be overlaid on top of the provided image.

If no suitable background image is found, the Boot Manager will fall back to the rendered text mode. In this mode, the full display dimensions will be used for output. If this full-size display mode is desirable, the user may rename or delete the bitmap image file. This effectively prevents the Boot Manager from entering graphical modes.
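Before copying a custom image to the UEFI partition, it can be useful to check it against the guidelines above. The following Python sketch inspects only the standard BMP file header and BITMAPINFOHEADER fields. It is a hypothetical pre-flight check, not a description of how the Boot Manager itself validates the file.

```python
import struct
from typing import List

MIN_WIDTH, MIN_HEIGHT = 1024, 768   # minimum dimensions from the guidelines


def check_background_bmp(data: bytes) -> List[str]:
    """Return a list of problems; an empty list means the header looks suitable."""
    if len(data) < 34 or data[:2] != b"BM":
        return ["not a BMP file"]
    # BITMAPINFOHEADER starts at offset 14:
    # header size, width, height, planes, bits per pixel, compression
    _size, width, height, _planes, bpp, compression = struct.unpack_from(
        "<IiiHHI", data, 14)
    problems = []
    if bpp != 24:
        problems.append(f"color depth is {bpp}-bit, expected 24-bit")
    if compression != 0:
        problems.append("image is compressed")
    # Height is negative for top-down bitmaps, so compare its magnitude.
    if width < MIN_WIDTH or abs(height) < MIN_HEIGHT:
        problems.append(f"{width} x {abs(height)} is below the minimum size")
    return problems


def make_bmp_header(width: int, height: int,
                    bpp: int = 24, compression: int = 0) -> bytes:
    """Build a 54-byte header (no pixel data) for trying out the check."""
    file_header = struct.pack("<2sIHHI", b"BM", 54, 0, 0, 54)
    info_header = struct.pack("<IiiHHIIiiII", 40, width, height, 1,
                              bpp, compression, 0, 0, 0, 0, 0)
    return file_header + info_header
```

In practice, the bytes would come from reading the candidate file, for example `check_background_bmp(open("VMS_IMG.BMP", "rb").read())`.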

3.2.4. Display Within a Window

Some remote server management utilities and hypervisors will run the Boot Manager within a child window that is smaller than the physical monitor. Without having specific interfaces to these various utilities, the Boot Manager is unaware of the size of its containing window and will attempt to size its display according to the physical monitor size. This results in the need to scroll the window to see the complete Boot Manager display.

To improve usability when confined to a child window, do the following:

- If you are using VirtualBox, the host provides a top menu item (when running) to scale the guest display. Unfortunately, not all hosts provide this feature.

- Alternatively, to display line numbers along the left side of the text output region, issue the following command:

BOOTMGR> LINES

Determine how many lines are visible without having to scroll.

- Issue the command again with the desired number of lines. For example:

BOOTMGR> LINES 34

This will cause the Boot Manager to recalculate its display height to fit the window. This can also be handy if you would like to shrink the Boot Manager to save screen space. Changes to the display size are preserved in response to a BOOT, EXIT, or SAVE command. If you wish to restore the full display size, issue the LINES command specifying an excessively large number of lines. The Boot Manager will then determine the maximum number of lines it can use with the current monitor.

Note that the Boot Manager does not currently support display width adjustment.

3.2.5. Page Mode and Scrolling

The Boot Manager supports a limited form of text scrolling. The Page Up/Page Down keys will redisplay prior pages of text output. The number of pages that can be recalled depends on the density of text on each page, but in typical use, six or more pages can usually be recalled, which is sufficient for most users. Page numbers at the bottom-left corner will indicate which page is currently displayed.

Commands that produce more than a single page of output will automatically pause as each full page has been displayed, and the user may enter Q to quit the current command output.

Although the Boot Manager is able to automatically control multiple-page output, the PAGE command can be issued to enter page mode. This will cause the display to pause at the end of each page and wait for the user to press a key to continue. The display will be cleared as each subsequent page is displayed. Page mode greatly improves the display performance when using remote server management utilities, particularly when the display is being sent to a web browser interface. You can disable page mode using the NOPAGE command.

3.2.6. Routing and Logging Boot Manager Output

Occasionally, it is useful to capture boot process output for presentation or problem reporting. End-to-end logging of the boot process can be difficult to accomplish due to the many transitions that occur during the boot process. For example, the graphical or text mode Boot Manager runs within UEFI boot context. It transitions into UEFI runtime context as it transfers control to VSI OpenVMS.

In UEFI runtime context and beyond, the Boot Manager has no access to UEFI file protocols, thus precluding UEFI file-based logging. When VSI OpenVMS is able to initialize its operator terminal driver, it can commence logging to a VSI OpenVMS file. Seamless logging of output across these complex transitions is best accomplished by routing Boot Manager and operator output to a terminal session where logging of session output is possible.

When booting VSI OpenVMS as a VM guest, the hypervisor will often provide a means of logging a session. You may also define a serial port for your virtual machine that can be directed to a TCP port and address. In this case, you can route the output to a terminal emulator to provide both scrolling and logging capability.

The Boot Manager COM x command, where x is the x86-64 architecturally defined COM port number (1 through 4), will cause Boot Manager output to be echoed to the specified serial port. COM 0 disables this function. You may route output to multiple ports by issuing additional COM x commands.

COM1 (0x3F8) will become the default port for OPA0: terminal communications once VSI OpenVMS has booted, even if Boot Manager output was disabled via a COM 0 command.

Your selected COM port routing is preserved when you issue a BOOT, EXIT, or SAVE command.
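The routing rules for the COM command can be modeled as a small set of enabled ports. In this Python sketch, the class and method names are hypothetical, and the I/O addresses for COM2 through COM4 are the conventional legacy PC values rather than anything stated in this guide, which gives only the COM1 address.

```python
from typing import Dict, List, Set

# COM1 = 0x3F8 is stated in the text; COM2..COM4 are the conventional
# legacy PC base addresses and are an assumption here.
COM_PORTS: Dict[int, int] = {1: 0x3F8, 2: 0x2F8, 3: 0x3E8, 4: 0x2E8}


class ComRouting:
    """Tracks which serial ports Boot Manager output is echoed to."""

    def __init__(self) -> None:
        self.enabled: Set[int] = set()

    def com(self, x: int) -> None:
        """Model of 'COM x': 0 disables echoing, 1 through 4 add a port."""
        if x == 0:
            self.enabled.clear()
        elif x in COM_PORTS:
            self.enabled.add(x)
        else:
            raise ValueError("COM port number must be 0 through 4")

    def targets(self) -> List[int]:
        """I/O base addresses that output is currently echoed to."""
        return [COM_PORTS[x] for x in sorted(self.enabled)]
```

Issuing `com(1)` and then `com(2)` leaves both ports enabled, matching the statement that additional COM x commands route output to multiple ports.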

3.2.7. Capturing and Projecting Graphical Display Output

A capture request issued at the BOOTMGR> prompt or at any PAGE prompt will cause the Boot Manager to capture the current screen to a UEFI file.

Note

Currently, only a single snapshot file is supported. Subsequent captures will overwrite the prior capture unless you copy or rename the previous file, which is saved as a bitmap file named SNAPSHOT.BMP in the root UEFI file system device (fs0:, etc.). Be careful to avoid filling up the UEFI partition with these large bitmap files.

Occasionally, it is useful to project the display to a larger screen for demonstration or training purposes. This can be problematic due to the number of display resolution changes upon transferring control between BIOS, UEFI, Boot Manager, and VSI OpenVMS. Experience has shown that most modern projectors will synchronize to the resolution used by UEFI. This is fine for projecting the initial power-on sequence. Once you launch the Boot Manager, the resolution will almost certainly increase, and some projectors are not able to recognize the change. To accommodate this, we recommend that you avoid enabling output to your projector until you have launched the Boot Manager. This allows the projector to synchronize to the active resolution used by the Boot Manager.

3.2.8. Input Devices

Note

Many hypervisors fail to provide mouse movement notifications while executing in UEFI and Boot Manager context. Keyboard input, initially using UEFI protocols, will be disabled during transition between UEFI and the operating system. Once the operating system is running, it will establish terminal devices as needed. As of the latest VSI OpenVMS x86-64 release, OpenVMS does not yet provide USB keyboard drivers. As such, your locally attached keyboard may not be usable once booted. Instead, user input is expected to occur through a terminal utility.

User input on x86-64 systems is typically provided by a locally attached keyboard. This may be a standard keyboard, a USB keyboard, a local or remote serial terminal, or a terminal emulator. Some systems require that the console keyboard be plugged into a specific port in order to recognize it as a boot keyboard. This is particularly true for USB keyboards on systems with firmware that does not yet support USB 3.x speeds. Refer to the documentation for your specific hardware.

As the system boots, the various input devices must transition to a runtime driver environment. During this transition, there may be a brief period of time (typically a second or two) when these devices become unavailable. For developers using the XDelta debugger, this problem is avoided by using a traditional serial port keyboard or special serial debug port device instead of the USB keyboard.

The Boot Manager and system firmware support a minimum subset of keyboard functionality as required to boot the system. Special function keys and languages other than English are not currently supported by the Boot Manager. Systems that provide a mouse, touchpad, or touchscreen input device should function as expected, but special features of these devices, such as gesture recognition or specialized buttons, are not supported in the boot environment. Some hypervisors, such as VirtualBox, do not report mouse movement at all in the UEFI environment.

The keyboard Up and Down arrows operate a command recall buffer. The Right and Left arrow keys move input focus to the next or previous button in a circular chain of buttons. Whenever the arrow keys highlight a button, the Escape or Enter keys will activate the highlighted button. If the user presses any key other than the arrow keys, input focus is returned to the Boot Manager command line. The Page Up and Page Down keys display previous output pages. Pay attention to the page numbers in the lower-left corner of the display. The number of pages that can be redisplayed depends on the density of text in each page, but typically up to six pages of output can be redisplayed.

3.2.9. Environment Variables

VSI OpenVMS has traditionally been dependent on UEFI environment variables to control certain aspects of the boot procedures. However, hypervisors are inconsistent in how they handle UEFI environment variables.

While most hypervisors support interfaces for managing environment variables, some fail to provide persistence of these values across power cycles. Worse yet, some hypervisors fail to provide the fundamental interfaces and also fail to report errors when these standard UEFI features are not available.

To address this problem, the Boot Manager maintains an internal structure containing the required variables and attempts to store or retrieve these variables from both UEFI environment variables (if present) and a UEFI binary file, fsx:\EFI\VMS\VMS_ENV.DAT.

Using a binary file to store environment variables works with the hypervisors that preserve such files across power cycles, although there remain some persistence issues. The VMS_ENV.DAT file should not be edited. Nothing in the file is critical, and the file is deleted if it fails various validity checks.

3.3. Boot Modes, Boot Messages, and Boot Flags

There are many optional controls that affect the VSI OpenVMS boot procedures. Control of boot modes and boot messages can be accomplished using boot flags. However, most commonly used features also have Boot Manager commands or buttons that are easier to use than flags. This section describes these modes, messages, and flags, and how they affect the boot procedures.

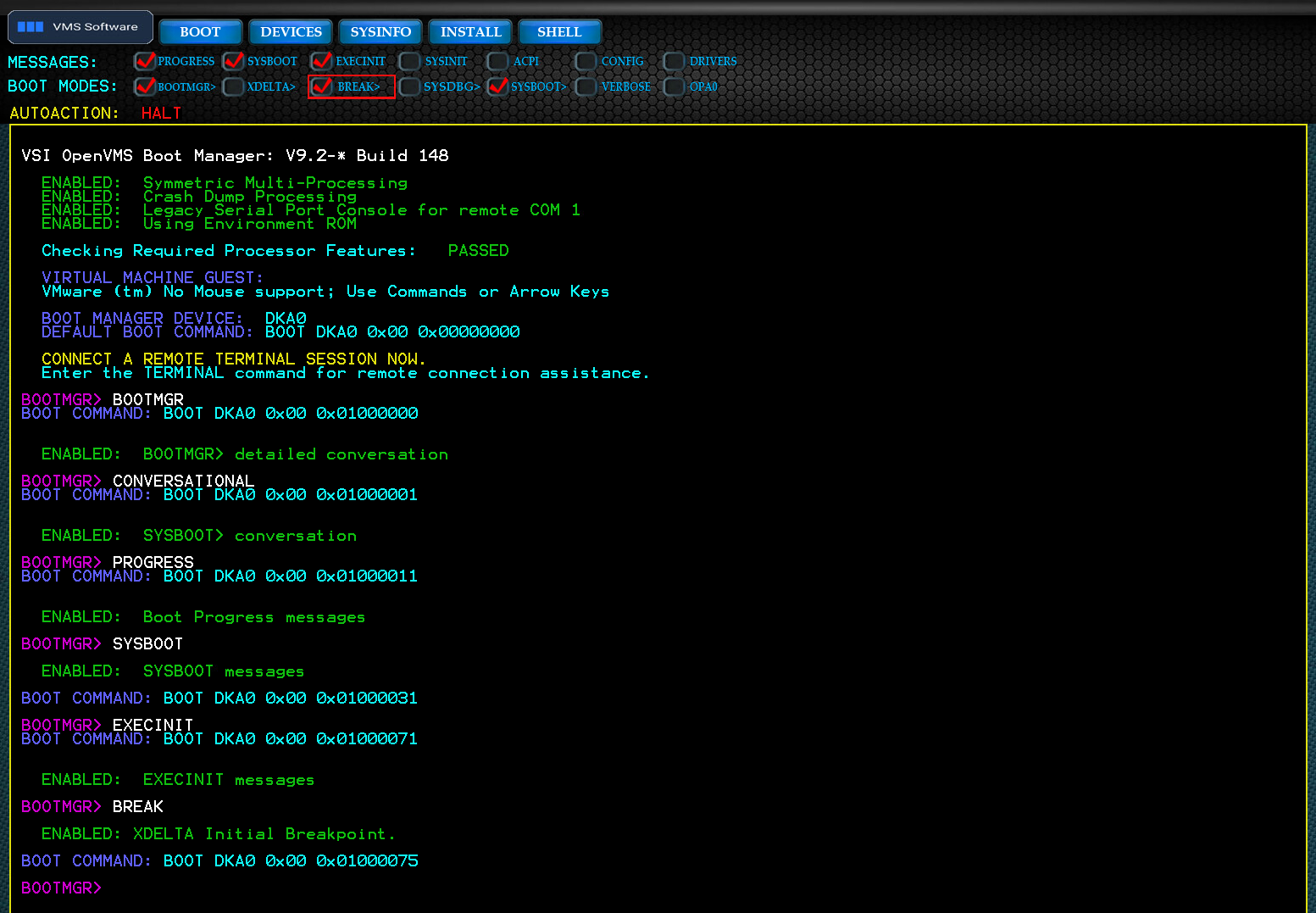

3.3.1. Boot Modes

- AUTO-ACTION

Most users will choose to set up their systems to boot with little to no interaction. Systems set up to auto-boot are assured the fastest recovery following a change in power state or fatal system event. The Boot Manager command [NO]AUTO enables or disables a brief countdown before executing the previous default BOOT command. Refer to Section 3.5, ''Command Dictionary'' for further details.

- BOOTMGR INTERACTION

The Boot Manager can operate in an interactive mode that is designed to help diagnose boot problems. The Boot Manager command

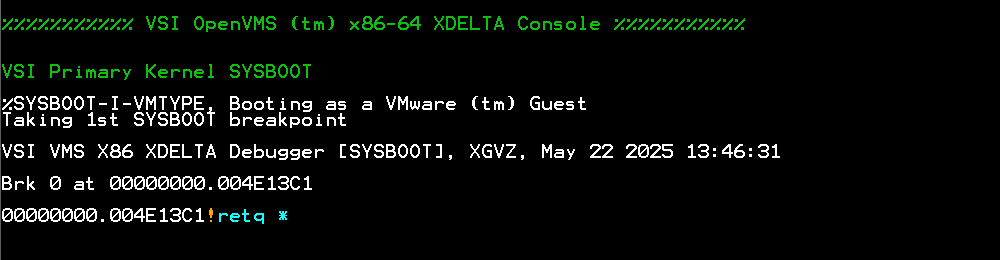

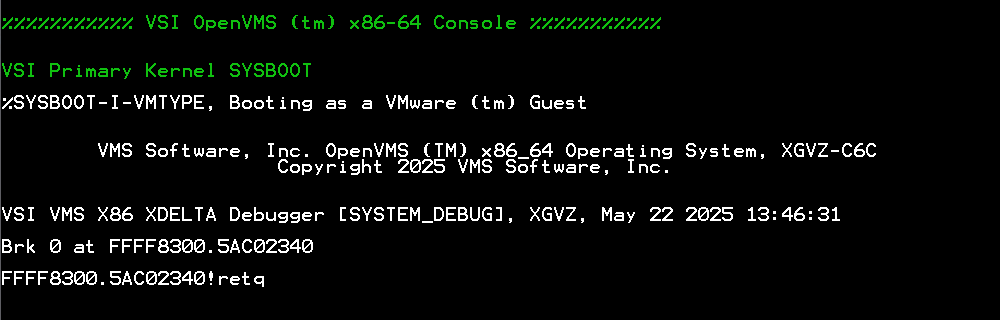

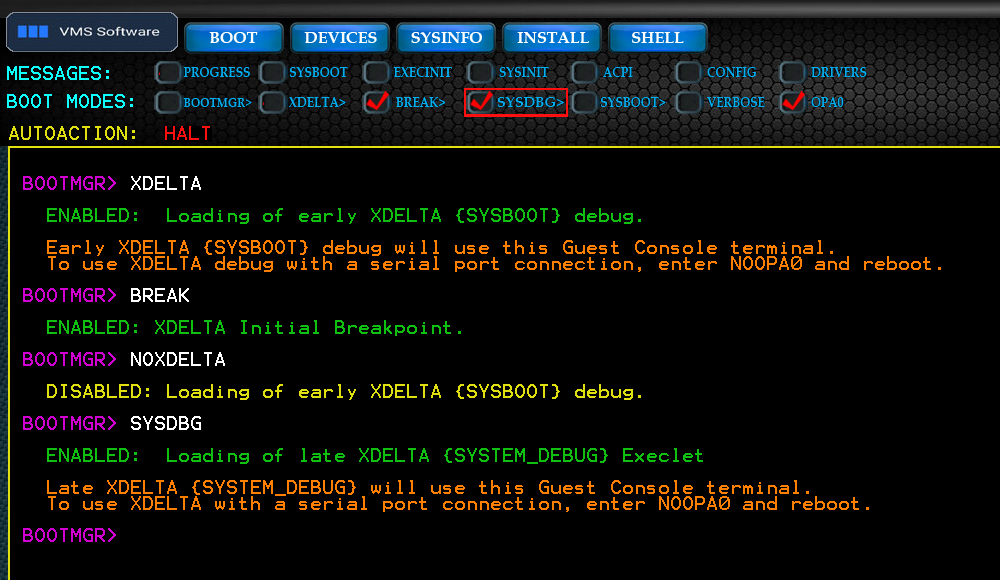

[NO]BOOTMGRenables or disables the interactive features that provide insight into the earliest UEFI phase of boot.- XDELTA

Developers can use the XDelta instruction level debugger in one of two ways. To debug the early boot process, XDelta is linked into SYSBOOT. To debug later phases of operation, XDelta will be loaded by SYSBOOT as a loadable (execlet) image. The Boot Manager command [NO]XDELTA enables the XDelta debugger that is linked into SYSBOOT. This is most useful to developers working in the early phase of boot where the system is still using physical addressing.

- BREAK

When used along with the XDELTA or SYSDBG modes, the instruction level debugger will respond to the first breakpoint it encounters, typically a call to INI$BRK() in a code module.

- SYSDBG

The Boot Manager command

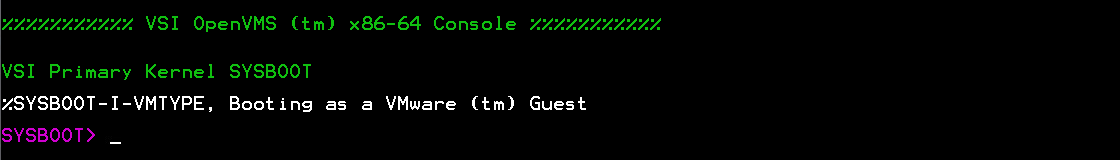

[NO]SYSDBGenables the loadable XDelta execlet debugger. This is most useful to developers working in later phases of boot where the system is using virtual addressing. It is also useful to device driver developers and for those working with other system components.- SYSBOOT INTERACTION

The Boot Manager command [NO]CONVERSATION enables or disables a pause in the boot process at the SYSBOOT> prompt. This is also known as a conversational boot. Refer to the relevant section in the VSI OpenVMS System Manager's Manual, Volume 1: Essentials for more details.

- VERBOSE

This mode works in conjunction with the various message-related boot flags. When in VERBOSE mode, VSI OpenVMS system components may produce extended diagnostic information of interest to developers. This extended information is subject to change and does not follow any message formatting rules. Used with certain other flags, the amount of diagnostic data is substantial and may delay booting by many minutes.

Warning

Do not specify both the BOOTMGR and VERBOSE flags. This would result in an extreme number of messages.

3.3.2. Boot Messages

In order to manage the number of messages that VSI OpenVMS can produce during boot, the operating system provides a defined subset of boot flags to control messages from specific phases of the boot procedures. The Boot Manager provides commands and buttons to control each of these phase-specific messaging features.

The boot phases having their own boot message flags include: BOOTMGR, SYSBOOT, EXECINIT, SYSINIT, ACPI, HWCONFIG, and DRIVER. Both graphical mode check buttons and commands are provided for each of these flags.

In addition to each phase-specific message flag, the [NO]VERBOSE command (or its associated push button) controls the extent of message detail.

Standard messages take the form %FACILITY-Severity-Mnemonic, Message String. For example:

%SYSBOOT-I-PARAM, Loaded Parameter File

These messages are intended to provide basic progress indication and highlight interesting operations.

If the VERBOSE mode flag is set, extended debug information will be provided. These messages are not formalized, and their display depends on specific developers' requirements for extensive troubleshooting.
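When boot output is captured to a log, the standard message form lends itself to simple parsing. The following Python sketch is one possible approach. The regular expression is an assumption based on the single example shown in this section, and unformatted VERBOSE output will simply not match.

```python
import re
from typing import Dict, Optional

# Pattern inferred from "%SYSBOOT-I-PARAM, Loaded Parameter File";
# the allowed character sets are assumptions.
MESSAGE_RE = re.compile(
    r"^%(?P<facility>[A-Z0-9$_]+)"
    r"-(?P<severity>[A-Z])"
    r"-(?P<mnemonic>[A-Z0-9_]+), "
    r"(?P<text>.*)$"
)


def parse_boot_message(line: str) -> Optional[Dict[str, str]]:
    """Split a standard boot message into its fields, or return None."""
    match = MESSAGE_RE.match(line.strip())
    return match.groupdict() if match else None
```

Lines that return None can be treated as free-form diagnostic output and passed through unchanged.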

3.3.3. Boot Flags

Boot flags are a 32-bit mask of numeric constants that enable or disable specific boot-related features. These features include boot modes, boot messages, and a variety of less common features.

The definitions of the boot flags were changed for VSI OpenVMS x86-64. Many of the earlier flags no longer applied to the x86-64 architecture, or else served functions that were unrelated to booting.

For VSI OpenVMS on x86-64, we have reorganized the flags into three categories:

Message controls

Boot mode controls

Debug and diagnostic controls
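To make the bit-mask idea concrete, the following Python sketch decodes and encodes a flags value. The bit assignments are taken from the FLAGS display shown later in this section and cover bits 0 through 12 only. The function names are hypothetical, and any flags defined in higher bits are not modeled.

```python
from typing import Dict, Iterable, List, Tuple

# bit number -> (type, command), as shown in the FLAGS display
BOOT_FLAGS: Dict[int, Tuple[str, str]] = {
    0: ("BOOTMODE", "CONVERSE"),
    1: ("BOOTMODE", "XDELTA"),
    2: ("BOOTMODE", "BREAK"),
    4: ("MESSAGES", "PROGRESS"),
    5: ("MESSAGES", "SYSBOOT"),
    6: ("MESSAGES", "EXECINIT"),
    7: ("MESSAGES", "SYSINIT"),
    8: ("MESSAGES", "DRIVER"),
    11: ("BOOTMODE", "SYSDBG"),
    12: ("MESSAGES", "ACPI"),
}


def decode_flags(mask: int) -> List[Tuple[int, str, str]]:
    """List (bit, type, command) for every known flag set in the mask."""
    if not 0 <= mask <= 0xFFFFFFFF:
        raise ValueError("boot flags are a 32-bit value")
    return [(bit,) + BOOT_FLAGS[bit]
            for bit in sorted(BOOT_FLAGS) if mask & (1 << bit)]


def encode_flags(commands: Iterable[str]) -> int:
    """Build a mask from flag command names such as 'PROGRESS'."""
    by_name = {name: bit for bit, (_kind, name) in BOOT_FLAGS.items()}
    mask = 0
    for command in commands:
        mask |= 1 << by_name[command.upper()]
    return mask
```

For instance, a mask of 0x10 has only bit 4 set, which corresponds to the PROGRESS message flag.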


The Boot Manager presents graphical check buttons for the most commonly used flags and commands to set and clear individual flags by name. Note, however, that most hypervisors do not support mouse movement under UEFI, so if you wish to use the graphical buttons, you will need to press the arrow keys to select a button, then press Enter to toggle the state of the button. As an alternative, you can use commands to control the flag states. Refer to FLAGS and Message Check Buttons for more information on the FLAGS command and message check buttons.

Each boot flag has a corresponding command to set or clear the flag. All such Boot Manager commands can be prefixed with NO to clear the flag. For example: PROGRESS sets the progress message flag and NOPROGRESS clears the flag. Most commands can be abbreviated to a minimum number of unique characters.

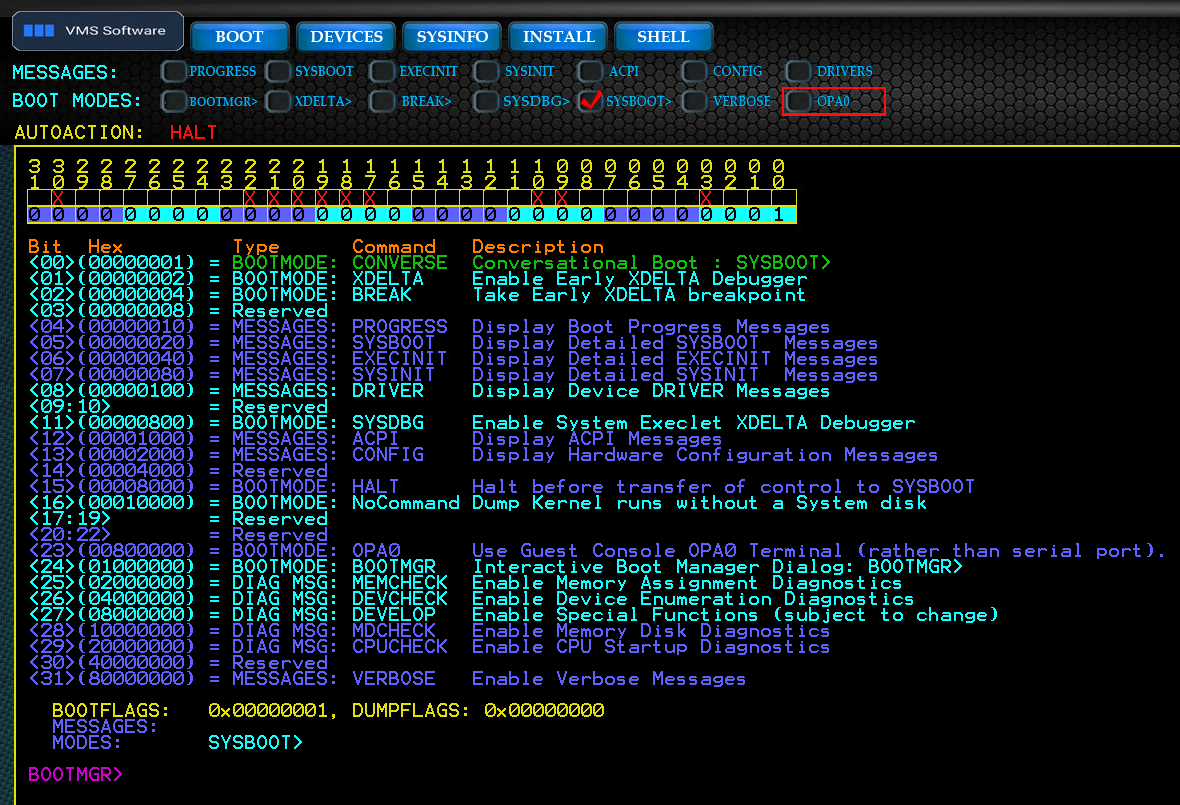

A FLAGS command is also available for managing the full set of available flags. Entering the FLAGS command without an additional value will display and interpret the current boot flag settings, as follows (in graphical modes, a chart of flag bits will also be displayed):

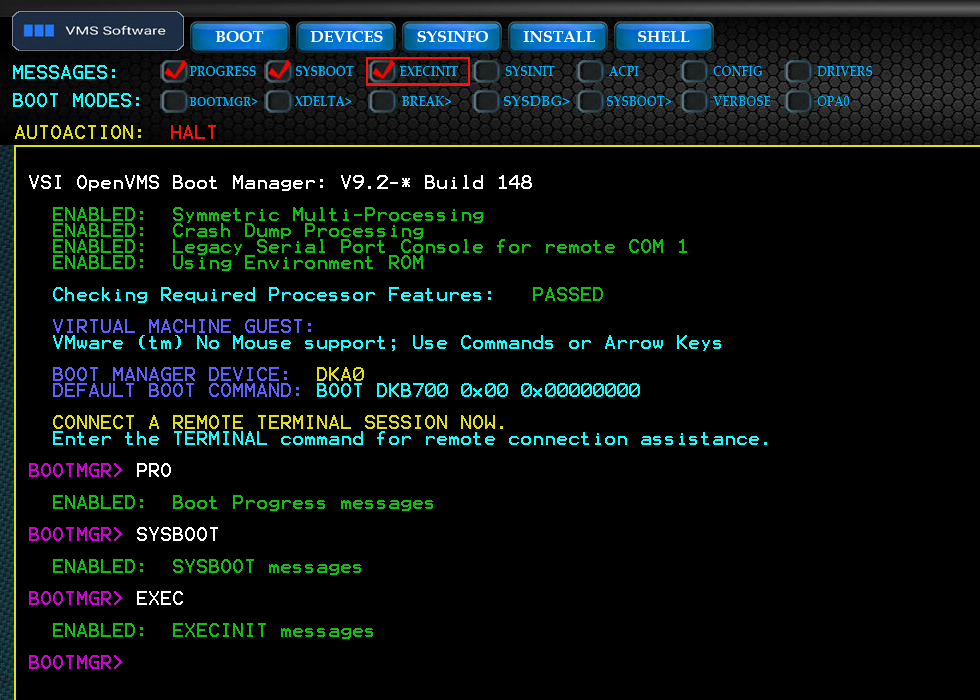

BOOTMGR> FLAGS

3 3 2 2 2 2 2 2 2 2 2 2 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0

1 0 9 8 7 6 5 4 3 2 1 0 9 8 7 6 5 4 3 2 1 0 9 8 7 6 5 4 3 2 1 0

X X X X X X X X X X

0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 1 0 0 0 0

Bit Hex Type Command Description

<00>(00000001) = BOOTMODE: CONVERSE Conversational Boot : SYSBOOT>

<01>(00000002) = BOOTMODE: XDELTA Enable Early XDELTA Debugger

<02>(00000004) = BOOTMODE: BREAK Take Early XDELTA breakpoint

<03>(00000008) = Reserved

<04>(00000010) = MESSAGES: PROGRESS Display Boot Progress Messages

<05>(00000020) = MESSAGES: SYSBOOT Display Detailed SYSBOOT Messages

<06>(00000040) = MESSAGES: EXECINIT Display Detailed EXECINIT Messages

<07>(00000080) = MESSAGES: SYSINIT Display Detailed SYSINIT Messages

<08>(00000100) = MESSAGES: DRIVER Display Device DRIVER Messages

<09:10> = Reserved

<11>(00000800) = BOOTMODE: SYSDBG Enable System Execlet XDELTA Debugger

<12>(00001000) = MESSAGES: ACPI Display ACPI Messages

<13>(00002000) = MESSAGES: CONFIG Display Hardware Configuration Messages

<14>(00004000) = Reserved

<15>(00008000) = BOOTMODE: HALT Halt before transfer of control to SYSBOOT

<16>(00010000) = BOOTMODE: NoCommand Dump Kernel runs without a System disk

<17:19> = Reserved

<20:22> = Reserved

<23>(00800000) = BOOTMODE: OPA0 Use Guest Console OPA0 Terminal

(rather than serial port).

<24>(01000000) = BOOTMODE: BOOTMGR Interactive Boot Manager Dialog: BOOTMGR>

<25>(02000000) = DIAG MSG: MEMCHECK Enable Memory Assignment Diagnostics

<26>(04000000) = DIAG MSG: DEVCHECK Enable Device Enumeration Diagnostics

<27>(08000000) = DIAG MSG: DEVELOP Enable Special Functions (subject to change)

<28>(10000000) = DIAG MSG: MDCHECK Enable Memory Disk Diagnostics

<29>(20000000) = DIAG MSG: CPUCHECK Enable CPU Startup Diagnostics

<30>(40000000) = Reserved

<31>(80000000) = MESSAGES: VERBOSE Enable Verbose Messages

BOOTFLAGS: 0x00000010, DUMPFLAGS: 0x00000000

MESSAGES: PROGRESS

MODES:

To set or clear boot flags, provide the desired hexadecimal value following the FLAGS command. For example, FLAGS 1070 sets boot flag bits <4>, <5>, <6>, and <12>, enabling general boot PROGRESS messages and additional messages from the SYSBOOT, EXECINIT, and ACPI facilities.

When entering a new flag value (longword) using the FLAGS command, be aware that the value you enter replaces any flags that were set previously. For this reason, you may want to use the FLAGS command first to see the current value, then adjust the value to enable or disable the flag bit(s) you are interested in.

Setting individual flags using commands or buttons logically ORs their bits into the flags field, leaving the other bits unchanged.

The Boot Manager saves your boot flags value in response to a BOOT, EXIT, or SAVE command. Flag values that were set during a BOOT command will also be preserved as the default BOOT command string to be used on subsequent boots.
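The relationship between a hexadecimal FLAGS value and the named flag bits can be sketched in Python. This is an illustrative helper, not Boot Manager code: the bit assignments are copied from the FLAGS display in this section, while the function names are invented for the example. Reserved bits and bit <16>, which has no command name, are omitted.

```python
# Named flag bits, copied from the FLAGS display (reserved bits omitted).
BOOT_FLAGS = {
    0: "CONVERSE", 1: "XDELTA", 2: "BREAK",
    4: "PROGRESS", 5: "SYSBOOT", 6: "EXECINIT", 7: "SYSINIT", 8: "DRIVER",
    11: "SYSDBG", 12: "ACPI", 13: "CONFIG", 15: "HALT",
    23: "OPA0", 24: "BOOTMGR", 25: "MEMCHECK", 26: "DEVCHECK",
    27: "DEVELOP", 28: "MDCHECK", 29: "CPUCHECK", 31: "VERBOSE",
}
BIT_BY_NAME = {name: bit for bit, name in BOOT_FLAGS.items()}


def decode_flags(value):
    """Return the names of the flags set in a 32-bit boot flags value."""
    return [name for bit, name in sorted(BOOT_FLAGS.items()) if value & (1 << bit)]


def set_flag(value, name):
    """OR one named flag into the value, as a command such as PROGRESS does."""
    return value | (1 << BIT_BY_NAME[name])


def clear_flag(value, name):
    """Clear one named flag, as a command such as NOPROGRESS does."""
    return value & ~(1 << BIT_BY_NAME[name]) & 0xFFFFFFFF


print(decode_flags(0x1070))               # ['PROGRESS', 'SYSBOOT', 'EXECINIT', 'ACPI']
print(hex(set_flag(0x10, "SYSBOOT")))     # 0x30
print(hex(clear_flag(0x1070, "ACPI")))    # 0x70
```

The sketch mirrors the two behaviors described above: a full FLAGS value replaces the whole mask, whereas the per-flag commands change a single bit and leave the rest untouched.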

3.4. Command Usage

The Boot Manager provides a set of commands that serve the needs of developers and system managers. The subset of these commands that support developers is subject to change, and some of the commands will be disabled in production releases. This version of the Boot Manager User Guide describes all of the commands that are active at the time of writing. Each command description will indicate its intended audience.

3.4.1. Command Syntax

Boot Manager commands use a very simple syntax.

A command verb is followed by an optional parameter separated by a space.

Command verbs can be abbreviated to the smallest number of characters required to ensure uniqueness. Certain commands that have a significant effect must be entered in full as a means of verifying user intent.

Commands that set a flag or enable a feature are typically cleared by prefixing the original command verb with NO. For example, the PROGRESS command enables a flag to produce boot progress messages. The NOPROGRESS command clears the flag. In other words, the Boot Manager does not use SET, CLEAR, ENABLE, or DISABLE command precursors.
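The abbreviation and NO-prefix rules above can be sketched as a small Python resolver. This is only an illustration of the described behavior: the Boot Manager's actual matching logic is not documented here, the verb lists are a subset taken from this guide, and the function name is hypothetical. The commands that must be entered in full are not modeled, because this section does not list them.

```python
# Illustrative subset of verbs named in this guide; not the full command set.
COMMANDS = ["AUTOACTION", "BOOT", "DEVICE", "EXIT", "FLAGS", "OPTIONS", "ROOT", "SAVE"]
# Flag-setting verbs, which also accept a NO prefix to clear the flag.
FLAG_COMMANDS = ["PROGRESS", "SYSBOOT", "EXECINIT", "SYSINIT", "VERBOSE"]


def resolve(verb):
    """Return (full_verb, cleared) for a possibly abbreviated command verb."""
    verb = verb.upper()
    # Plain verbs: any command whose name starts with what was typed.
    candidates = [(c, False) for c in COMMANDS + FLAG_COMMANDS if c.startswith(verb)]
    # NO-prefixed verbs: only flag commands can be negated.
    if verb.startswith("NO") and len(verb) > 2:
        candidates += [(c, True) for c in FLAG_COMMANDS if c.startswith(verb[2:])]
    # An exact match always wins over longer verbs sharing the same prefix.
    for name, cleared in candidates:
        if verb == ("NO" + name if cleared else name):
            return name, cleared
    if len(candidates) != 1:
        names = [("NO" if cleared else "") + name for name, cleared in candidates]
        raise ValueError(f"{verb!r} is ambiguous or unknown: {names}")
    return candidates[0]


print(resolve("FL"))       # ('FLAGS', False)
print(resolve("NOPROG"))   # ('PROGRESS', True)
```

With this subset, an input such as S matches SAVE, SYSBOOT, and SYSINIT and is rejected as ambiguous, which is why more characters are needed for some verbs than for others.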

3.4.2. Command Recall and Logging

The Boot Manager provides a limited command recall buffer that can be accessed with the Up or Down arrow keys. The Boot Manager supports a limited form of scrolled output using the Page Up and Page Down keyboard keys.

It is unusual for the early boot process to produce large amounts of text prior to transfer to VSI OpenVMS, at which point the operator terminal and logging are available. In cases where Boot Manager commands produce more lines of output than will fit on a single screen, the Boot Manager may pause the output as text exceeds each visible display page.

In cases where a permanent log of Boot Manager output is desired, output can be directed to a COM port and terminal emulator. These features are described in their respective command descriptions.

3.4.3. Command Push Buttons

When operating in graphical mode, common commands are represented by rows of push buttons (see the figure below). If a pointing device (mouse or touchpad) is active, the push buttons can be activated as you would expect from a GUI-based application.

If no pointing device is active, the push buttons can be selected using the Right or Left arrow keys. The selected button will be outlined in red. To activate the selected button, press the Enter or Escape key. If you press any other keys, input focus will return to the command line.


3.5. Command Dictionary

This section describes the commands that are available from the BOOTMGR> prompt.

The command descriptions show the full-length command verbs. Most commands can be abbreviated to the smallest number of characters that uniquely identifies them. In certain instances, commands that result in particularly significant operations may not support an abbreviated form.

AUTOACTION

AUTOACTION — Determines what automatic action occurs when the Boot Manager is launched.

Syntax

AUTOACTION [arg]

Arguments

- HALT

Remain at the BOOTMGR> command prompt.

- BOOT

Countdown before issuing the previous BOOT command. Refer to Section A.1, "DEVICE, AUTOACTION, and CONVERSATION Commands" for an example of output for the AUTOACTION command.

- seconds

Sets a countdown delay between 1 and 30 seconds.

If the boot countdown is interrupted by pressing the Escape key, the countdown stops and the BOOTMGR> prompt is displayed. Pressing any other key during the countdown skips the remaining countdown and proceeds to boot.

Entering AUTO 0 is equivalent to entering AUTO HALT without changing the countdown. The countdown does not apply to HALT.
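The argument rules for AUTOACTION can be summarized in a short Python sketch. It only classifies an argument according to the description above and does not model the countdown itself; the function name and the returned tuples are hypothetical and chosen for this example.

```python
def interpret_autoaction(arg):
    """Classify an AUTOACTION argument as ('HALT',), ('BOOT',), or ('DELAY', n)."""
    text = arg.strip().upper()
    if text in ("HALT", "BOOT"):
        return (text,)
    if text.isdecimal():
        seconds = int(text)
        if seconds == 0:
            # AUTO 0 behaves like AUTO HALT and leaves the countdown unchanged.
            return ("HALT",)
        if 1 <= seconds <= 30:
            return ("DELAY", seconds)
    raise ValueError(f"unsupported AUTOACTION argument: {arg!r}")


print(interpret_autoaction("boot"))   # ('BOOT',)
print(interpret_autoaction("10"))     # ('DELAY', 10)
print(interpret_autoaction("0"))      # ('HALT',)
```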

BOOT

BOOT — Initiates a system boot. Several optional arguments are available.

Syntax

BOOT [device] [root] [flags]

Before You Begin

If you have previously booted the system, a BOOT command with no additional arguments will attempt to boot the system using the prior BOOT command string, including device, root, and flags.

If you have not previously booted the system, a BOOT command with no additional arguments will attempt to boot the system from the device where the Boot Manager resides. In most cases, this will be your default system disk.

Refer to Section A.4, "Progress Messages on Guest Display After BOOT" for an example of the output of an unqualified BOOT command.

Optional Arguments

- BOOT [device]

The first optional argument is a VSI OpenVMS device name that you wish to boot from. Unlike prior versions of VSI OpenVMS, the Boot Manager can be run from one device and boot a different device. You no longer need to figure out which UEFI file system device (fsx:) equates to your boot device. The Boot Manager will do this for you. For example:

BOOTMGR> BOOT DKA100:

Note

Mapping of VSI OpenVMS to UEFI file system devices requires support of the UEFI shell protocol. In some rare cases, this protocol is not available in platform firmware. In this case, you can use the UEFI file system device name (fs0:, etc.) in the BOOT command.

You must choose one of the bootable devices listed by the DEVICE command. The listed devices are those that have passed a series of tests to determine that they contain bootable VSI OpenVMS images. Specifying the trailing colon is optional.

Note that the scope of devices that the Boot Manager sees during initialization is limited to those for which UEFI drivers are available. Complex storage systems do not always expose their devices to UEFI for booting. Most often, the listed devices will include the system boot device. Once VSI OpenVMS has booted, it gains additional capability for detecting devices and, in some rare cases, the VSI OpenVMS device names may differ from the name that was used during boot. If you are unable to find your device, confirm that it has been enabled for booting in your VM configuration.

- BOOT [device] [root]

The second optional argument is a system root to boot from. You can set the default system root using the ROOT command, or you can specify it as the second argument in a fully-qualified BOOT command. For example:

BOOTMGR> BOOT DKA0 0 10

This command boots DKA0 using root 0 with boot flag (hex) 10; it sets bit <4>, which is the PROGRESS message flag.

- BOOT [device] [root] [flags]

The third optional argument is a hexadecimal value representing boot flags. Refer to the description of FLAGS and Message Check Buttons for more details. Boot flags may also be passed into the Boot Manager when it is launched from the UEFI shell prompt. If you specify flags at the UEFI shell prompt, prefix the flags with -fl as you would on earlier versions of VSI OpenVMS. For example:

Shell> VMS_BOOTMGR.EFI DKA0 -fl 0,807

The above command boots device DKA0 using system root 0 and boot flags 0x807.

You do not need the -fl prefix or comma when entering a BOOT command at the BOOTMGR> prompt. The equivalent command line would be as follows:

BOOTMGR> BOOT DKA0 0 807

Note

The VSI OpenVMS x86-64 boot flag definitions are different from prior versions of VSI OpenVMS. Refer to the FLAGS and Message Check Buttons command description for details.
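The correspondence between the UEFI shell form and the BOOTMGR> form can be sketched in Python. The helper below is hypothetical and exists only to show how the root and flags fields map across; it takes the arguments that follow VMS_BOOTMGR.EFI on the shell command line and assumes exactly the device, -fl, root,flags layout shown in the example above.

```python
def shell_args_to_boot_command(args):
    """Convert 'DKA0 -fl 0,807' style arguments into 'BOOT DKA0 0 807'."""
    tokens = args.split()
    if len(tokens) != 3 or tokens[1].lower() != "-fl":
        raise ValueError("expected: <device> -fl <root>,<flags>")
    device = tokens[0]
    root, comma, flags = tokens[2].partition(",")
    if not comma or not root or not flags:
        raise ValueError("expected <root>,<flags> after -fl")
    int(flags, 16)  # boot flags are hexadecimal; raises ValueError otherwise
    return f"BOOT {device} {root} {flags}"


print(shell_args_to_boot_command("DKA0 -fl 0,807"))   # BOOT DKA0 0 807
```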

BOOT OPTIONS LIST

As an alternative to entering BOOT command arguments, you can also boot from a pre-defined list of boot options. These are not the same as UEFI boot option variables. UEFI boot option variables get you to the Boot Manager, not to a VSI OpenVMS boot device.

To use a predefined list of BOOT commands, you must first create the list in the same folder that the VMS Boot Manager is launched from, typically fsx:\EFI\VMS. The list is a simple text file that can be created with the UEFI editor or copied into the EFI partition using FTP or another file transfer mechanism.

Refer to the OPTIONS command for details on creating the options list.

If the Boot Manager finds the options list, the OPTIONS command with no arguments will enumerate and display the list.

To select a listed option, enter BOOT # followed by the option number in the boot options list.

This feature is intended to simplify the task of booting many system configurations for test purposes, but it may be useful for other reasons too.

- AUTOACTION BOOT | HALT | seconds

If you have previously set AUTOACTION to BOOT, the Boot Manager will provide a countdown prior to launching the default